Parameter-free LLaVA for video captioning works like magic! 🤩 Let's take a look!

Most video captioning models work by downsampling video frames, which reduces computational complexity and memory requirements without losing much information in the process. PLLaVA, on the other hand, uses pooling! 🤩
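For contrast, here's a minimal sketch of the kind of frame downsampling mentioned above: uniformly picking a fixed number of frames spread across the clip. It's a generic illustration, not any particular model's sampler; the function name `uniform_frame_indices`, the 300-frame clip and the center-of-segment strategy are all assumptions.

```python
def uniform_frame_indices(num_frames: int, num_samples: int) -> list[int]:
    """Return num_samples frame indices spread evenly over a clip of num_frames frames."""
    if not 0 < num_samples <= num_frames:
        raise ValueError("need 0 < num_samples <= num_frames")
    # Split the clip into num_samples equal segments and keep the frame at each segment's center.
    seg = num_frames / num_samples
    return [int(seg * i + seg / 2) for i in range(num_samples)]


# Hypothetical 300-frame clip; each "frame" is just its index here.
video = list(range(300))
idx = uniform_frame_indices(len(video), 16)
sampled = [video[i] for i in idx]
print(len(video), "->", len(sampled))  # 300 -> 16
print(idx[:4])  # [9, 28, 46, 65]
```

Every frame that isn't picked is simply thrown away, which is exactly the information loss that pooling tries to avoid.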

How? 🧐 It takes video frames, passes them through a ViT and then a projection layer, and the output then goes through average pooling, where the input shape is (# frames, width, height, text decoder input dim) 👇

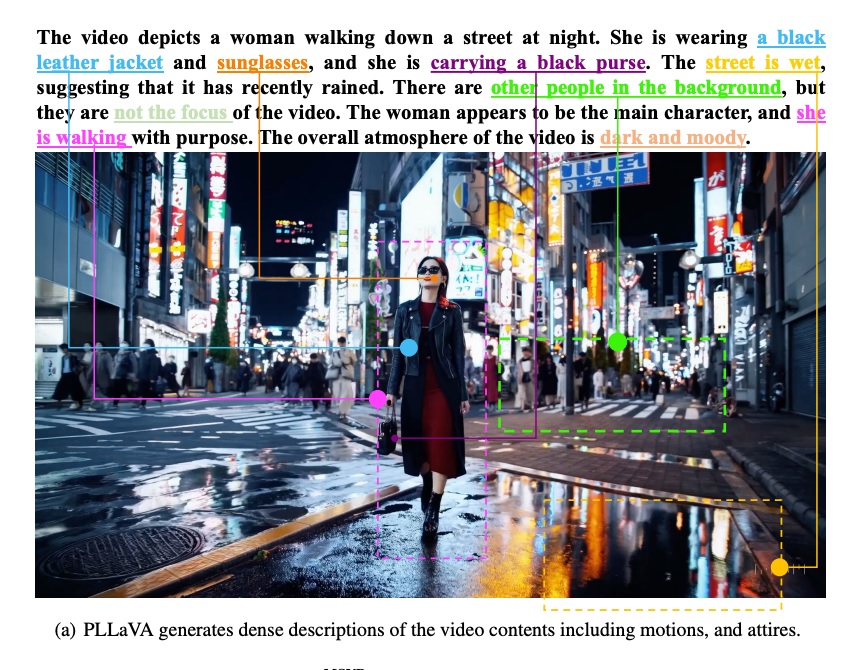
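Here's a minimal NumPy sketch of that pooling step, assuming non-overlapping average-pooling windows over the (frames, width, height) axes while the text decoder dimension is left intact. The function name `avg_pool_video_tokens`, the 16 frames, the 24×24 patch grid, the tiny feature dim of 8 and the (16, 12, 12) output shape are hypothetical values for illustration. This isn't PLLaVA's actual code; in PyTorch a similar operation is available as `torch.nn.AdaptiveAvgPool3d`.

```python
import numpy as np


def avg_pool_video_tokens(features: np.ndarray, out_shape: tuple[int, int, int]) -> np.ndarray:
    """Average-pool features of shape (T, W, H, D) down to (t, w, h, D).

    Assumes T, W, H are divisible by t, w, h (non-overlapping windows).
    """
    T, W, H, D = features.shape
    t, w, h = out_shape
    if T % t or W % w or H % h:
        raise ValueError("input dims must be divisible by output dims")
    # Split each pooled axis into (output size, window size), then average over the windows.
    blocks = features.reshape(t, T // t, w, W // w, h, H // h, D)
    return blocks.mean(axis=(1, 3, 5))


rng = np.random.default_rng(0)
# Hypothetical ViT + projection output: 16 frames, 24x24 patches, feature dim 8.
feats = rng.standard_normal((16, 24, 24, 8))
pooled = avg_pool_video_tokens(feats, (16, 12, 12))
print(feats.shape[:3], "->", pooled.shape[:3])  # (16, 24, 24) -> (16, 12, 12)
print(int(np.prod(feats.shape[:3])), "->", int(np.prod(pooled.shape[:3])))  # 9216 -> 2304
```

Pooling 2× along width and height cuts the number of visual tokens fed to the text decoder by 4×, but each output token still averages over a patch of the original features instead of discarding them outright.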

Surprisingly, the pooling operation reduces the loss of spatial and temporal information. See below for some examples of how it captures the details 🤗

According to the authors' findings, it performs considerably better than many existing models (including proprietary VLMs) and scales very well with the size of the text decoder.

Model repositories 🤗 7B, 13B, 34B

Spaces 🤗 7B, 13B

Resources:

PLLaVA: Parameter-free LLaVA Extension from Images to Videos for Video Dense Captioning by Lin Xu, Yilin Zhao, Daquan Zhou, Zhijie Lin, See Kiong Ng, Jiashi Feng (2024) GitHub

Original tweet (May 3, 2024)