We offer TensorRT models in several precisions (INT8, FP16, FP32, and mixed), converted from Deci-AI's pre-trained YOLO-NAS-Pose PyTorch weights (which are licensed for non-commercial use only). These TensorRT models are compatible with JetPack 5.1.1 and were benchmarked and tested on a Jetson Orin Nano Developer Kit.
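The engine file names listed in the tables of this document follow the pattern `<onnx file>[.fp16|.int8|.best].engine`, which lines up with the precision flags of NVIDIA's `trtexec` tool (`--fp16`, `--int8`, `--best`). The following is a minimal sketch, assuming the engines were built with `trtexec`. The helper `trtexec_command` and the `PRECISION_FLAGS` mapping are hypothetical names for illustration and are not necessarily the exact commands used for these models.

```python
import shlex

# Hypothetical mapping from the engine-name suffix to trtexec precision flags.
# --fp16, --int8 and --best are real trtexec options; that the published
# engines were built with exactly these flags is an assumption inferred
# from the file names.
PRECISION_FLAGS = {
    "": [],              # plain ".engine": FP32 only
    "fp16": ["--fp16"],  # FP32+FP16
    "int8": ["--int8"],  # FP32+INT8
    "best": ["--best"],  # FP32+FP16+INT8
}


def trtexec_command(onnx_path: str, precision: str = "") -> str:
    """Return a trtexec command line that builds one engine from an ONNX file."""
    flags = PRECISION_FLAGS[precision]
    suffix = f".{precision}" if precision else ""
    engine_path = f"{onnx_path}{suffix}.engine"
    args = ["trtexec", f"--onnx={onnx_path}", *flags, f"--saveEngine={engine_path}"]
    return shlex.join(args)


if __name__ == "__main__":
    # Print one command per precision for the Large FP32 ONNX export.
    for precision in ("", "fp16", "int8", "best"):
        print(trtexec_command("yolo_nas_pose_l_fp32.onnx", precision))
```

The commands are only printed, not executed, so the sketch can be run on any machine and the output copied to the Jetson device.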

Note that all quantization introduced during conversion is purely static, so the quantized models may be noticeably less accurate than the original.

TODO: calibrate the INT8 models with the CPPE-5 dataset.

For more information on calibration for post-training quantization, see this slide.
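To illustrate why calibration matters for INT8 accuracy, here is a minimal sketch of symmetric per-tensor quantization with a max-calibrated scale. It is a toy model of the idea, not TensorRT's calibrator: the function names, the sample values, and the fixed "uncalibrated" range of [-1, 1] are all made up for illustration.

```python
def max_calibration_scale(samples):
    """Symmetric per-tensor scale so that the largest |value| maps to 127."""
    amax = max(abs(v) for v in samples)
    return amax / 127.0 if amax > 0 else 1.0


def quantize(values, scale):
    """Round to the nearest integer step and saturate to [-127, 127]."""
    return [max(-127, min(127, round(v / scale))) for v in values]


def dequantize(quantized, scale):
    """Map int8 codes back to floating point."""
    return [q * scale for q in quantized]


def mean_abs_error(values, scale):
    """Mean absolute error of a quantize/dequantize round trip."""
    restored = dequantize(quantize(values, scale), scale)
    return sum(abs(a - b) for a, b in zip(values, restored)) / len(values)


if __name__ == "__main__":
    calibration = [-2.5, -0.75, 0.1, 1.2, 3.0]  # made-up calibration activations
    activations = [-2.0, 0.5, 1.5, 2.9]         # made-up inference-time values

    calibrated = max_calibration_scale(calibration)
    uncalibrated = 1.0 / 127.0  # hypothetical fixed range [-1, 1], chosen without data

    print("calibrated error:  ", mean_abs_error(activations, calibrated))
    print("uncalibrated error:", mean_abs_error(activations, uncalibrated))
```

With a scale chosen without looking at data, values outside the assumed range saturate, which is one way a quantized model can lose accuracy. A calibration dataset lets the scale follow the activations the model actually produces.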

Large

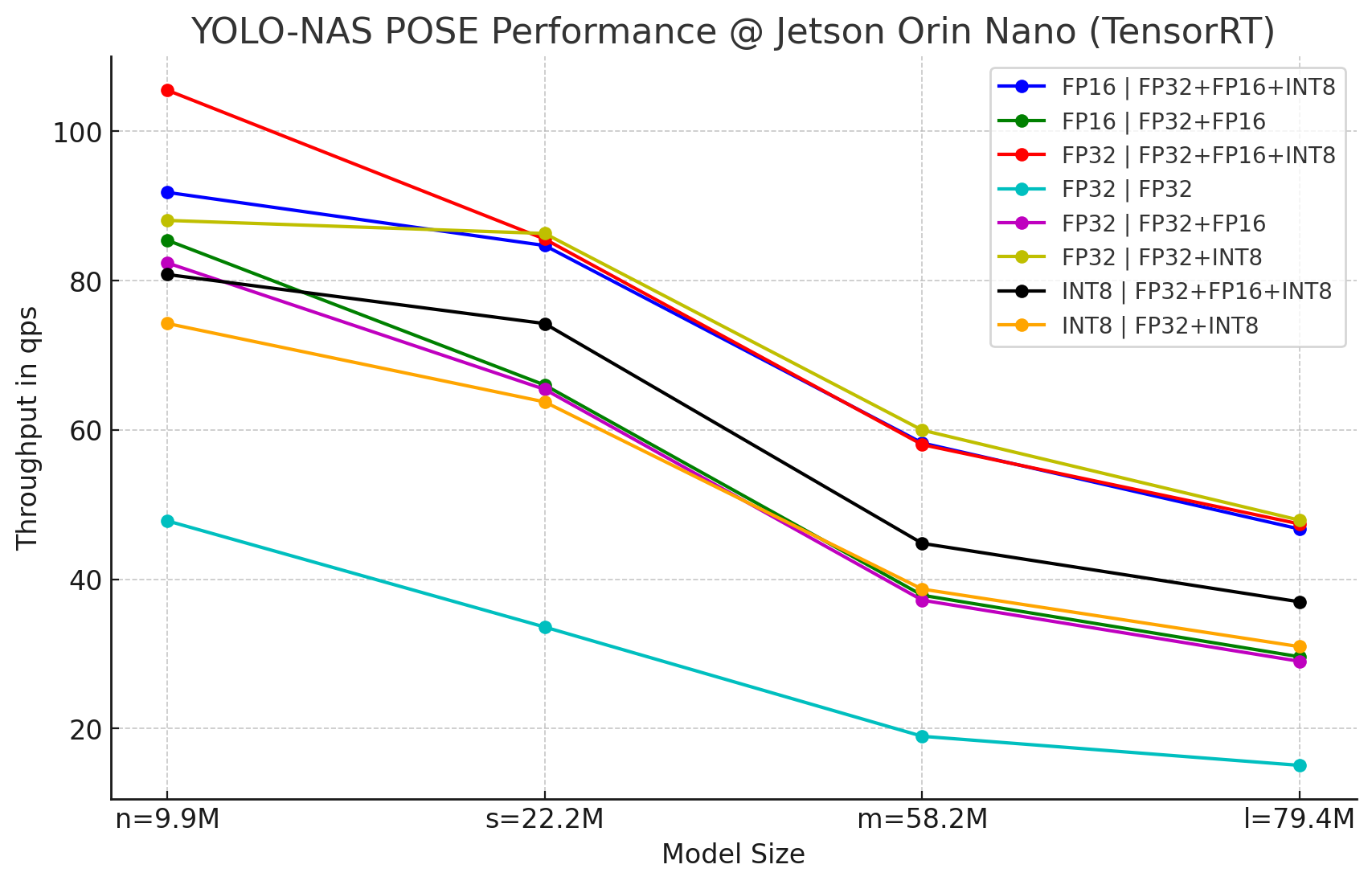

| Model Name | ONNX Precision | TensorRT Precision | Throughput (TensorRT) |
|---|---|---|---|
| yolo_nas_pose_l_fp16.onnx.best.engine | FP16 | FP32+FP16+INT8 | 46.7231 qps |
| yolo_nas_pose_l_fp16.onnx.fp16.engine | FP16 | FP32+FP16 | 29.6093 qps |
| yolo_nas_pose_l_fp32.onnx.best.engine | FP32 | FP32+FP16+INT8 | 47.4032 qps |
| yolo_nas_pose_l_fp32.onnx.engine | FP32 | FP32 | 15.0654 qps |
| yolo_nas_pose_l_fp32.onnx.fp16.engine | FP32 | FP32+FP16 | 29.0005 qps |
| yolo_nas_pose_l_fp32.onnx.int8.engine | FP32 | FP32+INT8 | 47.9071 qps |
| yolo_nas_pose_l_int8.onnx.best.engine | INT8 | FP32+FP16+INT8 | 36.9695 qps |
| yolo_nas_pose_l_int8.onnx.int8.engine | INT8 | FP32+INT8 | 30.9676 qps |

Medium

| Model Name | ONNX Precision | TensorRT Precision | Throughput (TensorRT) |
|---|---|---|---|
| yolo_nas_pose_m_fp16.onnx.best.engine | FP16 | FP32+FP16+INT8 | 58.254 qps |
| yolo_nas_pose_m_fp16.onnx.fp16.engine | FP16 | FP32+FP16 | 37.8547 qps |
| yolo_nas_pose_m_fp32.onnx.best.engine | FP32 | FP32+FP16+INT8 | 58.0306 qps |
| yolo_nas_pose_m_fp32.onnx.engine | FP32 | FP32 | 18.9603 qps |
| yolo_nas_pose_m_fp32.onnx.fp16.engine | FP32 | FP32+FP16 | 37.193 qps |
| yolo_nas_pose_m_fp32.onnx.int8.engine | FP32 | FP32+INT8 | 59.9746 qps |
| yolo_nas_pose_m_int8.onnx.best.engine | INT8 | FP32+FP16+INT8 | 44.8046 qps |
| yolo_nas_pose_m_int8.onnx.int8.engine | INT8 | FP32+INT8 | 38.6757 qps |

Small

| Model Name | ONNX Precision | TensorRT Precision | Throughput (TensorRT) |
|---|---|---|---|
| yolo_nas_pose_s_fp16.onnx.best.engine | FP16 | FP32+FP16+INT8 | 84.7072 qps |
| yolo_nas_pose_s_fp16.onnx.fp16.engine | FP16 | FP32+FP16 | 66.0151 qps |
| yolo_nas_pose_s_fp32.onnx.best.engine | FP32 | FP32+FP16+INT8 | 85.5718 qps |
| yolo_nas_pose_s_fp32.onnx.engine | FP32 | FP32 | 33.5963 qps |
| yolo_nas_pose_s_fp32.onnx.fp16.engine | FP32 | FP32+FP16 | 65.4357 qps |
| yolo_nas_pose_s_fp32.onnx.int8.engine | FP32 | FP32+INT8 | 86.3202 qps |
| yolo_nas_pose_s_int8.onnx.best.engine | INT8 | FP32+FP16+INT8 | 74.2494 qps |
| yolo_nas_pose_s_int8.onnx.int8.engine | INT8 | FP32+INT8 | 63.7546 qps |

Nano

| Model Name | ONNX Precision | TensorRT Precision | Throughput (TensorRT) |
|---|---|---|---|
| yolo_nas_pose_n_fp16.onnx.best.engine | FP16 | FP32+FP16+INT8 | 91.8287 qps |
| yolo_nas_pose_n_fp16.onnx.fp16.engine | FP16 | FP32+FP16 | 85.4187 qps |
| yolo_nas_pose_n_fp32.onnx.best.engine | FP32 | FP32+FP16+INT8 | 105.519 qps |
| yolo_nas_pose_n_fp32.onnx.engine | FP32 | FP32 | 47.8265 qps |
| yolo_nas_pose_n_fp32.onnx.fp16.engine | FP32 | FP32+FP16 | 82.3834 qps |
| yolo_nas_pose_n_fp32.onnx.int8.engine | FP32 | FP32+INT8 | 88.0719 qps |
| yolo_nas_pose_n_int8.onnx.best.engine | INT8 | FP32+FP16+INT8 | 80.8271 qps |
| yolo_nas_pose_n_int8.onnx.int8.engine | INT8 | FP32+INT8 | 74.2658 qps |
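
As a reading aid for the throughput numbers, the sketch below computes the speedup of each reduced-precision engine over the pure FP32 engine. It uses the Large engines built from the FP32 ONNX file, with values copied from the Large table. The `speedups` helper and the dictionary name are hypothetical and only serve this illustration.

```python
# Throughput in qps for the Large engines built from yolo_nas_pose_l_fp32.onnx,
# copied from the Large table in this document.
LARGE_FP32_ONNX_QPS = {
    "FP32": 15.0654,
    "FP32+FP16": 29.0005,
    "FP32+INT8": 47.9071,
    "FP32+FP16+INT8": 47.4032,
}


def speedups(qps_by_precision, baseline="FP32"):
    """Return throughput of each engine relative to the baseline engine."""
    base = qps_by_precision[baseline]
    return {name: qps / base for name, qps in qps_by_precision.items()}


if __name__ == "__main__":
    for name, factor in speedups(LARGE_FP32_ONNX_QPS).items():
        print(f"{name:>16}: {factor:.2f}x")
```

For the Large model this works out to roughly 1.9x for FP16 and about 3.2x for INT8 relative to FP32. The same function can be applied to the other tables by swapping in their values.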