license: other

Overview

This is a fine-tuned 13B-parameter LLaMA model, trained on completely synthetic data created by https://github.com/jondurbin/airoboros

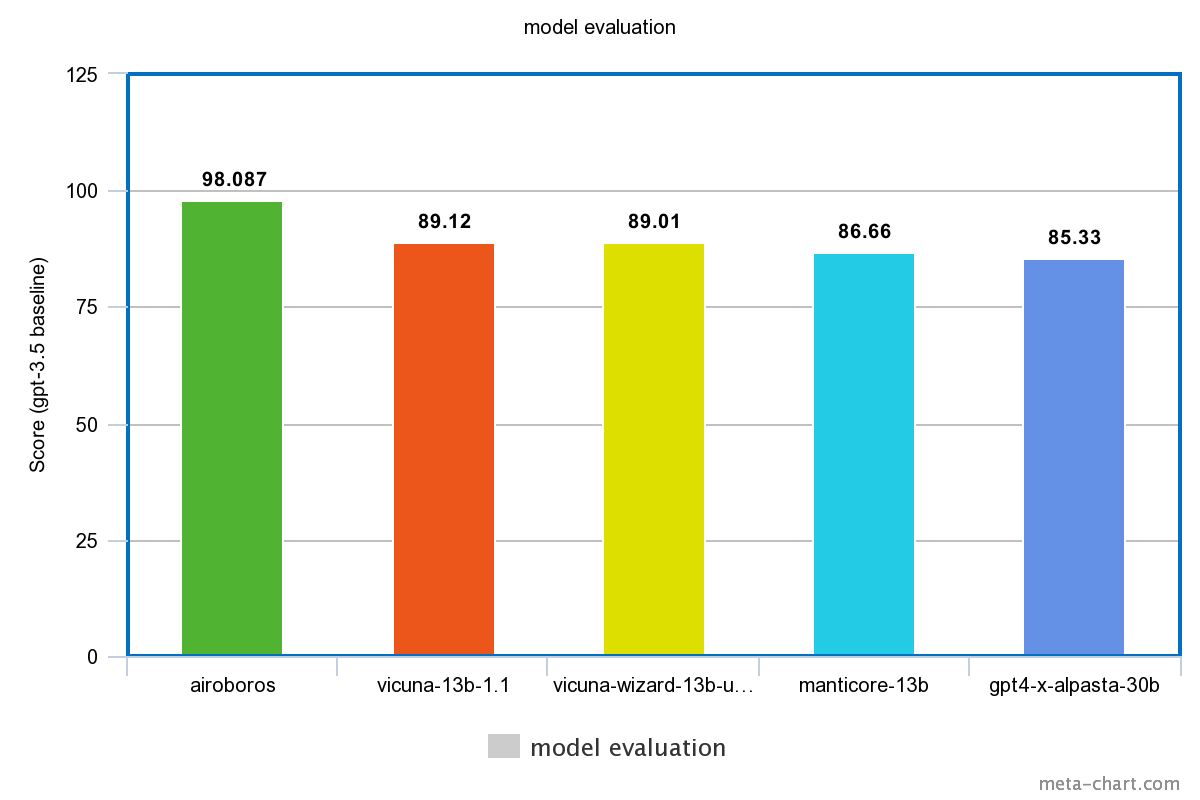

Eval (GPT-4 judging)

| model | raw score | gpt-3.5 adjusted score |
|---|---|---|
| airoboros-13b | 17947 | 98.087 |
| gpt35 | 18297 | 100.0 |
| gpt4-x-alpasta-30b | 15612 | 85.33 |
| manticore-13b | 15856 | 86.66 |
| vicuna-13b-1.1 | 16306 | 89.12 |
| wizard-vicuna-13b-uncensored | 16287 | 89.01 |
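The card does not state how the adjusted column is computed, but the numbers are consistent with each raw score expressed as a percentage of the gpt35 raw score. The following is a minimal sketch under that assumption; the helper name `adjusted_score` is hypothetical and not part of the airoboros tooling.

```python
# Raw scores copied from the table above.
raw_scores = {
    "airoboros-13b": 17947,
    "gpt35": 18297,
    "gpt4-x-alpasta-30b": 15612,
    "manticore-13b": 15856,
    "vicuna-13b-1.1": 16306,
    "wizard-vicuna-13b-uncensored": 16287,
}


def adjusted_score(raw, baseline):
    """Express a raw score as a percentage of the baseline (assumed formula)."""
    return 100.0 * raw / baseline


baseline = raw_scores["gpt35"]
adjusted = {
    model: adjusted_score(score, baseline)
    for model, score in raw_scores.items()
}

# Print models from highest to lowest adjusted score.
for model, value in sorted(adjusted.items(), key=lambda kv: -kv[1]):
    print(f"{model}: {value:.2f}")
```

Under this reading, the adjusted score is only a rescaling of the raw total, so the ranking of models is the same in both columns.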

Individual question scores, with ShareGPT links (200 prompts generated by GPT-4)

| question | airoboros-13b | gpt35 | gpt4-x-alpasta-30b | manticore-13b | vicuna-13b-1.1 | wizard-vicuna-13b-uncensored | link |
|---|---|---|---|---|---|---|---|
| 1 | 80 | 95 | 70 | 90 | 85 | 60 | eval |
| 2 | 20 | 95 | 40 | 30 | 90 | 80 | eval |
| 3 | 100 | 100 | 100 | 95 | 95 | 100 | eval |
| 4 | 90 | 100 | 85 | 60 | 95 | 100 | eval |
| 5 | 95 | 90 | 80 | 85 | 95 | 75 | eval |
| 6 | 100 | 95 | 90 | 95 | 98 | 92 | eval |
| 7 | 50 | 100 | 80 | 95 | 60 | 55 | eval |
| 8 | 70 | 90 | 80 | 60 | 85 | 40 | eval |
| 9 | 100 | 95 | 50 | 85 | 40 | 60 | eval |
| 10 | 85 | 60 | 55 | 65 | 50 | 70 | eval |
| 11 | 95 | 100 | 85 | 90 | 60 | 75 | eval |
| 12 | 100 | 95 | 70 | 80 | 50 | 85 | eval |
| 13 | 100 | 95 | 80 | 70 | 60 | 90 | eval |
| 14 | 95 | 100 | 70 | 85 | 90 | 90 | eval |
| 15 | 80 | 95 | 90 | 60 | 30 | 85 | eval |
| 16 | 60 | 95 | 0 | 75 | 50 | 40 | eval |
| 17 | 100 | 95 | 90 | 98 | 95 | 95 | eval |
| 18 | 60 | 85 | 40 | 50 | 20 | 0 | eval |
| 19 | 100 | 90 | 85 | 95 | 95 | 80 | eval |
| 20 | 100 | 95 | 100 | 95 | 90 | 95 | eval |
| 21 | 95 | 90 | 96 | 80 | 92 | 88 | eval |
| 22 | 95 | 92 | 90 | 93 | 89 | 91 | eval |
| 23 | 95 | 93 | 90 | 94 | 96 | 92 | eval |
| 24 | 95 | 90 | 93 | 88 | 92 | 85 | eval |
| 25 | 95 | 90 | 85 | 96 | 88 | 92 | eval |
| 26 | 95 | 95 | 90 | 93 | 92 | 91 | eval |
| 27 | 95 | 98 | 80 | 97 | 99 | 96 | eval |
| 28 | 95 | 93 | 90 | 87 | 92 | 89 | eval |
| 29 | 90 | 85 | 95 | 80 | 92 | 75 | eval |
| 30 | 90 | 85 | 95 | 93 | 80 | 92 | eval |
| 31 | 95 | 92 | 90 | 91 | 93 | 89 | eval |
| 32 | 100 | 95 | 90 | 85 | 80 | 95 | eval |
| 33 | 95 | 97 | 93 | 92 | 96 | 94 | eval |
| 34 | 95 | 93 | 94 | 90 | 88 | 92 | eval |
| 35 | 90 | 95 | 98 | 85 | 96 | 92 | eval |
| 36 | 90 | 88 | 85 | 80 | 82 | 84 | eval |
| 37 | 90 | 95 | 85 | 87 | 92 | 88 | eval |
| 38 | 95 | 97 | 96 | 90 | 93 | 92 | eval |
| 39 | 95 | 93 | 92 | 90 | 89 | 91 | eval |
| 40 | 90 | 95 | 93 | 92 | 94 | 91 | eval |
| 41 | 90 | 85 | 95 | 80 | 88 | 75 | eval |
| 42 | 85 | 90 | 95 | 88 | 92 | 80 | eval |
| 43 | 90 | 95 | 92 | 85 | 80 | 87 | eval |
| 44 | 85 | 90 | 95 | 80 | 88 | 75 | eval |
| 45 | 85 | 80 | 75 | 90 | 70 | 82 | eval |
| 46 | 90 | 85 | 95 | 92 | 93 | 80 | eval |
| 47 | 90 | 95 | 75 | 85 | 80 | 70 | eval |
| 48 | 85 | 90 | 80 | 88 | 82 | 83 | eval |
| 49 | 85 | 90 | 95 | 92 | 88 | 80 | eval |
| 50 | 85 | 90 | 80 | 75 | 95 | 88 | eval |
| 51 | 85 | 90 | 80 | 88 | 84 | 92 | eval |
| 52 | 80 | 90 | 75 | 85 | 70 | 95 | eval |
| 53 | 90 | 88 | 85 | 80 | 92 | 83 | eval |
| 54 | 85 | 75 | 90 | 80 | 78 | 88 | eval |
| 55 | 85 | 90 | 80 | 82 | 75 | 88 | eval |
| 56 | 90 | 85 | 40 | 95 | 80 | 88 | eval |
| 57 | 85 | 95 | 90 | 75 | 88 | 80 | eval |
| 58 | 85 | 95 | 90 | 92 | 89 | 88 | eval |
| 59 | 80 | 85 | 75 | 60 | 90 | 70 | eval |
| 60 | 85 | 90 | 87 | 80 | 88 | 75 | eval |
| 61 | 85 | 80 | 75 | 50 | 90 | 80 | eval |
| 62 | 95 | 80 | 90 | 85 | 75 | 82 | eval |
| 63 | 85 | 90 | 80 | 70 | 95 | 88 | eval |
| 64 | 90 | 95 | 70 | 85 | 80 | 75 | eval |
| 65 | 90 | 85 | 70 | 75 | 80 | 60 | eval |
| 66 | 95 | 90 | 70 | 50 | 85 | 80 | eval |
| 67 | 80 | 85 | 40 | 60 | 90 | 95 | eval |
| 68 | 75 | 60 | 80 | 55 | 70 | 85 | eval |
| 69 | 90 | 85 | 60 | 50 | 80 | 95 | eval |
| 70 | 45 | 85 | 60 | 20 | 65 | 75 | eval |
| 71 | 85 | 90 | 30 | 60 | 80 | 70 | eval |
| 72 | 90 | 95 | 80 | 40 | 85 | 70 | eval |
| 73 | 85 | 90 | 70 | 75 | 80 | 95 | eval |
| 74 | 90 | 70 | 50 | 20 | 60 | 40 | eval |
| 75 | 90 | 95 | 75 | 60 | 85 | 80 | eval |
| 76 | 85 | 80 | 60 | 70 | 65 | 75 | eval |
| 77 | 90 | 85 | 80 | 75 | 82 | 70 | eval |
| 78 | 90 | 95 | 80 | 70 | 85 | 75 | eval |
| 79 | 85 | 75 | 30 | 80 | 90 | 70 | eval |
| 80 | 85 | 90 | 50 | 70 | 80 | 60 | eval |
| 81 | 100 | 95 | 98 | 99 | 97 | 96 | eval |
| 82 | 95 | 90 | 92 | 93 | 91 | 89 | eval |
| 83 | 95 | 92 | 90 | 85 | 88 | 91 | eval |
| 84 | 100 | 95 | 98 | 97 | 96 | 99 | eval |
| 85 | 100 | 100 | 100 | 90 | 100 | 95 | eval |
| 86 | 100 | 95 | 98 | 97 | 94 | 99 | eval |
| 87 | 95 | 90 | 92 | 93 | 94 | 91 | eval |
| 88 | 100 | 95 | 98 | 90 | 96 | 95 | eval |
| 89 | 95 | 96 | 92 | 90 | 89 | 93 | eval |
| 90 | 100 | 95 | 93 | 90 | 92 | 88 | eval |
| 91 | 100 | 100 | 98 | 97 | 99 | 100 | eval |
| 92 | 95 | 90 | 92 | 85 | 93 | 94 | eval |
| 93 | 95 | 93 | 90 | 92 | 96 | 91 | eval |
| 94 | 95 | 96 | 92 | 90 | 93 | 91 | eval |
| 95 | 95 | 90 | 92 | 93 | 91 | 89 | eval |
| 96 | 100 | 98 | 95 | 97 | 96 | 99 | eval |
| 97 | 90 | 95 | 85 | 88 | 92 | 87 | eval |
| 98 | 95 | 93 | 90 | 92 | 89 | 88 | eval |
| 99 | 100 | 95 | 97 | 90 | 96 | 94 | eval |
| 100 | 95 | 93 | 90 | 92 | 94 | 91 | eval |
| 101 | 95 | 92 | 90 | 93 | 94 | 88 | eval |
| 102 | 95 | 92 | 60 | 97 | 90 | 96 | eval |
| 103 | 95 | 90 | 92 | 93 | 91 | 89 | eval |
| 104 | 95 | 90 | 97 | 92 | 91 | 93 | eval |
| 105 | 90 | 95 | 93 | 85 | 92 | 91 | eval |
| 106 | 95 | 90 | 40 | 92 | 93 | 85 | eval |
| 107 | 100 | 100 | 95 | 90 | 95 | 90 | eval |
| 108 | 90 | 95 | 96 | 98 | 93 | 92 | eval |
| 109 | 90 | 95 | 92 | 89 | 93 | 94 | eval |
| 110 | 100 | 95 | 100 | 98 | 96 | 99 | eval |
| 111 | 100 | 100 | 95 | 90 | 100 | 90 | eval |
| 112 | 90 | 85 | 88 | 92 | 87 | 91 | eval |
| 113 | 95 | 97 | 90 | 92 | 93 | 94 | eval |
| 114 | 90 | 95 | 85 | 88 | 92 | 89 | eval |
| 115 | 95 | 93 | 90 | 92 | 94 | 91 | eval |
| 116 | 90 | 95 | 85 | 80 | 88 | 82 | eval |
| 117 | 95 | 90 | 60 | 85 | 93 | 70 | eval |
| 118 | 95 | 92 | 94 | 93 | 96 | 90 | eval |
| 119 | 95 | 90 | 85 | 93 | 87 | 92 | eval |
| 120 | 95 | 96 | 93 | 90 | 97 | 92 | eval |
| 121 | 100 | 0 | 0 | 100 | 0 | 0 | eval |
| 122 | 60 | 100 | 0 | 80 | 0 | 0 | eval |
| 123 | 0 | 100 | 60 | 0 | 0 | 90 | eval |
| 124 | 100 | 100 | 0 | 100 | 100 | 100 | eval |
| 125 | 100 | 100 | 100 | 100 | 95 | 100 | eval |
| 126 | 100 | 100 | 100 | 50 | 90 | 100 | eval |
| 127 | 100 | 100 | 100 | 100 | 95 | 90 | eval |
| 128 | 100 | 100 | 100 | 95 | 0 | 100 | eval |
| 129 | 50 | 95 | 20 | 10 | 30 | 85 | eval |
| 130 | 100 | 100 | 60 | 20 | 30 | 40 | eval |
| 131 | 100 | 0 | 0 | 0 | 0 | 100 | eval |
| 132 | 0 | 100 | 60 | 0 | 0 | 80 | eval |
| 133 | 50 | 100 | 20 | 90 | 0 | 10 | eval |
| 134 | 100 | 100 | 100 | 100 | 100 | 100 | eval |
| 135 | 100 | 100 | 100 | 100 | 100 | 100 | eval |
| 136 | 40 | 100 | 95 | 0 | 100 | 40 | eval |
| 137 | 100 | 100 | 100 | 100 | 80 | 100 | eval |
| 138 | 100 | 100 | 100 | 0 | 90 | 40 | eval |
| 139 | 0 | 100 | 100 | 50 | 70 | 20 | eval |
| 140 | 100 | 100 | 50 | 90 | 0 | 95 | eval |
| 141 | 100 | 95 | 90 | 85 | 98 | 80 | eval |
| 142 | 95 | 98 | 90 | 92 | 96 | 89 | eval |
| 143 | 90 | 95 | 75 | 85 | 80 | 82 | eval |
| 144 | 95 | 98 | 50 | 92 | 96 | 94 | eval |
| 145 | 95 | 90 | 0 | 93 | 92 | 94 | eval |
| 146 | 95 | 90 | 85 | 92 | 80 | 88 | eval |
| 147 | 95 | 93 | 75 | 85 | 90 | 92 | eval |
| 148 | 90 | 95 | 88 | 85 | 92 | 89 | eval |
| 149 | 100 | 100 | 100 | 95 | 97 | 98 | eval |
| 150 | 85 | 40 | 30 | 95 | 90 | 88 | eval |
| 151 | 90 | 95 | 92 | 85 | 88 | 93 | eval |
| 152 | 95 | 96 | 92 | 90 | 89 | 93 | eval |
| 153 | 90 | 95 | 85 | 80 | 92 | 88 | eval |
| 154 | 95 | 98 | 65 | 90 | 85 | 93 | eval |
| 155 | 95 | 92 | 96 | 97 | 90 | 89 | eval |
| 156 | 95 | 90 | 92 | 91 | 89 | 93 | eval |
| 157 | 95 | 90 | 80 | 75 | 95 | 90 | eval |
| 158 | 92 | 40 | 30 | 95 | 90 | 93 | eval |
| 159 | 90 | 92 | 85 | 88 | 89 | 87 | eval |
| 160 | 95 | 80 | 90 | 92 | 91 | 88 | eval |
| 161 | 95 | 93 | 92 | 90 | 91 | 94 | eval |
| 162 | 100 | 98 | 95 | 90 | 92 | 96 | eval |
| 163 | 95 | 92 | 80 | 85 | 90 | 93 | eval |
| 164 | 95 | 98 | 90 | 88 | 97 | 96 | eval |
| 165 | 90 | 95 | 85 | 88 | 86 | 92 | eval |
| 166 | 100 | 100 | 100 | 100 | 100 | 100 | eval |
| 167 | 90 | 95 | 85 | 96 | 92 | 88 | eval |
| 168 | 100 | 98 | 95 | 99 | 97 | 96 | eval |
| 169 | 95 | 92 | 70 | 90 | 93 | 89 | eval |
| 170 | 95 | 90 | 88 | 92 | 94 | 93 | eval |
| 171 | 95 | 90 | 93 | 92 | 85 | 94 | eval |
| 172 | 95 | 93 | 90 | 87 | 92 | 91 | eval |
| 173 | 95 | 93 | 90 | 96 | 92 | 91 | eval |
| 174 | 95 | 97 | 85 | 96 | 98 | 90 | eval |
| 175 | 95 | 92 | 90 | 85 | 93 | 94 | eval |
| 176 | 95 | 96 | 92 | 90 | 97 | 93 | eval |
| 177 | 95 | 93 | 96 | 94 | 90 | 92 | eval |
| 178 | 95 | 94 | 93 | 92 | 90 | 89 | eval |
| 179 | 90 | 85 | 95 | 80 | 87 | 75 | eval |
| 180 | 95 | 94 | 92 | 93 | 90 | 96 | eval |
| 181 | 95 | 100 | 90 | 95 | 95 | 95 | eval |
| 182 | 100 | 95 | 85 | 100 | 0 | 90 | eval |
| 183 | 100 | 95 | 90 | 95 | 100 | 95 | eval |
| 184 | 95 | 90 | 60 | 95 | 85 | 80 | eval |
| 185 | 100 | 95 | 90 | 98 | 97 | 99 | eval |
| 186 | 95 | 90 | 85 | 95 | 80 | 92 | eval |
| 187 | 100 | 95 | 100 | 98 | 100 | 90 | eval |
| 188 | 100 | 95 | 80 | 85 | 90 | 85 | eval |
| 189 | 100 | 90 | 95 | 85 | 95 | 100 | eval |
| 190 | 95 | 90 | 85 | 80 | 88 | 92 | eval |
| 191 | 100 | 100 | 0 | 0 | 100 | 0 | eval |
| 192 | 100 | 100 | 100 | 50 | 100 | 75 | eval |
| 193 | 100 | 100 | 0 | 0 | 100 | 0 | eval |
| 194 | 0 | 100 | 0 | 0 | 0 | 0 | eval |
| 195 | 100 | 100 | 50 | 0 | 0 | 0 | eval |
| 196 | 100 | 100 | 100 | 100 | 100 | 95 | eval |
| 197 | 100 | 100 | 50 | 0 | 0 | 0 | eval |
| 198 | 100 | 100 | 0 | 0 | 100 | 0 | eval |
| 199 | 90 | 85 | 80 | 95 | 70 | 75 | eval |
| 200 | 100 | 100 | 0 | 0 | 0 | 0 | eval |

Training data

I used a jailbreak prompt to generate the synthetic instructions, which resulted in some training data that would likely be censored by other models, such as how-to prompts about synthesizing drugs, making homemade flamethrowers, etc. Mind you, this is all generated by ChatGPT, not me. My goal was to simply test some of the capabilities of ChatGPT when unfiltered (as much as possible), and not to intentionally produce any harmful/dangerous/etc. content.

The jailbreak prompt I used is the default prompt in the Python code when the --uncensored flag is set: https://github.com/jondurbin/airoboros/blob/main/airoboros/self_instruct.py#L39

I also did a few passes of manual cleanup to remove some bad prompts, but mostly I left the data as-is. Initially, the model was fairly bad at math/extrapolation, closed question-answering (heavy hallucination), and coding, so I did one more fine-tuning pass with additional synthetic instructions aimed at those types of problems.

Both the initial instructions and final-pass fine-tuning instructions will be published soon.

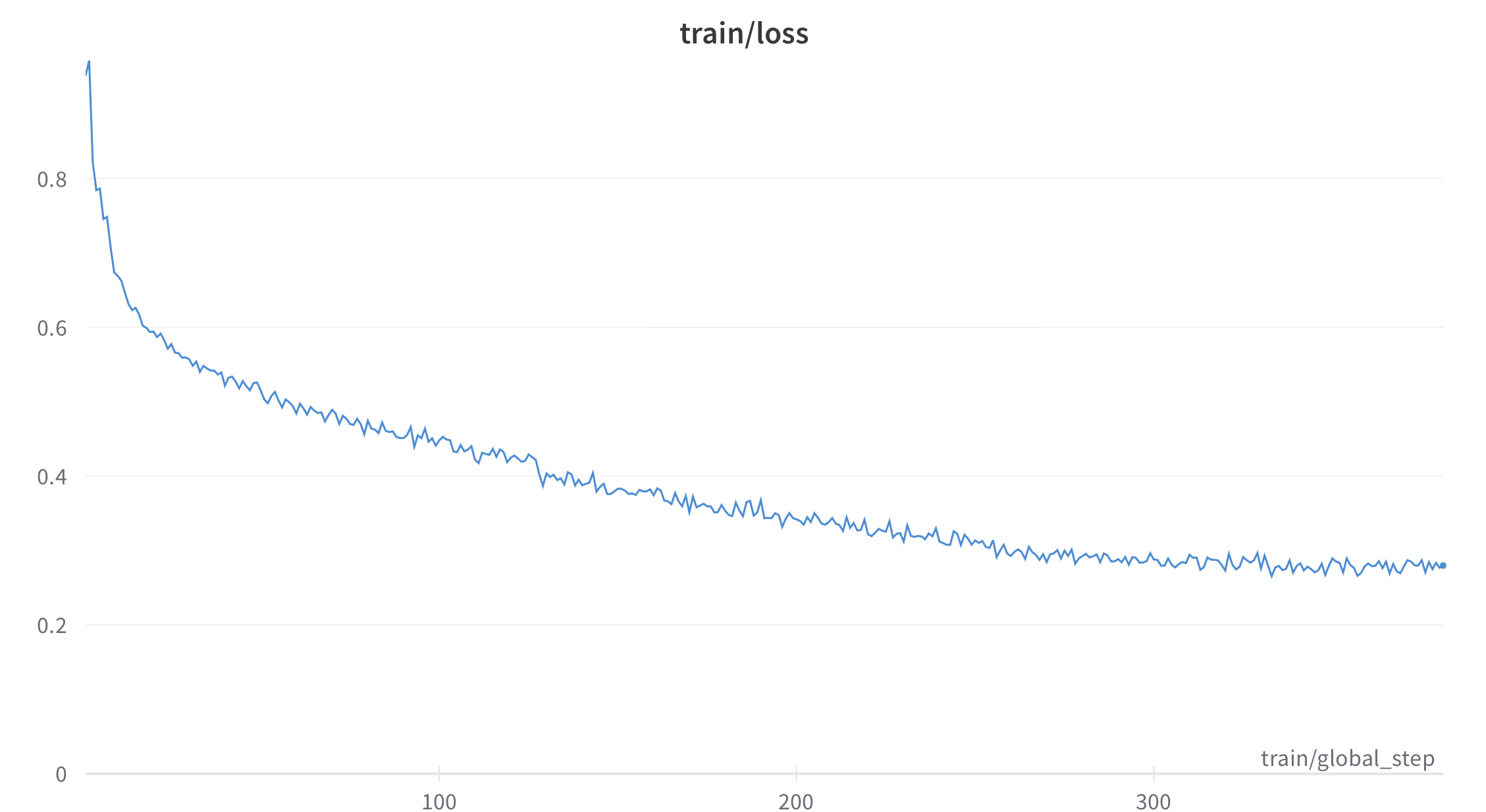

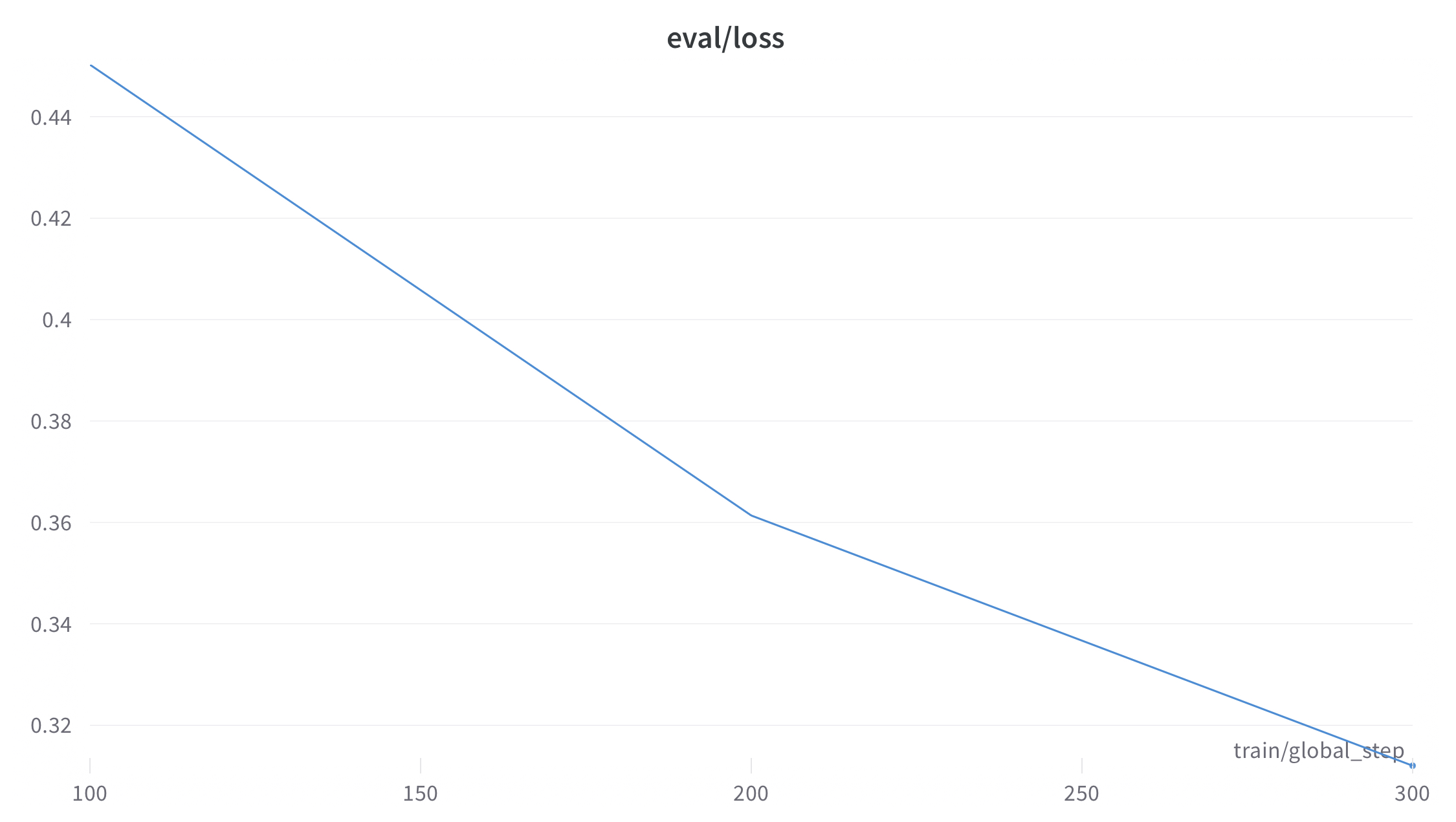

Fine-tuning method

I used the excellent FastChat module, running with:

source /workspace/venv/bin/activate
export NCCL_P2P_DISABLE=1
export NCCL_P2P_LEVEL=LOC
torchrun --nproc_per_node=8 --master_port=20001 /workspace/FastChat/fastchat/train/train_mem.py \
  --model_name_or_path /workspace/llama-13b \
  --data_path /workspace/as_conversations.json \
  --bf16 True \
  --output_dir /workspace/airoboros-uncensored-13b \
  --num_train_epochs 3 \
  --per_device_train_batch_size 20 \
  --per_device_eval_batch_size 20 \
  --gradient_accumulation_steps 2 \
  --evaluation_strategy "steps" \
  --eval_steps 500 \
  --save_strategy "steps" \
  --save_steps 500 \
  --save_total_limit 10 \
  --learning_rate 2e-5 \
  --weight_decay 0. \
  --warmup_ratio 0.04 \
  --lr_scheduler_type "cosine" \
  --logging_steps 1 \
  --fsdp "full_shard auto_wrap offload" \
  --fsdp_transformer_layer_cls_to_wrap 'LlamaDecoderLayer' \
  --tf32 True \
  --model_max_length 2048 \
  --gradient_checkpointing True \
  --lazy_preprocess True

This ran on 8x NVIDIA A100 80GB GPUs for about 40 hours.
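As a rough sanity check on the command above, the effective global batch size and approximate GPU time can be worked out from the flags and the hardware description. This is plain arithmetic on values stated in this card; the variable names are illustrative, and the 40-hour figure is the approximate wall-clock time quoted above.

```python
# Values taken from the torchrun command and the hardware note in this card.
nproc_per_node = 8                 # one process per GPU
per_device_train_batch_size = 20
gradient_accumulation_steps = 2
wall_clock_hours = 40              # "about 40 hours", so this is approximate

# Sequences contributing to each optimizer step across all GPUs.
effective_batch_size = (
    nproc_per_node * per_device_train_batch_size * gradient_accumulation_steps
)

# Approximate total GPU time spent on the run.
approx_gpu_hours = nproc_per_node * wall_clock_hours

print(f"effective batch size: {effective_batch_size}")
print(f"approximate GPU-hours: {approx_gpu_hours}")
```

Anyone reproducing the run on fewer GPUs would presumably need to raise `--gradient_accumulation_steps` to keep the same effective batch size.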

Prompt format

The prompt should be 1:1 compatible with the FastChat/vicuna format, e.g.:

With a preamble:

A chat between a curious user and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the user's questions.

USER: [prompt]

<\s>

ASSISTANT:

Or just:

USER: [prompt]

<\s>

ASSISTANT:
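The two variants above can be assembled with a small helper. This is a minimal sketch, not code from FastChat or airoboros: the function name `build_prompt` is hypothetical, the separator string is copied verbatim from this card, and joining the pieces with newlines is an assumption based on how the format is laid out here. It may be worth checking the separator against the tokenizer's actual end-of-sequence string before relying on it.

```python
# Preamble text copied from this card.
PREAMBLE = (
    "A chat between a curious user and an artificial intelligence assistant. "
    "The assistant gives helpful, detailed, and polite answers to the user's "
    "questions."
)

# Separator copied verbatim from this card (assumption: it is used as written).
SEPARATOR = r"<\s>"


def build_prompt(user_message, with_preamble=True):
    """Assemble a prompt in the layout shown above (newline-joined, assumed)."""
    parts = []
    if with_preamble:
        parts.append(PREAMBLE)
    parts.extend([f"USER: {user_message}", SEPARATOR, "ASSISTANT:"])
    return "\n".join(parts)


if __name__ == "__main__":
    print(build_prompt("What is the capital of France?"))
    print("---")
    print(build_prompt("What is the capital of France?", with_preamble=False))
```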

License

The model is licensed under the LLaMA model license, and the dataset is subject to OpenAI's terms because it was generated with ChatGPT. Everything else is free.