ITALIAN-LEGAL-BERT:A pre-trained Transformer Language Model for Italian Law

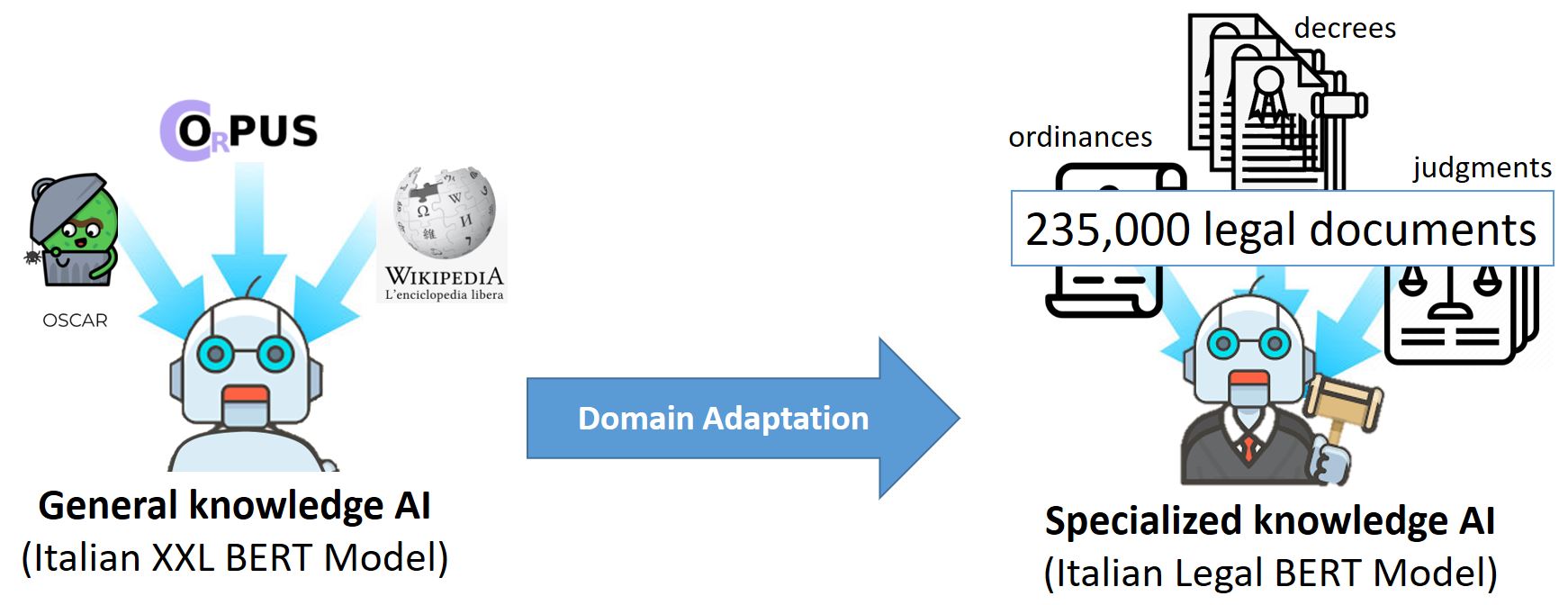

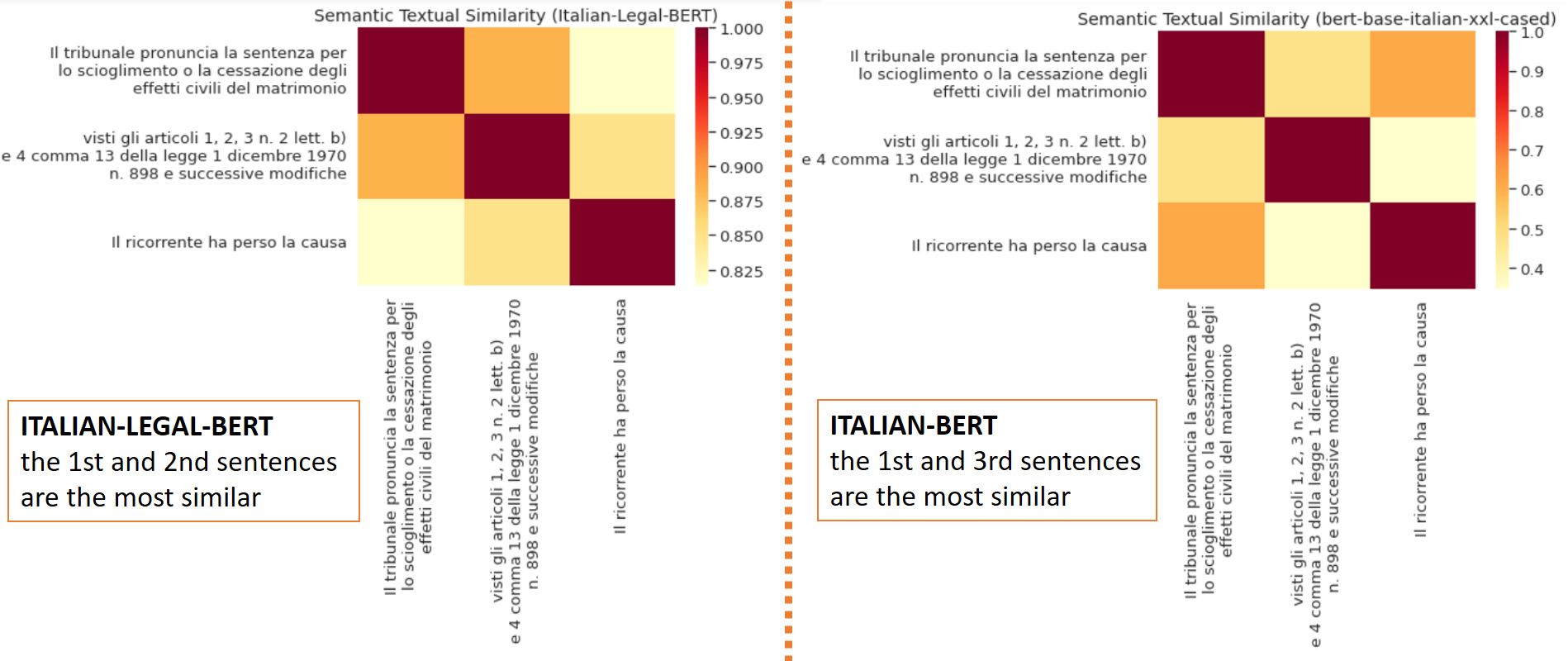

ITALIAN-LEGAL-BERT is based on bert-base-italian-xxl-cased with additional pre-training of the Italian BERT model on Italian civil law corpora. It achieves better results than the ‘general-purpose’ Italian BERT in different domain-specific tasks.

ITALIAN-LEGAL-BERT variants [NEW!!!]

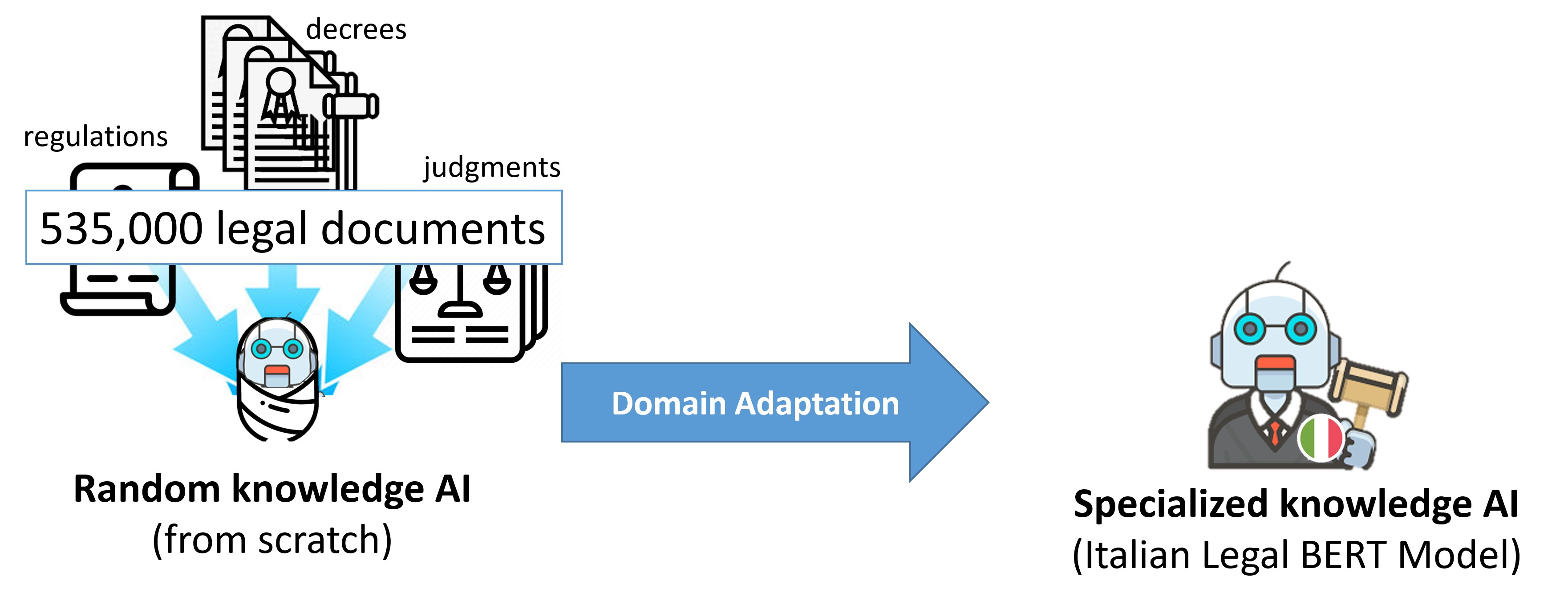

- FROM SCRATCH, It is the ITALIAN-LEGAL-BERT variant pre-trained from scratch on Italian legal documents (ITA-LEGAL-BERT-SC) based on the CamemBERT architecture

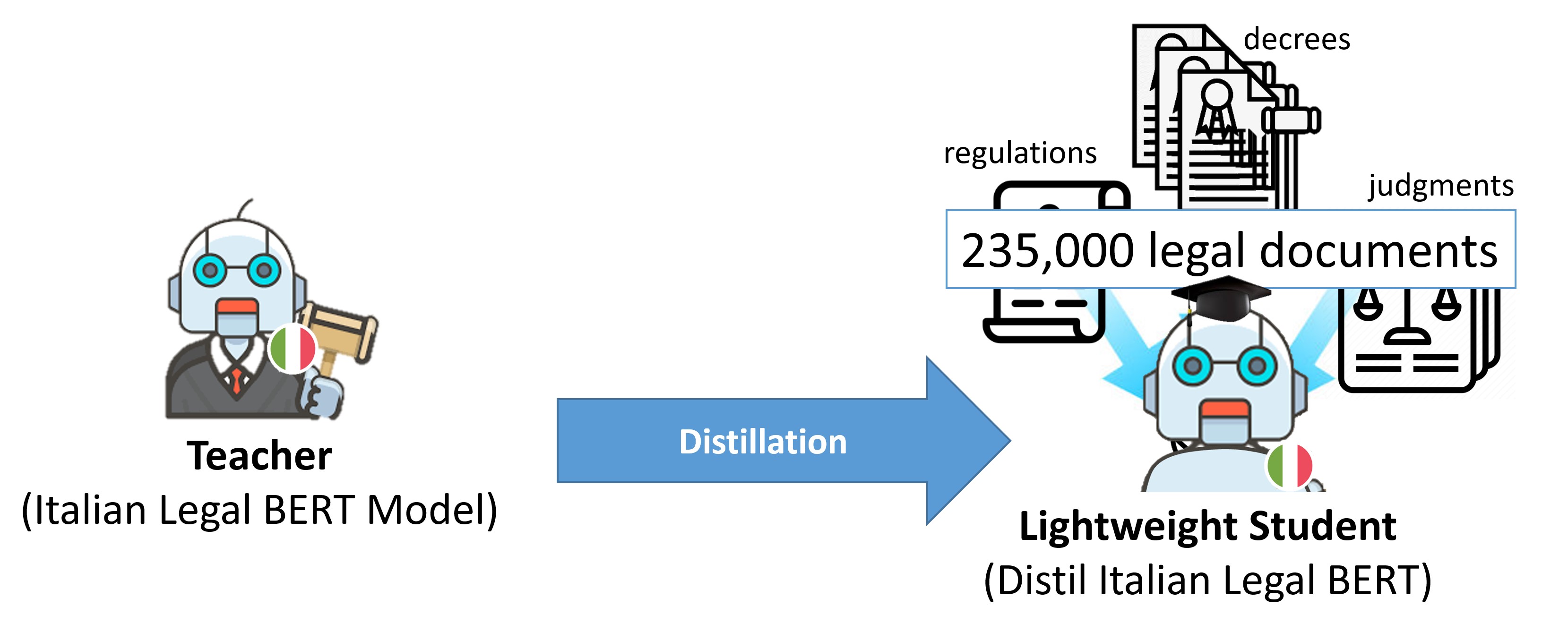

- DISTILLED, a distilled version of ITALIAN-LEGAL-BERT ( DISTIL-ITA-LEGAL-BERT)

For long documents

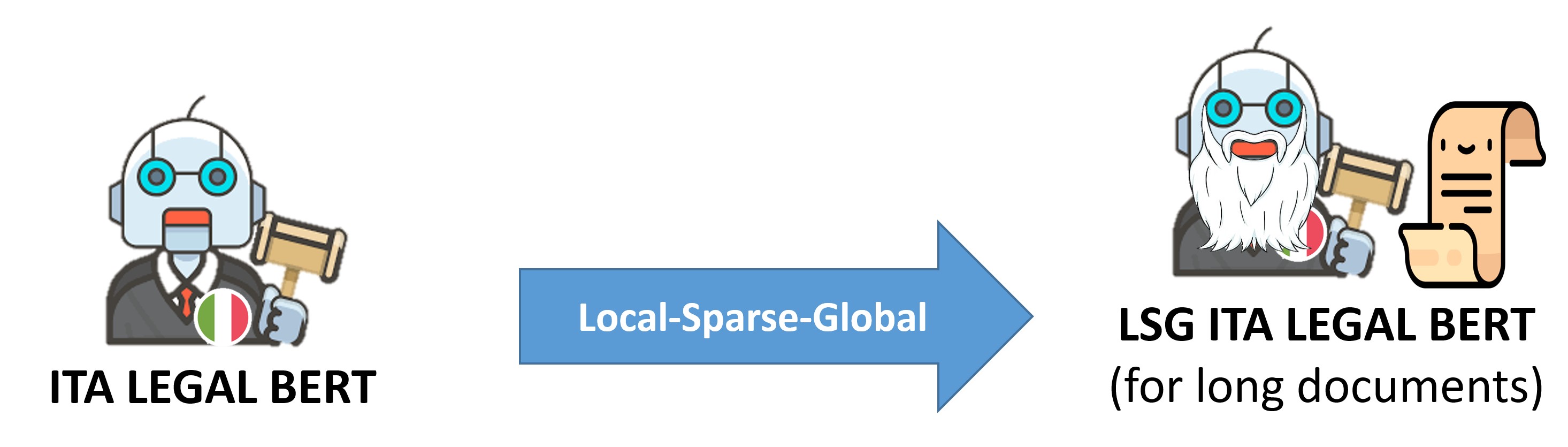

- LSG ITA LEGAL BERT, Local-Sparse-Global version of ITALIAN-LEGAL-BERT (FURTHER PRETRAINED)

- LSG ITA LEGAL BERT-SC, Local-Sparse-Global version of ITALIAN-LEGAL-BERT-SC (FROM SCRATCH)

Note: We are working on the extended version of the paper with more details and the results of these new models. We will update you soon

Training procedure

We initialized ITALIAN-LEGAL-BERT with ITALIAN XXL BERT and pretrained for an additional 4 epochs on 3.7 GB of preprocessed text from the National Jurisprudential Archive using the Huggingface PyTorch-Transformers library. We used BERT architecture with a language modeling head on top, AdamW Optimizer, initial learning rate 5e-5 (with linear learning rate decay, ends at 2.525e-9), sequence length 512, batch size 10 (imposed by GPU capacity), 8.4 million training steps, device 1*GPU V100 16GB

Usage

ITALIAN-LEGAL-BERT model can be loaded like:

from transformers import AutoModel, AutoTokenizer

model_name = "dlicari/Italian-Legal-BERT"

tokenizer = AutoTokenizer.from_pretrained(model_name)

model = AutoModel.from_pretrained(model_name)

You can use the Transformers library fill-mask pipeline to do inference with ITALIAN-LEGAL-BERT.

from transformers import pipeline

model_name = "dlicari/Italian-Legal-BERT"

fill_mask = pipeline("fill-mask", model_name)

fill_mask("Il [MASK] ha chiesto revocarsi l'obbligo di pagamento")

#[{'sequence': "Il ricorrente ha chiesto revocarsi l'obbligo di pagamento",'score': 0.7264330387115479},

# {'sequence': "Il convenuto ha chiesto revocarsi l'obbligo di pagamento",'score': 0.09641049802303314},

# {'sequence': "Il resistente ha chiesto revocarsi l'obbligo di pagamento",'score': 0.039877112954854965},

# {'sequence': "Il lavoratore ha chiesto revocarsi l'obbligo di pagamento",'score': 0.028993653133511543},

# {'sequence': "Il Ministero ha chiesto revocarsi l'obbligo di pagamento", 'score': 0.025297977030277252}]

In this COLAB: ITALIAN-LEGAL-BERT: Minimal Start for Italian Legal Downstream Tasks how to use it for sentence similarity, sentence classification, and named entity recognition

Citation

If you find our resource or paper is useful, please consider including the following citation in your paper.@inproceedings{licari_italian-legal-bert_2022,

address = {Bozen-Bolzano, Italy},

series = {{CEUR} {Workshop} {Proceedings}},

title = {{ITALIAN}-{LEGAL}-{BERT}: {A} {Pre}-trained {Transformer} {Language} {Model} for {Italian} {Law}},

volume = {3256},

shorttitle = {{ITALIAN}-{LEGAL}-{BERT}},

url = {https://ceur-ws.org/Vol-3256/#km4law3},

language = {en},

urldate = {2022-11-19},

booktitle = {Companion {Proceedings} of the 23rd {International} {Conference} on {Knowledge} {Engineering} and {Knowledge} {Management}},

publisher = {CEUR},

author = {Licari, Daniele and Comandè, Giovanni},

editor = {Symeonidou, Danai and Yu, Ran and Ceolin, Davide and Poveda-Villalón, María and Audrito, Davide and Caro, Luigi Di and Grasso, Francesca and Nai, Roberto and Sulis, Emilio and Ekaputra, Fajar J. and Kutz, Oliver and Troquard, Nicolas},

month = sep,

year = {2022},

note = {ISSN: 1613-0073},

file = {Full Text PDF:https://ceur-ws.org/Vol-3256/km4law3.pdf},

}

- Downloads last month

- 13,273