The full dataset viewer is not available (click to read why). Only showing a preview of the rows.

Error code: DatasetGenerationError

Exception: ArrowNotImplementedError

Message: Cannot write struct type '_format_kwargs' with no child field to Parquet. Consider adding a dummy child field.

Traceback: Traceback (most recent call last):

File "/src/services/worker/.venv/lib/python3.9/site-packages/datasets/builder.py", line 2011, in _prepare_split_single

writer.write_table(table)

File "/src/services/worker/.venv/lib/python3.9/site-packages/datasets/arrow_writer.py", line 583, in write_table

self._build_writer(inferred_schema=pa_table.schema)

File "/src/services/worker/.venv/lib/python3.9/site-packages/datasets/arrow_writer.py", line 404, in _build_writer

self.pa_writer = self._WRITER_CLASS(self.stream, schema)

File "/src/services/worker/.venv/lib/python3.9/site-packages/pyarrow/parquet/core.py", line 1010, in __init__

self.writer = _parquet.ParquetWriter(

File "pyarrow/_parquet.pyx", line 2157, in pyarrow._parquet.ParquetWriter.__cinit__

File "pyarrow/error.pxi", line 154, in pyarrow.lib.pyarrow_internal_check_status

File "pyarrow/error.pxi", line 91, in pyarrow.lib.check_status

pyarrow.lib.ArrowNotImplementedError: Cannot write struct type '_format_kwargs' with no child field to Parquet. Consider adding a dummy child field.

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/src/services/worker/.venv/lib/python3.9/site-packages/datasets/builder.py", line 2027, in _prepare_split_single

num_examples, num_bytes = writer.finalize()

File "/src/services/worker/.venv/lib/python3.9/site-packages/datasets/arrow_writer.py", line 602, in finalize

self._build_writer(self.schema)

File "/src/services/worker/.venv/lib/python3.9/site-packages/datasets/arrow_writer.py", line 404, in _build_writer

self.pa_writer = self._WRITER_CLASS(self.stream, schema)

File "/src/services/worker/.venv/lib/python3.9/site-packages/pyarrow/parquet/core.py", line 1010, in __init__

self.writer = _parquet.ParquetWriter(

File "pyarrow/_parquet.pyx", line 2157, in pyarrow._parquet.ParquetWriter.__cinit__

File "pyarrow/error.pxi", line 154, in pyarrow.lib.pyarrow_internal_check_status

File "pyarrow/error.pxi", line 91, in pyarrow.lib.check_status

pyarrow.lib.ArrowNotImplementedError: Cannot write struct type '_format_kwargs' with no child field to Parquet. Consider adding a dummy child field.

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "/src/services/worker/src/worker/job_runners/config/parquet_and_info.py", line 1529, in compute_config_parquet_and_info_response

parquet_operations = convert_to_parquet(builder)

File "/src/services/worker/src/worker/job_runners/config/parquet_and_info.py", line 1154, in convert_to_parquet

builder.download_and_prepare(

File "/src/services/worker/.venv/lib/python3.9/site-packages/datasets/builder.py", line 1027, in download_and_prepare

self._download_and_prepare(

File "/src/services/worker/.venv/lib/python3.9/site-packages/datasets/builder.py", line 1122, in _download_and_prepare

self._prepare_split(split_generator, **prepare_split_kwargs)

File "/src/services/worker/.venv/lib/python3.9/site-packages/datasets/builder.py", line 1882, in _prepare_split

for job_id, done, content in self._prepare_split_single(

File "/src/services/worker/.venv/lib/python3.9/site-packages/datasets/builder.py", line 2038, in _prepare_split_single

raise DatasetGenerationError("An error occurred while generating the dataset") from e

datasets.exceptions.DatasetGenerationError: An error occurred while generating the datasetNeed help to make the dataset viewer work? Make sure to review how to configure the dataset viewer, and open a discussion for direct support.

_data_files

list | _fingerprint

string | _format_columns

sequence | _format_kwargs

dict | _format_type

null | _indexes

dict | _output_all_columns

bool | _split

null |

|---|---|---|---|---|---|---|---|

[

{

"filename": "dataset.arrow"

}

] | 6f6a46094968e7bb | [

"tags",

"tokens"

] | {} | null | {} | false | null |

Stocks NER 2000 Sample Test Dataset for Named Entity Recognition

This dataset has been automatically processed by AutoTrain for the project stocks-ner-2000-sample-test, and is perfect for training models for Named Entity Recognition (NER) in the stock market domain.

Dataset Description

The dataset includes 2000 samples of stock market related text, with each sample consisting of a sequence of tokens and their corresponding named entity tags. The language of the dataset is English (BCP-47 code: 'en').

Dataset Structure

The dataset is structured as a list of data instances, where each instance includes the following fields:

- tokens: a sequence of strings representing the text in the sample.

- tags: a sequence of integers representing the named entity tags for each token in the sample. There are a total of 12 named entities in the dataset, including 'NANA', 'btst', 'delivery', 'enter', 'entry_momentum', 'exit', 'exit2', 'exit3', 'intraday', 'sl', 'symbol', and 'touched'.

Each sample in the dataset looks like this:

[

{

"tokens": [

"MAXVIL",

" : CONVERGENCE OF AVERAGES HAPPENING, VOLUMES ABOVE AVERAGE RSI FULLY BREAK OUT "

],

"tags": [

10,

0

]

},

{

"tokens": [

"INTRADAY",

" : BUY ",

"CAMS",

" ABOVE ",

"2625",

" SL ",

"2595",

" TARGET ",

"2650",

" - ",

"2675",

" - ",

"2700",

" "

],

"tags": [

8,

0,

10,

0,

3,

0,

9,

0,

5,

0,

6,

0,

7,

0

]

}

]

Dataset Splits

The dataset is split into a train and validation split, with 1261 samples in the train split and 480 samples in the validation split.

This dataset is designed to train models for Named Entity Recognition in the stock market domain and can be used for natural language processing (NLP) research and development. Download this dataset now and take the first step towards building your own state-of-the-art NER model for stock market text.

GitHub Link to this project : Telegram Trade Msg Backtest ML

Need custom model for your application? : Place a order on hjLabs.in : Custom Token Classification or Named Entity Recognition (NER) model as in Natural Language Processing (NLP) Machine Learning

What this repository contains? :

Label data using LabelStudio NER(Named Entity Recognition or Token Classification) tool.

convert to

convert to

Convert LabelStudio CSV or JSON to HuggingFace-autoTrain dataset conversion script

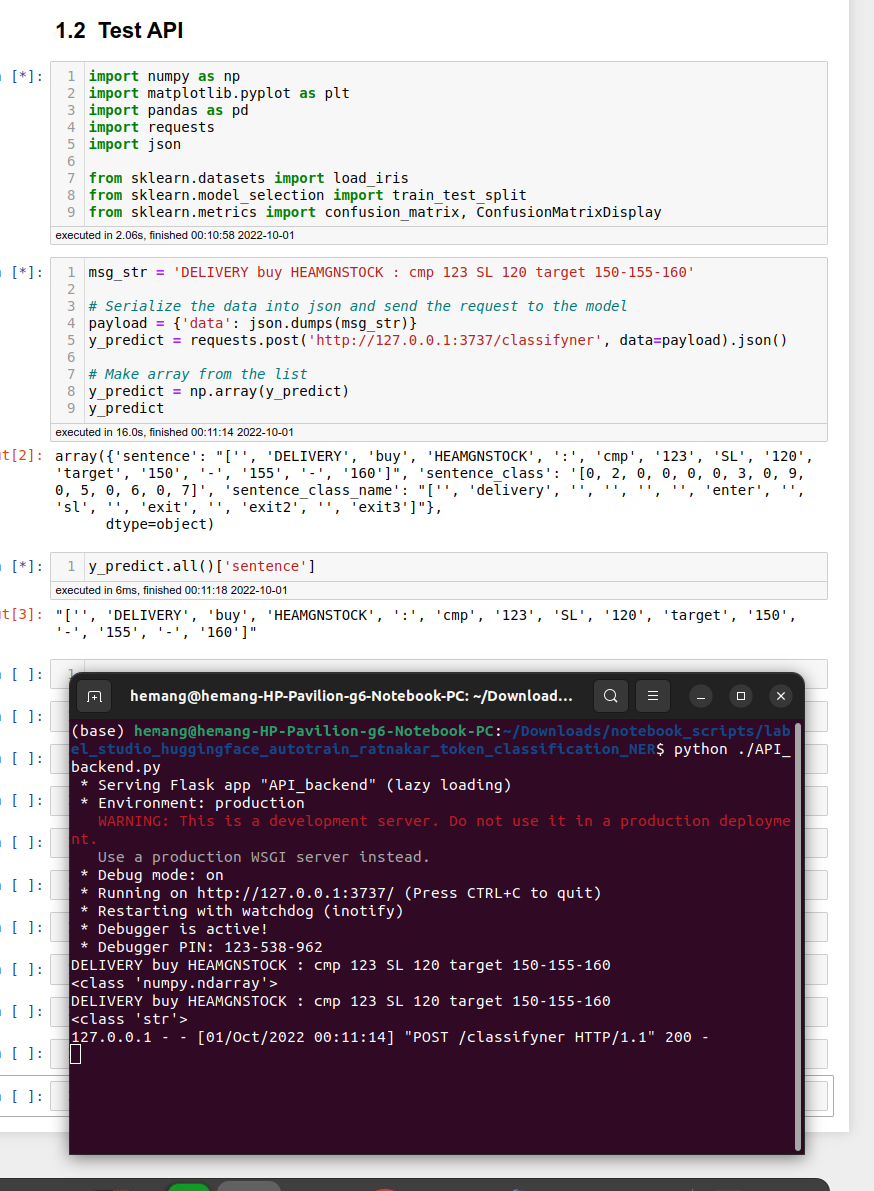

Use Hugginface-autoTrain model to predict labels on new data in LabelStudio using LabelStudio-ML-Backend.

Define python function to predict labels using Hugginface-autoTrain model.

Only label new data from newly predicted-labels-dataset that has falsified labels.

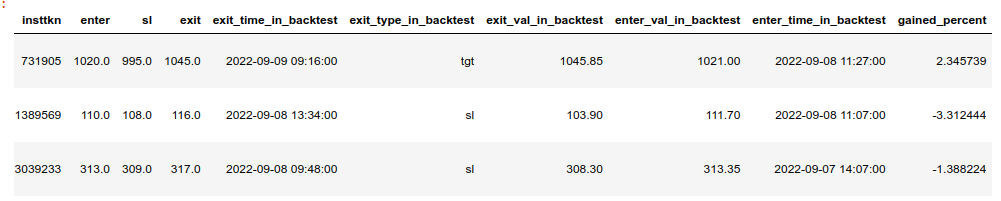

Backtest Truely labelled dataset against real historical data of the stock using zerodha kiteconnect and jugaad_trader.

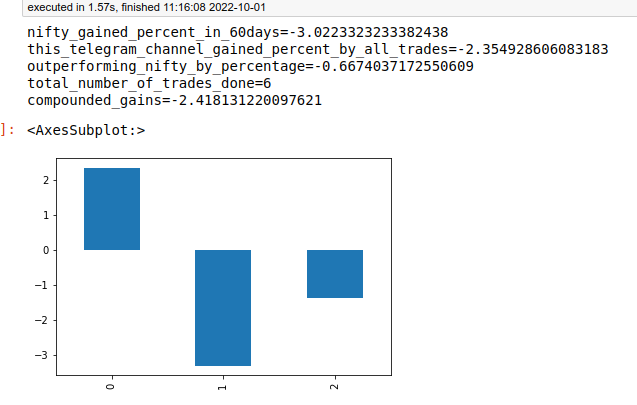

Evaluate total gained percentage since inception summation-wise and compounded and plot.

Listen to telegram channel for new LIVE messages using telegram API for algotrading.

Serve the app as flask web API for web request and respond to it as labelled tokens.

Outperforming or underperforming results of the telegram channel tips against exchange index by percentage.

Place a custom order on hjLabs.in : https://hjLabs.in

Social Media :

- WhatsApp/917016525813

- telegram/hjlabs

- Gmail/[email protected]

- Facebook/hemangjoshi37

- Twitter/HemangJ81509525

- LinkedIn/hemang-joshi-046746aa

- Tumblr/hemangjoshi37a-blog

- Pinterest/hemangjoshi37a

- Blogger/hemangjoshi

- Instagram/hemangjoshi37

Checkout Our Other Repositories

- pyPortMan

- transformers_stock_prediction

- TrendMaster

- hjAlgos_notebooks

- AutoCut

- My_Projects

- Cool Arduino and ESP8266 or NodeMCU Projects

- Telegram Trade Msg Backtest ML

Checkout Our Other Products

- WiFi IoT LED Matrix Display

- SWiBoard WiFi Switch Board IoT Device

- Electric Bicycle

- Product 3D Design Service with Solidworks

- AutoCut : Automatic Wire Cutter Machine

- Custom AlgoTrading Software Coding Services

- SWiBoard :Tasmota MQTT Control App

- Custom Token Classification or Named Entity Recognition (NER) model as in Natural Language Processing (NLP) Machine Learning

Some Cool Arduino and ESP8266 (or NodeMCU) IoT projects:

- IoT_LED_over_ESP8266_NodeMCU : Turn LED on and off using web server hosted on a nodemcu or esp8266

- ESP8266_NodeMCU_BasicOTA : Simple OTA (Over The Air) upload code from Arduino IDE using WiFi to NodeMCU or ESP8266

- IoT_CSV_SD : Read analog value of Voltage and Current and write it to SD Card in CSV format for Arduino, ESP8266, NodeMCU etc

- Honeywell_I2C_Datalogger : Log data in A SD Card from a Honeywell I2C HIH8000 or HIH6000 series sensor having external I2C RTC clock

- IoT_Load_Cell_using_ESP8266_NodeMC : Read ADC value from High Precision 12bit ADS1015 ADC Sensor and Display on SSD1306 SPI Display as progress bar for Arduino or ESP8266 or NodeMCU

- IoT_SSD1306_ESP8266_NodeMCU : Read from High Precision 12bit ADC seonsor ADS1015 and display to SSD1306 SPI as progress bar in ESP8266 or NodeMCU or Arduino

Checkout Our Awesome 3D GrabCAD Models:

- AutoCut : Automatic Wire Cutter Machine

- ESP Matrix Display 5mm Acrylic Box

- Arcylic Bending Machine w/ Hot Air Gun

- Automatic Wire Cutter/Stripper

Our HuggingFace Models :

Our HuggingFace Datasets :

We sell Gigs on Fiverr :

- Downloads last month

- 13