image

imagewidth (px) 271

900

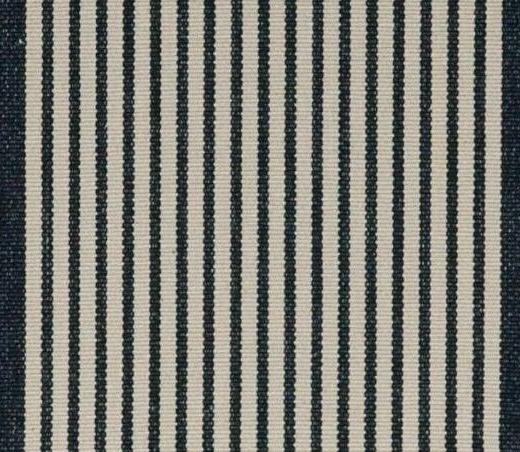

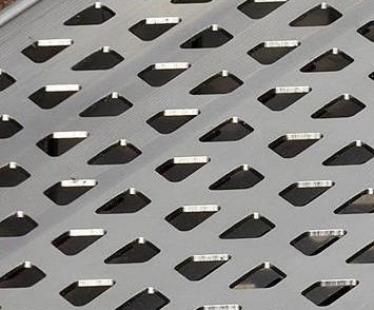

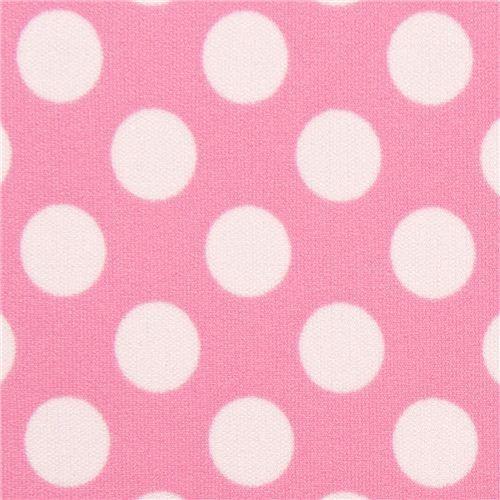

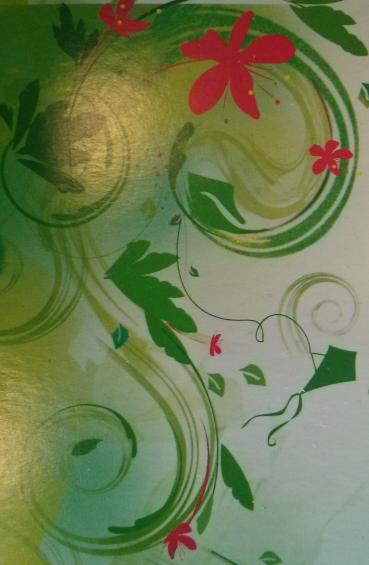

| label

class label 47

classes |

|---|---|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

|

0banded

|

Not sure about the license.

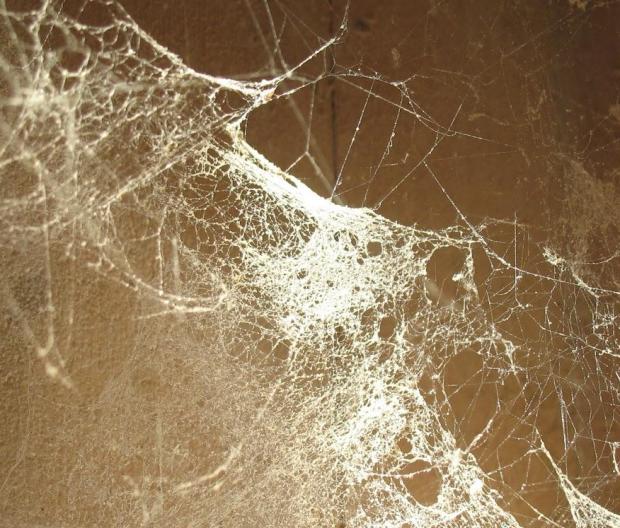

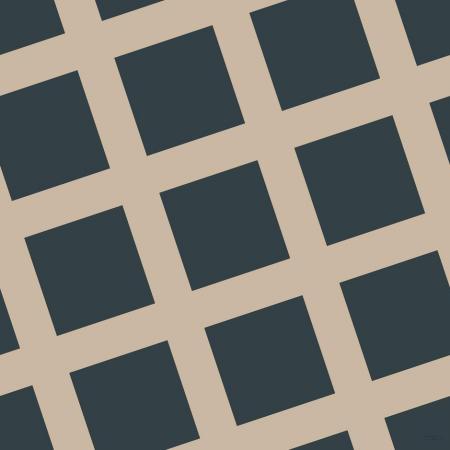

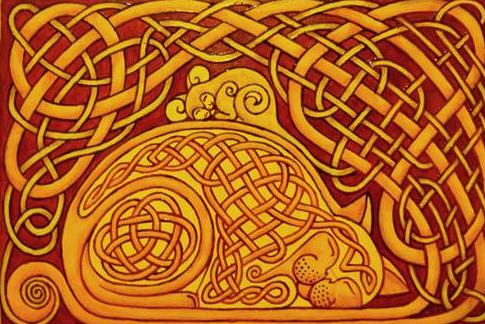

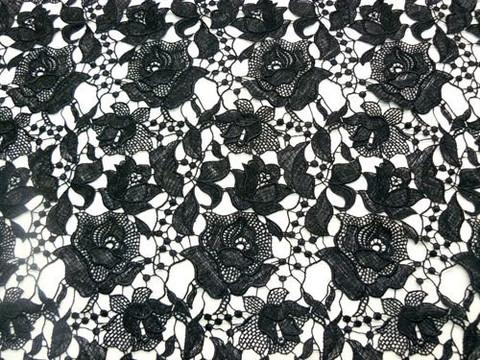

Describable Textures Dataset (DTD)

The Describable Textures Dataset (DTD) is an evolving collection of textural images in the wild, annotated with a series of human-centric attributes, inspired by the perceptual properties of textures. This data is made available to the computer vision community for research purposes.


Overview

Our ability to vividly describe the content of images is a clear demonstration of the power of the human visual system. Not only can we recognise objects in images (e.g. a cat, a person, or a car), but we can also describe them in minute detail, extracting an impressive amount of information at a glance. But visual perception is not limited to the recognition and description of objects. Even before any high-level semantic understanding, most textural patterns elicit a rich array of visual impressions. We could describe a texture as "polka dotted, regular, sparse, with blue dots on a white background", or as "noisy, line-like, and irregular".

Our aim is to reproduce this capability in machines. Scientifically, the goal is to gain further insight into how textural information may be processed, analysed, and represented by an intelligent system. Compared to classic textural analysis tasks such as material recognition, these perceptual properties are much richer in variety and structure, which poses new technical challenges.

DTD is a texture database consisting of 5640 images, organized according to a list of 47 terms (categories) inspired by human perception. There are 120 images for each category. Image sizes range between 300x300 and 640x640 pixels, and at least 90% of each image's surface represents the category attribute. The images were collected from Google and Flickr by entering our proposed attributes and related terms as search queries, and were annotated using Amazon Mechanical Turk in several iterations. For each image we provide a key attribute (main category) and a list of joint attributes.
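A minimal Python sketch of how the key and joint attributes might be read, assuming (not confirmed here) that the annotation file lists one image per line as a relative path `<category>/<file>.jpg` followed by whitespace-separated joint attributes, with the directory name serving as the key attribute. The helper names `parse_joint_annotations` and `images_per_category` are hypothetical and the sample lines are made up; on the full dataset the counts should come out to 120 per category, i.e. 47 × 120 = 5640 images.

```python
from collections import defaultdict

# Assumed line format (hypothetical): "<category>/<file>.jpg <attr1> <attr2> ..."


def parse_joint_annotations(lines):
    """Return {image_path: (key_attribute, [joint_attributes])}."""
    annotations = {}
    for line in lines:
        parts = line.split()
        if not parts:
            continue  # skip blank lines
        path, joint = parts[0], parts[1:]
        key = path.split("/", 1)[0]  # directory name taken as the main category
        annotations[path] = (key, joint)
    return annotations


def images_per_category(annotations):
    """Count images per key attribute (DTD states 120 per category)."""
    counts = defaultdict(int)
    for key, _ in annotations.values():
        counts[key] += 1
    return dict(counts)


# The stated totals are consistent: 47 categories x 120 images = 5640.
assert 47 * 120 == 5640

# Made-up sample lines, for illustration only.
sample = [
    "banded/banded_0002.jpg banded striped",
    "dotted/dotted_0001.jpg dotted polka-dotted",
]
ann = parse_joint_annotations(sample)
print(ann["banded/banded_0002.jpg"])  # ('banded', ['banded', 'striped'])
print(images_per_category(ann))       # {'banded': 1, 'dotted': 1}
```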

The data is split into three equal parts (train, validation and test), with 40 images per class in each split. We provide the ground truth annotations for both key and joint attributes, as well as the 10 splits of the data we used for evaluation.
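The split structure can be sanity-checked with a short Python sketch. It assumes (hypothetically) that each of the 10 folds is given as three text files with one relative image path `<category>/<file>.jpg` per line, and that the category is the path's directory name; `read_split` and `check_fold` are illustrative names, not part of the dataset release. With 47 categories and 40 images per class, each split of a fold should hold 47 × 40 = 1880 images.

```python
from collections import Counter


def read_split(lines):
    """Return the image paths listed in a split file, skipping blank lines."""
    return [line.strip() for line in lines if line.strip()]


def check_fold(train, val, test, per_class=40):
    """Check that three splits are disjoint, duplicate-free, cover the same
    categories, and hold exactly `per_class` images per category."""
    splits = (train, val, test)
    sets = [set(s) for s in splits]
    # A duplicated path inside one split makes its set smaller than its list.
    if any(len(s) != len(lst) for s, lst in zip(sets, splits)):
        return False
    # No image may appear in two splits of the same fold.
    if sets[0] & sets[1] or sets[0] & sets[2] or sets[1] & sets[2]:
        return False
    categories = None
    for split in splits:
        # Category = directory part of "<category>/<file>.jpg" (assumed layout).
        counts = Counter(path.split("/", 1)[0] for path in split)
        if categories is None:
            categories = set(counts)
        elif set(counts) != categories:
            return False
        if any(n != per_class for n in counts.values()):
            return False
    return True


# Toy fold with 2 categories and 1 image per class in each split.
train = read_split(["banded/banded_0001.jpg\n", "dotted/dotted_0001.jpg\n"])
val = read_split(["banded/banded_0002.jpg", "dotted/dotted_0002.jpg"])
test = read_split(["banded/banded_0003.jpg", "dotted/dotted_0003.jpg", ""])
print(check_fold(train, val, test, per_class=1))  # True
```

On the real files, calling `check_fold` with the default `per_class=40` would be the corresponding check for each of the 10 folds.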

Related paper

M. Cimpoi, S. Maji, I. Kokkinos, S. Mohamed, A. Vedaldi, "Describing Textures in the Wild", CVPR 2014.

@InProceedings{cimpoi14describing, Author = {M. Cimpoi and S. Maji and I. Kokkinos and S. Mohamed and A. Vedaldi}, Title = {Describing Textures in the Wild}, Booktitle = {Proceedings of the {IEEE} Conf. on Computer Vision and Pattern Recognition ({CVPR})}, Year = {2014}}

Downloads

| Filename | Description | Size |
|---|---|---|
| README.txt | README file describing the dataset structure and the ground truth annotations (key attributes and joint attributes). | 185K |
| dtd-r1.0.1.tar.gz | The package contains the dataset images (train, validation and test); the ground truth annotations and splits used for evaluation; and an imdb.mat file containing a struct that holds the file names and ground truth labels. | 625M |
| dtd-r1.0.1-labels.tar.gz | Annotations and splits: ground truth annotations (key attributes, joint attributes) and the splits of the data into train, val and test, as used in our experiments. | 1.4M |
| dtd-r1-decaf_feats.tar.gz | Compressed decaf_feats.mat, containing a 5640x4096 matrix of DeCAF features for the DTD images. Each row is the 4096-dimensional feature vector for one image, assuming the images are sorted by name. | 82M |
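Because the DeCAF matrix carries no file names, its rows have to be matched to images by sort order. The Python sketch below assumes the matrix has already been loaded into a NumPy array (for example with `scipy.io.loadmat`; the variable name inside decaf_feats.mat is not given here) and that "sorted by name" means plain lexicographic order of the relative image paths, which may differ from the ordering actually used. `index_features` is a hypothetical helper.

```python
import numpy as np


def index_features(features, image_names):
    """Map each image name to its DeCAF feature row.

    Assumes row i of `features` belongs to the i-th name in plain
    lexicographic order (Python's sorted), which is a guess at what
    "sorted by name" means.
    """
    features = np.asarray(features)
    if features.shape[0] != len(image_names):
        raise ValueError(
            f"{features.shape[0]} feature rows but {len(image_names)} image names"
        )
    return {name: features[i] for i, name in enumerate(sorted(image_names))}


# Toy 3x4 stand-in for the real 5640x4096 matrix.
feats = np.arange(12, dtype=np.float32).reshape(3, 4)
names = ["dotted/dotted_0001.jpg", "banded/banded_0002.jpg", "banded/banded_0001.jpg"]
by_name = index_features(feats, names)
print(by_name["banded/banded_0001.jpg"])  # [0. 1. 2. 3.]
```

The shape check catches the most likely mismatch (a name list that does not cover all 5640 images) before any rows are silently misaligned.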

Acknowledgements

This research is based on work done at the 2012 CLSP Summer Workshop, and was partially supported by NSF Grant #1005411, ODNI via the JHU-HLTCOE and Google Research. Mircea Cimpoi was supported by the ERC grant VisRec no. 228180 and Iasonas Kokkinos by ANR-10-JCJC-0205.

The development of the Describable Textures Dataset started in June and July 2012 at the Johns Hopkins Centre for Language and Speech Processing (CLSP) Summer Workshop. The authors are most grateful to Prof. Sanjeev Khudanpur and Prof. Greg Hager.
