license: apache-2.0

language: fr

library_name: nemo

datasets:

- mozilla-foundation/common_voice_13_0

- multilingual_librispeech

- facebook/voxpopuli

- google/fleurs

- gigant/african_accented_french

thumbnail: null

tags:

- automatic-speech-recognition

- speech

- audio

- Transducer

- FastConformer

- CTC

- Transformer

- pytorch

- NeMo

- hf-asr-leaderboard

model-index:

- name: stt_fr_fastconformer_hybrid_large

results:

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: Common Voice 13.0

type: mozilla-foundation/common_voice_13_0

config: fr

split: test

args:

language: fr

metrics:

- name: WER

type: wer

value: 9.16

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: Multilingual LibriSpeech (MLS)

type: facebook/multilingual_librispeech

config: french

split: test

args:

language: fr

metrics:

- name: WER

type: wer

value: 4.82

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: VoxPopuli

type: facebook/voxpopuli

config: french

split: test

args:

language: fr

metrics:

- name: WER

type: wer

value: 9.23

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: Fleurs

type: google/fleurs

config: fr_fr

split: test

args:

language: fr

metrics:

- name: WER

type: wer

value: 8.65

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: African Accented French

type: gigant/african_accented_french

config: fr

split: test

args:

language: fr

metrics:

- name: WER

type: wer

value: 6.55

FastConformer-Hybrid Large (fr)

This model aims to replicate nvidia/stt_fr_fastconformer_hybrid_large_pc, but predicts only the lowercase French alphabet, the hyphen, and the apostrophe. This choice gives up casing, digits, and punctuation in the output, but it can improve accuracy for use cases that do not need them.

Similar to its sibling, this is a "large" version of the FastConformer Transducer-CTC model (around 115M parameters). It's a hybrid model trained using two loss functions: Transducer (default) and CTC.
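As a rough illustration of what training with two loss functions can look like, hybrid Transducer-CTC models are commonly trained on a weighted sum of the two losses. The weight $\lambda$ below is an assumed symbol; its value for this model is not stated here.

$$
\mathcal{L} = (1 - \lambda)\,\mathcal{L}_{\text{Transducer}} + \lambda\,\mathcal{L}_{\text{CTC}}, \qquad 0 \le \lambda \le 1
$$

Under this formulation both decoders share the same encoder, which is why either one can be selected at inference time.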

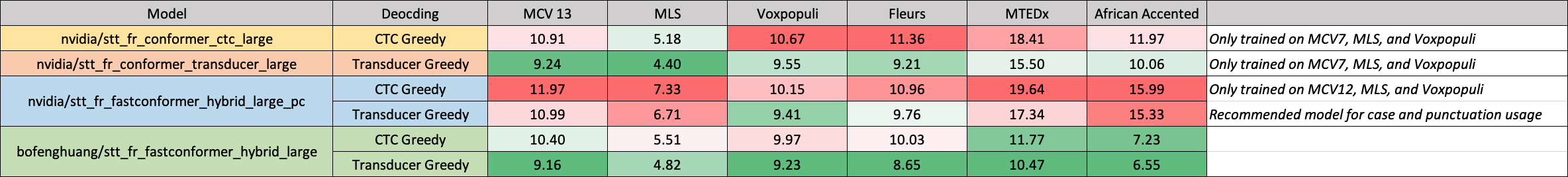

Performance

We evaluated our model on the following datasets and re-ran the evaluation on other models for comparison. The reported WER is computed after converting numbers to text, removing punctuation (except for apostrophes and hyphens), and converting all characters to lowercase.
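The normalization described above can be sketched in plain Python as follows. This is a minimal illustration, not the evaluation script used for the reported numbers: the function names `normalize` and `word_error_rate` are hypothetical, and the number-to-text step is left out because it needs a language-specific converter, so digits pass through unchanged.

```python
import re
import unicodedata

# Anything that is not a letter, digit, whitespace, apostrophe, or hyphen
# (plus the underscore, which \w would otherwise keep) is treated as punctuation.
_PUNCTUATION = re.compile(r"[^\w\s'-]|_")


def normalize(text: str) -> str:
    """Lowercase the text and strip punctuation except apostrophes and hyphens."""
    text = unicodedata.normalize("NFC", text).lower()
    text = text.replace("\u2019", "'")  # map the typographic apostrophe to ASCII
    text = _PUNCTUATION.sub(" ", text)
    return " ".join(text.split())  # collapse runs of whitespace


def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = normalize(reference).split()
    hyp = normalize(hypothesis).split()
    if not ref:
        raise ValueError("reference is empty after normalization")
    # Levenshtein distance over words, keeping only the previous row.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + cost))
        prev = curr
    return prev[-1] / len(ref)
```

For a whole test set, WER is usually computed by summing the edit distances over all utterances and dividing by the total number of reference words, rather than by averaging per-utterance scores.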

All the evaluation results can be found here.

Usage

The model is available for use in the NeMo toolkit, and can be used as a pre-trained checkpoint for inference or for fine-tuning on another dataset.

# Install nemo

# !pip install nemo_toolkit['all']

import nemo.collections.asr as nemo_asr

model_name = "bofenghuang/stt_fr_fastconformer_hybrid_large"

asr_model = nemo_asr.models.ASRModel.from_pretrained(model_name=model_name)

# Path to your 16kHz mono-channel audio file

audio_path = "/path/to/your/audio/file"

# Transcribe with default transducer decoder

asr_model.transcribe([audio_path])

# (Optional) Switch to CTC decoder

asr_model.change_decoding_strategy(decoder_type="ctc")

# (Optional) Transcribe with CTC decoder

asr_model.transcribe([audio_path])
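Since the snippet above expects a 16kHz mono-channel file, it can help to check the input before transcribing. The sketch below uses only the Python standard library; `is_16khz_mono` is a hypothetical helper name, and it assumes an uncompressed PCM WAV file (other formats make `wave.open` raise `wave.Error`).

```python
import wave


def is_16khz_mono(path: str) -> bool:
    """Return True if the WAV file at `path` is single-channel and sampled at 16 kHz."""
    with wave.open(path, "rb") as wav:
        return wav.getnchannels() == 1 and wav.getframerate() == 16000
```

A file that fails this check would need to be resampled or downmixed with an audio tool of your choice before being passed to `asr_model.transcribe`.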

Datasets

This model has been trained on a composite dataset comprising over 2,500 hours of French speech audio and transcriptions, including Common Voice 13.0, Multilingual LibriSpeech, VoxPopuli, Fleurs, African Accented French, and more.

Limitations

Since this model was trained on publicly available speech datasets, its performance might degrade for speech that includes technical terms or vernacular the model has not been trained on. The model might also perform worse for accented speech.

The model exclusively generates the lowercase French alphabet, hyphen, and apostrophe. Therefore, it may not perform well in situations where uppercase characters and additional punctuation are also required.


Acknowledgements

Thanks to NVIDIA for its research on the model architecture and to the NeMo team for the training framework.