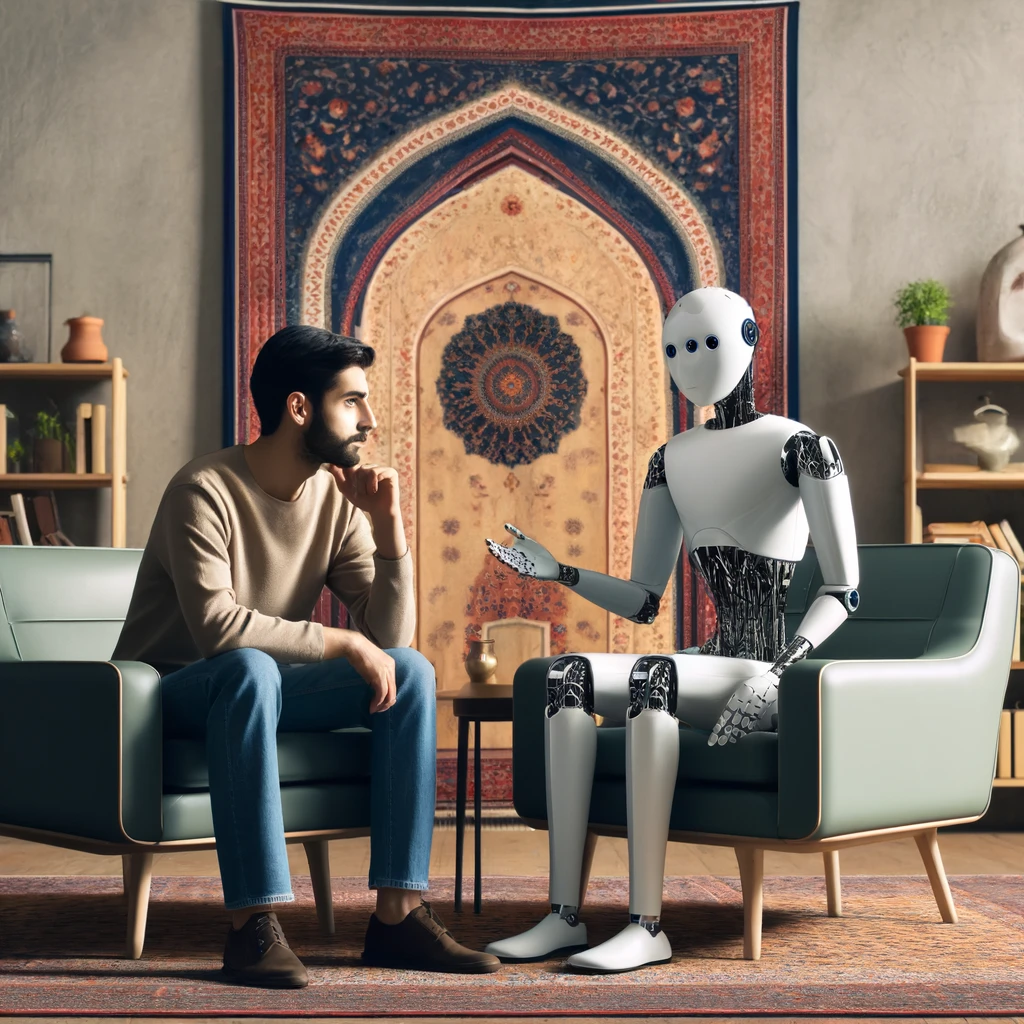

Persian Therapist Model: Dr. Aram and Mohammad

This model is a PEFT adapter fine-tuned on top of LLaMA-3-8B. It simulates therapeutic conversations in Persian between a therapist named Dr. Aram and a client named Mohammad. It is intended to help developers and researchers build applications that need empathetic dialogue in a therapeutic context. Building on LLaMA-3-8B, a strong general-purpose language model, helps the adapter produce nuanced, context-appropriate responses, which makes it a useful starting point for work on digital therapeutic tools.

Model Description

This conversational model is fine-tuned on a collection of high-quality, simulated Persian therapy session transcripts that mimic real-world therapeutic conversations. It is intended for scenarios that require natural, empathetic dialogue generation.
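The card does not document the exact training format. Assuming the transcripts use the same `### Instruction:` / `### Human:` / `### Therapist:` markers as the inference prompt in How to Use, a session might be serialized into one training string roughly as follows. `format_session` is a hypothetical helper, and the English strings are placeholders standing in for real Persian transcript text.

```python
# Hypothetical helper: serializes one simulated session into the
# "### Instruction / ### Human / ### Therapist" layout used at inference time.
# ASSUMPTION: the real training format is not documented on this card.


def format_session(instruction: str, turns: list[tuple[str, str]]) -> str:
    """Join an instruction and (client, therapist) turn pairs into one string."""
    parts = [f"### Instruction:\n{instruction}"]
    for client_text, therapist_text in turns:
        parts.append(f"### Human:\n{client_text}")
        parts.append(f"### Therapist:\n{therapist_text}")
    return "\n".join(parts)


# Placeholder content only; a real session would be in Persian.
example = format_session(
    "You are Dr. Aram, a supportive therapist.",
    [("Hello. I'm not feeling well.", "<therapist reply>")],
)
print(example)
```

Each client turn is always followed by a therapist turn, so a model trained on such strings learns to continue after `### Therapist:`.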

How to Use

To use this model, load the LLaMA-3-8B base model with the Hugging Face Transformers library and apply this adapter with PEFT, as follows:

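```python
import torch
from peft import PeftModel
from transformers import AutoModelForCausalLM, pipeline

# Load the LLaMA-3-8B base model in half precision, spread across available devices.
model = AutoModelForCausalLM.from_pretrained(
    "meta-llama/Meta-Llama-3-8B",
    device_map="auto",
    torch_dtype=torch.float16,
)
# Disables the key/value cache: generation still works but is slower.
# Set to True for faster inference.
model.config.use_cache = False

# Apply the Persian therapist adapter on top of the base model.
model = PeftModel.from_pretrained(
    model,
    "aminabbasi/Persian-Therapist-Llama-3-8B",
)

# Token id 14711 (presumably the "###" marker) is used as both EOS and padding.
# Note: max_length counts prompt + generated tokens, so a very long
# conversation eventually leaves no room for a reply.
pipe = pipeline(
    task="text-generation",
    model=model,
    tokenizer="meta-llama/Meta-Llama-3-8B",
    max_length=2048,
    do_sample=True,
    temperature=0.9,
    top_p=0.9,
    eos_token_id=14711,
    pad_token_id=14711,
)

# System instruction, kept in Persian because the model expects it. Translation:
# "You are an intelligent language model named "Dr. Aram". You act in the role
# of a psychologist. A person named "Mohammad" has come to you. Mohammad is
# seeking help so he can manage his emotions and find solutions to his problems.
# Your task is to provide supportive and empathetic responses. You must listen
# carefully to what is said and respond kindly."
chat_text = """
### Instruction:
شما یک مدل زبانی هوشمند هستید که نام آن "دکتر آرام" است. شما در نقش یک روانشناس عمل میکنید. شخصی به نام "محمد" به شما مراجعه کرده است. محمد به دنبال کمک است تا بتواند احساسات خود را مدیریت کند و راهحلهایی برای مشکلات خود پیدا کند. وظیفه شما ارائه پاسخهای حمایتکننده و همدردانه است. شما باید به صحبتها با دقت گوش دهید و با مهربانی پاسخ دهید."""

# Interactive loop: type Mohammad's messages, e.g. "سلام. حالم خوب نیست"
# ("Hello. I'm not feeling well."). Type "exit" to quit.
while True:
    user_input = input("Mohammad: ")
    if user_input == "exit":
        break
    # Append the user's turn and an open therapist turn for the model to complete.
    chat_text += f"""
### Human:
{user_input}
### Therapist:
"""
    # The pipeline returns prompt + continuation: keep only the newest
    # therapist reply and strip leftover "#" markers.
    answer = pipe(chat_text)[0]["generated_text"].split("### Therapist:")[-1].replace("#", "").strip()
    print("Dr. Aram:", answer)
    # Keep the reply in the transcript so later turns have context.
    chat_text += answer
```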
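For scripted or batch use, one exchange of the interactive loop above can be factored into a function that takes the generator as a parameter, so it runs without `input()`. This is a sketch: `chat_turn`, `extract_reply`, `TURN_TEMPLATE`, and `fake_generate` are hypothetical names, and the single-newline turn layout is an assumption meant to mirror the loop's prompt format and reply post-processing.

```python
from typing import Callable

# Assumed turn layout, mirroring the "### Human:" / "### Therapist:" markers.
TURN_TEMPLATE = "\n### Human:\n{user}\n### Therapist:\n"


def extract_reply(generated_text: str) -> str:
    """Keep only the newest therapist reply and drop leftover '#' markers."""
    return generated_text.split("### Therapist:")[-1].replace("#", "").strip()


def chat_turn(
    generate: Callable[[str], str], chat_text: str, user_input: str
) -> tuple[str, str]:
    """Run one exchange and return (reply, updated transcript)."""
    prompt = chat_text + TURN_TEMPLATE.format(user=user_input)
    reply = extract_reply(generate(prompt))
    return reply, prompt + reply


# With the real pipeline you would pass something like:
#     generate = lambda prompt: pipe(prompt)[0]["generated_text"]
# Offline stand-in (hypothetical): echoes the prompt plus a fixed reply,
# the way a text-generation pipeline returns prompt + continuation.
def fake_generate(prompt: str) -> str:
    return prompt + "Placeholder reply.\n###"


reply, transcript = chat_turn(fake_generate, "### Instruction:\n...", "Hello.")
print(reply)
```

Passing the generator in keeps the prompt handling testable offline and lets the same function drive the real pipeline.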

Citation

```bibtex
@misc{Persian-Therapist-Llama-3-8B,
  title={Persian Therapist Model: Dr. Aram and Mohammad},
  author={Mohammad Amin Abbasi},
  year={2024},
  publisher={Hugging Face},
}
```
