license: other

license_name: bespoke-lora-trained-license

license_link: >-

https://multimodal.art/civitai-licenses?allowNoCredit=True&allowCommercialUse=Rent&allowDerivatives=True&allowDifferentLicense=False

tags:

- text-to-image

- stable-diffusion

- lora

- diffusers

- template:sd-lora

- disney

- pixar

- dreamworks

- style

- cartoon

- sdxl style lora

base_model: stabilityai/stable-diffusion-xl-base-1.0

instance_prompt: DreamDisPix style

widget:

- text: 'Shrek playing with olaf in a frozen field DreamDisPix style '

output:

url: 4857923.jpeg

- text: 'The girl from the incredibles shooting a laser gun DreamDisPix style '

output:

url: 4857954.jpeg

- text: 'The girl with a pearl earring DreamDisPix style '

output:

url: 4857994.jpeg

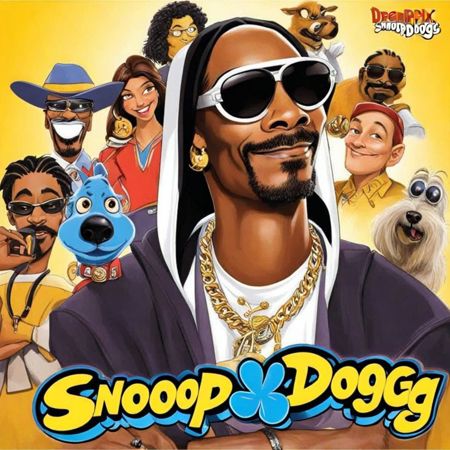

- text: 'Snoop Dogg DreamDisPix style '

output:

url: 4857995.jpeg

- text: 'A mighty dragon at the dentist DreamDisPix style '

output:

url: 4858005.jpeg

- text: 'A cute dragon playing with Eevee DreamDisPix style '

output:

url: 4857996.jpeg

- text: 'Zombie Cinderella has rissen from the dead DreamDisPix style '

output:

url: 4858004.jpeg

- text: 'Santa riding a might dragon DreamDisPix style '

output:

url: 4858026.jpeg

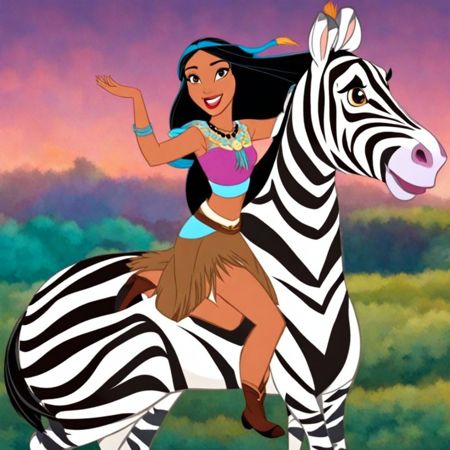

- text: 'Pocahontas riding a Zebra DreamDisPix style '

output:

url: 4858031.jpeg

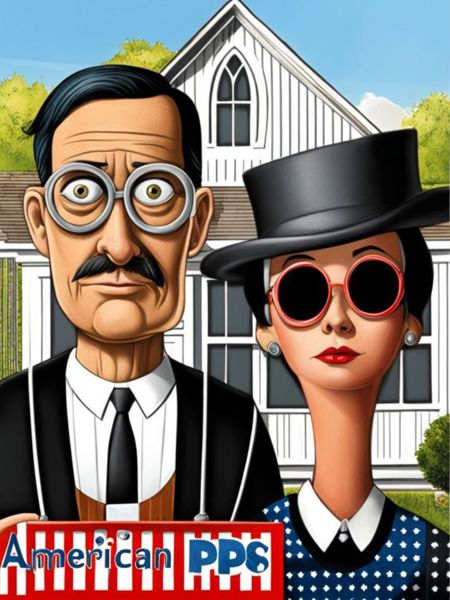

- text: 'American gothic DreamDisPix style '

output:

url: 4858028.jpeg

datasets:

- Norod78/DreamDisPix-blip2-captions

Dream Dis Pix XL

- Prompt

- Shrek playing with olaf in a frozen field DreamDisPix style

- Prompt

- The girl from the incredibles shooting a laser gun DreamDisPix style

- Prompt

- The girl with a pearl earring DreamDisPix style

- Prompt

- Snoop Dogg DreamDisPix style

- Prompt

- A mighty dragon at the dentist DreamDisPix style

- Prompt

- A cute dragon playing with Eevee DreamDisPix style

- Prompt

- Zombie Cinderella has rissen from the dead DreamDisPix style

- Prompt

- Santa riding a might dragon DreamDisPix style

- Prompt

- Pocahontas riding a Zebra DreamDisPix style

- Prompt

- American gothic DreamDisPix style

(CivitAI)

Model description

A (kinda failed) attempt to train an SDXL LoRA model upon on a mixed style dataset of images from Dreamworks, Disney and Pixar. I have uploaded this Blip2-Captioned Dataset to Huggingface.

I usually prefer to train in LoRA ranks 4-16 because so far it always seemed to be enough and provided a reasonably small checkpoint but in this case, I only started getting results when I went for Rank-32, even tried 24 and it was not enough.

My conclusion is that it should have probably been trained as several different lower ranked LoRAs each for the specific visual sub-style and it would have probably looked way better for each.

Trigger words

You should use DreamDisPix style to trigger the image generation.

Download model

Weights for this model are available in Safetensors format.

Download them in the Files & versions tab.

Use it with the 🧨 diffusers library

from diffusers import AutoPipelineForText2Image

import torch

pipeline = AutoPipelineForText2Image.from_pretrained('stabilityai/stable-diffusion-xl-base-1.0', torch_dtype=torch.float16).to('cuda')

pipeline.load_lora_weights('Norod78/dream-dis-pix-xl', weight_name='SDXL-DreamDisPix-Lora-r32.safetensors')

image = pipeline('American gothic DreamDisPix style ').images[0]

For more details, including weighting, merging and fusing LoRAs, check the documentation on loading LoRAs in diffusers