NanoLM-365M-base


Introduction

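Starting from Qwen2-0.5B, I replaced its tokenizer with BilingualTokenizer-8K to reduce the parameter count. Because the much smaller vocabulary shrinks the embedding matrix, the total parameter count drops from 0.5B to 365M.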
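
The 365M figure can be checked with a little arithmetic. The sketch below counts parameters for a Qwen2-style decoder. The architecture values (hidden size 896, 24 layers, MLP width 4864, 14 query heads, 2 key/value heads, biases on the q/k/v projections only, tied input and output embeddings) and the original vocabulary size of 151,936 are assumptions taken from the public Qwen2-0.5B configuration, not from this card; reading "8K" as exactly 8,000 tokens is also an assumption.

```python
def qwen2_param_count(vocab_size: int, hidden: int = 896, layers: int = 24,
                      intermediate: int = 4864, num_heads: int = 14,
                      num_kv_heads: int = 2, tied: bool = True) -> int:
    """Parameter count of a Qwen2-style decoder (config values are assumed)."""
    head_dim = hidden // num_heads              # 64
    kv_dim = num_kv_heads * head_dim            # 128 (grouped-query attention)
    attn = hidden * hidden + hidden             # q_proj with bias
    attn += 2 * (hidden * kv_dim + kv_dim)      # k_proj and v_proj with bias
    attn += hidden * hidden                     # o_proj, no bias
    mlp = 3 * hidden * intermediate             # gate_proj, up_proj, down_proj
    norms = 2 * hidden                          # two RMSNorm weights per layer
    body = layers * (attn + mlp + norms) + hidden  # plus the final RMSNorm
    embed = vocab_size * hidden
    lm_head = 0 if tied else vocab_size * hidden   # tied head adds no parameters
    return body + embed + lm_head


print(f"{qwen2_param_count(151_936):,}")  # 494,032,768 (original vocabulary)
print(f"{qwen2_param_count(8_000):,}")    # 365,066,112 (assumed 8,000-token vocabulary)
```

Only the embedding term depends on the vocabulary, which is also why the trainable embedding matrix (8,000 x 896 = 7,168,000 under these assumptions) stays below 10M parameters.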

Details

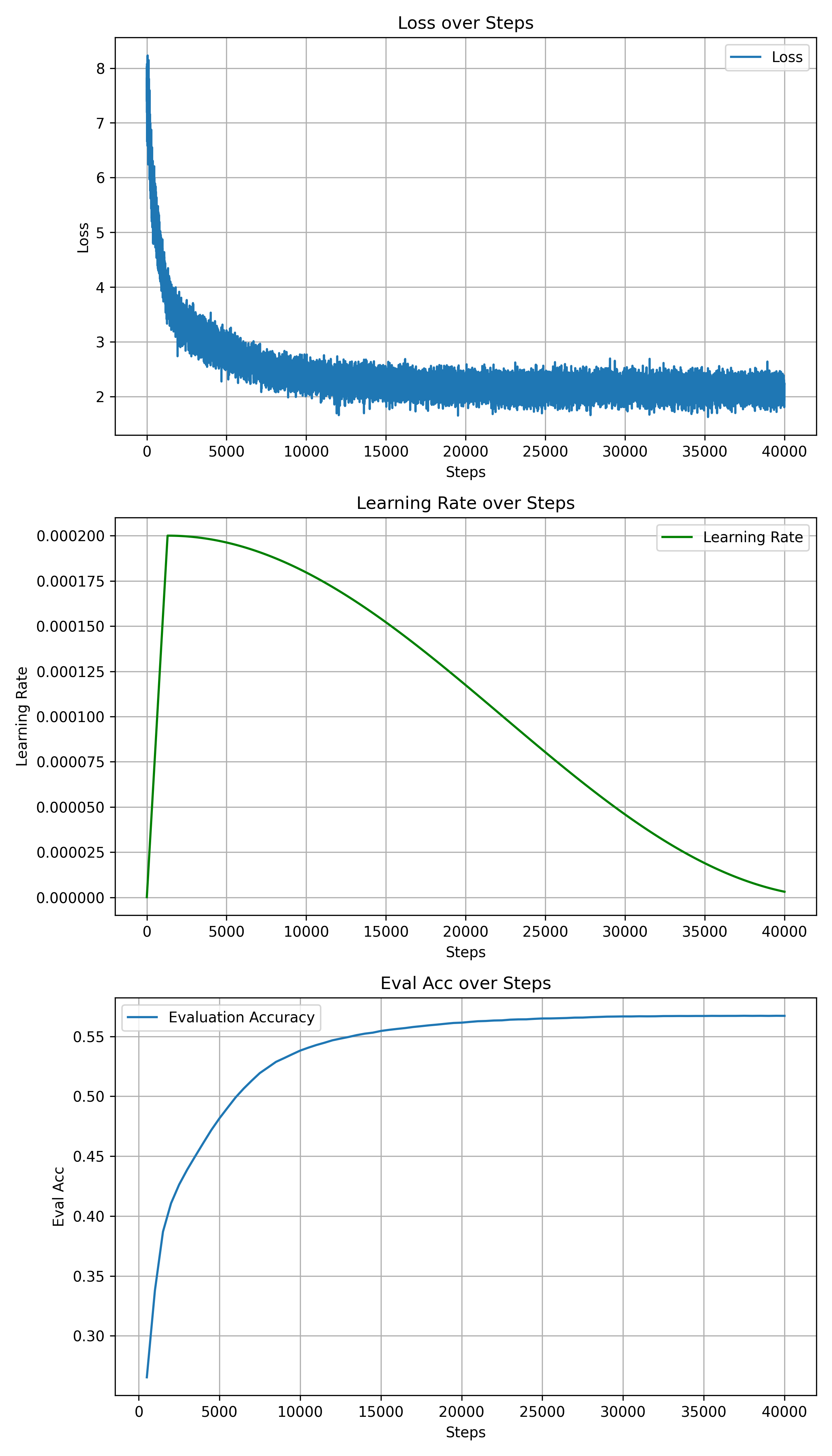

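To recover some of the lost performance and make the model easier to fine-tune for downstream tasks, I froze the backbone after swapping the tokenizer and trained only the embedding layer, for 40,000 steps on wikipedia-zh and cosmopedia-100k.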
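
A minimal PyTorch sketch of the freezing step, assuming a hypothetical toy model (`ToyCausalLM`, `ToyBackbone`, and `freeze_all_but` are illustrative names, not from this card) whose parameter names mimic the Hugging Face Qwen2 layout, so the trainable part can be selected by its `model.embed_tokens` prefix. The tied `lm_head` is an assumption carried over from Qwen2-0.5B; with tying, training the input embedding also updates the output projection, because both share a single tensor.

```python
import torch
from torch import nn


class ToyBackbone(nn.Module):
    """Hypothetical stand-in for the transformer body; names mimic HF Qwen2."""

    def __init__(self, vocab_size: int, hidden_size: int, num_layers: int):
        super().__init__()
        self.embed_tokens = nn.Embedding(vocab_size, hidden_size)
        # Placeholder for the decoder layers that stay frozen.
        self.layers = nn.ModuleList(
            [nn.Linear(hidden_size, hidden_size) for _ in range(num_layers)]
        )


class ToyCausalLM(nn.Module):
    """Hypothetical causal LM with an output head tied to the input embedding."""

    def __init__(self, vocab_size: int = 8000, hidden_size: int = 64, num_layers: int = 2):
        super().__init__()
        self.model = ToyBackbone(vocab_size, hidden_size, num_layers)
        self.lm_head = nn.Linear(hidden_size, vocab_size, bias=False)
        # Assumed weight tying: lm_head and embed_tokens share one Parameter.
        self.lm_head.weight = self.model.embed_tokens.weight


def freeze_all_but(model: nn.Module, prefix: str = "model.embed_tokens") -> int:
    """Freeze every parameter whose name does not start with `prefix`.

    Returns the number of trainable parameters that remain.
    """
    for name, param in model.named_parameters():
        param.requires_grad = name.startswith(prefix)
    return sum(p.numel() for p in model.parameters() if p.requires_grad)


model = ToyCausalLM()
trainable = freeze_all_but(model)
print(trainable)  # 512000 (8000 x 64, the embedding matrix only)

# Pass only trainable parameters to the optimizer (lr and weight decay from the hyperparameter table).
optimizer = torch.optim.AdamW(
    [p for p in model.parameters() if p.requires_grad], lr=2e-4, weight_decay=0.1
)
```

Because `named_parameters()` skips duplicates by default, the shared tensor appears once under `model.embed_tokens.weight`, so the prefix match covers the tied head as well.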

| Setting | Value |
|---|---|
| Total Params | 365M |
| Trainable Params | < 10M |
| Trainable Parts | model.embed_tokens |
| Training Steps | 40,000 |
| Training Datasets | wikipedia-zh, cosmopedia-100k |
| Optimizer | adamw_torch |
| Learning Rate | 2e-4 |
| LR Scheduler | cosine |
| Weight Decay | 0.1 |
| Warm-up Ratio | 0.03 |
| Batch Size | 16 |
| Gradient Accumulation Steps | 1 |
| Sequence Length | 4096 |
| Dtype | bf16 |
| Peak GPU Memory | < 48 GB |
| Device | NVIDIA A100-SXM4-80GB |
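
The table's values are enough to work out the learning-rate schedule and the token budget. The sketch below assumes Hugging Face Trainer semantics for the `cosine` scheduler (warm-up steps = ceil(warm-up ratio x total steps), linear warm-up from 0, then cosine decay to 0) and assumes every sequence is packed to the full 4096 tokens with no padding. Neither assumption is stated in this card, so the token count is an upper bound.

```python
import math

# Values taken from the table above.
TOTAL_STEPS = 40_000
PEAK_LR = 2e-4
WARMUP_RATIO = 0.03
BATCH_SIZE = 16
GRAD_ACCUM = 1
SEQ_LEN = 4096

# Assumption: the warm-up length is rounded up, as HF TrainingArguments does.
WARMUP_STEPS = math.ceil(TOTAL_STEPS * WARMUP_RATIO)


def lr_at(step: int) -> float:
    """Learning rate at `step`: linear warm-up, then cosine decay to 0."""
    if step < WARMUP_STEPS:
        return PEAK_LR * step / max(1, WARMUP_STEPS)
    progress = (step - WARMUP_STEPS) / max(1, TOTAL_STEPS - WARMUP_STEPS)
    return PEAK_LR * max(0.0, 0.5 * (1.0 + math.cos(math.pi * progress)))


# Assumption: every sequence is packed to the full SEQ_LEN (no padding).
tokens_per_step = BATCH_SIZE * GRAD_ACCUM * SEQ_LEN
total_tokens = tokens_per_step * TOTAL_STEPS

print(WARMUP_STEPS)         # 1200
print(tokens_per_step)      # 65536
print(f"{total_tokens:,}")  # 2,621,440,000
print(lr_at(0), lr_at(WARMUP_STEPS), lr_at(TOTAL_STEPS))  # 0.0 0.0002 0.0
```

Under these assumptions the embedding layer sees at most about 2.6B tokens, and the learning rate passes through half its peak (1e-4) around step 20,600.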