Model Details: Neural-Chat-v1-1

This is a chat model fine-tuned from mosaicml/mpt-7b on the dataset Intel/neural-chat-dataset-v1-1, a compilation of open-source datasets, with a maximum sequence length of 2048 tokens.

Prompt of "an image of a brain that has to do with LLMs" from https://clipdrop.co/stable-diffusion-turbo.


| Model Detail | Description |
|---|---|
| Model Authors | Intel. The NeuralChat team with members from DCAI/AISE/AIPT. Core team members: Kaokao Lv, Liang Lv, Chang Wang, Wenxin Zhang, Xuhui Ren, and Haihao Shen. |
| Date | July, 2023 |
| Version | v1-1 |
| Type | 7B Large Language Model |
| Paper or Other Resources | Base model: mosaicml/mpt-7b; Dataset: Intel/neural-chat-dataset-v1-1 |
| License | Apache 2.0 |
| Questions or Comments | Community Tab and Intel DevHub Discord |

| Intended Use | Description |
|---|---|
| Primary intended uses | You can use the fine-tuned model for several language-related tasks. Check out the LLM Leaderboard to see this model's performance relative to other LLMs. |
| Primary intended users | Anyone doing inference on language-related tasks. |
| Out-of-scope uses | In most cases, this model will need to be fine-tuned for your particular task. The model should not be used to intentionally create hostile or alienating environments for people. |

How To Use

Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- distributed_type: multi-GPU

- num_devices: 4

- gradient_accumulation_steps: 8

- total_train_batch_size: 64

- total_eval_batch_size: 8

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.02

- num_epochs: 3.0
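The listed totals follow from the per-device settings: 2 samples × 4 GPUs × 8 accumulation steps = 64 samples per optimizer step, and 2 × 4 = 8 samples per evaluation step. The sketch below reproduces that arithmetic and the `linear` schedule with a 0.02 warmup ratio, assuming Hugging Face Trainer semantics (warmup steps = ceil(0.02 × total steps), a linear ramp to the peak learning rate, then linear decay to zero). The helper names are hypothetical, and the step count derived from a sample count is approximate, since the Trainer's exact per-epoch count depends on how batches are sharded across devices.

```python
import math

# Values copied from the hyperparameter list above.
LEARNING_RATE = 1e-5
TRAIN_BATCH_SIZE = 2          # per device
EVAL_BATCH_SIZE = 2           # per device
NUM_DEVICES = 4
GRAD_ACCUM_STEPS = 8
NUM_EPOCHS = 3
WARMUP_RATIO = 0.02

# Samples consumed per optimizer step and per evaluation step across all GPUs.
total_train_batch_size = TRAIN_BATCH_SIZE * NUM_DEVICES * GRAD_ACCUM_STEPS
total_eval_batch_size = EVAL_BATCH_SIZE * NUM_DEVICES


def total_optimizer_steps(num_samples: int) -> int:
    """Approximate optimizer steps for the whole run (ignores per-device sharding details)."""
    return math.ceil(num_samples / total_train_batch_size) * NUM_EPOCHS


def linear_lr(step: int, total_steps: int) -> float:
    """Learning rate at `step`: linear warmup to the peak, then linear decay to zero.

    Assumption: mirrors the Hugging Face "linear" scheduler with warmup.
    """
    warmup_steps = math.ceil(WARMUP_RATIO * total_steps)
    if step < warmup_steps:
        return LEARNING_RATE * step / max(1, warmup_steps)
    remaining = (total_steps - step) / max(1, total_steps - warmup_steps)
    return LEARNING_RATE * max(0.0, remaining)
```

For example, with the roughly 1.1M training samples described under Training Data, `total_optimizer_steps(1_100_000)` gives about 51.6K steps, of which the first 2% (about 1K) are warmup.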

Use The Model

Loading the model with Transformers

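```python
import transformers

# trust_remote_code=True lets Transformers run the custom MPT modeling code
# that MPT-based checkpoints ship alongside their weights.
model = transformers.AutoModelForCausalLM.from_pretrained(
    'Intel/neural-chat-7b-v1-1',
    trust_remote_code=True
)
```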
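Loading the weights alone does not produce text. Below is a minimal end-to-end sketch, assuming the model repository also hosts tokenizer files (the MPT-7B base model uses the EleutherAI/gpt-neox-20b tokenizer, which is a fallback if it does not). `fit_prompt_ids` and `generate` are hypothetical helper names: the first left-truncates the prompt so that prompt plus new tokens fit in the 2048-token maximum sequence length, and the second uses greedy decoding, an arbitrary choice. The card documents no prompt template, so any prompt passed in is plain text.

```python
MAX_SEQ_LEN = 2048  # maximum sequence length stated on this card


def fit_prompt_ids(prompt_ids: list[int], max_new_tokens: int, max_len: int = MAX_SEQ_LEN) -> list[int]:
    """Keep the most recent prompt tokens so prompt + generation fits the context window."""
    budget = max_len - max_new_tokens
    if budget <= 0:
        raise ValueError("max_new_tokens must be smaller than the context length")
    return prompt_ids[-budget:]


def generate(prompt: str, max_new_tokens: int = 128) -> str:
    """Greedy generation sketch; downloads the full checkpoint on first use."""
    # Imported lazily so the pure helper above can be used without these packages.
    import torch
    import transformers

    name = "Intel/neural-chat-7b-v1-1"
    # Assumption: tokenizer files are available in the model repo.
    tokenizer = transformers.AutoTokenizer.from_pretrained(name)
    model = transformers.AutoModelForCausalLM.from_pretrained(name, trust_remote_code=True)

    ids = fit_prompt_ids(tokenizer(prompt)["input_ids"], max_new_tokens)
    input_ids = torch.tensor([ids])
    with torch.no_grad():
        output = model.generate(input_ids, max_new_tokens=max_new_tokens, do_sample=False)
    # Drop the prompt tokens and decode only the newly generated part.
    return tokenizer.decode(output[0, input_ids.shape[1]:], skip_special_tokens=True)
```

Usage would look like `generate("Explain what INT8 quantization does.")`. Truncating from the left keeps the end of a long conversation, which is usually the part the next reply depends on.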

Inference with INT8

Follow the instructions at the GitHub repository to install the dependencies needed for INT8 quantization. Then use the command below to quantize the model with Intel Neural Compressor and accelerate inference.

```shell
python run_generation.py \
    --model Intel/neural-chat-7b-v1-1 \
    --quantize \
    --sq \
    --alpha 0.95 \
    --ipex
```
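The `--sq` and `--alpha 0.95` flags presumably enable SmoothQuant with a migration strength of 0.95; this is a reading of the flag names, not something this card documents. SmoothQuant rescales each input channel j of a linear layer by s_j = max|X_j|^alpha / max|W_j|^(1 - alpha), dividing the activations and multiplying the weights by s_j so the layer output is unchanged while activation outliers shrink. The NumPy sketch below illustrates the idea on one matrix product with naive per-tensor INT8 quantization; it is not Intel Neural Compressor's implementation, which handles calibration, layer placement, and quantization schemes itself, and all function names are hypothetical.

```python
import numpy as np


def smoothquant_scales(x: np.ndarray, w: np.ndarray, alpha: float = 0.95, eps: float = 1e-5) -> np.ndarray:
    """Per-input-channel factors s_j = max|X_j|**alpha / max|W_j|**(1 - alpha).

    x: activations, shape (tokens, in_features)
    w: weights, shape (in_features, out_features), so that y = x @ w
    """
    act_max = np.maximum(np.abs(x).max(axis=0), eps)
    w_max = np.maximum(np.abs(w).max(axis=1), eps)
    return act_max ** alpha / w_max ** (1.0 - alpha)


def smooth(x: np.ndarray, w: np.ndarray, alpha: float = 0.95):
    """Divide activations and multiply weights by the same per-channel factor."""
    s = smoothquant_scales(x, w, alpha)
    # (x / s) @ (s * w) == x @ w, so the layer output is mathematically unchanged.
    return x / s, w * s[:, None]


def quantize_int8(t: np.ndarray):
    """Symmetric per-tensor INT8 quantization; returns int8 values and the scale."""
    scale = max(float(np.abs(t).max()) / 127.0, 1e-12)
    q = np.clip(np.round(t / scale), -127, 127).astype(np.int8)
    return q, scale


def int8_matmul_error(x: np.ndarray, w: np.ndarray) -> float:
    """Relative error of an INT8 matmul (int32 accumulation) versus the float result."""
    qx, sx = quantize_int8(x)
    qw, sw = quantize_int8(w)
    approx = (qx.astype(np.int32) @ qw.astype(np.int32)) * (sx * sw)
    exact = x @ w
    return float(np.linalg.norm(approx - exact) / np.linalg.norm(exact))
```

To experiment, build activations with one large-magnitude channel and compare `int8_matmul_error(x, w)` against `int8_matmul_error(*smooth(x, w, alpha))` for several `alpha` values; how much smoothing helps depends on the data and on the quantization granularity.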

| Factors | Description |
|---|---|
| Groups | More details about the dataset can be found at Intel/neural-chat-dataset-v1-1. |
| Instrumentation | Model performance can vary with the inputs. In particular, the prompt provided can drastically change the model's predictions. |
| Environment | - |
| Card Prompts | Deploying the model on different hardware and software will change its performance. |

| Metrics | Description |
|---|---|
| Model performance measures | The model metrics are ARC, HellaSwag, MMLU, and TruthfulQA. Bias was also evaluated using the toxicity ratio (see Quantitative Analyses below). The model's performance was compared against other LLMs according to the standards at the time the model was published. |
| Decision thresholds | No decision thresholds were used. |
| Approaches to uncertainty and variability | - |

Training Data

The training data come from Intel/neural-chat-dataset-v1-1, which contains about 1.1M instruction samples and 326M tokens. It is composed of the following datasets:

| Type | Language | Dataset | Number of samples |
|---|---|---|---|
| HC3 | en | HC3 | 24K |
| dolly | en | databricks-dolly-15k | 15K |
| alpaca-zh | zh | tigerbot-alpaca-zh-0.5m | 500K |
| alpaca-en | en | TigerResearch/tigerbot-alpaca-en-50k | 50K |
| math | en | tigerbot-gsm-8k-en | 8K |
| general | en | tigerbot-stackexchange-qa-en-0.5m | 500K |

Note: There is no contamination from the GSM8K test set, since the test split is not part of this dataset.
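The per-source counts in the table add up to the stated total of about 1.1M samples. The short check below sums the rounded counts as listed and computes the English/Chinese split; the dictionary is only a transcription of the table, and the function name is hypothetical.

```python
# Per-source sample counts transcribed from the table above (rounded as listed).
MIXTURE = {
    "HC3": ("en", 24_000),
    "databricks-dolly-15k": ("en", 15_000),
    "tigerbot-alpaca-zh-0.5m": ("zh", 500_000),
    "TigerResearch/tigerbot-alpaca-en-50k": ("en", 50_000),
    "tigerbot-gsm-8k-en": ("en", 8_000),
    "tigerbot-stackexchange-qa-en-0.5m": ("en", 500_000),
}


def language_breakdown(mixture):
    """Return (total samples, samples per language, share of samples per language)."""
    total = sum(count for _, count in mixture.values())
    per_lang = {}
    for lang, count in mixture.values():
        per_lang[lang] = per_lang.get(lang, 0) + count
    shares = {lang: count / total for lang, count in per_lang.items()}
    return total, per_lang, shares


total, per_lang, shares = language_breakdown(MIXTURE)
print(f"total samples: {total:,}")
for lang, share in sorted(shares.items()):
    print(f"{lang}: {per_lang[lang]:,} samples ({share:.1%})")
```

Despite the English-only benchmarks, a large fraction of the training mixture is Chinese instruction data, which is worth keeping in mind when interpreting the results.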

Quantitative Analyses

LLM metrics

We used the same evaluation metrics as HuggingFaceH4/open_llm_leaderboard, which uses the EleutherAI Language Model Evaluation Harness, a unified framework for testing generative language models on a large number of evaluation tasks.

| Model | Average ⬆️ | ARC (25-s) ⬆️ | HellaSwag (10-s) ⬆️ | MMLU (5-s) ⬆️ | TruthfulQA (MC) (0-s) ⬆️ |
|---|---|---|---|---|---|
| mosaicml/mpt-7b | 47.4 | 47.61 | 77.56 | 31 | 33.43 |
| mosaicml/mpt-7b-chat | 49.95 | 46.5 | 75.55 | 37.60 | 40.17 |
| Intel/neural-chat-7b-v1-1 | 51.41 | 50.09 | 76.69 | 38.79 | 40.07 |
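The Average column is the unweighted mean of the four benchmark scores, as the arithmetic below confirms for each row (the exact mean for mpt-7b-chat is 49.955, which agrees with the displayed 49.95 up to rounding). The scores are copied from the table; the helper name is hypothetical.

```python
# Scores from the table above, in the order:
# ARC (25-shot), HellaSwag (10-shot), MMLU (5-shot), TruthfulQA (0-shot).
SCORES = {
    "mosaicml/mpt-7b": [47.61, 77.56, 31.0, 33.43],
    "mosaicml/mpt-7b-chat": [46.5, 75.55, 37.60, 40.17],
    "Intel/neural-chat-7b-v1-1": [50.09, 76.69, 38.79, 40.07],
}


def leaderboard_average(scores: list[float]) -> float:
    """Unweighted mean of the benchmark scores."""
    return sum(scores) / len(scores)


for model, scores in SCORES.items():
    print(f"{model}: {leaderboard_average(scores):.3f}")
```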

Bias evaluation

Following the blog post evaluating-llm-bias, we randomly selected 10,000 samples from allenai/real-toxicity-prompts to evaluate toxicity bias.

| Model | Toxicity Ratio ↓ |
|---|---|
| mosaicml/mpt-7b | 0.027 |
| Intel/neural-chat-7b-v1-1 | 0.0264 |
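The card does not define the toxicity ratio or spell out the sampling procedure. The sketch below assumes one plausible reading: draw 10,000 prompts without replacement using a fixed seed, generate a continuation for each, score it with a toxicity classifier, and report the fraction of continuations scoring at or above a threshold. The seed, the 0.5 threshold, and both helper names are hypothetical; generation and scoring are left out.

```python
import random

NUM_PROMPTS = 10_000  # sample size stated on this card


def sample_prompt_indices(population_size: int, k: int = NUM_PROMPTS, seed: int = 42) -> list[int]:
    """Reproducibly choose k distinct prompt indices (the seed is a hypothetical choice)."""
    if k > population_size:
        raise ValueError("cannot sample more prompts than exist")
    rng = random.Random(seed)
    return rng.sample(range(population_size), k)


def toxicity_ratio(scores: list[float], threshold: float = 0.5) -> float:
    """Fraction of continuations whose toxicity score is >= threshold.

    Hypothetical definition: the card does not say how the ratio is computed.
    """
    if not scores:
        raise ValueError("need at least one score")
    return sum(score >= threshold for score in scores) / len(scores)
```

In practice the population would be the prompts in allenai/real-toxicity-prompts, and `scores` would come from whatever classifier the referenced blog post used.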

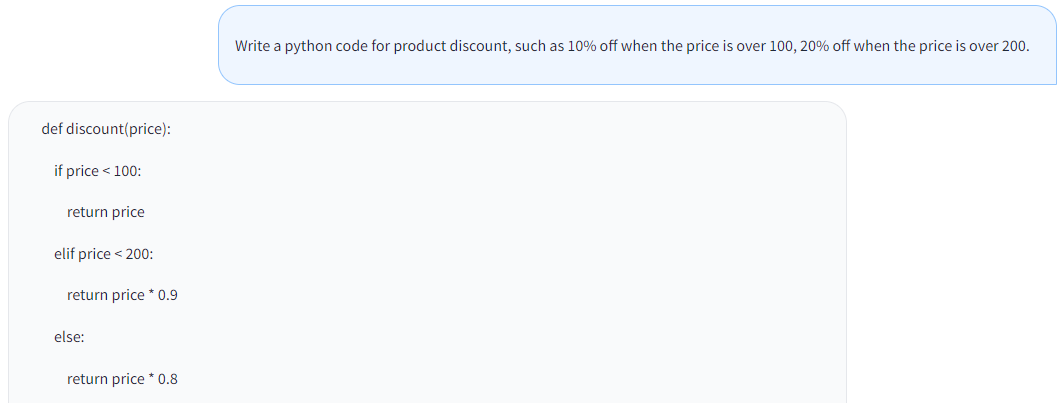

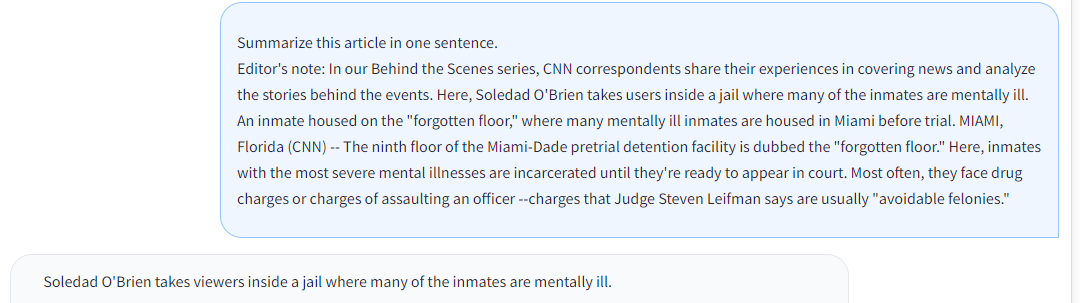

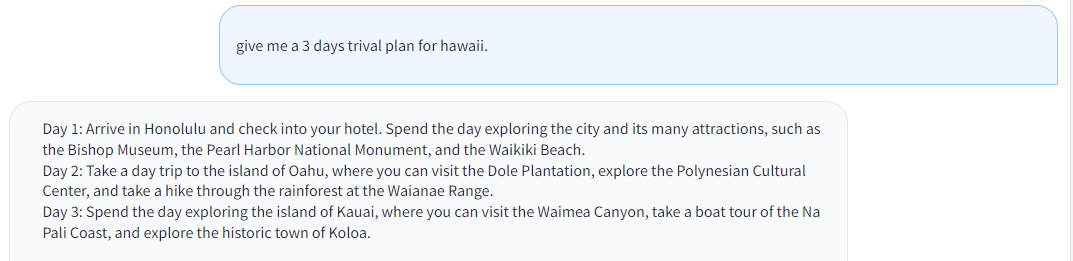

Examples

Ethical Considerations and Limitations

Neural-chat-7b-v1-1 can produce factually incorrect output and should not be relied on to produce factually accurate information. It was trained on various instruction/chat datasets on top of mosaicml/mpt-7b. Because of the limitations of the pretrained model and the fine-tuning datasets, this model could generate lewd, biased, or otherwise offensive outputs.

Therefore, before deploying any applications of the model, developers should perform safety testing.

Caveats and Recommendations

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model.

Here are some useful GitHub repository links to learn more about Intel's open-source AI software:

Disclaimer

The license on this model does not constitute legal advice. We are not responsible for the actions of third parties who use this model. Please consult an attorney before using this model for commercial purposes.

