Qwen1.5-MoE-2x72B

Description

This model is a Mixture of Experts (MoE) built with mergekit from Qwen/Qwen1.5-72B-Chat and abacusai/Liberated-Qwen1.5-72B, without any further fine-tuning.

It utilizes a customized script for MoE via mergekit, which is available here.

Because the MoE merge changes the model structure, this model requires a custom modeling file and a custom configuration file. When using the model, place both files in the same folder as the model.
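
As a rough illustration of the folder requirement above, the sketch below checks a local model folder for the custom files before loading. It rests on assumptions: the files are taken to follow the common `modeling_*.py` / `configuration_*.py` naming pattern (the actual file names are not stated here), `model_dir` is a hypothetical local path, and `config.json` is assumed to map the auto classes to the custom code so that `trust_remote_code=True` can pick it up.

```python
from pathlib import Path


def find_custom_code_files(model_dir):
    """Return the custom modeling/configuration files found in model_dir.

    Assumption: the files follow the modeling_*.py / configuration_*.py
    naming pattern; the real file names for this model are not given here.
    """
    folder = Path(model_dir)
    return {
        "modeling": sorted(p.name for p in folder.glob("modeling_*.py")),
        "configuration": sorted(p.name for p in folder.glob("configuration_*.py")),
    }


def load_model(model_dir):
    """Load tokenizer and model from a local folder holding the custom code.

    Needs the `transformers` package; it is imported lazily so that the
    folder check above can run without it.
    """
    from transformers import AutoModelForCausalLM, AutoTokenizer

    found = find_custom_code_files(model_dir)
    if not found["modeling"] or not found["configuration"]:
        raise FileNotFoundError(
            f"custom modeling/configuration files missing in {model_dir}"
        )

    tokenizer = AutoTokenizer.from_pretrained(model_dir)
    # trust_remote_code=True allows transformers to run the custom modeling file.
    model = AutoModelForCausalLM.from_pretrained(model_dir, trust_remote_code=True)
    return tokenizer, model
```

Calling `load_model("./path-to-model-folder")` (a placeholder path) would raise early if either custom file is missing, rather than failing later inside the library.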

This model inherits the tongyi-qianwen license.

Benchmark

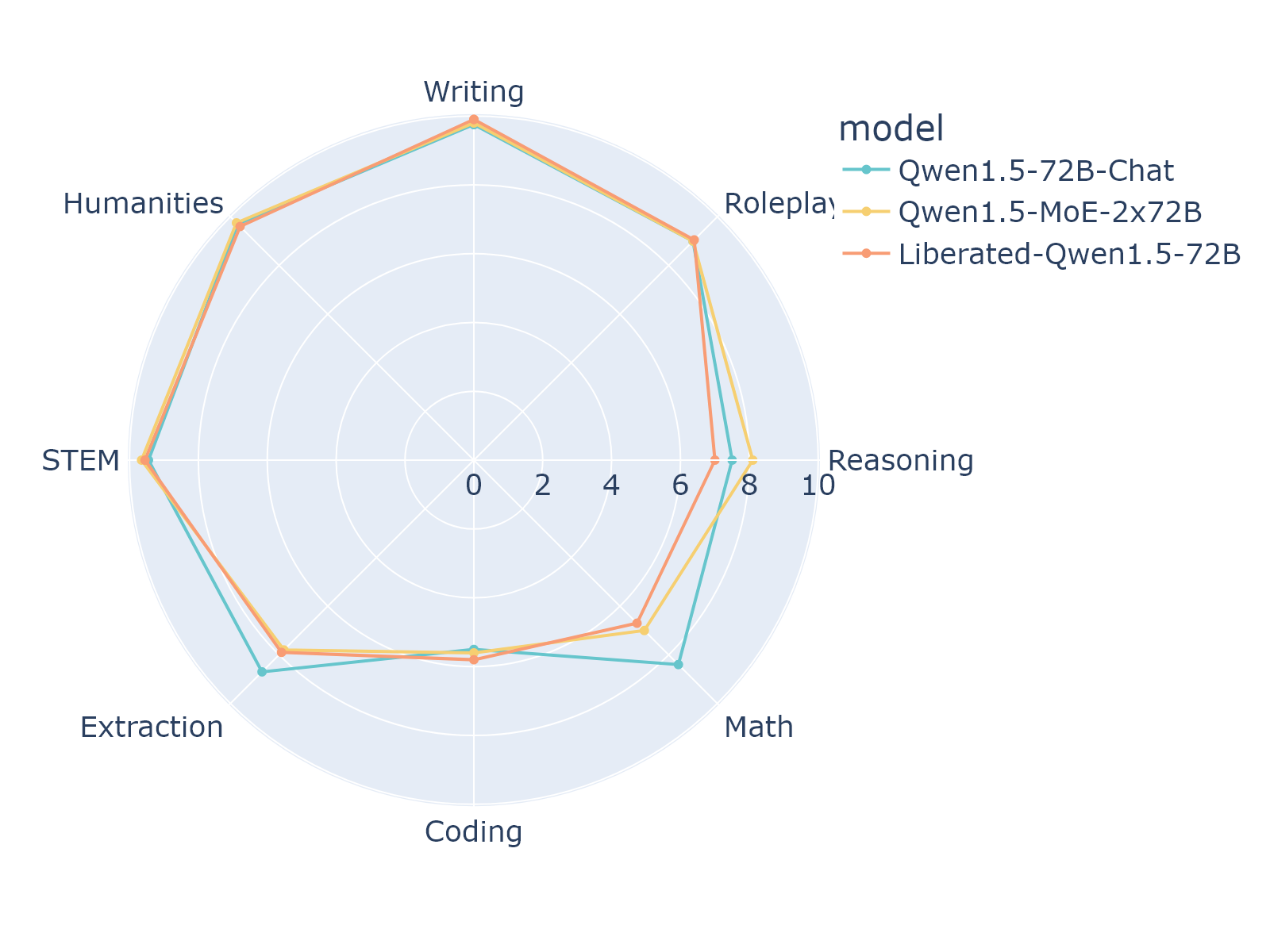

The MT-Bench scores for this model and the two base models are as follows:

1-turn, 4-bit quantization

| Model | Size | Coding | Extraction | Humanities | Math | Reasoning | Roleplay | STEM | Writing | avg_score |
|---|---|---|---|---|---|---|---|---|---|---|
| Liberated-Qwen1.5-72B | 72B | 5.8 | 7.9 | 9.6 | 6.7 | 7.0 | 9.05 | 9.55 | 9.9 | 8.1875 |
| Qwen1.5-72B-Chat | 72B | 5.5 | 8.7 | 9.7 | 8.4 | 7.5 | 9.0 | 9.45 | 9.75 | 8.5000 |
| This model | 2x72B | 5.6 | 7.8 | 9.75 | 7.0 | 8.1 | 9.0 | 9.65 | 9.8 | 8.3375 |

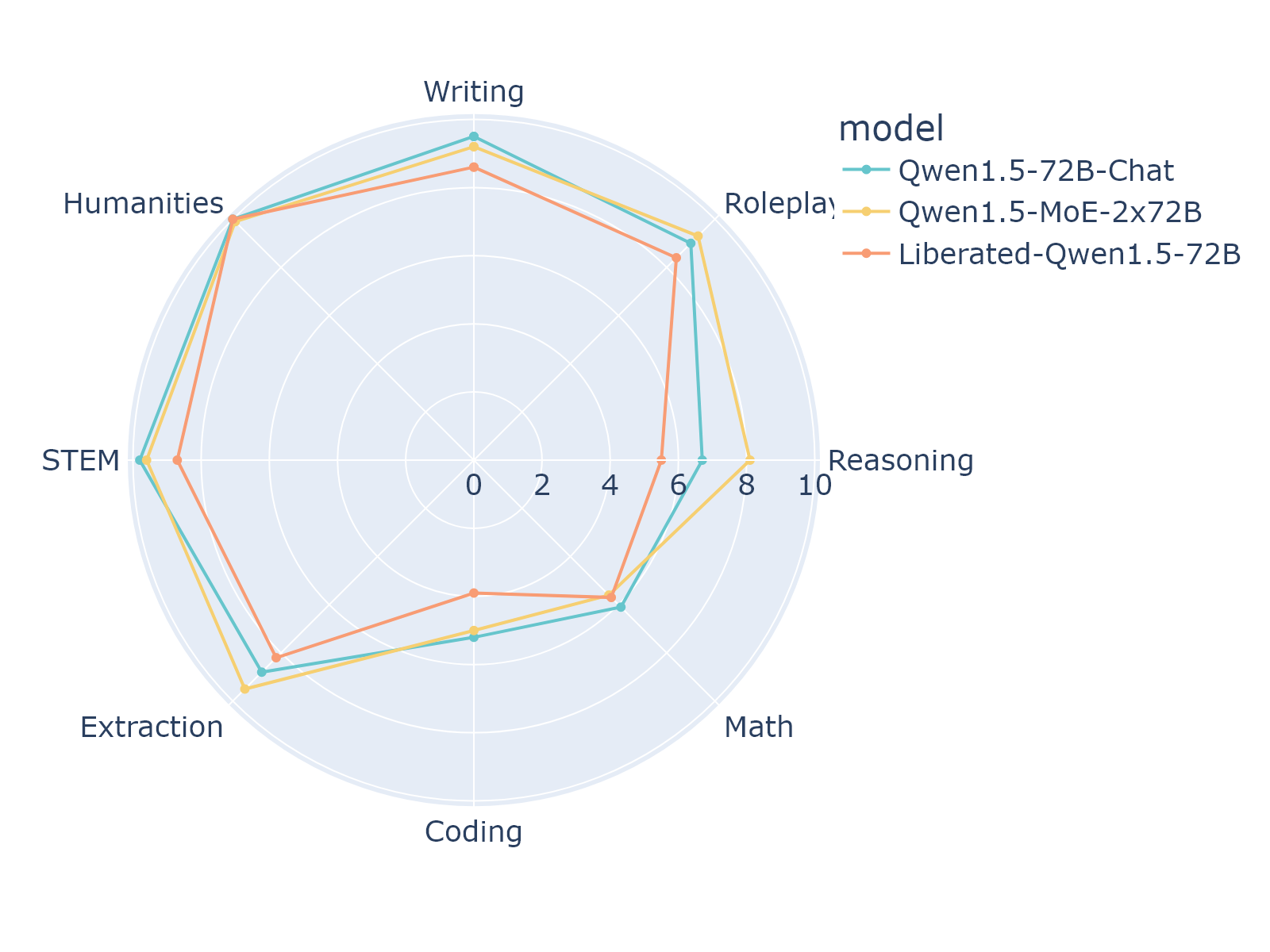

2-turn, 4-bit quantization

| Model | Size | Coding | Extraction | Humanities | Math | Reasoning | Roleplay | STEM | Writing | avg_score |
|---|---|---|---|---|---|---|---|---|---|---|
| Liberated-Qwen1.5-72B | 72B | 3.9 | 8.2 | 10.0 | 5.7 | 5.5 | 8.4 | 8.7 | 8.6 | 7.3750 |
| Qwen1.5-72B-Chat | 72B | 5.2 | 8.8 | 10.0 | 6.1 | 6.7 | 9.0 | 9.8 | 9.5 | 8.1375 |
| This model | 2x72B | 5.0 | 9.5 | 9.9 | 5.6 | 8.1 | 9.3 | 9.6 | 9.2 | 8.2750 |
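
The `avg_score` column in both tables is the plain mean of the eight category scores. The short sketch below recomputes it from the table values and reports which model ranks first per turn; the names `SCORES`, `avg_score`, and `best_model` are only illustrative.

```python
# Category order: Coding, Extraction, Humanities, Math, Reasoning, Roleplay, STEM, Writing
SCORES = {
    "1-turn": {
        "Liberated-Qwen1.5-72B": [5.8, 7.9, 9.6, 6.7, 7.0, 9.05, 9.55, 9.9],
        "Qwen1.5-72B-Chat": [5.5, 8.7, 9.7, 8.4, 7.5, 9.0, 9.45, 9.75],
        "This model": [5.6, 7.8, 9.75, 7.0, 8.1, 9.0, 9.65, 9.8],
    },
    "2-turn": {
        "Liberated-Qwen1.5-72B": [3.9, 8.2, 10.0, 5.7, 5.5, 8.4, 8.7, 8.6],
        "Qwen1.5-72B-Chat": [5.2, 8.8, 10.0, 6.1, 6.7, 9.0, 9.8, 9.5],
        "This model": [5.0, 9.5, 9.9, 5.6, 8.1, 9.3, 9.6, 9.2],
    },
}


def avg_score(scores):
    """Mean of the category scores, rounded to four decimals as in the tables."""
    return round(sum(scores) / len(scores), 4)


def best_model(turn):
    """Name of the model with the highest average score for the given turn."""
    return max(SCORES[turn], key=lambda name: avg_score(SCORES[turn][name]))


for turn, models in SCORES.items():
    for name, scores in models.items():
        print(f"{turn:7s} {name:24s} {avg_score(scores):.4f}")
    print(f"{turn:7s} best: {best_model(turn)}")
```

By this measure Qwen1.5-72B-Chat leads on the first turn, while the merged model leads on the second.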

Merge config

    base_model: ./Qwen1.5-72B-Chat
    gate_mode: random
    dtype: bfloat16
    experts:
      - source_model: ./Qwen1.5-72B-Chat
        positive_prompts: []
      - source_model: ./Liberated-Qwen1.5-72B
        positive_prompts: []
    tokenizer_source: model:./Qwen1.5-72B-Chat
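
With `gate_mode: random`, the router weights are initialized randomly instead of being derived from prompts, which is why `positive_prompts` is left empty for both experts. The toy sketch below shows what such routing could look like for a single token. It is an assumption-laden illustration, not the custom modeling file: the top-k selection followed by a softmax over the selected experts follows the Mixtral-style convention, and the two expert functions, the hidden size of 4, and the default `top_k=2` are hypothetical stand-ins.

```python
import math
import random


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def make_random_gate(num_experts, hidden_size, seed=0):
    """Router weights drawn at random, one row per expert (cf. gate_mode: random)."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 0.02) for _ in range(hidden_size)]
            for _ in range(num_experts)]


def moe_forward(hidden, gate, experts, top_k=2):
    """Route one token: score the experts, keep top_k, mix their outputs."""
    logits = [sum(w * h for w, h in zip(row, hidden)) for row in gate]
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    weights = softmax([logits[i] for i in top])
    outputs = [experts[i](hidden) for i in top]
    mixed = [sum(w * out[d] for w, out in zip(weights, outputs))
             for d in range(len(hidden))]
    return mixed, dict(zip(top, weights))


# Toy stand-ins for the two expert networks (hypothetical, not the real MLPs).
def chat_expert(hidden):
    return [2.0 * x for x in hidden]


def liberated_expert(hidden):
    return [x + 1.0 for x in hidden]


gate = make_random_gate(num_experts=2, hidden_size=4)
output, routing = moe_forward([0.5, -1.0, 0.25, 2.0], gate,
                              [chat_expert, liberated_expert])
print(routing)  # expert index -> mixing weight for this token
```

With only two experts and `top_k=2`, both experts contribute to every token and the random gate only sets their relative weights; since no fine-tuning follows the merge, those weights stay as initialized.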

Gratitude

- Huge thanks to Alibaba Cloud Qwen for training and publishing the weights of the Qwen models
- Thank you to abacusai for publishing a fine-tuned model based on Qwen
- And huge thanks to mlabonne, as I customized the modeling file using phixtral as a reference
