Commit: ca8cd25

Parent(s): abaa336

Model save

- README.md +74 -0

- adapter_config.json +34 -0

- adapter_model.safetensors +3 -0

- added_tokens.json +6 -0

- all_results.json +12 -0

- configuration.json +1 -0

- eval_results.json +7 -0

- merges.txt +0 -0

- runs/Sep01_12-22-50_dsw-83959-5f9bd48d7d-89klm/events.out.tfevents.1725164586.dsw-83959-5f9bd48d7d-89klm.2955372.0 +3 -0

- runs/Sep01_12-24-16_dsw-83959-5f9bd48d7d-89klm/events.out.tfevents.1725164672.dsw-83959-5f9bd48d7d-89klm.2956536.0 +3 -0

- runs/Sep01_12-25-35_dsw-83959-5f9bd48d7d-89klm/events.out.tfevents.1725164753.dsw-83959-5f9bd48d7d-89klm.2957417.0 +3 -0

- runs/Sep01_12-29-54_dsw-83959-5f9bd48d7d-89klm/events.out.tfevents.1725165017.dsw-83959-5f9bd48d7d-89klm.2960877.0 +3 -0

- runs/Sep01_12-33-50_dsw-83959-5f9bd48d7d-89klm/events.out.tfevents.1725165255.dsw-83959-5f9bd48d7d-89klm.2964077.0 +3 -0

- runs/Sep01_12-33-50_dsw-83959-5f9bd48d7d-89klm/events.out.tfevents.1725165429.dsw-83959-5f9bd48d7d-89klm.2964077.1 +3 -0

- runs/Sep01_13-09-02_dsw-83959-5f9bd48d7d-89klm/events.out.tfevents.1725167504.dsw-83959-5f9bd48d7d-89klm.2978286.0 +3 -0

- runs/Sep01_13-22-18_dsw-83959-5f9bd48d7d-89klm/events.out.tfevents.1725168155.dsw-83959-5f9bd48d7d-89klm.2984305.0 +3 -0

- runs/Sep01_13-27-40_dsw-83959-5f9bd48d7d-89klm/events.out.tfevents.1725168480.dsw-83959-5f9bd48d7d-89klm.2986770.0 +3 -0

- runs/Sep01_13-27-40_dsw-83959-5f9bd48d7d-89klm/events.out.tfevents.1725168654.dsw-83959-5f9bd48d7d-89klm.2986770.1 +3 -0

- runs/Sep01_13-40-47_dsw-83959-5f9bd48d7d-89klm/events.out.tfevents.1725169266.dsw-83959-5f9bd48d7d-89klm.2992860.0 +3 -0

- runs/Sep01_13-40-47_dsw-83959-5f9bd48d7d-89klm/events.out.tfevents.1725169510.dsw-83959-5f9bd48d7d-89klm.2992860.1 +3 -0

- runs/Sep02_14-55-48_dsw-83959-5f9bd48d7d-89klm/events.out.tfevents.1725260171.dsw-83959-5f9bd48d7d-89klm.3489225.0 +3 -0

- runs/Sep02_14-55-48_dsw-83959-5f9bd48d7d-89klm/events.out.tfevents.1725260414.dsw-83959-5f9bd48d7d-89klm.3489225.1 +3 -0

- runs/Sep02_15-01-36_dsw-83959-5f9bd48d7d-89klm/events.out.tfevents.1725260523.dsw-83959-5f9bd48d7d-89klm.3493333.0 +3 -0

- runs/Sep02_15-01-36_dsw-83959-5f9bd48d7d-89klm/events.out.tfevents.1725260783.dsw-83959-5f9bd48d7d-89klm.3493333.1 +3 -0

- runs/Sep02_15-12-15_dsw-83959-5f9bd48d7d-89klm/events.out.tfevents.1725261155.dsw-83959-5f9bd48d7d-89klm.3498880.0 +3 -0

- runs/Sep02_15-17-02_dsw-83959-5f9bd48d7d-89klm/events.out.tfevents.1725261590.dsw-83959-5f9bd48d7d-89klm.3500834.0 +3 -0

- runs/Sep02_15-29-51_dsw-83959-5f9bd48d7d-89klm/events.out.tfevents.1725262408.dsw-83959-5f9bd48d7d-89klm.3506248.0 +3 -0

- runs/Sep02_15-29-51_dsw-83959-5f9bd48d7d-89klm/events.out.tfevents.1725262666.dsw-83959-5f9bd48d7d-89klm.3506248.1 +3 -0

- runs/Sep02_15-39-28_dsw-83959-5f9bd48d7d-89klm/events.out.tfevents.1725262788.dsw-83959-5f9bd48d7d-89klm.3511533.0 +3 -0

- runs/Sep02_15-39-28_dsw-83959-5f9bd48d7d-89klm/events.out.tfevents.1725262931.dsw-83959-5f9bd48d7d-89klm.3511533.1 +3 -0

- runs/Sep02_15-51-19_dsw-83959-5f9bd48d7d-89klm/events.out.tfevents.1725263663.dsw-83959-5f9bd48d7d-89klm.3522427.0 +3 -0

- special_tokens_map.json +20 -0

- tokenizer.json +0 -0

- tokenizer_config.json +52 -0

- train_results.json +8 -0

- trainer_log.jsonl +12 -0

- trainer_state.json +129 -0

- training_args.bin +3 -0

- training_eval_loss.png +0 -0

- training_loss.png +0 -0

- vocab.json +0 -0

README.md

ADDED

|

@@ -0,0 +1,74 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

---

|

| 2 |

+

base_model: Qwen/Qwen2-1.5B-Instruct

|

| 3 |

+

library_name: peft

|

| 4 |

+

license: apache-2.0

|

| 5 |

+

tags:

|

| 6 |

+

- llama-factory

|

| 7 |

+

- lora

|

| 8 |

+

- generated_from_trainer

|

| 9 |

+

model-index:

|

| 10 |

+

- name: test0901-5

|

| 11 |

+

results: []

|

| 12 |

+

---

|

| 13 |

+

|

| 14 |

+

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

|

| 15 |

+

should probably proofread and complete it, then remove this comment. -->

|

| 16 |

+

|

| 17 |

+

[<img src="https://raw.githubusercontent.com/wandb/assets/main/wandb-github-badge-28.svg" alt="Visualize in Weights & Biases" width="200" height="32"/>](None)

|

| 18 |

+

# test0901-5

|

| 19 |

+

|

| 20 |

+

This model is a fine-tuned version of [Qwen/Qwen2-1.5B-Instruct](https://huggingface.co/Qwen/Qwen2-1.5B-Instruct) on the None dataset.

|

| 21 |

+

It achieves the following results on the evaluation set:

|

| 22 |

+

- Loss: 1.8424

|

| 23 |

+

|

| 24 |

+

## Model description

|

| 25 |

+

|

| 26 |

+

More information needed

|

| 27 |

+

|

| 28 |

+

## Intended uses & limitations

|

| 29 |

+

|

| 30 |

+

More information needed

|

| 31 |

+

|

| 32 |

+

## Training and evaluation data

|

| 33 |

+

|

| 34 |

+

More information needed

|

| 35 |

+

|

| 36 |

+

## Training procedure

|

| 37 |

+

|

| 38 |

+

### Training hyperparameters

|

| 39 |

+

|

| 40 |

+

The following hyperparameters were used during training:

|

| 41 |

+

- learning_rate: 0.0001

|

| 42 |

+

- train_batch_size: 1

|

| 43 |

+

- eval_batch_size: 1

|

| 44 |

+

- seed: 42

|

| 45 |

+

- gradient_accumulation_steps: 8

|

| 46 |

+

- total_train_batch_size: 8

|

| 47 |

+

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

|

| 48 |

+

- lr_scheduler_type: cosine

|

| 49 |

+

- lr_scheduler_warmup_ratio: 0.1

|

| 50 |

+

- training_steps: 10

|

| 51 |

+

|

| 52 |

+

### Training results

|

| 53 |

+

|

| 54 |

+

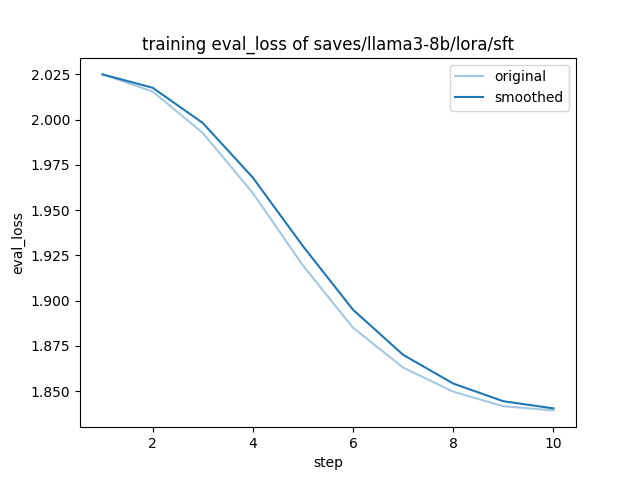

| Training Loss | Epoch | Step | Validation Loss |

|

| 55 |

+

|:-------------:|:------:|:----:|:---------------:|

|

| 56 |

+

| No log | 0.0082 | 1 | 2.0249 |

|

| 57 |

+

| No log | 0.0163 | 2 | 2.0155 |

|

| 58 |

+

| No log | 0.0245 | 3 | 1.9926 |

|

| 59 |

+

| No log | 0.0326 | 4 | 1.9594 |

|

| 60 |

+

| No log | 0.0408 | 5 | 1.9194 |

|

| 61 |

+

| No log | 0.0489 | 6 | 1.8850 |

|

| 62 |

+

| No log | 0.0571 | 7 | 1.8630 |

|

| 63 |

+

| No log | 0.0652 | 8 | 1.8497 |

|

| 64 |

+

| No log | 0.0734 | 9 | 1.8417 |

|

| 65 |

+

| 1.6809 | 0.0815 | 10 | 1.8424 |

|

| 66 |

+

|

| 67 |

+

|

| 68 |

+

### Framework versions

|

| 69 |

+

|

| 70 |

+

- PEFT 0.12.0

|

| 71 |

+

- Transformers 4.42.4

|

| 72 |

+

- Pytorch 2.4.0+cu121

|

| 73 |

+

- Datasets 2.21.0

|

| 74 |

+

- Tokenizers 0.19.1

|
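The README states the schedule only as `lr_scheduler_type: cosine` with `lr_scheduler_warmup_ratio: 0.1` over 10 steps. The following plain-Python sketch reproduces a linear-warmup-then-half-cosine rule, assuming it mirrors the behavior of transformers' cosine-with-warmup scheduler and that warmup steps are computed as `ceil(max_steps * warmup_ratio)`. It also checks the effective batch size, assuming a single device. Under these assumptions the rate reaches 0.0 at step 10, which matches the `"learning_rate": 0.0` entry in trainer_log.jsonl.

```python
import math

# Values copied from the README hyperparameter list.
LEARNING_RATE = 1e-4
MAX_STEPS = 10
WARMUP_RATIO = 0.1
TRAIN_BATCH_SIZE = 1
GRAD_ACCUM_STEPS = 8
NUM_DEVICES = 1  # assumption: single-device run

# Effective batch per optimizer step: per-device batch x accumulation x devices.
total_train_batch_size = TRAIN_BATCH_SIZE * GRAD_ACCUM_STEPS * NUM_DEVICES


def warmup_steps(max_steps: int, warmup_ratio: float) -> int:
    # Assumption: the ratio is converted to whole steps by rounding up.
    return math.ceil(max_steps * warmup_ratio)


def cosine_lr(step: int, base_lr: float, warmup: int, total: int) -> float:
    """Learning rate after `step` optimizer steps: linear warmup, then half-cosine decay to 0."""
    if step < warmup:
        return base_lr * step / max(1, warmup)
    progress = (step - warmup) / max(1, total - warmup)
    return base_lr * max(0.0, 0.5 * (1.0 + math.cos(math.pi * progress)))


warmup = warmup_steps(MAX_STEPS, WARMUP_RATIO)
schedule = [cosine_lr(s, LEARNING_RATE, warmup, MAX_STEPS) for s in range(MAX_STEPS + 1)]

print("total_train_batch_size:", total_train_batch_size)
for step, lr in enumerate(schedule):
    print(f"step {step:2d}: lr = {lr:.3e}")
```

With only one warmup step, the peak rate of 1e-4 is reached immediately and then decays over the remaining nine steps.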

adapter_config.json (ADDED, @@ -0,0 +1,34 @@)

```json
{
  "alpha_pattern": {},
  "auto_mapping": null,
  "base_model_name_or_path": "Qwen/Qwen2-1.5B-Instruct",
  "bias": "none",
  "fan_in_fan_out": false,
  "inference_mode": true,
  "init_lora_weights": true,
  "layer_replication": null,
  "layers_pattern": null,
  "layers_to_transform": null,
  "loftq_config": {},
  "lora_alpha": 16,
  "lora_dropout": 0.0,
  "megatron_config": null,
  "megatron_core": "megatron.core",
  "modules_to_save": null,
  "peft_type": "LORA",
  "r": 8,
  "rank_pattern": {},
  "revision": null,
  "target_modules": [
    "gate_proj",
    "up_proj",
    "o_proj",
    "v_proj",
    "q_proj",
    "k_proj",
    "down_proj"
  ],
  "task_type": "CAUSAL_LM",
  "use_dora": false,
  "use_rslora": false
}
```
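The adapter config wraps every attention and MLP projection with rank-8 LoRA (`r: 8`, `lora_alpha: 16`, `bias: "none"`). The following sketch counts the trainable LoRA parameters this implies. The Qwen2-1.5B dimensions below (hidden size 1536, intermediate size 8960, 28 layers, 12 query heads, 2 key/value heads) are assumptions taken from the base model's own config, not from this commit. Under those assumptions, 4-byte float32 storage comes to roughly 51 KB less than the `size 36981072` in the adapter_model.safetensors LFS pointer. That gap is plausibly the safetensors header, but treat the comparison as a sanity check rather than proof of the stored dtype.

```python
# Assumed Qwen2-1.5B dimensions (from the base model's config.json, not this commit).
HIDDEN = 1536
INTERMEDIATE = 8960
LAYERS = 28
HEADS = 12
KV_HEADS = 2
HEAD_DIM = HIDDEN // HEADS  # 128

# LoRA settings copied from adapter_config.json.
R = 8
LORA_ALPHA = 16

# (in_features, out_features) of each target module in one decoder layer.
TARGETS = {
    "q_proj": (HIDDEN, HEADS * HEAD_DIM),
    "k_proj": (HIDDEN, KV_HEADS * HEAD_DIM),
    "v_proj": (HIDDEN, KV_HEADS * HEAD_DIM),
    "o_proj": (HEADS * HEAD_DIM, HIDDEN),
    "gate_proj": (HIDDEN, INTERMEDIATE),
    "up_proj": (HIDDEN, INTERMEDIATE),
    "down_proj": (INTERMEDIATE, HIDDEN),
}


def lora_params(in_features: int, out_features: int, r: int) -> int:
    # lora_A is r x in_features, lora_B is out_features x r; bias="none" adds nothing.
    return r * in_features + out_features * r


per_layer = sum(lora_params(i, o, R) for i, o in TARGETS.values())
total = per_layer * LAYERS
scaling = LORA_ALPHA / R  # use_rslora is false, so the update is scaled by alpha / r

fp32_bytes = total * 4
lfs_size = 36981072  # size reported by the adapter_model.safetensors LFS pointer

print(f"LoRA parameters per layer: {per_layer:,}")
print(f"LoRA parameters total:     {total:,}")
print(f"scaling (alpha / r):       {scaling}")
print(f"float32 tensor bytes:      {fp32_bytes:,} (pointer size {lfs_size:,})")
```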

adapter_model.safetensors (ADDED, @@ -0,0 +1,3 @@)

```
version https://git-lfs.github.com/spec/v1
oid sha256:cb4949db77552905d86b2683e94157b4d08fd4fd49d317d9e195443f5d3e3f9b
size 36981072
```
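Binary files in this commit appear in the diff only as three-line Git LFS pointers (`version`, `oid`, `size`). The actual bytes live in LFS storage. The following sketch parses such a pointer into its fields. It assumes a well-formed pointer with one space-separated key/value pair per line and does no further validation against the LFS specification.

```python
def parse_lfs_pointer(text: str) -> dict:
    """Parse a Git LFS pointer file into version, oid algorithm, oid digest and size."""
    fields = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(" ")  # key and value are separated by one space
        fields[key] = value
    algo, _, digest = fields["oid"].partition(":")
    return {
        "version": fields["version"],
        "oid_algo": algo,
        "oid": digest,
        "size": int(fields["size"]),
    }


# The adapter_model.safetensors pointer from this commit.
pointer = """version https://git-lfs.github.com/spec/v1
oid sha256:cb4949db77552905d86b2683e94157b4d08fd4fd49d317d9e195443f5d3e3f9b
size 36981072
"""

info = parse_lfs_pointer(pointer)
print(info["oid_algo"], info["size"])
```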

added_tokens.json (ADDED, @@ -0,0 +1,6 @@)

```json
{
  "<|endoftext|>": 151643,
  "<|eot_id|>": 151646,
  "<|im_end|>": 151645,
  "<|im_start|>": 151644
}
```

all_results.json (ADDED, @@ -0,0 +1,12 @@)

```json
{
  "epoch": 0.08154943934760449,
  "eval_loss": 1.8393741846084595,
  "eval_runtime": 6.7009,
  "eval_samples_per_second": 16.416,
  "eval_steps_per_second": 16.416,
  "total_flos": 124335445598208.0,
  "train_loss": 1.6808662414550781,
  "train_runtime": 109.4363,
  "train_samples_per_second": 0.731,
  "train_steps_per_second": 0.091
}
```
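The throughput figures in all_results.json can be reproduced from the run settings. The following sketch assumes the Trainer counts training samples as `max_steps * total_train_batch_size` and rounds rates to three decimals. Inverting the evaluation rate gives an estimate of the evaluation-set size, which is an inference, not a value recorded in the commit. Because `eval_batch_size` is 1, samples and steps per second coincide.

```python
# Values from all_results.json and the README hyperparameters.
MAX_STEPS = 10
TOTAL_TRAIN_BATCH_SIZE = 8
TRAIN_RUNTIME = 109.4363  # seconds
EVAL_RUNTIME = 6.7009  # seconds
EVAL_SAMPLES_PER_SECOND = 16.416

# Assumption: samples seen = optimizer steps x effective batch size.
train_samples_per_second = round(MAX_STEPS * TOTAL_TRAIN_BATCH_SIZE / TRAIN_RUNTIME, 3)
train_steps_per_second = round(MAX_STEPS / TRAIN_RUNTIME, 3)

# rate x runtime ~= number of evaluation samples (an estimate, not a logged value).
approx_eval_samples = round(EVAL_SAMPLES_PER_SECOND * EVAL_RUNTIME)

print(train_samples_per_second, train_steps_per_second, approx_eval_samples)
```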

configuration.json (ADDED, @@ -0,0 +1 @@)

```json
{"framework": "pytorch", "task": "text-generation", "allow_remote": true}
```

eval_results.json (ADDED, @@ -0,0 +1,7 @@)

```json
{
  "epoch": 0.08154943934760449,
  "eval_loss": 1.8393741846084595,
  "eval_runtime": 6.7009,
  "eval_samples_per_second": 16.416,
  "eval_steps_per_second": 16.416
}
```

merges.txt (ADDED): the diff for this file is too large to render.

runs/Sep01_12-22-50_dsw-83959-5f9bd48d7d-89klm/events.out.tfevents.1725164586.dsw-83959-5f9bd48d7d-89klm.2955372.0 (ADDED, @@ -0,0 +1,3 @@)

```
version https://git-lfs.github.com/spec/v1
oid sha256:0a3c77ce1d9270172ad09e30294ac5f9891271ecf559c8d0a617349a92a94505
size 88
```

runs/Sep01_12-24-16_dsw-83959-5f9bd48d7d-89klm/events.out.tfevents.1725164672.dsw-83959-5f9bd48d7d-89klm.2956536.0 (ADDED, @@ -0,0 +1,3 @@)

```
version https://git-lfs.github.com/spec/v1
oid sha256:26f4e529c9f9d8ff6d0f39d4f78854b7b209b8e55c1cfad012fe433ee982d8a8
size 88
```

runs/Sep01_12-25-35_dsw-83959-5f9bd48d7d-89klm/events.out.tfevents.1725164753.dsw-83959-5f9bd48d7d-89klm.2957417.0 (ADDED, @@ -0,0 +1,3 @@)

```
version https://git-lfs.github.com/spec/v1
oid sha256:42b281ea4b3edfc07df50015fcff414bcc7e4f103fe919776c1a719b9c196f6d
size 8519
```

runs/Sep01_12-29-54_dsw-83959-5f9bd48d7d-89klm/events.out.tfevents.1725165017.dsw-83959-5f9bd48d7d-89klm.2960877.0 (ADDED, @@ -0,0 +1,3 @@)

```
version https://git-lfs.github.com/spec/v1
oid sha256:9103074bb1616cdc52dcecff4f69920302256a488d7a4b564d63b59d7d57d653
size 8524
```

runs/Sep01_12-33-50_dsw-83959-5f9bd48d7d-89klm/events.out.tfevents.1725165255.dsw-83959-5f9bd48d7d-89klm.2964077.0 (ADDED, @@ -0,0 +1,3 @@)

```
version https://git-lfs.github.com/spec/v1
oid sha256:5a5b558359896c24478d43ebf013cd2d6b059afab60dd34c693d96311bb399b0
size 8524
```

runs/Sep01_12-33-50_dsw-83959-5f9bd48d7d-89klm/events.out.tfevents.1725165429.dsw-83959-5f9bd48d7d-89klm.2964077.1 (ADDED, @@ -0,0 +1,3 @@)

```
version https://git-lfs.github.com/spec/v1
oid sha256:f5876d3ee9b2bee94a9f13accc0197a7b865eb1a31d1313ed3cc4641fc0ae211
size 354
```

runs/Sep01_13-09-02_dsw-83959-5f9bd48d7d-89klm/events.out.tfevents.1725167504.dsw-83959-5f9bd48d7d-89klm.2978286.0 (ADDED, @@ -0,0 +1,3 @@)

```
version https://git-lfs.github.com/spec/v1
oid sha256:2d1a2c163e4f1c922b3b7b6fc6f94a4406f1e0b37af9538c25db02289b411925
size 8520
```

runs/Sep01_13-22-18_dsw-83959-5f9bd48d7d-89klm/events.out.tfevents.1725168155.dsw-83959-5f9bd48d7d-89klm.2984305.0 (ADDED, @@ -0,0 +1,3 @@)

```
version https://git-lfs.github.com/spec/v1
oid sha256:1f882d66ba57084631fbe8812724242d3abe4acdf9ce79c7d0f398e6e3bc184c
size 8520
```

runs/Sep01_13-27-40_dsw-83959-5f9bd48d7d-89klm/events.out.tfevents.1725168480.dsw-83959-5f9bd48d7d-89klm.2986770.0 (ADDED, @@ -0,0 +1,3 @@)

```
version https://git-lfs.github.com/spec/v1
oid sha256:276f0d4a167d4b16f52b6f128bf540ba9b63faf87e05d0caab0a9669dc075dbe
size 8520
```

runs/Sep01_13-27-40_dsw-83959-5f9bd48d7d-89klm/events.out.tfevents.1725168654.dsw-83959-5f9bd48d7d-89klm.2986770.1 (ADDED, @@ -0,0 +1,3 @@)

```
version https://git-lfs.github.com/spec/v1
oid sha256:e57a0908320ceed68034f038cda64c38d6956f15a972f8c33f3b77f75e412b2d
size 354
```

runs/Sep01_13-40-47_dsw-83959-5f9bd48d7d-89klm/events.out.tfevents.1725169266.dsw-83959-5f9bd48d7d-89klm.2992860.0 (ADDED, @@ -0,0 +1,3 @@)

```
version https://git-lfs.github.com/spec/v1
oid sha256:d09cb7d8d076a4a10cb06925d28f0527910089be48b37243114fde454e1d1a03
size 8520
```

runs/Sep01_13-40-47_dsw-83959-5f9bd48d7d-89klm/events.out.tfevents.1725169510.dsw-83959-5f9bd48d7d-89klm.2992860.1 (ADDED, @@ -0,0 +1,3 @@)

```
version https://git-lfs.github.com/spec/v1
oid sha256:042f860a428095d8bce1975d7326f0b956c047ac7177f30a56a3440f59544a3a
size 354
```

runs/Sep02_14-55-48_dsw-83959-5f9bd48d7d-89klm/events.out.tfevents.1725260171.dsw-83959-5f9bd48d7d-89klm.3489225.0 (ADDED, @@ -0,0 +1,3 @@)

```
version https://git-lfs.github.com/spec/v1
oid sha256:4de0e54345339f9e680a79a60cf68eecd9c5a0f2938f6164cfd866df778763d9
size 8397
```

runs/Sep02_14-55-48_dsw-83959-5f9bd48d7d-89klm/events.out.tfevents.1725260414.dsw-83959-5f9bd48d7d-89klm.3489225.1 (ADDED, @@ -0,0 +1,3 @@)

```
version https://git-lfs.github.com/spec/v1
oid sha256:825edda66163b92ab0f388e44cf7d052ccd10c838d38a68f969188b979b434dc
size 354
```

runs/Sep02_15-01-36_dsw-83959-5f9bd48d7d-89klm/events.out.tfevents.1725260523.dsw-83959-5f9bd48d7d-89klm.3493333.0 (ADDED, @@ -0,0 +1,3 @@)

```
version https://git-lfs.github.com/spec/v1
oid sha256:ae54ac0e73940dfe3f7d02b7144133320bd571050e110e746370d2e13459e431
size 8397
```

runs/Sep02_15-01-36_dsw-83959-5f9bd48d7d-89klm/events.out.tfevents.1725260783.dsw-83959-5f9bd48d7d-89klm.3493333.1 (ADDED, @@ -0,0 +1,3 @@)

```
version https://git-lfs.github.com/spec/v1
oid sha256:fe9b3776401687c668c26a690f522fd73da5d669643e665f1f2bbcfbf59abfd4
size 354
```

runs/Sep02_15-12-15_dsw-83959-5f9bd48d7d-89klm/events.out.tfevents.1725261155.dsw-83959-5f9bd48d7d-89klm.3498880.0 (ADDED, @@ -0,0 +1,3 @@)

```
version https://git-lfs.github.com/spec/v1
oid sha256:bcefcadf980901da21301188c2b2fb84b29641765fced77f8f325b8e83542247
size 8397
```

runs/Sep02_15-17-02_dsw-83959-5f9bd48d7d-89klm/events.out.tfevents.1725261590.dsw-83959-5f9bd48d7d-89klm.3500834.0 (ADDED, @@ -0,0 +1,3 @@)

```
version https://git-lfs.github.com/spec/v1
oid sha256:1e323f227993f83987e9410d993534ce21a23388170125d958b80b0566876978
size 8375
```

runs/Sep02_15-29-51_dsw-83959-5f9bd48d7d-89klm/events.out.tfevents.1725262408.dsw-83959-5f9bd48d7d-89klm.3506248.0 (ADDED, @@ -0,0 +1,3 @@)

```
version https://git-lfs.github.com/spec/v1
oid sha256:9ab107b9407e1c223d6aede1b1340539a41f9d2b9a229b268aa6c370594423fa
size 8380
```

runs/Sep02_15-29-51_dsw-83959-5f9bd48d7d-89klm/events.out.tfevents.1725262666.dsw-83959-5f9bd48d7d-89klm.3506248.1 (ADDED, @@ -0,0 +1,3 @@)

```
version https://git-lfs.github.com/spec/v1
oid sha256:189221f412a7b8935a422267bdc0f9f44956a55fddd2a638514da9d753cbe82f
size 354
```

runs/Sep02_15-39-28_dsw-83959-5f9bd48d7d-89klm/events.out.tfevents.1725262788.dsw-83959-5f9bd48d7d-89klm.3511533.0 (ADDED, @@ -0,0 +1,3 @@)

```
version https://git-lfs.github.com/spec/v1
oid sha256:4c5960dcdfe8ed3986114ca01440bd73be2299b56338fbb8fd582bee547eb7eb
size 8375
```

runs/Sep02_15-39-28_dsw-83959-5f9bd48d7d-89klm/events.out.tfevents.1725262931.dsw-83959-5f9bd48d7d-89klm.3511533.1 (ADDED, @@ -0,0 +1,3 @@)

```
version https://git-lfs.github.com/spec/v1
oid sha256:a33da9717bebc5d7d32d38e3be3dcdadf061180f46b35ee86f527eaef245b786
size 354
```

runs/Sep02_15-51-19_dsw-83959-5f9bd48d7d-89klm/events.out.tfevents.1725263663.dsw-83959-5f9bd48d7d-89klm.3522427.0 (ADDED, @@ -0,0 +1,3 @@)

```
version https://git-lfs.github.com/spec/v1
oid sha256:0f4d24899d5075f2e6d70aac06e9bf7600396dc15c35894a3756c10976e2a9ef
size 8336
```

special_tokens_map.json (ADDED, @@ -0,0 +1,20 @@)

```json
{
  "additional_special_tokens": [
    "<|im_start|>",
    "<|im_end|>"
  ],
  "eos_token": {
    "content": "<|eot_id|>",
    "lstrip": false,
    "normalized": false,
    "rstrip": false,
    "single_word": false
  },
  "pad_token": {
    "content": "<|endoftext|>",
    "lstrip": false,
    "normalized": false,
    "rstrip": false,
    "single_word": false
  }
}
```

tokenizer.json (ADDED): the diff for this file is too large to render.

tokenizer_config.json (ADDED, @@ -0,0 +1,52 @@)

```json
{
  "add_prefix_space": false,
  "added_tokens_decoder": {
    "151643": {
      "content": "<|endoftext|>",
      "lstrip": false,
      "normalized": false,
      "rstrip": false,
      "single_word": false,
      "special": true
    },
    "151644": {
      "content": "<|im_start|>",
      "lstrip": false,
      "normalized": false,
      "rstrip": false,
      "single_word": false,
      "special": true
    },
    "151645": {
      "content": "<|im_end|>",
      "lstrip": false,
      "normalized": false,
      "rstrip": false,
      "single_word": false,
      "special": true
    },
    "151646": {
      "content": "<|eot_id|>",
      "lstrip": false,
      "normalized": false,
      "rstrip": false,
      "single_word": false,
      "special": true
    }
  },
  "additional_special_tokens": [
    "<|im_start|>",
    "<|im_end|>"
  ],
  "bos_token": null,
  "chat_template": "{% if messages[0]['role'] == 'system' %}{% set loop_messages = messages[1:] %}{% set system_message = messages[0]['content'] %}{% else %}{% set loop_messages = messages %}{% endif %}{% if system_message is defined %}{{ '<|start_header_id|>system<|end_header_id|>\n\n' + system_message + '<|eot_id|>' }}{% endif %}{% for message in loop_messages %}{% set content = message['content'] %}{% if message['role'] == 'user' %}{{ '<|start_header_id|>user<|end_header_id|>\n\n' + content + '<|eot_id|><|start_header_id|>assistant<|end_header_id|>\n\n' }}{% elif message['role'] == 'assistant' %}{{ content + '<|eot_id|>' }}{% endif %}{% endfor %}",
  "clean_up_tokenization_spaces": false,
  "eos_token": "<|eot_id|>",
  "errors": "replace",
  "model_max_length": 32768,
  "pad_token": "<|endoftext|>",
  "padding_side": "right",
  "split_special_tokens": false,
  "tokenizer_class": "Qwen2Tokenizer",
  "unk_token": null
}
```
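The `chat_template` above uses Llama-3-style header markers on top of the Qwen2 tokenizer, and it always appends an assistant header after each user turn. The following hand-written Python function is a sketch of what the Jinja template renders. It follows the same branching but is not the template engine itself. Note that only `<|eot_id|>` (151646) among these markers is registered in `added_tokens_decoder`. `<|start_header_id|>` and `<|end_header_id|>` therefore appear to be tokenized as ordinary text rather than as single special tokens.

```python
def render_chat(messages: list[dict]) -> str:
    """Pure-Python equivalent of the chat_template logic in tokenizer_config.json."""
    parts = []
    loop_messages = messages
    # An optional leading system message is rendered once, before the loop.
    if messages[0]["role"] == "system":
        parts.append("<|start_header_id|>system<|end_header_id|>\n\n" + messages[0]["content"] + "<|eot_id|>")
        loop_messages = messages[1:]
    for message in loop_messages:
        content = message["content"]
        if message["role"] == "user":
            # Each user turn is closed and immediately followed by an assistant header.
            parts.append(
                "<|start_header_id|>user<|end_header_id|>\n\n"
                + content
                + "<|eot_id|><|start_header_id|>assistant<|end_header_id|>\n\n"
            )
        elif message["role"] == "assistant":
            parts.append(content + "<|eot_id|>")
        # Any other role is silently skipped, as in the template.
    return "".join(parts)


conversation = [
    {"role": "system", "content": "S"},
    {"role": "user", "content": "Hi"},
    {"role": "assistant", "content": "Hello"},
]
print(repr(render_chat(conversation)))
```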

train_results.json (ADDED, @@ -0,0 +1,8 @@)

```json
{
  "epoch": 0.08154943934760449,
  "total_flos": 124335445598208.0,
  "train_loss": 1.6808662414550781,
  "train_runtime": 109.4363,
  "train_samples_per_second": 0.731,
  "train_steps_per_second": 0.091
}
```

trainer_log.jsonl (ADDED, @@ -0,0 +1,12 @@)

```json
{"current_steps": 1, "total_steps": 10, "eval_loss": 2.0249228477478027, "epoch": 0.00815494393476045, "percentage": 10.0, "elapsed_time": "0:00:09", "remaining_time": "0:01:28"}
{"current_steps": 2, "total_steps": 10, "eval_loss": 2.0154640674591064, "epoch": 0.0163098878695209, "percentage": 20.0, "elapsed_time": "0:00:20", "remaining_time": "0:01:21"}
{"current_steps": 3, "total_steps": 10, "eval_loss": 1.9926271438598633, "epoch": 0.024464831804281346, "percentage": 30.0, "elapsed_time": "0:00:30", "remaining_time": "0:01:11"}
{"current_steps": 4, "total_steps": 10, "eval_loss": 1.9593819379806519, "epoch": 0.0326197757390418, "percentage": 40.0, "elapsed_time": "0:00:41", "remaining_time": "0:01:01"}
{"current_steps": 5, "total_steps": 10, "eval_loss": 1.919368863105774, "epoch": 0.040774719673802244, "percentage": 50.0, "elapsed_time": "0:00:51", "remaining_time": "0:00:51"}
{"current_steps": 6, "total_steps": 10, "eval_loss": 1.8850003480911255, "epoch": 0.04892966360856269, "percentage": 60.0, "elapsed_time": "0:01:02", "remaining_time": "0:00:41"}
{"current_steps": 7, "total_steps": 10, "eval_loss": 1.8630123138427734, "epoch": 0.05708460754332314, "percentage": 70.0, "elapsed_time": "0:01:12", "remaining_time": "0:00:31"}
{"current_steps": 8, "total_steps": 10, "eval_loss": 1.849714756011963, "epoch": 0.0652395514780836, "percentage": 80.0, "elapsed_time": "0:01:23", "remaining_time": "0:00:20"}
{"current_steps": 9, "total_steps": 10, "eval_loss": 1.8416643142700195, "epoch": 0.07339449541284404, "percentage": 90.0, "elapsed_time": "0:01:33", "remaining_time": "0:00:10"}
{"current_steps": 10, "total_steps": 10, "loss": 1.6809, "learning_rate": 0.0, "epoch": 0.08154943934760449, "percentage": 100.0, "elapsed_time": "0:01:37", "remaining_time": "0:00:00"}
{"current_steps": 10, "total_steps": 10, "eval_loss": 1.8423653841018677, "epoch": 0.08154943934760449, "percentage": 100.0, "elapsed_time": "0:01:43", "remaining_time": "0:00:00"}
{"current_steps": 10, "total_steps": 10, "epoch": 0.08154943934760449, "percentage": 100.0, "elapsed_time": "0:01:45", "remaining_time": "0:00:00"}
```
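Each line of trainer_log.jsonl is an independent JSON object. Evaluation entries carry `eval_loss` and the training entry carries `loss`. The following sketch uses the standard `json` module to split the two series and find the lowest logged evaluation loss. To keep it short, it embeds a verbatim excerpt (log entries 8 to 11). To process the full file, pass the file's text, for example from a hypothetical local copy `open("trainer_log.jsonl").read()`, to `load_jsonl`. In the full log, the minimum also falls at step 9, one step before the end of training.

```python
import json

# Verbatim excerpt (entries 8-11) of trainer_log.jsonl from this commit.
LOG_EXCERPT = """\
{"current_steps": 8, "total_steps": 10, "eval_loss": 1.849714756011963, "epoch": 0.0652395514780836, "percentage": 80.0, "elapsed_time": "0:01:23", "remaining_time": "0:00:20"}
{"current_steps": 9, "total_steps": 10, "eval_loss": 1.8416643142700195, "epoch": 0.07339449541284404, "percentage": 90.0, "elapsed_time": "0:01:33", "remaining_time": "0:00:10"}
{"current_steps": 10, "total_steps": 10, "loss": 1.6809, "learning_rate": 0.0, "epoch": 0.08154943934760449, "percentage": 100.0, "elapsed_time": "0:01:37", "remaining_time": "0:00:00"}
{"current_steps": 10, "total_steps": 10, "eval_loss": 1.8423653841018677, "epoch": 0.08154943934760449, "percentage": 100.0, "elapsed_time": "0:01:43", "remaining_time": "0:00:00"}
"""


def load_jsonl(text: str) -> list[dict]:
    """Parse one JSON object per non-empty line."""
    return [json.loads(line) for line in text.splitlines() if line.strip()]


records = load_jsonl(LOG_EXCERPT)
eval_curve = [(r["current_steps"], r["eval_loss"]) for r in records if "eval_loss" in r]
train_points = [(r["current_steps"], r["loss"]) for r in records if "loss" in r]

best_step, best_loss = min(eval_curve, key=lambda point: point[1])
print(f"best eval_loss {best_loss:.4f} at step {best_step}")
print("training loss entries:", train_points)
```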

trainer_state.json (ADDED, @@ -0,0 +1,129 @@)

```json
{
  "best_metric": null,
  "best_model_checkpoint": null,
  "epoch": 0.08154943934760449,
  "eval_steps": 1,
  "global_step": 10,
  "is_hyper_param_search": false,
  "is_local_process_zero": true,
  "is_world_process_zero": true,
  "log_history": [
    {
      "epoch": 0.00815494393476045,
      "eval_loss": 2.0249228477478027,
      "eval_runtime": 6.8992,
      "eval_samples_per_second": 15.944,
      "eval_steps_per_second": 15.944,
      "step": 1
    },
    {
      "epoch": 0.0163098878695209,
      "eval_loss": 2.0154640674591064,
      "eval_runtime": 6.8384,
      "eval_samples_per_second": 16.086,
      "eval_steps_per_second": 16.086,
      "step": 2
    },
    {
      "epoch": 0.024464831804281346,
      "eval_loss": 1.9926271438598633,
      "eval_runtime": 6.8066,
      "eval_samples_per_second": 16.161,
      "eval_steps_per_second": 16.161,
      "step": 3
    },
    {
      "epoch": 0.0326197757390418,
      "eval_loss": 1.9593819379806519,
      "eval_runtime": 6.825,
      "eval_samples_per_second": 16.117,
      "eval_steps_per_second": 16.117,
      "step": 4
    },
    {
      "epoch": 0.040774719673802244,
      "eval_loss": 1.919368863105774,
      "eval_runtime": 6.7341,
      "eval_samples_per_second": 16.335,
      "eval_steps_per_second": 16.335,
      "step": 5
    },
    {
      "epoch": 0.04892966360856269,
      "eval_loss": 1.8850003480911255,
      "eval_runtime": 6.9546,
      "eval_samples_per_second": 15.817,
      "eval_steps_per_second": 15.817,
      "step": 6
    },
    {
      "epoch": 0.05708460754332314,
      "eval_loss": 1.8630123138427734,
      "eval_runtime": 6.5806,
      "eval_samples_per_second": 16.716,
      "eval_steps_per_second": 16.716,
      "step": 7
    },
    {
      "epoch": 0.0652395514780836,
      "eval_loss": 1.849714756011963,
      "eval_runtime": 6.796,
      "eval_samples_per_second": 16.186,
      "eval_steps_per_second": 16.186,
      "step": 8
    },
    {
      "epoch": 0.07339449541284404,
      "eval_loss": 1.8416643142700195,
      "eval_runtime": 6.7749,
      "eval_samples_per_second": 16.236,
      "eval_steps_per_second": 16.236,
      "step": 9
    },
    {
      "epoch": 0.08154943934760449,
      "grad_norm": 2.2355353832244873,
      "learning_rate": 0.0,
      "loss": 1.6809,
      "step": 10
    },
    {
      "epoch": 0.08154943934760449,
      "eval_loss": 1.8393741846084595,
      "eval_runtime": 6.8739,
      "eval_samples_per_second": 16.003,
      "eval_steps_per_second": 16.003,
      "step": 10
    },
    {
      "epoch": 0.08154943934760449,
      "step": 10,
      "total_flos": 124335445598208.0,
      "train_loss": 1.6808662414550781,
      "train_runtime": 109.4363,
      "train_samples_per_second": 0.731,
      "train_steps_per_second": 0.091
    }
  ],
  "logging_steps": 10,
  "max_steps": 10,
  "num_input_tokens_seen": 0,
  "num_train_epochs": 1,
  "save_steps": 1,
  "stateful_callbacks": {
    "TrainerControl": {
      "args": {
        "should_epoch_stop": false,
        "should_evaluate": false,
        "should_log": false,
        "should_save": true,
        "should_training_stop": true
      },
      "attributes": {}
    }
  },
  "total_flos": 124335445598208.0,
  "train_batch_size": 1,
  "trial_name": null,
  "trial_params": null
}
```
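The training dataset is listed as "None" in the README, but its approximate size can be inferred from the fractional `epoch` in trainer_state.json. The following sketch assumes the Trainer reports epoch progress as micro-batches consumed divided by micro-batches per epoch. It also assumes a single process, so with `train_batch_size: 1` each micro-batch is one example. Under those assumptions, 10 optimizer steps × 8 accumulation steps = 80 micro-batches. Dividing by the final epoch value gives about 981 examples per epoch, after whatever preprocessing the training framework applied. This is an estimate, not a figure recorded in the commit.

```python
# Values from trainer_state.json and the README hyperparameters.
FINAL_EPOCH = 0.08154943934760449
GLOBAL_STEP = 10
PER_DEVICE_BATCH = 1
GRAD_ACCUM = 8
NUM_PROCESSES = 1  # assumption: single-GPU run

# Assumption: epoch = micro-batches consumed / micro-batches per epoch.
micro_batches_seen = GLOBAL_STEP * GRAD_ACCUM
micro_batches_per_epoch = micro_batches_seen / FINAL_EPOCH

# Each micro-batch holds PER_DEVICE_BATCH examples on each of NUM_PROCESSES processes.
approx_train_examples = round(micro_batches_per_epoch) * PER_DEVICE_BATCH * NUM_PROCESSES

print(micro_batches_seen, round(micro_batches_per_epoch, 6), approx_train_examples)
```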

training_args.bin (ADDED, @@ -0,0 +1,3 @@)

```
version https://git-lfs.github.com/spec/v1
oid sha256:25089c0b7075d15d5b5a3525aaa9eed1438c9b00ad117d28a388d7a2aee836d1
size 5368
```

training_eval_loss.png (ADDED)

training_loss.png (ADDED)

vocab.json (ADDED): the diff for this file is too large to render.