---
license: agpl-3.0
datasets:
- stvlynn/Cantonese-Dialogue
language:
- zh
pipeline_tag: text-generation
tags:
- Cantonese
- 廣東話
- 粤语
---

# Qwen-7B-Chat-Cantonese (通义千问·粤语)

## Intro

Qwen-7B-Chat-Cantonese is a fine-tuned version of Qwen-7B-Chat, trained on a large amount of Cantonese data.

Also available on [ModelScope (魔搭社区)](https://www.modelscope.cn/models/stvlynn/Qwen-7B-Chat-Cantonese).

## Usage

### Requirements

* Python 3.8 and above
* PyTorch 1.12 and above (2.0 and above recommended)
* CUDA 11.4 and above recommended (for GPU users, flash-attention users, etc.)
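To check these minimums before installing anything else, a minimal sketch like the following can help. It assumes PyTorch is already installed. Note that `torch.version.cuda` reports the CUDA version PyTorch was built against, not the installed driver. The helper names (`version_at_least`, `check_environment`) are illustrative, not part of any library.

```python
import re
import sys
from typing import List, Tuple


def _parse(version: str) -> Tuple[int, ...]:
    """Turn '2.1.0+cu118' into (2, 1, 0), stopping at the first non-numeric part."""
    nums = []
    for piece in version.split("+")[0].split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        nums.append(int(match.group()))
    return tuple(nums)


def version_at_least(found: str, required: str) -> bool:
    """Numerically compare dotted versions, padding the shorter one with zeros."""
    a, b = _parse(found), _parse(required)
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a >= b


def check_environment() -> List[str]:
    """Return human-readable problems; an empty list means the minimums are met."""
    problems = []
    py = ".".join(str(part) for part in sys.version_info[:3])
    if not version_at_least(py, "3.8"):
        problems.append(f"Python {py} is older than 3.8")
    try:
        import torch
    except ImportError:
        problems.append("PyTorch is not installed")
        return problems
    if not version_at_least(torch.__version__, "1.12"):
        problems.append(f"PyTorch {torch.__version__} is older than 1.12 (2.0+ recommended)")
    # torch.version.cuda is None on CPU-only builds, so this only checks GPU builds.
    if torch.version.cuda and not version_at_least(torch.version.cuda, "11.4"):
        problems.append(f"CUDA {torch.version.cuda} is older than the recommended 11.4")
    return problems
```

Usage: `print(check_environment() or "All minimum requirements met")`.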

### Dependency

To run Qwen-7B-Chat-Cantonese, make sure the requirements above are met, then run the following pip command to install the dependencies.

```bash
pip install transformers==4.32.0 accelerate tiktoken einops scipy transformers_stream_generator==0.0.4 peft deepspeed
```

In addition, it is recommended to install the `flash-attention` library (**we support flash attention 2 now.**) for higher efficiency and lower memory usage.

```bash
git clone https://github.com/Dao-AILab/flash-attention
cd flash-attention && pip install .
```
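After installation, a quick way to confirm that the packages above can be found is sketched below. It uses only the standard library (`importlib.util.find_spec` and `importlib.metadata.version`) and does not import the heavy packages themselves. The module names are the import names of the pip packages listed above (`flash_attn` for flash-attention); the helper functions are hypothetical.

```python
import importlib.util
from importlib.metadata import PackageNotFoundError, version
from typing import Iterable, List, Optional

# Import names (not pip names) of the packages from the pip command above.
REQUIRED_MODULES = [
    "transformers", "accelerate", "tiktoken", "einops", "scipy",
    "transformers_stream_generator", "peft", "deepspeed",
]
# flash-attention is optional; its import name is flash_attn.
OPTIONAL_MODULES = ["flash_attn"]


def missing_modules(names: Iterable[str]) -> List[str]:
    """Return the modules that cannot be located, without importing them."""
    return [name for name in names if importlib.util.find_spec(name) is None]


def installed_version(distribution: str) -> Optional[str]:
    """Return the installed version of a pip distribution, or None if absent."""
    try:
        return version(distribution)
    except PackageNotFoundError:
        return None


def report() -> None:
    print("Missing required:", missing_modules(REQUIRED_MODULES) or "none")
    print("Missing optional:", missing_modules(OPTIONAL_MODULES) or "none")
    # The pip command above pins transformers to 4.32.0.
    print("transformers version:", installed_version("transformers"))
```

Call `report()` after running the install commands.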

### Quickstart

Please refer to the [Quickstart](https://github.com/QwenLM/Qwen?tab=readme-ov-file#quickstart) section of the QwenLM/Qwen repository.
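The upstream Quickstart loads Qwen chat models through `transformers` with `trust_remote_code=True` and then calls the model's `chat` method. Below is a minimal sketch adapted to this model. It assumes the Hugging Face repo ID matches the ModelScope ID (`stvlynn/Qwen-7B-Chat-Cantonese`) and that the fine-tuned checkpoint keeps Qwen's remote `chat` method. `load_model` is a hypothetical helper name.

```python
# Assumed Hugging Face repo ID (mirrors the ModelScope ID linked above).
MODEL_ID = "stvlynn/Qwen-7B-Chat-Cantonese"


def load_model(model_id: str = MODEL_ID):
    """Hypothetical helper: load the tokenizer and model with Qwen's remote code."""
    # Imported here so this file can be imported without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id, trust_remote_code=True)
    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        device_map="auto",        # needs accelerate (installed above)
        trust_remote_code=True,   # Qwen ships its own modeling code
    ).eval()
    return tokenizer, model


# Example usage (downloads the full 7B checkpoint; a GPU is strongly advised):
#   tokenizer, model = load_model()
#   response, history = model.chat(tokenizer, "你係邊個？", history=None)  # "Who are you?"
#   print(response)
```

Passing the returned `history` back into the next `model.chat` call continues the conversation.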

## Training Parameters

| Parameter                   | Description                                                  | Value  |
|-----------------------------|--------------------------------------------------------------|--------|
| Learning Rate               | AdamW optimizer learning rate                                | 7e-5   |
| Weight Decay                | Regularization strength                                      | 0.8    |
| Gamma                       | Learning rate decay factor                                   | 1.0    |
| Batch Size                  | Number of samples per batch                                  | 1000   |
| Precision                   | Floating-point precision                                     | fp16   |
| Learning Policy             | Learning rate schedule                                       | cosine |
| Warmup Steps                | Initial steps over which the learning rate is ramped up      | 0      |
| Total Steps                 | Total training steps                                         | 1024   |
| Gradient Accumulation Steps | Number of steps to accumulate gradients before each update   | 8      |
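To illustrate how the cosine policy, zero warmup steps, and 1024 total steps combine, here is a sketch of a generic linear-warmup plus cosine-decay schedule using the values from the table. It assumes the rate decays to 0 at the final step (the card does not state a floor) and does not model the Gamma parameter; the trainer actually used may differ in these details.

```python
import math

# Values from the Training Parameters table.
PEAK_LR = 7e-5
TOTAL_STEPS = 1024
WARMUP_STEPS = 0


def cosine_lr(step: int, peak_lr: float = PEAK_LR, total_steps: int = TOTAL_STEPS,
              warmup_steps: int = WARMUP_STEPS, min_lr: float = 0.0) -> float:
    """Learning rate at an optimizer step under linear warmup + cosine decay.

    Assumption: the rate decays to min_lr (0 by default) at total_steps.
    """
    if warmup_steps > 0 and step < warmup_steps:
        # Linear ramp that reaches peak_lr on the last warmup step.
        return peak_lr * (step + 1) / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    progress = min(max(progress, 0.0), 1.0)
    return min_lr + 0.5 * (peak_lr - min_lr) * (1.0 + math.cos(math.pi * progress))
```

With no warmup, the rate starts at the full 7e-5, passes through half of that (3.5e-5) at step 512, and reaches 0 at step 1024.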

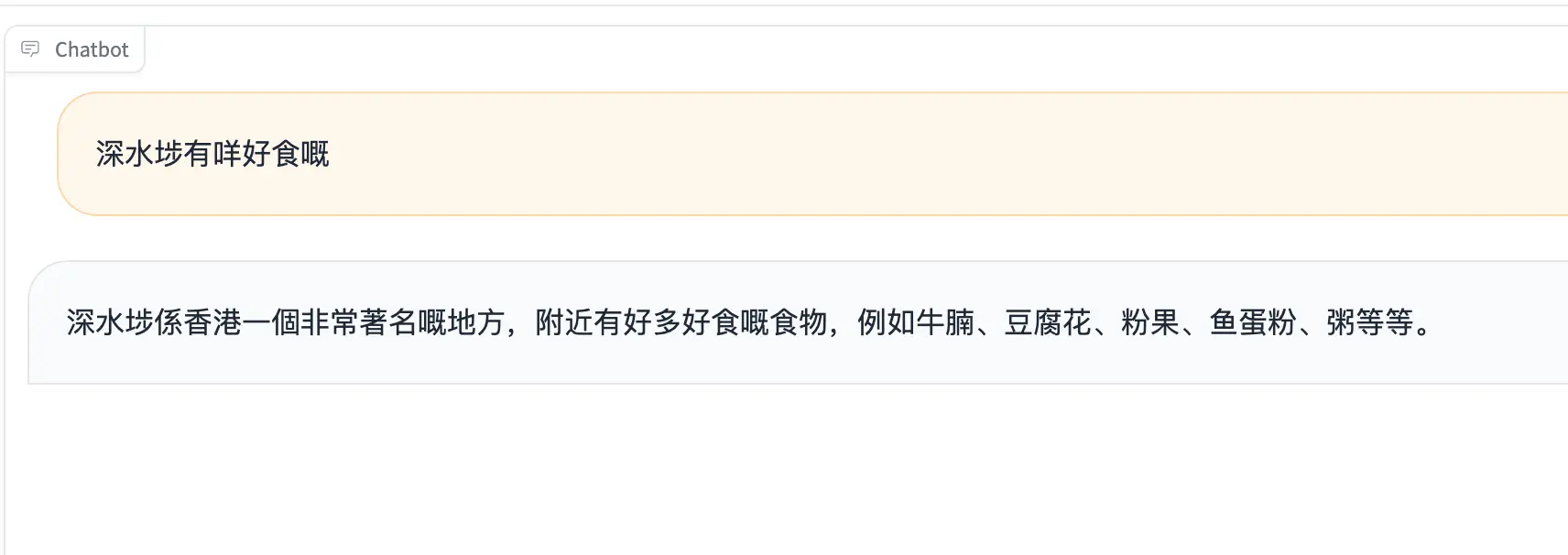

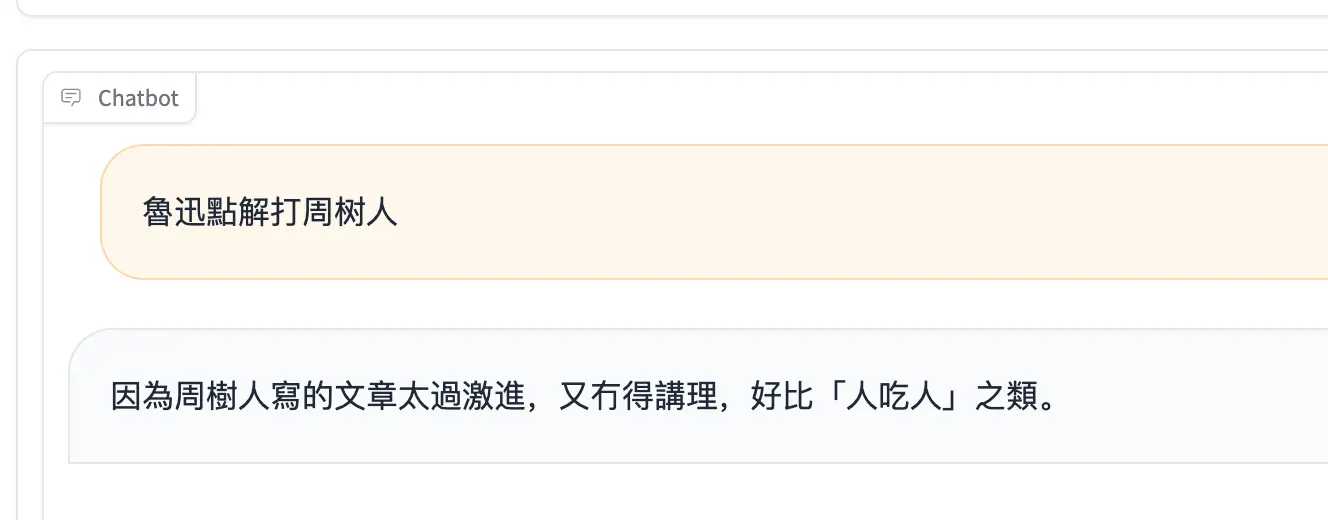

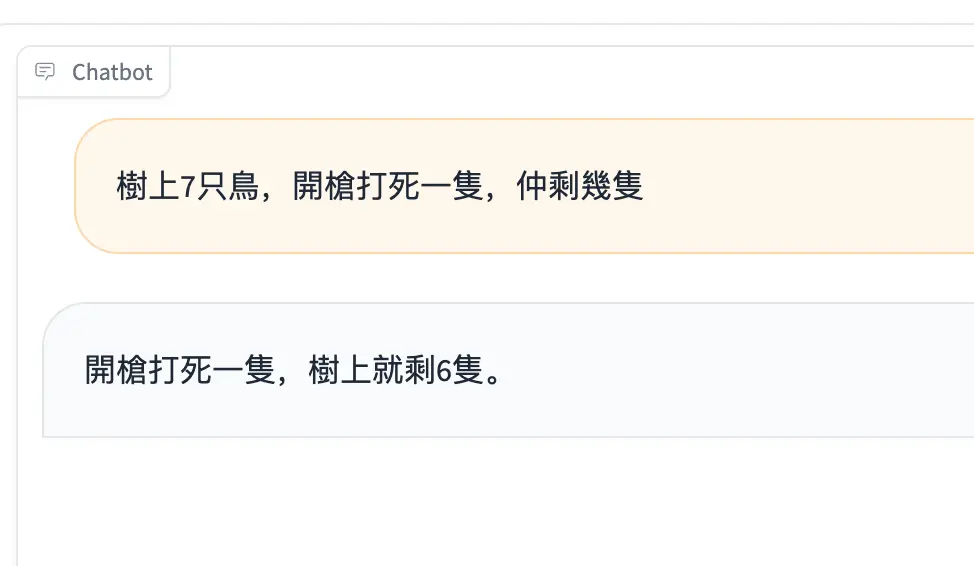

## Demo

## Special Note

This is my first LLM fine-tuning project. Please forgive any mistakes.

If you have any questions or suggestions, feel free to contact me.

[Twitter @stv_lynn](https://x.com/stv_lynn)

[Telegram @stvlynn](https://t.me/stvlynn)

[Email [email protected]](mailto:[email protected])