Spaces:

Runtime error

Runtime error

Upload 21 files

Browse files- .gitattributes +5 -0

- open-oasis-master/.gitattributes +1 -0

- open-oasis-master/LICENSE +21 -0

- open-oasis-master/README.md +37 -0

- open-oasis-master/attention.py +137 -0

- open-oasis-master/dit.py +310 -0

- open-oasis-master/embeddings.py +103 -0

- open-oasis-master/generate.py +119 -0

- open-oasis-master/media/arch.png +0 -0

- open-oasis-master/media/sample_0.gif +3 -0

- open-oasis-master/media/sample_1.gif +3 -0

- open-oasis-master/media/thumb.png +0 -0

- open-oasis-master/requirements.txt +31 -0

- open-oasis-master/rotary_embedding_torch.py +316 -0

- open-oasis-master/sample_data/Player729-f153ac423f61-20210806-224813.chunk_000.actions.pt +3 -0

- open-oasis-master/sample_data/Player729-f153ac423f61-20210806-224813.chunk_000.mp4 +3 -0

- open-oasis-master/sample_data/snippy-chartreuse-mastiff-f79998db196d-20220401-224517.chunk_001.actions.pt +3 -0

- open-oasis-master/sample_data/snippy-chartreuse-mastiff-f79998db196d-20220401-224517.chunk_001.mp4 +3 -0

- open-oasis-master/sample_data/treechop-f153ac423f61-20210916-183423.chunk_000.actions.pt +3 -0

- open-oasis-master/sample_data/treechop-f153ac423f61-20210916-183423.chunk_000.mp4 +3 -0

- open-oasis-master/utils.py +82 -0

- open-oasis-master/vae.py +381 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,8 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

open-oasis-master/media/sample_0.gif filter=lfs diff=lfs merge=lfs -text

|

| 37 |

+

open-oasis-master/media/sample_1.gif filter=lfs diff=lfs merge=lfs -text

|

| 38 |

+

open-oasis-master/sample_data/Player729-f153ac423f61-20210806-224813.chunk_000.mp4 filter=lfs diff=lfs merge=lfs -text

|

| 39 |

+

open-oasis-master/sample_data/snippy-chartreuse-mastiff-f79998db196d-20220401-224517.chunk_001.mp4 filter=lfs diff=lfs merge=lfs -text

|

| 40 |

+

open-oasis-master/sample_data/treechop-f153ac423f61-20210916-183423.chunk_000.mp4 filter=lfs diff=lfs merge=lfs -text

|

open-oasis-master/.gitattributes

ADDED

|

@@ -0,0 +1 @@

|

|

|

|

|

|

|

| 1 |

+

video.mp4 filter=lfs diff=lfs merge=lfs -text

|

open-oasis-master/LICENSE

ADDED

|

@@ -0,0 +1,21 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

MIT License

|

| 2 |

+

|

| 3 |

+

Copyright (c) 2024 Etched & Decart

|

| 4 |

+

|

| 5 |

+

Permission is hereby granted, free of charge, to any person obtaining a copy

|

| 6 |

+

of this software and associated documentation files (the "Software"), to deal

|

| 7 |

+

in the Software without restriction, including without limitation the rights

|

| 8 |

+

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

| 9 |

+

copies of the Software, and to permit persons to whom the Software is

|

| 10 |

+

furnished to do so, subject to the following conditions:

|

| 11 |

+

|

| 12 |

+

The above copyright notice and this permission notice shall be included in all

|

| 13 |

+

copies or substantial portions of the Software.

|

| 14 |

+

|

| 15 |

+

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

| 16 |

+

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

| 17 |

+

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

| 18 |

+

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

| 19 |

+

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

| 20 |

+

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

| 21 |

+

SOFTWARE.

|

open-oasis-master/README.md

ADDED

|

@@ -0,0 +1,37 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Oasis 500M

|

| 2 |

+

|

| 3 |

+

|

| 4 |

+

|

| 5 |

+

|

| 6 |

+

|

| 7 |

+

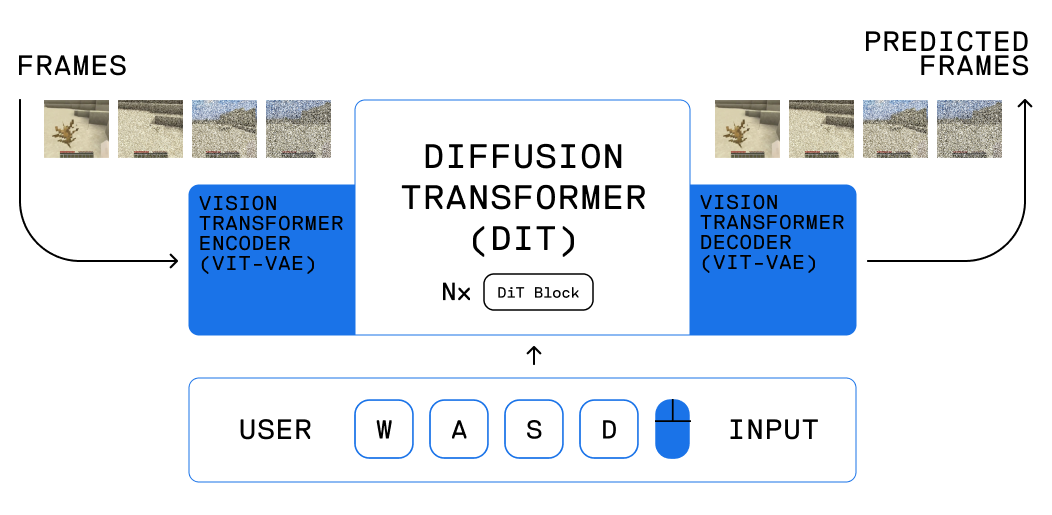

Oasis is an interactive world model developed by [Decart](https://www.decart.ai/) and [Etched](https://www.etched.com/). Based on diffusion transformers, Oasis takes in user keyboard input and generates gameplay in an autoregressive manner. We release the weights for Oasis 500M, a downscaled version of the model, along with inference code for action-conditional frame generation.

|

| 8 |

+

|

| 9 |

+

For more details, see our [joint blog post](https://oasis-model.github.io/) to learn more.

|

| 10 |

+

|

| 11 |

+

And to use the most powerful version of the model, be sure to check out the [live demo](https://oasis.us.decart.ai/) as well!

|

| 12 |

+

|

| 13 |

+

## Setup

|

| 14 |

+

```

|

| 15 |

+

git clone https://github.com/etched-ai/open-oasis.git

|

| 16 |

+

cd open-oasis

|

| 17 |

+

pip install -r requirements.txt

|

| 18 |

+

```

|

| 19 |

+

|

| 20 |

+

## Download the model weights

|

| 21 |

+

```

|

| 22 |

+

huggingface-cli login

|

| 23 |

+

huggingface-cli download Etched/oasis-500m oasis500m.pt # DiT checkpoint

|

| 24 |

+

huggingface-cli download Etched/oasis-500m vit-l-20.pt # ViT VAE checkpoint

|

| 25 |

+

```

|

| 26 |

+

|

| 27 |

+

## Basic Usage

|

| 28 |

+

We include a basic inference script that loads a prompt frame from a video and generates additional frames conditioned on actions.

|

| 29 |

+

```

|

| 30 |

+

python generate.py

|

| 31 |

+

```

|

| 32 |

+

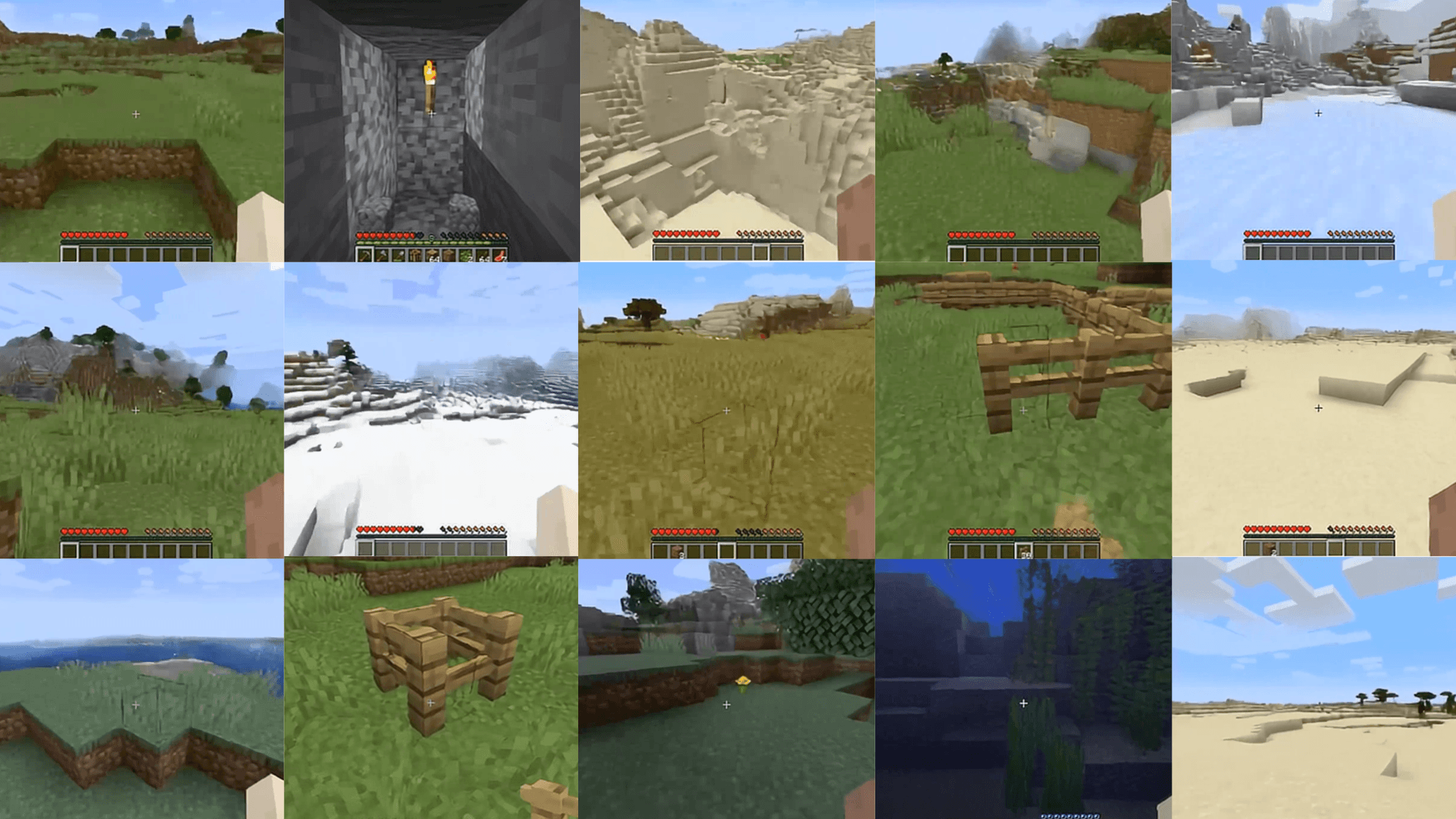

The resulting video will be saved to `video.mp4`. Here's are some examples of a generation from this 500M model!

|

| 33 |

+

|

| 34 |

+

|

| 35 |

+

|

| 36 |

+

|

| 37 |

+

> Hint: try swapping out the `.mp4` input file in the script to try different environments!

|

open-oasis-master/attention.py

ADDED

|

@@ -0,0 +1,137 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

"""

|

| 2 |

+

Based on https://github.com/buoyancy99/diffusion-forcing/blob/main/algorithms/diffusion_forcing/models/attention.py

|

| 3 |

+

"""

|

| 4 |

+

from typing import Optional

|

| 5 |

+

from collections import namedtuple

|

| 6 |

+

import torch

|

| 7 |

+

from torch import nn

|

| 8 |

+

from torch.nn import functional as F

|

| 9 |

+

from einops import rearrange

|

| 10 |

+

from rotary_embedding_torch import RotaryEmbedding, apply_rotary_emb

|

| 11 |

+

from embeddings import TimestepEmbedding, Timesteps, Positions2d

|

| 12 |

+

|

| 13 |

+

class TemporalAxialAttention(nn.Module):

|

| 14 |

+

def __init__(

|

| 15 |

+

self,

|

| 16 |

+

dim: int,

|

| 17 |

+

heads: int = 4,

|

| 18 |

+

dim_head: int = 32,

|

| 19 |

+

is_causal: bool = True,

|

| 20 |

+

rotary_emb: Optional[RotaryEmbedding] = None,

|

| 21 |

+

):

|

| 22 |

+

super().__init__()

|

| 23 |

+

self.inner_dim = dim_head * heads

|

| 24 |

+

self.heads = heads

|

| 25 |

+

self.head_dim = dim_head

|

| 26 |

+

self.inner_dim = dim_head * heads

|

| 27 |

+

self.to_qkv = nn.Linear(dim, self.inner_dim * 3, bias=False)

|

| 28 |

+

self.to_out = nn.Linear(self.inner_dim, dim)

|

| 29 |

+

|

| 30 |

+

self.rotary_emb = rotary_emb

|

| 31 |

+

self.time_pos_embedding = (

|

| 32 |

+

nn.Sequential(

|

| 33 |

+

Timesteps(dim),

|

| 34 |

+

TimestepEmbedding(in_channels=dim, time_embed_dim=dim * 4, out_dim=dim),

|

| 35 |

+

)

|

| 36 |

+

if rotary_emb is None

|

| 37 |

+

else None

|

| 38 |

+

)

|

| 39 |

+

self.is_causal = is_causal

|

| 40 |

+

|

| 41 |

+

def forward(self, x: torch.Tensor):

|

| 42 |

+

B, T, H, W, D = x.shape

|

| 43 |

+

|

| 44 |

+

if self.time_pos_embedding is not None:

|

| 45 |

+

time_emb = self.time_pos_embedding(

|

| 46 |

+

torch.arange(T, device=x.device)

|

| 47 |

+

)

|

| 48 |

+

x = x + rearrange(time_emb, "t d -> 1 t 1 1 d")

|

| 49 |

+

|

| 50 |

+

q, k, v = self.to_qkv(x).chunk(3, dim=-1)

|

| 51 |

+

|

| 52 |

+

q = rearrange(q, "B T H W (h d) -> (B H W) h T d", h=self.heads)

|

| 53 |

+

k = rearrange(k, "B T H W (h d) -> (B H W) h T d", h=self.heads)

|

| 54 |

+

v = rearrange(v, "B T H W (h d) -> (B H W) h T d", h=self.heads)

|

| 55 |

+

|

| 56 |

+

if self.rotary_emb is not None:

|

| 57 |

+

q = self.rotary_emb.rotate_queries_or_keys(q, self.rotary_emb.freqs)

|

| 58 |

+

k = self.rotary_emb.rotate_queries_or_keys(k, self.rotary_emb.freqs)

|

| 59 |

+

|

| 60 |

+

q, k, v = map(lambda t: t.contiguous(), (q, k, v))

|

| 61 |

+

|

| 62 |

+

x = F.scaled_dot_product_attention(

|

| 63 |

+

query=q, key=k, value=v, is_causal=self.is_causal

|

| 64 |

+

)

|

| 65 |

+

|

| 66 |

+

x = rearrange(x, "(B H W) h T d -> B T H W (h d)", B=B, H=H, W=W)

|

| 67 |

+

x = x.to(q.dtype)

|

| 68 |

+

|

| 69 |

+

# linear proj

|

| 70 |

+

x = self.to_out(x)

|

| 71 |

+

return x

|

| 72 |

+

|

| 73 |

+

class SpatialAxialAttention(nn.Module):

|

| 74 |

+

def __init__(

|

| 75 |

+

self,

|

| 76 |

+

dim: int,

|

| 77 |

+

heads: int = 4,

|

| 78 |

+

dim_head: int = 32,

|

| 79 |

+

rotary_emb: Optional[RotaryEmbedding] = None,

|

| 80 |

+

):

|

| 81 |

+

super().__init__()

|

| 82 |

+

self.inner_dim = dim_head * heads

|

| 83 |

+

self.heads = heads

|

| 84 |

+

self.head_dim = dim_head

|

| 85 |

+

self.inner_dim = dim_head * heads

|

| 86 |

+

self.to_qkv = nn.Linear(dim, self.inner_dim * 3, bias=False)

|

| 87 |

+

self.to_out = nn.Linear(self.inner_dim, dim)

|

| 88 |

+

|

| 89 |

+

self.rotary_emb = rotary_emb

|

| 90 |

+

self.space_pos_embedding = (

|

| 91 |

+

nn.Sequential(

|

| 92 |

+

Positions2d(dim),

|

| 93 |

+

TimestepEmbedding(in_channels=dim, time_embed_dim=dim * 4, out_dim=dim),

|

| 94 |

+

)

|

| 95 |

+

if rotary_emb is None

|

| 96 |

+

else None

|

| 97 |

+

)

|

| 98 |

+

|

| 99 |

+

def forward(self, x: torch.Tensor):

|

| 100 |

+

B, T, H, W, D = x.shape

|

| 101 |

+

|

| 102 |

+

if self.space_pos_embedding is not None:

|

| 103 |

+

h_steps = torch.arange(H, device=x.device)

|

| 104 |

+

w_steps = torch.arange(W, device=x.device)

|

| 105 |

+

grid = torch.meshgrid(h_steps, w_steps, indexing="ij")

|

| 106 |

+

space_emb = self.space_pos_embedding(grid)

|

| 107 |

+

x = x + rearrange(space_emb, "h w d -> 1 1 h w d")

|

| 108 |

+

|

| 109 |

+

q, k, v = self.to_qkv(x).chunk(3, dim=-1)

|

| 110 |

+

|

| 111 |

+

q = rearrange(q, "B T H W (h d) -> (B T) h H W d", h=self.heads)

|

| 112 |

+

k = rearrange(k, "B T H W (h d) -> (B T) h H W d", h=self.heads)

|

| 113 |

+

v = rearrange(v, "B T H W (h d) -> (B T) h H W d", h=self.heads)

|

| 114 |

+

|

| 115 |

+

if self.rotary_emb is not None:

|

| 116 |

+

freqs = self.rotary_emb.get_axial_freqs(H, W)

|

| 117 |

+

q = apply_rotary_emb(freqs, q)

|

| 118 |

+

k = apply_rotary_emb(freqs, k)

|

| 119 |

+

|

| 120 |

+

# prepare for attn

|

| 121 |

+

q = rearrange(q, "(B T) h H W d -> (B T) h (H W) d", B=B, T=T, h=self.heads)

|

| 122 |

+

k = rearrange(k, "(B T) h H W d -> (B T) h (H W) d", B=B, T=T, h=self.heads)

|

| 123 |

+

v = rearrange(v, "(B T) h H W d -> (B T) h (H W) d", B=B, T=T, h=self.heads)

|

| 124 |

+

|

| 125 |

+

q, k, v = map(lambda t: t.contiguous(), (q, k, v))

|

| 126 |

+

|

| 127 |

+

x = F.scaled_dot_product_attention(

|

| 128 |

+

query=q, key=k, value=v, is_causal=False

|

| 129 |

+

)

|

| 130 |

+

|

| 131 |

+

x = rearrange(x, "(B T) h (H W) d -> B T H W (h d)", B=B, H=H, W=W)

|

| 132 |

+

x = x.to(q.dtype)

|

| 133 |

+

|

| 134 |

+

# linear proj

|

| 135 |

+

x = self.to_out(x)

|

| 136 |

+

return x

|

| 137 |

+

|

open-oasis-master/dit.py

ADDED

|

@@ -0,0 +1,310 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

"""

|

| 2 |

+

References:

|

| 3 |

+

- DiT: https://github.com/facebookresearch/DiT/blob/main/models.py

|

| 4 |

+

- Diffusion Forcing: https://github.com/buoyancy99/diffusion-forcing/blob/main/algorithms/diffusion_forcing/models/unet3d.py

|

| 5 |

+

- Latte: https://github.com/Vchitect/Latte/blob/main/models/latte.py

|

| 6 |

+

"""

|

| 7 |

+

from typing import Optional, Literal

|

| 8 |

+

import torch

|

| 9 |

+

from torch import nn

|

| 10 |

+

from rotary_embedding_torch import RotaryEmbedding

|

| 11 |

+

from einops import rearrange

|

| 12 |

+

from embeddings import Timesteps, TimestepEmbedding

|

| 13 |

+

from attention import SpatialAxialAttention, TemporalAxialAttention

|

| 14 |

+

from timm.models.vision_transformer import Mlp

|

| 15 |

+

from timm.layers.helpers import to_2tuple

|

| 16 |

+

import math

|

| 17 |

+

|

| 18 |

+

def modulate(x, shift, scale):

|

| 19 |

+

fixed_dims = [1] * len(shift.shape[1:])

|

| 20 |

+

shift = shift.repeat(x.shape[0] // shift.shape[0], *fixed_dims)

|

| 21 |

+

scale = scale.repeat(x.shape[0] // scale.shape[0], *fixed_dims)

|

| 22 |

+

while shift.dim() < x.dim():

|

| 23 |

+

shift = shift.unsqueeze(-2)

|

| 24 |

+

scale = scale.unsqueeze(-2)

|

| 25 |

+

return x * (1 + scale) + shift

|

| 26 |

+

|

| 27 |

+

def gate(x, g):

|

| 28 |

+

fixed_dims = [1] * len(g.shape[1:])

|

| 29 |

+

g = g.repeat(x.shape[0] // g.shape[0], *fixed_dims)

|

| 30 |

+

while g.dim() < x.dim():

|

| 31 |

+

g = g.unsqueeze(-2)

|

| 32 |

+

return g * x

|

| 33 |

+

|

| 34 |

+

class PatchEmbed(nn.Module):

|

| 35 |

+

"""2D Image to Patch Embedding"""

|

| 36 |

+

|

| 37 |

+

def __init__(

|

| 38 |

+

self,

|

| 39 |

+

img_height=256,

|

| 40 |

+

img_width=256,

|

| 41 |

+

patch_size=16,

|

| 42 |

+

in_chans=3,

|

| 43 |

+

embed_dim=768,

|

| 44 |

+

norm_layer=None,

|

| 45 |

+

flatten=True,

|

| 46 |

+

):

|

| 47 |

+

super().__init__()

|

| 48 |

+

img_size = (img_height, img_width)

|

| 49 |

+

patch_size = to_2tuple(patch_size)

|

| 50 |

+

self.img_size = img_size

|

| 51 |

+

self.patch_size = patch_size

|

| 52 |

+

self.grid_size = (img_size[0] // patch_size[0], img_size[1] // patch_size[1])

|

| 53 |

+

self.num_patches = self.grid_size[0] * self.grid_size[1]

|

| 54 |

+

self.flatten = flatten

|

| 55 |

+

|

| 56 |

+

self.proj = nn.Conv2d(

|

| 57 |

+

in_chans, embed_dim, kernel_size=patch_size, stride=patch_size

|

| 58 |

+

)

|

| 59 |

+

self.norm = norm_layer(embed_dim) if norm_layer else nn.Identity()

|

| 60 |

+

|

| 61 |

+

def forward(self, x, random_sample=False):

|

| 62 |

+

B, C, H, W = x.shape

|

| 63 |

+

assert random_sample or (

|

| 64 |

+

H == self.img_size[0] and W == self.img_size[1]

|

| 65 |

+

), f"Input image size ({H}*{W}) doesn't match model ({self.img_size[0]}*{self.img_size[1]})."

|

| 66 |

+

x = self.proj(x)

|

| 67 |

+

if self.flatten:

|

| 68 |

+

x = rearrange(x, "B C H W -> B (H W) C")

|

| 69 |

+

else:

|

| 70 |

+

x = rearrange(x, "B C H W -> B H W C")

|

| 71 |

+

x = self.norm(x)

|

| 72 |

+

return x

|

| 73 |

+

|

| 74 |

+

class TimestepEmbedder(nn.Module):

|

| 75 |

+

"""

|

| 76 |

+

Embeds scalar timesteps into vector representations.

|

| 77 |

+

"""

|

| 78 |

+

def __init__(self, hidden_size, frequency_embedding_size=256):

|

| 79 |

+

super().__init__()

|

| 80 |

+

self.mlp = nn.Sequential(

|

| 81 |

+

nn.Linear(frequency_embedding_size, hidden_size, bias=True), # hidden_size is diffusion model hidden size

|

| 82 |

+

nn.SiLU(),

|

| 83 |

+

nn.Linear(hidden_size, hidden_size, bias=True),

|

| 84 |

+

)

|

| 85 |

+

self.frequency_embedding_size = frequency_embedding_size

|

| 86 |

+

|

| 87 |

+

@staticmethod

|

| 88 |

+

def timestep_embedding(t, dim, max_period=10000):

|

| 89 |

+

"""

|

| 90 |

+

Create sinusoidal timestep embeddings.

|

| 91 |

+

:param t: a 1-D Tensor of N indices, one per batch element.

|

| 92 |

+

These may be fractional.

|

| 93 |

+

:param dim: the dimension of the output.

|

| 94 |

+

:param max_period: controls the minimum frequency of the embeddings.

|

| 95 |

+

:return: an (N, D) Tensor of positional embeddings.

|

| 96 |

+

"""

|

| 97 |

+

# https://github.com/openai/glide-text2im/blob/main/glide_text2im/nn.py

|

| 98 |

+

half = dim // 2

|

| 99 |

+

freqs = torch.exp(

|

| 100 |

+

-math.log(max_period) * torch.arange(start=0, end=half, dtype=torch.float32) / half

|

| 101 |

+

).to(device=t.device)

|

| 102 |

+

args = t[:, None].float() * freqs[None]

|

| 103 |

+

embedding = torch.cat([torch.cos(args), torch.sin(args)], dim=-1)

|

| 104 |

+

if dim % 2:

|

| 105 |

+

embedding = torch.cat([embedding, torch.zeros_like(embedding[:, :1])], dim=-1)

|

| 106 |

+

return embedding

|

| 107 |

+

|

| 108 |

+

def forward(self, t):

|

| 109 |

+

t_freq = self.timestep_embedding(t, self.frequency_embedding_size)

|

| 110 |

+

t_emb = self.mlp(t_freq)

|

| 111 |

+

return t_emb

|

| 112 |

+

|

| 113 |

+

class FinalLayer(nn.Module):

|

| 114 |

+

"""

|

| 115 |

+

The final layer of DiT.

|

| 116 |

+

"""

|

| 117 |

+

def __init__(self, hidden_size, patch_size, out_channels):

|

| 118 |

+

super().__init__()

|

| 119 |

+

self.norm_final = nn.LayerNorm(hidden_size, elementwise_affine=False, eps=1e-6)

|

| 120 |

+

self.linear = nn.Linear(hidden_size, patch_size * patch_size * out_channels, bias=True)

|

| 121 |

+

self.adaLN_modulation = nn.Sequential(

|

| 122 |

+

nn.SiLU(),

|

| 123 |

+

nn.Linear(hidden_size, 2 * hidden_size, bias=True)

|

| 124 |

+

)

|

| 125 |

+

|

| 126 |

+

def forward(self, x, c):

|

| 127 |

+

shift, scale = self.adaLN_modulation(c).chunk(2, dim=-1)

|

| 128 |

+

x = modulate(self.norm_final(x), shift, scale)

|

| 129 |

+

x = self.linear(x)

|

| 130 |

+

return x

|

| 131 |

+

|

| 132 |

+

class SpatioTemporalDiTBlock(nn.Module):

|

| 133 |

+

def __init__(self, hidden_size, num_heads, mlp_ratio=4.0, is_causal=True, spatial_rotary_emb: Optional[RotaryEmbedding] = None, temporal_rotary_emb: Optional[RotaryEmbedding] = None):

|

| 134 |

+

super().__init__()

|

| 135 |

+

self.is_causal = is_causal

|

| 136 |

+

mlp_hidden_dim = int(hidden_size * mlp_ratio)

|

| 137 |

+

approx_gelu = lambda: nn.GELU(approximate="tanh")

|

| 138 |

+

|

| 139 |

+

self.s_norm1 = nn.LayerNorm(hidden_size, elementwise_affine=False, eps=1e-6)

|

| 140 |

+

self.s_attn = SpatialAxialAttention(hidden_size, heads=num_heads, dim_head=hidden_size // num_heads, rotary_emb=spatial_rotary_emb)

|

| 141 |

+

self.s_norm2 = nn.LayerNorm(hidden_size, elementwise_affine=False, eps=1e-6)

|

| 142 |

+

self.s_mlp = Mlp(in_features=hidden_size, hidden_features=mlp_hidden_dim, act_layer=approx_gelu, drop=0)

|

| 143 |

+

self.s_adaLN_modulation = nn.Sequential(

|

| 144 |

+

nn.SiLU(),

|

| 145 |

+

nn.Linear(hidden_size, 6 * hidden_size, bias=True)

|

| 146 |

+

)

|

| 147 |

+

|

| 148 |

+

self.t_norm1 = nn.LayerNorm(hidden_size, elementwise_affine=False, eps=1e-6)

|

| 149 |

+

self.t_attn = TemporalAxialAttention(hidden_size, heads=num_heads, dim_head=hidden_size // num_heads, is_causal=is_causal, rotary_emb=temporal_rotary_emb)

|

| 150 |

+

self.t_norm2 = nn.LayerNorm(hidden_size, elementwise_affine=False, eps=1e-6)

|

| 151 |

+

self.t_mlp = Mlp(in_features=hidden_size, hidden_features=mlp_hidden_dim, act_layer=approx_gelu, drop=0)

|

| 152 |

+

self.t_adaLN_modulation = nn.Sequential(

|

| 153 |

+

nn.SiLU(),

|

| 154 |

+

nn.Linear(hidden_size, 6 * hidden_size, bias=True)

|

| 155 |

+

)

|

| 156 |

+

|

| 157 |

+

def forward(self, x, c):

|

| 158 |

+

B, T, H, W, D = x.shape

|

| 159 |

+

|

| 160 |

+

# spatial block

|

| 161 |

+

s_shift_msa, s_scale_msa, s_gate_msa, s_shift_mlp, s_scale_mlp, s_gate_mlp = self.s_adaLN_modulation(c).chunk(6, dim=-1)

|

| 162 |

+

x = x + gate(self.s_attn(modulate(self.s_norm1(x), s_shift_msa, s_scale_msa)), s_gate_msa)

|

| 163 |

+

x = x + gate(self.s_mlp(modulate(self.s_norm2(x), s_shift_mlp, s_scale_mlp)), s_gate_mlp)

|

| 164 |

+

|

| 165 |

+

# temporal block

|

| 166 |

+

t_shift_msa, t_scale_msa, t_gate_msa, t_shift_mlp, t_scale_mlp, t_gate_mlp = self.t_adaLN_modulation(c).chunk(6, dim=-1)

|

| 167 |

+

x = x + gate(self.t_attn(modulate(self.t_norm1(x), t_shift_msa, t_scale_msa)), t_gate_msa)

|

| 168 |

+

x = x + gate(self.t_mlp(modulate(self.t_norm2(x), t_shift_mlp, t_scale_mlp)), t_gate_mlp)

|

| 169 |

+

|

| 170 |

+

return x

|

| 171 |

+

|

| 172 |

+

class DiT(nn.Module):

|

| 173 |

+

"""

|

| 174 |

+

Diffusion model with a Transformer backbone.

|

| 175 |

+

"""

|

| 176 |

+

def __init__(

|

| 177 |

+

self,

|

| 178 |

+

input_h=18,

|

| 179 |

+

input_w=32,

|

| 180 |

+

patch_size=2,

|

| 181 |

+

in_channels=16,

|

| 182 |

+

hidden_size=1024,

|

| 183 |

+

depth=12,

|

| 184 |

+

num_heads=16,

|

| 185 |

+

mlp_ratio=4.0,

|

| 186 |

+

external_cond_dim=25,

|

| 187 |

+

max_frames=32,

|

| 188 |

+

):

|

| 189 |

+

super().__init__()

|

| 190 |

+

self.in_channels = in_channels

|

| 191 |

+

self.out_channels = in_channels

|

| 192 |

+

self.patch_size = patch_size

|

| 193 |

+

self.num_heads = num_heads

|

| 194 |

+

self.max_frames = max_frames

|

| 195 |

+

|

| 196 |

+

self.x_embedder = PatchEmbed(input_h, input_w, patch_size, in_channels, hidden_size, flatten=False)

|

| 197 |

+

self.t_embedder = TimestepEmbedder(hidden_size)

|

| 198 |

+

frame_h, frame_w = self.x_embedder.grid_size

|

| 199 |

+

|

| 200 |

+

self.spatial_rotary_emb = RotaryEmbedding(dim=hidden_size // num_heads // 2, freqs_for="pixel", max_freq=256)

|

| 201 |

+

self.temporal_rotary_emb = RotaryEmbedding(dim=hidden_size // num_heads)

|

| 202 |

+

self.external_cond = nn.Linear(external_cond_dim, hidden_size) if external_cond_dim > 0 else nn.Identity()

|

| 203 |

+

|

| 204 |

+

self.blocks = nn.ModuleList(

|

| 205 |

+

[

|

| 206 |

+

SpatioTemporalDiTBlock(

|

| 207 |

+

hidden_size,

|

| 208 |

+

num_heads,

|

| 209 |

+

mlp_ratio=mlp_ratio,

|

| 210 |

+

is_causal=True,

|

| 211 |

+

spatial_rotary_emb=self.spatial_rotary_emb,

|

| 212 |

+

temporal_rotary_emb=self.temporal_rotary_emb,

|

| 213 |

+

)

|

| 214 |

+

for _ in range(depth)

|

| 215 |

+

]

|

| 216 |

+

)

|

| 217 |

+

|

| 218 |

+

self.final_layer = FinalLayer(hidden_size, patch_size, self.out_channels)

|

| 219 |

+

self.initialize_weights()

|

| 220 |

+

|

| 221 |

+

def initialize_weights(self):

|

| 222 |

+

# Initialize transformer layers:

|

| 223 |

+

def _basic_init(module):

|

| 224 |

+

if isinstance(module, nn.Linear):

|

| 225 |

+

torch.nn.init.xavier_uniform_(module.weight)

|

| 226 |

+

if module.bias is not None:

|

| 227 |

+

nn.init.constant_(module.bias, 0)

|

| 228 |

+

self.apply(_basic_init)

|

| 229 |

+

|

| 230 |

+

# Initialize patch_embed like nn.Linear (instead of nn.Conv2d):

|

| 231 |

+

w = self.x_embedder.proj.weight.data

|

| 232 |

+

nn.init.xavier_uniform_(w.view([w.shape[0], -1]))

|

| 233 |

+

nn.init.constant_(self.x_embedder.proj.bias, 0)

|

| 234 |

+

|

| 235 |

+

# Initialize timestep embedding MLP:

|

| 236 |

+

nn.init.normal_(self.t_embedder.mlp[0].weight, std=0.02)

|

| 237 |

+

nn.init.normal_(self.t_embedder.mlp[2].weight, std=0.02)

|

| 238 |

+

|

| 239 |

+

# Zero-out adaLN modulation layers in DiT blocks:

|

| 240 |

+

for block in self.blocks:

|

| 241 |

+

nn.init.constant_(block.s_adaLN_modulation[-1].weight, 0)

|

| 242 |

+

nn.init.constant_(block.s_adaLN_modulation[-1].bias, 0)

|

| 243 |

+

nn.init.constant_(block.t_adaLN_modulation[-1].weight, 0)

|

| 244 |

+

nn.init.constant_(block.t_adaLN_modulation[-1].bias, 0)

|

| 245 |

+

|

| 246 |

+

# Zero-out output layers:

|

| 247 |

+

nn.init.constant_(self.final_layer.adaLN_modulation[-1].weight, 0)

|

| 248 |

+

nn.init.constant_(self.final_layer.adaLN_modulation[-1].bias, 0)

|

| 249 |

+

nn.init.constant_(self.final_layer.linear.weight, 0)

|

| 250 |

+

nn.init.constant_(self.final_layer.linear.bias, 0)

|

| 251 |

+

|

| 252 |

+

def unpatchify(self, x):

|

| 253 |

+

"""

|

| 254 |

+

x: (N, H, W, patch_size**2 * C)

|

| 255 |

+

imgs: (N, H, W, C)

|

| 256 |

+

"""

|

| 257 |

+

c = self.out_channels

|

| 258 |

+

p = self.x_embedder.patch_size[0]

|

| 259 |

+

h = x.shape[1]

|

| 260 |

+

w = x.shape[2]

|

| 261 |

+

|

| 262 |

+

x = x.reshape(shape=(x.shape[0], h, w, p, p, c))

|

| 263 |

+

x = torch.einsum('nhwpqc->nchpwq', x)

|

| 264 |

+

imgs = x.reshape(shape=(x.shape[0], c, h * p, w * p))

|

| 265 |

+

return imgs

|

| 266 |

+

|

| 267 |

+

def forward(self, x, t, external_cond=None):

|

| 268 |

+

"""

|

| 269 |

+

Forward pass of DiT.

|

| 270 |

+

x: (B, T, C, H, W) tensor of spatial inputs (images or latent representations of images)

|

| 271 |

+

t: (B, T,) tensor of diffusion timesteps

|

| 272 |

+

"""

|

| 273 |

+

|

| 274 |

+

B, T, C, H, W = x.shape

|

| 275 |

+

|

| 276 |

+

# add spatial embeddings

|

| 277 |

+

x = rearrange(x, "b t c h w -> (b t) c h w")

|

| 278 |

+

x = self.x_embedder(x) # (B*T, C, H, W) -> (B*T, H/2, W/2, D) , C = 16, D = d_model

|

| 279 |

+

# restore shape

|

| 280 |

+

x = rearrange(x, "(b t) h w d -> b t h w d", t = T)

|

| 281 |

+

# embed noise steps

|

| 282 |

+

t = rearrange(t, "b t -> (b t)")

|

| 283 |

+

c = self.t_embedder(t) # (N, D)

|

| 284 |

+

c = rearrange(c, "(b t) d -> b t d", t = T)

|

| 285 |

+

if torch.is_tensor(external_cond):

|

| 286 |

+

c += self.external_cond(external_cond)

|

| 287 |

+

for block in self.blocks:

|

| 288 |

+

x = block(x, c) # (N, T, H, W, D)

|

| 289 |

+

x = self.final_layer(x, c) # (N, T, H, W, patch_size ** 2 * out_channels)

|

| 290 |

+

# unpatchify

|

| 291 |

+

x = rearrange(x, "b t h w d -> (b t) h w d")

|

| 292 |

+

x = self.unpatchify(x) # (N, out_channels, H, W)

|

| 293 |

+

x = rearrange(x, "(b t) c h w -> b t c h w", t = T)

|

| 294 |

+

|

| 295 |

+

return x

|

| 296 |

+

|

| 297 |

+

def DiT_S_2():

|

| 298 |

+

return DiT(

|

| 299 |

+

patch_size=2,

|

| 300 |

+

hidden_size=1024,

|

| 301 |

+

depth=16,

|

| 302 |

+

num_heads=16,

|

| 303 |

+

)

|

| 304 |

+

|

| 305 |

+

DiT_models = {

|

| 306 |

+

"DiT-S/2": DiT_S_2

|

| 307 |

+

}

|

| 308 |

+

|

| 309 |

+

|

| 310 |

+

|

open-oasis-master/embeddings.py

ADDED

|

@@ -0,0 +1,103 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

"""

|

| 2 |

+

Adapted from https://github.com/huggingface/diffusers/blob/main/src/diffusers/models/embeddings.py

|

| 3 |

+

"""

|

| 4 |

+

|

| 5 |

+

from typing import Optional

|

| 6 |

+

import math

|

| 7 |

+

import torch

|

| 8 |

+

from torch import nn

|

| 9 |

+

|

| 10 |

+

# pylint: disable=unused-import

|

| 11 |

+

from diffusers.models.embeddings import TimestepEmbedding

|

| 12 |

+

|

| 13 |

+

|

| 14 |

+

class Timesteps(nn.Module):

|

| 15 |

+

def __init__(

|

| 16 |

+

self,

|

| 17 |

+

num_channels: int,

|

| 18 |

+

flip_sin_to_cos: bool = True,

|

| 19 |

+

downscale_freq_shift: float = 0,

|

| 20 |

+

):

|

| 21 |

+

super().__init__()

|

| 22 |

+

self.num_channels = num_channels

|

| 23 |

+

self.flip_sin_to_cos = flip_sin_to_cos

|

| 24 |

+

self.downscale_freq_shift = downscale_freq_shift

|

| 25 |

+

|

| 26 |

+

def forward(self, timesteps):

|

| 27 |

+

t_emb = get_timestep_embedding(

|

| 28 |

+

timesteps,

|

| 29 |

+

self.num_channels,

|

| 30 |

+

flip_sin_to_cos=self.flip_sin_to_cos,

|

| 31 |

+

downscale_freq_shift=self.downscale_freq_shift,

|

| 32 |

+

)

|

| 33 |

+

return t_emb

|

| 34 |

+

|

| 35 |

+

class Positions2d(nn.Module):

|

| 36 |

+

def __init__(

|

| 37 |

+

self,

|

| 38 |

+

num_channels: int,

|

| 39 |

+

flip_sin_to_cos: bool = True,

|

| 40 |

+

downscale_freq_shift: float = 0,

|

| 41 |

+

):

|

| 42 |

+

super().__init__()

|

| 43 |

+

self.num_channels = num_channels

|

| 44 |

+

self.flip_sin_to_cos = flip_sin_to_cos

|

| 45 |

+

self.downscale_freq_shift = downscale_freq_shift

|

| 46 |

+

|

| 47 |

+

def forward(self, grid):

|

| 48 |

+

h_emb = get_timestep_embedding(

|

| 49 |

+

grid[0],

|

| 50 |

+

self.num_channels // 2,

|

| 51 |

+

flip_sin_to_cos=self.flip_sin_to_cos,

|

| 52 |

+

downscale_freq_shift=self.downscale_freq_shift,

|

| 53 |

+

)

|

| 54 |

+

w_emb = get_timestep_embedding(

|

| 55 |

+

grid[1],

|

| 56 |

+

self.num_channels // 2,

|

| 57 |

+

flip_sin_to_cos=self.flip_sin_to_cos,

|

| 58 |

+

downscale_freq_shift=self.downscale_freq_shift,

|

| 59 |

+

)

|

| 60 |

+

emb = torch.cat((h_emb, w_emb), dim=-1)

|

| 61 |

+

return emb

|

| 62 |

+

|

| 63 |

+

|

| 64 |

+

def get_timestep_embedding(

|

| 65 |

+

timesteps: torch.Tensor,

|

| 66 |

+

embedding_dim: int,

|

| 67 |

+

flip_sin_to_cos: bool = False,

|

| 68 |

+

downscale_freq_shift: float = 1,

|

| 69 |

+

scale: float = 1,

|

| 70 |

+

max_period: int = 10000,

|

| 71 |

+

):

|

| 72 |

+

"""

|

| 73 |

+

This matches the implementation in Denoising Diffusion Probabilistic Models: Create sinusoidal timestep embeddings.

|

| 74 |

+

|

| 75 |

+

:param timesteps: a 1-D or 2-D Tensor of N indices, one per batch element.

|

| 76 |

+

These may be fractional.

|

| 77 |

+

:param embedding_dim: the dimension of the output. :param max_period: controls the minimum frequency of the

|

| 78 |

+

embeddings. :return: an [N x dim] or [N x M x dim] Tensor of positional embeddings.

|

| 79 |

+

"""

|

| 80 |

+

if len(timesteps.shape) not in [1, 2]:

|

| 81 |

+

raise ValueError("Timesteps should be a 1D or 2D tensor")

|

| 82 |

+

|

| 83 |

+

half_dim = embedding_dim // 2

|

| 84 |

+

exponent = -math.log(max_period) * torch.arange(start=0, end=half_dim, dtype=torch.float32, device=timesteps.device)

|

| 85 |

+

exponent = exponent / (half_dim - downscale_freq_shift)

|

| 86 |

+

|

| 87 |

+

emb = torch.exp(exponent)

|

| 88 |

+

emb = timesteps[..., None].float() * emb

|

| 89 |

+

|

| 90 |

+

# scale embeddings

|

| 91 |

+

emb = scale * emb

|

| 92 |

+

|

| 93 |

+

# concat sine and cosine embeddings

|

| 94 |

+

emb = torch.cat([torch.sin(emb), torch.cos(emb)], dim=-1)

|

| 95 |

+

|

| 96 |

+

# flip sine and cosine embeddings

|

| 97 |

+

if flip_sin_to_cos:

|

| 98 |

+

emb = torch.cat([emb[..., half_dim:], emb[..., :half_dim]], dim=-1)

|

| 99 |

+

|

| 100 |

+

# zero pad

|

| 101 |

+

if embedding_dim % 2 == 1:

|

| 102 |

+

emb = torch.nn.functional.pad(emb, (0, 1, 0, 0))

|

| 103 |

+

return emb

|

open-oasis-master/generate.py

ADDED

|

@@ -0,0 +1,119 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

"""

|

| 2 |

+

References:

|

| 3 |

+

- Diffusion Forcing: https://github.com/buoyancy99/diffusion-forcing

|

| 4 |

+

"""

|

| 5 |

+

import torch

|

| 6 |

+

from dit import DiT_models

|

| 7 |

+

from vae import VAE_models

|

| 8 |

+

from torchvision.io import read_video, write_video

|

| 9 |

+

from utils import one_hot_actions, sigmoid_beta_schedule

|

| 10 |

+

from tqdm import tqdm

|

| 11 |

+

from einops import rearrange

|

| 12 |

+

from torch import autocast

|

| 13 |

+

assert torch.cuda.is_available()

|

| 14 |

+

device = "cuda:0"

|

| 15 |

+

|

| 16 |

+

# load DiT checkpoint

|

| 17 |

+

ckpt = torch.load("oasis500m.pt")

|

| 18 |

+

model = DiT_models["DiT-S/2"]()

|

| 19 |

+

model.load_state_dict(ckpt, strict=False)

|

| 20 |

+

model = model.to(device).eval()

|

| 21 |

+

|

| 22 |

+

# load VAE checkpoint

|

| 23 |

+

vae_ckpt = torch.load("vit-l-20.pt")

|

| 24 |

+

vae = VAE_models["vit-l-20-shallow-encoder"]()

|

| 25 |

+

vae.load_state_dict(vae_ckpt)

|

| 26 |

+

vae = vae.to(device).eval()

|

| 27 |

+

|

| 28 |

+

# sampling params

|

| 29 |

+

B = 1

|

| 30 |

+

total_frames = 32

|

| 31 |

+

max_noise_level = 1000

|

| 32 |

+

ddim_noise_steps = 100

|

| 33 |

+

noise_range = torch.linspace(-1, max_noise_level - 1, ddim_noise_steps + 1)

|

| 34 |

+

noise_abs_max = 20

|

| 35 |

+

ctx_max_noise_idx = ddim_noise_steps // 10 * 3

|

| 36 |

+

|

| 37 |

+

# get input video

|

| 38 |

+

video_id = "snippy-chartreuse-mastiff-f79998db196d-20220401-224517.chunk_001"

|

| 39 |

+

mp4_path = f"sample_data/{video_id}.mp4"

|

| 40 |

+

actions_path = f"sample_data/{video_id}.actions.pt"

|

| 41 |

+

video = read_video(mp4_path, pts_unit="sec")[0].float() / 255

|

| 42 |

+

actions = one_hot_actions(torch.load(actions_path))

|

| 43 |

+

offset = 100

|

| 44 |

+

video = video[offset:offset+total_frames].unsqueeze(0)

|

| 45 |

+

actions = actions[offset:offset+total_frames].unsqueeze(0)

|

| 46 |

+

|

| 47 |

+

# sampling inputs

|

| 48 |

+

n_prompt_frames = 1

|

| 49 |

+

x = video[:, :n_prompt_frames]

|

| 50 |

+

x = x.to(device)

|

| 51 |

+

actions = actions.to(device)

|

| 52 |

+

|

| 53 |

+

# vae encoding

|

| 54 |

+

scaling_factor = 0.07843137255

|

| 55 |

+

x = rearrange(x, "b t h w c -> (b t) c h w")

|

| 56 |

+

H, W = x.shape[-2:]

|

| 57 |

+

with torch.no_grad():

|

| 58 |

+

x = vae.encode(x * 2 - 1).mean * scaling_factor

|

| 59 |

+

x = rearrange(x, "(b t) (h w) c -> b t c h w", t=n_prompt_frames, h=H//vae.patch_size, w=W//vae.patch_size)

|

| 60 |

+

|

| 61 |

+

# get alphas

|

| 62 |

+

betas = sigmoid_beta_schedule(max_noise_level).to(device)

|

| 63 |

+

alphas = 1.0 - betas

|

| 64 |

+

alphas_cumprod = torch.cumprod(alphas, dim=0)

|

| 65 |

+

alphas_cumprod = rearrange(alphas_cumprod, "T -> T 1 1 1")

|

| 66 |

+

|

| 67 |

+

# sampling loop

|

| 68 |

+

for i in tqdm(range(n_prompt_frames, total_frames)):

|

| 69 |

+

chunk = torch.randn((B, 1, *x.shape[-3:]), device=device)

|

| 70 |

+

chunk = torch.clamp(chunk, -noise_abs_max, +noise_abs_max)

|

| 71 |

+

x = torch.cat([x, chunk], dim=1)

|

| 72 |

+

start_frame = max(0, i + 1 - model.max_frames)

|

| 73 |

+

|

| 74 |

+

for noise_idx in reversed(range(1, ddim_noise_steps + 1)):

|

| 75 |

+

# set up noise values

|

| 76 |

+

ctx_noise_idx = min(noise_idx, ctx_max_noise_idx)

|

| 77 |

+

t_ctx = torch.full((B, i), noise_range[ctx_noise_idx], dtype=torch.long, device=device)

|

| 78 |

+

t = torch.full((B, 1), noise_range[noise_idx], dtype=torch.long, device=device)

|

| 79 |

+

t_next = torch.full((B, 1), noise_range[noise_idx - 1], dtype=torch.long, device=device)

|

| 80 |

+

t_next = torch.where(t_next < 0, t, t_next)

|

| 81 |

+

t = torch.cat([t_ctx, t], dim=1)

|

| 82 |

+

t_next = torch.cat([t_ctx, t_next], dim=1)

|

| 83 |

+

|

| 84 |

+

# sliding window

|

| 85 |

+

x_curr = x.clone()

|

| 86 |

+

x_curr = x_curr[:, start_frame:]

|

| 87 |

+

t = t[:, start_frame:]

|

| 88 |

+

t_next = t_next[:, start_frame:]

|

| 89 |

+

|

| 90 |

+

# add some noise to the context

|

| 91 |

+

ctx_noise = torch.randn_like(x_curr[:, :-1])

|

| 92 |

+

ctx_noise = torch.clamp(ctx_noise, -noise_abs_max, +noise_abs_max)

|

| 93 |

+

x_curr[:, :-1] = alphas_cumprod[t[:, :-1]].sqrt() * x_curr[:, :-1] + (1 - alphas_cumprod[t[:, :-1]]).sqrt() * ctx_noise

|

| 94 |

+

|

| 95 |

+

# get model predictions

|

| 96 |

+

with torch.no_grad():

|

| 97 |

+

with autocast("cuda", dtype=torch.half):

|

| 98 |

+

v = model(x_curr, t, actions[:, start_frame : i + 1])

|

| 99 |

+

|

| 100 |

+

x_start = alphas_cumprod[t].sqrt() * x_curr - (1 - alphas_cumprod[t]).sqrt() * v

|

| 101 |

+

x_noise = ((1 / alphas_cumprod[t]).sqrt() * x_curr - x_start) \

|

| 102 |

+

/ (1 / alphas_cumprod[t] - 1).sqrt()

|

| 103 |

+

|

| 104 |

+

# get frame prediction

|

| 105 |

+

x_pred = alphas_cumprod[t_next].sqrt() * x_start + x_noise * (1 - alphas_cumprod[t_next]).sqrt()

|

| 106 |

+

x[:, -1:] = x_pred[:, -1:]

|

| 107 |

+

|

| 108 |

+

# vae decoding

|

| 109 |

+

x = rearrange(x, "b t c h w -> (b t) (h w) c")

|

| 110 |

+

with torch.no_grad():

|

| 111 |

+

x = (vae.decode(x / scaling_factor) + 1) / 2

|

| 112 |

+

x = rearrange(x, "(b t) c h w -> b t h w c", t=total_frames)

|

| 113 |

+

|

| 114 |

+

# save video

|

| 115 |

+

x = torch.clamp(x, 0, 1)

|

| 116 |

+

x = (x * 255).byte()

|

| 117 |

+

write_video("video.mp4", x[0], fps=20)

|

| 118 |

+

print("generation saved to video.mp4.")

|

| 119 |

+

|

open-oasis-master/media/arch.png

ADDED

|

open-oasis-master/media/sample_0.gif

ADDED

|

Git LFS Details

|

open-oasis-master/media/sample_1.gif

ADDED

|

Git LFS Details

|

open-oasis-master/media/thumb.png

ADDED

|

open-oasis-master/requirements.txt

ADDED

|

@@ -0,0 +1,31 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

av==13.1.0

|

| 2 |

+

certifi==2024.8.30

|

| 3 |

+

charset-normalizer==3.4.0

|

| 4 |

+

diffusers==0.31.0

|

| 5 |

+

einops==0.8.0

|

| 6 |

+

filelock==3.13.1

|

| 7 |

+

fsspec==2024.2.0

|

| 8 |

+

huggingface-hub==0.26.2

|

| 9 |

+

idna==3.10

|

| 10 |

+

importlib_metadata==8.5.0

|

| 11 |

+

Jinja2==3.1.3

|

| 12 |

+

MarkupSafe==2.1.5

|

| 13 |

+

mpmath==1.3.0

|

| 14 |

+

networkx==3.2.1

|

| 15 |

+

numpy==1.26.3

|

| 16 |

+

packaging==24.1

|

| 17 |

+

pillow==10.2.0

|

| 18 |

+

PyYAML==6.0.2

|

| 19 |

+

regex==2024.9.11

|

| 20 |

+

requests==2.32.3

|

| 21 |

+

safetensors==0.4.5

|

| 22 |

+

sympy==1.13.1

|

| 23 |

+

timm==1.0.11

|

| 24 |

+

torch==2.5.1

|

| 25 |

+

torchaudio==2.5.1

|

| 26 |

+

torchvision==0.20.1

|

| 27 |

+

tqdm==4.66.6

|

| 28 |

+

triton==3.1.0

|

| 29 |

+

typing_extensions==4.9.0

|

| 30 |

+

urllib3==2.2.3

|

| 31 |

+

zipp==3.20.2

|

open-oasis-master/rotary_embedding_torch.py

ADDED

|

@@ -0,0 +1,316 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|