Commit c964d4c

1 Parent(s): c6b26ba

init

- README.md +31 -13

- assets/EU.png +0 -0

- assets/reset.png +0 -0

- datasets/__init__.py +0 -0

- datasets/crowd.py +268 -0

- demo.py +148 -0

- examples/IMG_101.jpg +0 -0

- examples/IMG_125.jpg +0 -0

- examples/IMG_138.jpg +0 -0

- examples/IMG_18.jpg +0 -0

- examples/IMG_180.jpg +0 -0

- examples/IMG_206.jpg +0 -0

- examples/IMG_223.jpg +0 -0

- examples/IMG_247.jpg +0 -0

- examples/IMG_270.jpg +0 -0

- examples/IMG_306.jpg +0 -0

- losses/__init__.py +1 -0

- losses/bregman_pytorch.py +484 -0

- losses/consistency_loss.py +294 -0

- losses/dm_loss.py +62 -0

- losses/multi_con_loss.py +41 -0

- losses/ot_loss.py +68 -0

- losses/ramps.py +41 -0

- losses/rank_loss.py +53 -0

- network/pvt_cls.py +623 -0

- requirements.txt +7 -0

- sample_imgs/overview.png +0 -0

- test.py +117 -0

- train.py +369 -0

- utils/__init__.py +0 -0

- utils/log_utils.py +24 -0

- utils/pytorch_utils.py +58 -0

README.md

CHANGED

|

@@ -1,13 +1,31 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

| 4 |

-

|

| 5 |

-

|

| 6 |

-

|

| 7 |

-

|

| 8 |

-

|

| 9 |

-

|

| 10 |

-

|

| 11 |

-

|

| 12 |

-

|

| 13 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

|

| 2 |

+

# TreeFormer

|

| 3 |

+

|

| 4 |

+

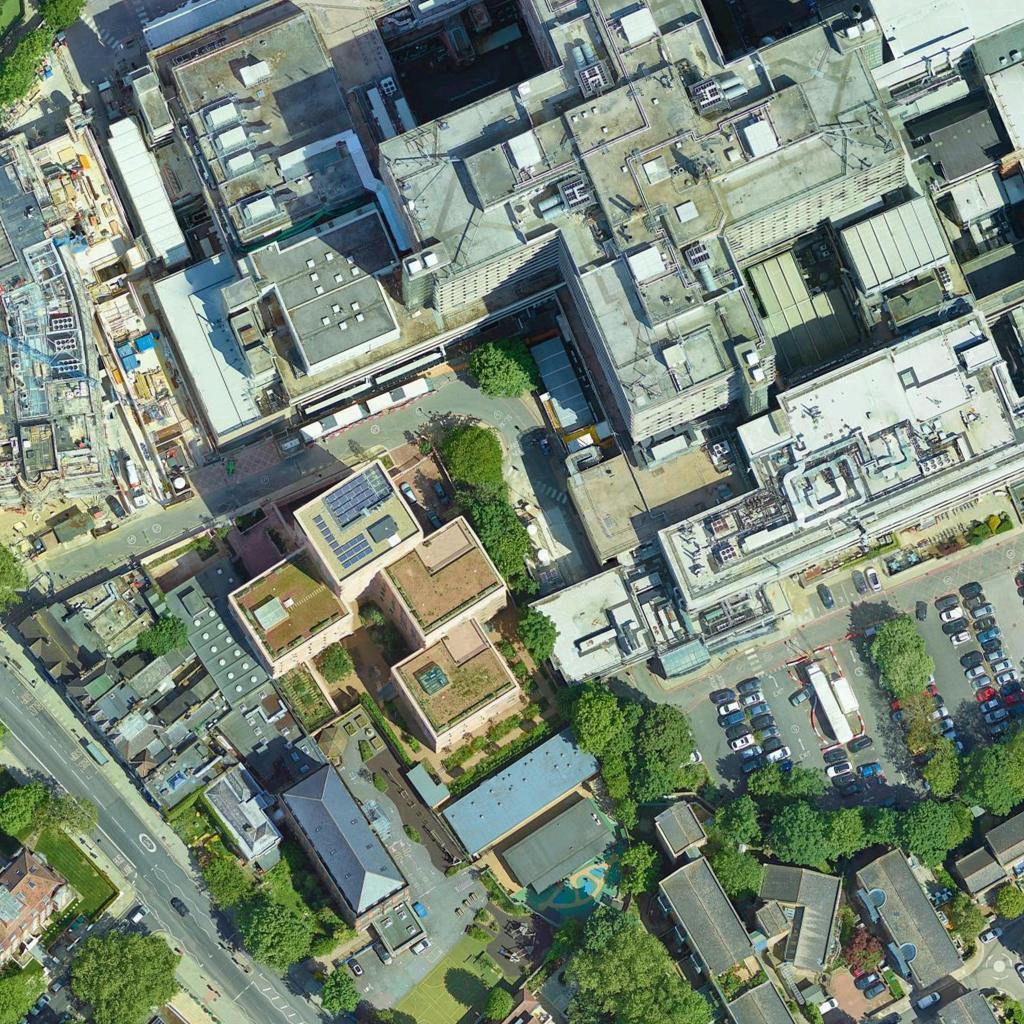

This is the code base for IEEE TRANSACTIONS ON GEOSCIENCE AND REMOTE SENSING (TGRS 2023) paper ['TreeFormer: a Semi-Supervised Transformer-based Framework for Tree Counting from a Single High Resolution Image'](https://arxiv.org/abs/2307.06118)

|

| 5 |

+

|

| 6 |

+

<img src="sample_imgs/overview.png">

|

| 7 |

+

|

| 8 |

+

## Installation

|

| 9 |

+

|

| 10 |

+

Python ≥ 3.7.

|

| 11 |

+

|

| 12 |

+

To install the required packages, please run:

|

| 13 |

+

|

| 14 |

+

|

| 15 |

+

```bash

|

| 16 |

+

pip install -r requirements.txt

|

| 17 |

+

```

|

| 18 |

+

|

| 19 |

+

## Dataset

|

| 20 |

+

Download the dataset from [google drive](https://drive.google.com/file/d/1xcjv8967VvvzcDM4aqAi7Corkb11T0i2/view?usp=drive_link).

|

| 21 |

+

## Evaluation

|

| 22 |

+

Download our trained model on [London](https://drive.google.com/file/d/14uuOF5758sxtM5EgeGcRtSln5lUXAHge/view?usp=sharing) dataset.

|

| 23 |

+

|

| 24 |

+

Modify the path to the dataset and model for evaluation in 'test.py'.

|

| 25 |

+

|

| 26 |

+

Run 'test.py'

|

| 27 |

+

## Acknowledgements

|

| 28 |

+

|

| 29 |

+

- Part of codes are borrowed from [PVT](https://github.com/whai362/PVT) and [DM Count](https://github.com/cvlab-stonybrook/DM-Count). Thanks for their great work!

|

| 30 |

+

|

| 31 |

+

|
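The README asks the reader to set the dataset path in 'test.py' but does not describe the folder layout. Judging from `datasets/crowd.py` in this commit, the labeled loader looks for `images/*.jpg` plus two files per image under `ground_truth/`. The sketch below only spells out that naming scheme. The helper name `expected_files` and the root `data/London/train` are hypothetical, and it uses `os.path.splitext` where the loader uses `split('.')[0]`, so the two differ for file names containing more than one dot.

```python
import os


def expected_files(root_path, image_name):
    """Return the paths the labeled loader appears to read for one image.

    Hypothetical helper: the naming scheme is copied from datasets/crowd.py,
    the function itself is not part of the repository.
    """
    stem = os.path.splitext(image_name)[0]
    return {
        "image": os.path.join(root_path, "images", image_name),
        "points": os.path.join(root_path, "ground_truth", "GT_{}.mat".format(stem)),
        "density": os.path.join(root_path, "ground_truth", "{}_densitymap.npy".format(stem)),
    }


# "data/London/train" is a placeholder root, not a path from the repository.
paths = expected_files("data/London/train", "IMG_101.jpg")
for kind, path in paths.items():
    print(kind, path)
```

Checking that all three files exist for every image before running 'test.py' is a cheap way to catch a wrong dataset path early.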

assets/EU.png

ADDED

|

assets/reset.png

ADDED

|

datasets/__init__.py

ADDED

|

File without changes

|

datasets/crowd.py

ADDED

|

@@ -0,0 +1,268 @@

+from PIL import Image
+import torch.utils.data as data
+import os
+from glob import glob
+import torch
+import torchvision.transforms.functional as F
+from torchvision import transforms
+import random
+import numpy as np
+import scipy.io as sio
+
+def random_crop(im_h, im_w, crop_h, crop_w):
+    res_h = im_h - crop_h
+    res_w = im_w - crop_w
+    i = random.randint(0, res_h)
+    j = random.randint(0, res_w)
+    return i, j, crop_h, crop_w
+
+
+def gen_discrete_map(im_height, im_width, points):
+    """
+    func: generate the discrete map.
+    points: [num_gt, 2], for each row: [width, height]
+    """
+    discrete_map = np.zeros([im_height, im_width], dtype=np.float32)
+    h, w = discrete_map.shape[:2]
+    num_gt = points.shape[0]
+    if num_gt == 0:
+        return discrete_map
+
+    # fast create discrete map
+    points_np = np.array(points).round().astype(int)
+    p_h = np.minimum(points_np[:, 1], np.array([h-1]*num_gt).astype(int))
+    p_w = np.minimum(points_np[:, 0], np.array([w-1]*num_gt).astype(int))
+    p_index = torch.from_numpy(p_h* im_width + p_w).to(torch.int64)
+    discrete_map = torch.zeros(im_width * im_height).scatter_add_(0, index=p_index, src=torch.ones(im_width*im_height)).view(im_height, im_width).numpy()
+
+    ''' slow method
+    for p in points:
+        p = np.round(p).astype(int)
+        p[0], p[1] = min(h - 1, p[1]), min(w - 1, p[0])
+        discrete_map[p[0], p[1]] += 1
+    '''
+    assert np.sum(discrete_map) == num_gt
+    return discrete_map
+
+
+class Base(data.Dataset):
+    def __init__(self, root_path, crop_size, downsample_ratio=8):
+
+        self.root_path = root_path
+        self.c_size = crop_size
+        self.d_ratio = downsample_ratio
+        assert self.c_size % self.d_ratio == 0
+        self.dc_size = self.c_size // self.d_ratio
+        self.trans = transforms.Compose([
+            transforms.ToTensor(),
+            transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
+        ])
+
+    def __len__(self):
+        pass
+
+    def __getitem__(self, item):
+        pass
+
+    def train_transform(self, img, keypoints, gauss_im):
+        wd, ht = img.size
+        st_size = 1.0 * min(wd, ht)
+        assert st_size >= self.c_size
+        assert len(keypoints) >= 0
+        i, j, h, w = random_crop(ht, wd, self.c_size, self.c_size)
+        img = F.crop(img, i, j, h, w)
+        gauss_im = F.crop(gauss_im, i, j, h, w)  # crop the density map, not the already-cropped image
+        if len(keypoints) > 0:
+            keypoints = keypoints - [j, i]
+            idx_mask = (keypoints[:, 0] >= 0) * (keypoints[:, 0] <= w) * \
+                       (keypoints[:, 1] >= 0) * (keypoints[:, 1] <= h)
+            keypoints = keypoints[idx_mask]
+        else:
+            keypoints = np.empty([0, 2])
+
+        gt_discrete = gen_discrete_map(h, w, keypoints)
+        down_w = w // self.d_ratio
+        down_h = h // self.d_ratio
+        gt_discrete = gt_discrete.reshape([down_h, self.d_ratio, down_w, self.d_ratio]).sum(axis=(1, 3))
+        assert np.sum(gt_discrete) == len(keypoints)
+
+        if len(keypoints) > 0:
+            if random.random() > 0.5:
+                img = F.hflip(img)
+                gauss_im = F.hflip(gauss_im)
+                gt_discrete = np.fliplr(gt_discrete)
+                keypoints[:, 0] = w - keypoints[:, 0]
+        else:
+            if random.random() > 0.5:
+                img = F.hflip(img)
+                gauss_im = F.hflip(gauss_im)
+                gt_discrete = np.fliplr(gt_discrete)
+        gt_discrete = np.expand_dims(gt_discrete, 0)
+
+        return self.trans(img), gauss_im, torch.from_numpy(keypoints.copy()).float(), torch.from_numpy(gt_discrete.copy()).float()
+
+
+
+class Crowd_TC(Base):
+    def __init__(self, root_path, crop_size, downsample_ratio=8, method='train'):
+        super().__init__(root_path, crop_size, downsample_ratio)
+        self.method = method
+        if method not in ['train', 'val']:
+            raise Exception("not implement")
+
+        self.im_list = sorted(glob(os.path.join(self.root_path, 'images', '*.jpg')))
+
+        print('number of img [{}]: {}'.format(method, len(self.im_list)))
+
+    def __len__(self):
+        return len(self.im_list)
+
+    def __getitem__(self, item):
+        img_path = self.im_list[item]
+        name = os.path.basename(img_path).split('.')[0]
+        gd_path = os.path.join(self.root_path, 'ground_truth', 'GT_{}.mat'.format(name))
+        img = Image.open(img_path).convert('RGB')
+        keypoints = sio.loadmat(gd_path)['image_info'][0][0][0][0][0]
+        gauss_path = os.path.join(self.root_path, 'ground_truth', '{}_densitymap.npy'.format(name))
+        gauss_im = torch.from_numpy(np.load(gauss_path)).float()
+        #import pdb;pdb.set_trace()
+        #print("label {}", item)
+
+        if self.method == 'train':
+            return self.train_transform(img, keypoints, gauss_im)
+        elif self.method == 'val':
+            wd, ht = img.size
+            st_size = 1.0 * min(wd, ht)
+            if st_size < self.c_size:
+                rr = 1.0 * self.c_size / st_size
+                wd = round(wd * rr)
+                ht = round(ht * rr)
+                st_size = 1.0 * min(wd, ht)
+                img = img.resize((wd, ht), Image.BICUBIC)
+            img = self.trans(img)
+            #import pdb;pdb.set_trace()
+
+            return img, len(keypoints), name, gauss_im
+
+    def train_transform(self, img, keypoints, gauss_im):
+        wd, ht = img.size
+        st_size = 1.0 * min(wd, ht)
+        # resize the image to fit the crop size
+        if st_size < self.c_size:
+            rr = 1.0 * self.c_size / st_size
+            wd = round(wd * rr)
+            ht = round(ht * rr)
+            st_size = 1.0 * min(wd, ht)
+            img = img.resize((wd, ht), Image.BICUBIC)
+            #gauss_im = gauss_im.resize((wd, ht), Image.BICUBIC)
+            keypoints = keypoints * rr
+        assert st_size >= self.c_size, print(wd, ht)
+        assert len(keypoints) >= 0
+        i, j, h, w = random_crop(ht, wd, self.c_size, self.c_size)
+        img = F.crop(img, i, j, h, w)
+        gauss_im = F.crop(gauss_im, i, j, h, w)
+        if len(keypoints) > 0:
+            keypoints = keypoints - [j, i]
+            idx_mask = (keypoints[:, 0] >= 0) * (keypoints[:, 0] <= w) * \
+                       (keypoints[:, 1] >= 0) * (keypoints[:, 1] <= h)
+            keypoints = keypoints[idx_mask]
+        else:
+            keypoints = np.empty([0, 2])
+
+        gt_discrete = gen_discrete_map(h, w, keypoints)
+        down_w = w // self.d_ratio
+        down_h = h // self.d_ratio
+        gt_discrete = gt_discrete.reshape([down_h, self.d_ratio, down_w, self.d_ratio]).sum(axis=(1, 3))
+        assert np.sum(gt_discrete) == len(keypoints)
+
+
+        if len(keypoints) > 0:
+            if random.random() > 0.5:
+                img = F.hflip(img)
+                gauss_im = F.hflip(gauss_im)
+                gt_discrete = np.fliplr(gt_discrete)
+                keypoints[:, 0] = w - keypoints[:, 0] - 1
+        else:
+            if random.random() > 0.5:
+                img = F.hflip(img)
+                gauss_im = F.hflip(gauss_im)
+                gt_discrete = np.fliplr(gt_discrete)
+        gt_discrete = np.expand_dims(gt_discrete, 0)
+        #import pdb;pdb.set_trace()
+
+        return self.trans(img), gauss_im, torch.from_numpy(keypoints.copy()).float(), torch.from_numpy(gt_discrete.copy()).float()
+
+
+class Base_UL(data.Dataset):
+    def __init__(self, root_path, crop_size, downsample_ratio=8):
+        self.root_path = root_path
+        self.c_size = crop_size
+        self.d_ratio = downsample_ratio
+        assert self.c_size % self.d_ratio == 0
+        self.dc_size = self.c_size // self.d_ratio
+        self.trans = transforms.Compose([
+            transforms.ToTensor(),
+            transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
+        ])
+
+    def __len__(self):
+        pass
+
+    def __getitem__(self, item):
+        pass
+
+    def train_transform_ul(self, img):
+        wd, ht = img.size
+        st_size = 1.0 * min(wd, ht)
+        assert st_size >= self.c_size
+        i, j, h, w = random_crop(ht, wd, self.c_size, self.c_size)
+        img = F.crop(img, i, j, h, w)
+
+        if random.random() > 0.5:
+            img = F.hflip(img)
+
+        return self.trans(img)
+
+
+class Crowd_UL_TC(Base_UL):
+    def __init__(self, root_path, crop_size, downsample_ratio=8, method='train_ul'):
+        super().__init__(root_path, crop_size, downsample_ratio)
+        self.method = method
+        if method not in ['train_ul']:
+            raise Exception("not implement")
+
+        self.im_list = sorted(glob(os.path.join(self.root_path, 'images', '*.jpg')))
+        print('number of img [{}]: {}'.format(method, len(self.im_list)))
+
+    def __len__(self):
+        return len(self.im_list)
+
+    def __getitem__(self, item):
+        img_path = self.im_list[item]
+        name = os.path.basename(img_path).split('.')[0]
+        img = Image.open(img_path).convert('RGB')
+        #print("un_label {}", item)
+
+        return self.train_transform_ul(img)
+
+
+    def train_transform_ul(self, img):
+        wd, ht = img.size
+        st_size = 1.0 * min(wd, ht)
+        # resize the image to fit the crop size
+        if st_size < self.c_size:
+            rr = 1.0 * self.c_size / st_size
+            wd = round(wd * rr)
+            ht = round(ht * rr)
+            st_size = 1.0 * min(wd, ht)
+            img = img.resize((wd, ht), Image.BICUBIC)
+
+        assert st_size >= self.c_size, print(wd, ht)
+
+        i, j, h, w = random_crop(ht, wd, self.c_size, self.c_size)
+        img = F.crop(img, i, j, h, w)
+        if random.random() > 0.5:
+            img = F.hflip(img)
+
+        return self.trans(img),1
+
|
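The two steps that turn point annotations into a training target in `datasets/crowd.py` (`gen_discrete_map`, then the `reshape(...).sum(axis=(1, 3))` block sum) can be illustrated without any dependencies. This is a minimal sketch, not the repository's implementation: the function names `discrete_map` and `block_sum` are made up for illustration, plain lists stand in for NumPy arrays, and unlike the original it also clamps negative coordinates to 0.

```python
def discrete_map(height, width, points):
    """Count annotated points per pixel; each point is an (x, y) pair."""
    grid = [[0] * width for _ in range(height)]
    for x, y in points:
        # Round to the nearest pixel and clamp to the image bounds.
        row = min(max(int(round(y)), 0), height - 1)
        col = min(max(int(round(x)), 0), width - 1)
        grid[row][col] += 1
    return grid


def block_sum(grid, ratio):
    """Sum non-overlapping ratio x ratio blocks so the total count is preserved."""
    height, width = len(grid), len(grid[0])
    assert height % ratio == 0 and width % ratio == 0
    out = [[0] * (width // ratio) for _ in range(height // ratio)]
    for r in range(height):
        for c in range(width):
            out[r // ratio][c // ratio] += grid[r][c]
    return out


points = [(0.2, 0.4), (3.6, 1.0), (7.9, 7.9), (4.0, 4.0)]
full = discrete_map(8, 8, points)
coarse = block_sum(full, 4)
total = sum(map(sum, coarse))
print(coarse)  # [[1, 1], [0, 2]]
print(total)   # 4
```

Summing blocks rather than interpolating is what keeps `np.sum(gt_discrete) == len(keypoints)` true after downsampling, which the loader asserts.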

demo.py

ADDED

|

@@ -0,0 +1,148 @@

+import os
+import sys
+import numpy as np
+import torch
+import torch.nn.functional as F
+from torchvision import transforms
+
+from PIL import Image
+from network import pvt_cls as TCN
+
+import gradio as gr
+
+
+def demo(img_path):
+    # config
+    batch_size = 8
+    crop_size = 256
+    model_path = '/users/k21163430/workspace/TreeFormer/models/best_model.pth'
+
+    device = torch.device('cuda')
+
+    # prepare model
+    model = TCN.pvt_treeformer(pretrained=False)
+    model.to(device)
+    model.load_state_dict(torch.load(model_path, device))
+    model.eval()
+
+    # preprocess
+    img = Image.open(img_path).convert('RGB')
+    show_img = np.array(img)
+    wd, ht = img.size
+    st_size = 1.0 * min(wd, ht)
+    if st_size < crop_size:
+        rr = 1.0 * crop_size / st_size
+        wd = round(wd * rr)
+        ht = round(ht * rr)
+        st_size = 1.0 * min(wd, ht)
+        img = img.resize((wd, ht), Image.BICUBIC)
+    transform = transforms.Compose([
+        transforms.ToTensor(),
+        transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
+    ])
+    img = transform(img)
+    img = img.unsqueeze(0)
+
+    # model forward
+    with torch.no_grad():
+        inputs = img.to(device)
+        crop_imgs, crop_masks = [], []
+        b, c, h, w = inputs.size()
+        rh, rw = crop_size, crop_size
+
+        for i in range(0, h, rh):
+            gis, gie = max(min(h - rh, i), 0), min(h, i + rh)
+
+            for j in range(0, w, rw):
+                gjs, gje = max(min(w - rw, j), 0), min(w, j + rw)
+                crop_imgs.append(inputs[:, :, gis:gie, gjs:gje])
+                mask = torch.zeros([b, 1, h, w]).to(device)
+                mask[:, :, gis:gie, gjs:gje].fill_(1.0)
+                crop_masks.append(mask)
+        crop_imgs, crop_masks = map(lambda x: torch.cat(
+            x, dim=0), (crop_imgs, crop_masks))
+
+        crop_preds = []
+        nz, bz = crop_imgs.size(0), batch_size
+        for i in range(0, nz, bz):
+
+            gs, gt = i, min(nz, i + bz)
+            crop_pred, _ = model(crop_imgs[gs:gt])
+            crop_pred = crop_pred[0]
+
+            _, _, h1, w1 = crop_pred.size()
+            crop_pred = F.interpolate(crop_pred, size=(
+                h1 * 4, w1 * 4), mode='bilinear', align_corners=True) / 16
+            crop_preds.append(crop_pred)
+        crop_preds = torch.cat(crop_preds, dim=0)
+
+        # splice them to the original size
+        idx = 0
+        pred_map = torch.zeros([b, 1, h, w]).to(device)
+        for i in range(0, h, rh):
+            gis, gie = max(min(h - rh, i), 0), min(h, i + rh)
+            for j in range(0, w, rw):
+                gjs, gje = max(min(w - rw, j), 0), min(w, j + rw)
+                pred_map[:, :, gis:gie, gjs:gje] += crop_preds[idx]
+                idx += 1
+        # for the overlapping area, compute average value
+        mask = crop_masks.sum(dim=0).unsqueeze(0)
+        outputs = pred_map / mask
+
+        outputs = F.interpolate(outputs, size=(
+            h, w), mode='bilinear', align_corners=True)/4
+        outputs = pred_map / mask
+        model_output = round(torch.sum(outputs).item())
+
+        print("{}: {}".format(img_path, model_output))
+        outputs = outputs.squeeze().cpu().numpy()
+        outputs = (outputs - np.min(outputs)) / \
+            (np.max(outputs) - np.min(outputs))
+
+        show_img = show_img / 255.0
+        show_img = show_img * 0.2 + outputs[:, :, None] * 0.8
+
+    return model_output, show_img
+
+
+if __name__ == "__main__":
+    # test
+    # img_path = sys.argv[1]
+    # demo(img_path)
+
+    # Launch a gr.Interface
+    gr_demo = gr.Interface(fn=demo,
+                           inputs=gr.Image(source="upload",
+                                           type="filepath",
+                                           label="Input Image",
+                                           width=768,
+                                           height=768,
+                                           ),
+                           outputs=[
+                               gr.Number(label="Predicted Tree Count"),
+                               gr.Image(label="Density Map",
+                                        width=768,
+                                        height=768,
+                                        )
+                           ],
+                           title="TreeFormer",
+                           description="TreeFormer is a semi-supervised transformer-based framework for tree counting from a single high resolution image. Upload an image and TreeFormer will predict the number of trees in the image and generate a density map of the trees.",
+                           article="This work has been developed as part of the ReSET project which has received funding from the European Union's Horizon 2020 FET Proactive Programme under grant agreement No 101017857. The contents of this publication are the sole responsibility of the ReSET consortium and do not necessarily reflect the opinion of the European Union.",
+                           examples=[
+                               ["./examples/IMG_101.jpg"],
+                               ["./examples/IMG_125.jpg"],
+                               ["./examples/IMG_138.jpg"],
+                               ["./examples/IMG_180.jpg"],
+                               ["./examples/IMG_18.jpg"],
+                               ["./examples/IMG_206.jpg"],
+                               ["./examples/IMG_223.jpg"],
+                               ["./examples/IMG_247.jpg"],
+                               ["./examples/IMG_270.jpg"],
+                               ["./examples/IMG_306.jpg"],
+                           ],
+                           # cache_examples=True,
+                           examples_per_page=10,
+                           allow_flagging=False,
+                           theme=gr.themes.Default(),
+                           )
+    gr_demo.launch(share=True, server_port=7861, favicon_path="./assets/reset.png")
|
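The sliding-window inference in `demo.py` clamps the last window back inside the image (`max(min(h - rh, i), 0)`) and then divides the accumulated prediction by a summed mask, so pixels covered by two windows are averaged rather than counted twice. The one-dimensional sketch below reproduces only that index arithmetic. The helper names `tile_windows` and `coverage` are illustrative and do not appear in the repository.

```python
def tile_windows(length, window):
    """Return (start, end) spans along one axis, clamped the way demo.py does."""
    spans = []
    for i in range(0, length, window):
        start = max(min(length - window, i), 0)
        end = min(length, i + window)
        spans.append((start, end))
    return spans


def coverage(length, spans):
    """Count how many windows cover each position (the role of the summed mask)."""
    counts = [0] * length
    for start, end in spans:
        for k in range(start, end):
            counts[k] += 1
    return counts


spans = tile_windows(600, 256)
counts = coverage(600, spans)
print(spans)                     # [(0, 256), (256, 512), (344, 600)]
print(min(counts), max(counts))  # 1 2
print(tile_windows(200, 256))    # [(0, 200)]
```

Every position is covered at least once, so dividing by the mask never divides by zero, and every window has the full crop size whenever the axis is at least as long as the crop.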

examples/IMG_101.jpg

ADDED

|

examples/IMG_125.jpg

ADDED

|

examples/IMG_138.jpg

ADDED

|

examples/IMG_18.jpg

ADDED

|

examples/IMG_180.jpg

ADDED

|

examples/IMG_206.jpg

ADDED

|

examples/IMG_223.jpg

ADDED

|

examples/IMG_247.jpg

ADDED

|

examples/IMG_270.jpg

ADDED

|

examples/IMG_306.jpg

ADDED

|

losses/__init__.py

ADDED

|

@@ -0,0 +1 @@

+
|

losses/bregman_pytorch.py

ADDED

|

@@ -0,0 +1,484 @@

|

| 1 |

+

# -*- coding: utf-8 -*-

|

| 2 |

+

"""

|

| 3 |

+

Rewrite ot.bregman.sinkhorn in Python Optimal Transport (https://pythonot.github.io/_modules/ot/bregman.html#sinkhorn)

|

| 4 |

+

using pytorch operations.

|

| 5 |

+

Bregman projections for regularized OT (Sinkhorn distance).

|

| 6 |

+

"""

|

| 7 |

+

|

| 8 |

+

import torch

|

| 9 |

+

|

| 10 |

+

M_EPS = 1e-16

|

| 11 |

+

|

| 12 |

+

|

| 13 |

+

def sinkhorn(a, b, C, reg=1e-1, method='sinkhorn', maxIter=1000, tau=1e3,
             stopThr=1e-9, verbose=False, log=True, warm_start=None, eval_freq=10, print_freq=200, **kwargs):
    r"""
    Solve the entropic regularization optimal transport problem.
    The inputs should be PyTorch tensors.
    The function solves the following optimization problem:

    .. math::
        \gamma = \arg\min_\gamma <\gamma,C>_F + reg\cdot\Omega(\gamma)
        s.t. \gamma 1 = a
             \gamma^T 1 = b
             \gamma \geq 0
    where:
    - C is the (na,nb) metric cost matrix
    - :math:`\Omega` is the entropic regularization term :math:`\Omega(\gamma)=\sum_{i,j} \gamma_{i,j}\log(\gamma_{i,j})`
    - a and b are target and source measures (sum to 1)
    The algorithm used for solving the problem is the Sinkhorn-Knopp matrix scaling algorithm as proposed in [1].

    Parameters
    ----------
    a : torch.tensor (na,)
        samples measure in the target domain
    b : torch.tensor (nb,)
        samples measure in the source domain
    C : torch.tensor (na,nb)
        loss matrix
    reg : float
        Regularization term > 0
    method : str
        method used for the solver, either 'sinkhorn', 'sinkhorn_stabilized' or
        'sinkhorn_epsilon_scaling'; see those functions for specific parameters
    maxIter : int, optional
        Max number of iterations
    tau : float, optional
        forwarded to 'sinkhorn_stabilized' and 'sinkhorn_epsilon_scaling' only
    stopThr : float, optional
        Stop threshold on error (> 0)
    verbose : bool, optional
        Print information along iterations
    log : bool, optional
        record log if True
    warm_start, eval_freq, print_freq : optional
        forwarded unchanged to the selected solver

    Returns
    -------
    gamma : (na x nb) torch.tensor
        Optimal transportation matrix for the given parameters
    log : dict
        log dictionary, returned only if log is True

    References
    ----------
    [1] M. Cuturi, Sinkhorn Distances: Lightspeed Computation of Optimal Transport, Advances in Neural Information Processing Systems (NIPS) 26, 2013

    """

    if method.lower() == 'sinkhorn':
        return sinkhorn_knopp(a, b, C, reg, maxIter=maxIter,
                              stopThr=stopThr, verbose=verbose, log=log,
                              warm_start=warm_start, eval_freq=eval_freq, print_freq=print_freq,
                              **kwargs)
    elif method.lower() == 'sinkhorn_stabilized':
        return sinkhorn_stabilized(a, b, C, reg, maxIter=maxIter, tau=tau,
                                   stopThr=stopThr, verbose=verbose, log=log,
                                   warm_start=warm_start, eval_freq=eval_freq, print_freq=print_freq,
                                   **kwargs)
    elif method.lower() == 'sinkhorn_epsilon_scaling':
        return sinkhorn_epsilon_scaling(a, b, C, reg,
                                        maxIter=maxIter, maxInnerIter=100, tau=tau,
                                        scaling_base=0.75, scaling_coef=None, stopThr=stopThr,
                                        verbose=False, log=log, warm_start=warm_start, eval_freq=eval_freq,
                                        print_freq=print_freq, **kwargs)
    else:
        raise ValueError("Unknown method '%s'." % method)
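
The docstring above states the entropic OT problem only in symbols. As a small illustration that is not part of the original file, the following dependency-free sketch evaluates the objective `<gamma, C>_F + reg * Omega(gamma)` for the independent coupling `gamma = a b^T`, which always satisfies both marginal constraints. The helper name `entropic_ot_objective` and the toy values of `a`, `b`, `C` are assumptions made for this example.

```python
import math


def entropic_ot_objective(gamma, C, reg):
    """Return <gamma, C>_F + reg * sum_ij gamma_ij * log(gamma_ij).

    Zero entries contribute nothing to the entropy term (0 * log 0 := 0).
    """
    cost = sum(g * c for g_row, c_row in zip(gamma, C) for g, c in zip(g_row, c_row))
    neg_entropy = sum(g * math.log(g) for g_row in gamma for g in g_row if g > 0)
    return cost + reg * neg_entropy


# Toy problem (hypothetical values): 2 target bins, 3 source bins.
a = [0.5, 0.5]
b = [0.25, 0.25, 0.5]
C = [[0.0, 1.0, 2.0],
     [1.0, 0.0, 1.0]]

# Independent coupling gamma_ij = a_i * b_j: feasible, but generally not optimal.
gamma = [[ai * bj for bj in b] for ai in a]

row_sums = [sum(row) for row in gamma]        # should reproduce a
col_sums = [sum(col) for col in zip(*gamma)]  # should reproduce b
objective = entropic_ot_objective(gamma, C, reg=0.1)
```

Any plan returned by the solvers in this file should satisfy the same two marginal checks (up to the stopping tolerance) while achieving an objective no larger than that of this feasible baseline.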


def sinkhorn_knopp(a, b, C, reg=1e-1, maxIter=1000, stopThr=1e-9,
                   verbose=False, log=False, warm_start=None, eval_freq=10, print_freq=200, **kwargs):
    r"""
    Solve the entropic regularization optimal transport problem.
    The inputs should be PyTorch tensors.
    The function solves the following optimization problem:

    .. math::
        \gamma = \arg\min_\gamma <\gamma,C>_F + reg\cdot\Omega(\gamma)
        s.t. \gamma 1 = a
             \gamma^T 1 = b
             \gamma \geq 0
    where:
    - C is the (na,nb) metric cost matrix
    - :math:`\Omega` is the entropic regularization term :math:`\Omega(\gamma)=\sum_{i,j} \gamma_{i,j}\log(\gamma_{i,j})`
    - a and b are target and source measures (sum to 1)
    The algorithm used for solving the problem is the Sinkhorn-Knopp matrix scaling algorithm as proposed in [1].

    Parameters
    ----------
    a : torch.tensor (na,)
        samples measure in the target domain
    b : torch.tensor (nb,)
        samples measure in the source domain
    C : torch.tensor (na,nb)
        loss matrix
    reg : float
        Regularization term > 0
    maxIter : int, optional
        Max number of iterations
    stopThr : float, optional
        Stop threshold on error (> 0)
    verbose : bool, optional
        Print information along iterations
    log : bool, optional
        record log if True
    warm_start : dict, optional
        initial scaling vectors, stored under the keys 'u' and 'v'
    eval_freq : int, optional
        the constraint error is evaluated every eval_freq iterations
    print_freq : int, optional
        if verbose, the error is printed every print_freq iterations

    Returns
    -------
    gamma : (na x nb) torch.tensor
        Optimal transportation matrix for the given parameters
    log : dict
        log dictionary, returned only if log is True

    References
    ----------
    [1] M. Cuturi, Sinkhorn Distances: Lightspeed Computation of Optimal Transport, Advances in Neural Information Processing Systems (NIPS) 26, 2013

    """

    device = a.device
    na, nb = C.shape

    assert na >= 1 and nb >= 1, 'C needs to be 2d'
    assert na == a.shape[0] and nb == b.shape[0], "Shape of a or b doesn't match that of C"
    assert reg > 0, 'reg should be greater than 0'
    assert a.min() >= 0. and b.min() >= 0., 'Elements in a or b less than 0'

    if log:
        log = {'err': []}

    if warm_start is not None:
        u = warm_start['u']
        v = warm_start['v']
    else:
        u = torch.ones(na, dtype=a.dtype).to(device) / na
        v = torch.ones(nb, dtype=b.dtype).to(device) / nb

    # Gibbs kernel K = exp(-C / reg), computed in place
    K = torch.empty(C.shape, dtype=C.dtype).to(device)
    torch.div(C, -reg, out=K)
    torch.exp(K, out=K)

    b_hat = torch.empty(b.shape, dtype=C.dtype).to(device)

    it = 1
    err = 1

    # allocate memory beforehand
    KTu = torch.empty(v.shape, dtype=v.dtype).to(device)
    Kv = torch.empty(u.shape, dtype=u.dtype).to(device)

    while (err > stopThr and it <= maxIter):
        upre, vpre = u, v
        torch.matmul(u, K, out=KTu)
        v = torch.div(b, KTu + M_EPS)
        torch.matmul(K, v, out=Kv)
        u = torch.div(a, Kv + M_EPS)

        if torch.any(torch.isnan(u)) or torch.any(torch.isnan(v)) or \
                torch.any(torch.isinf(u)) or torch.any(torch.isinf(v)):
            print('Warning: numerical errors at iteration', it)
            u, v = upre, vpre
            break

        # NOTE: err is refreshed only when log is truthy, so with log=False
        # the loop always runs for maxIter iterations.
        if log and it % eval_freq == 0:
            # we can speed up the process by checking the error only every
            # eval_freq iterations
            # below is equivalent to:
            # b_hat = torch.sum(u.reshape(-1, 1) * K * v.reshape(1, -1), 0)
            # but more memory efficient
            b_hat = torch.matmul(u, K) * v
            err = (b - b_hat).pow(2).sum().item()
            # err = (b - b_hat).abs().sum().item()
            log['err'].append(err)

        if verbose and it % print_freq == 0:
            print('iteration {:5d}, constraint error {:5e}'.format(it, err))

        it += 1

    if log:
        log['u'] = u
        log['v'] = v
        log['alpha'] = reg * torch.log(u + M_EPS)
        log['beta'] = reg * torch.log(v + M_EPS)

    # transport plan
    P = u.reshape(-1, 1) * K * v.reshape(1, -1)
    if log:
        return P, log
    else:
        return P
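
To make the scaling iteration in `sinkhorn_knopp` easier to follow, here is a minimal sketch of the same update rule, `v = b / (K^T u)` followed by `u = a / (K v)`, written with plain Python lists instead of tensors. It is an illustration under simplifying assumptions, not the file's implementation: there is no warm start, no logging, and the constraint error is checked on every iteration rather than every `eval_freq` iterations. The name `sinkhorn_knopp_sketch` and the toy inputs are hypothetical.

```python
import math

M_EPS = 1e-16  # same guard against division by zero as in the file above


def sinkhorn_knopp_sketch(a, b, C, reg=0.1, max_iter=1000, stop_thr=1e-9):
    """Return the plan P = diag(u) K diag(v) with K = exp(-C / reg)."""
    na, nb = len(a), len(b)
    K = [[math.exp(-C[i][j] / reg) for j in range(nb)] for i in range(na)]
    u = [1.0 / na] * na
    v = [1.0 / nb] * nb

    for _ in range(max_iter):
        # v <- b / (K^T u): rescale columns towards the marginal b
        v = [b[j] / (sum(K[i][j] * u[i] for i in range(na)) + M_EPS) for j in range(nb)]
        # u <- a / (K v): rescale rows towards the marginal a
        u = [a[i] / (sum(K[i][j] * v[j] for j in range(nb)) + M_EPS) for i in range(na)]

        # Column marginal of the current plan and its squared error against b
        b_hat = [v[j] * sum(u[i] * K[i][j] for i in range(na)) for j in range(nb)]
        err = sum((bj - bh) ** 2 for bj, bh in zip(b, b_hat))
        if err <= stop_thr:
            break

    return [[u[i] * K[i][j] * v[j] for j in range(nb)] for i in range(na)]


# Toy problem (hypothetical values): 2 target bins, 3 source bins.
a = [0.5, 0.5]
b = [0.25, 0.25, 0.5]
C = [[0.0, 1.0, 2.0],
     [1.0, 0.0, 1.0]]

P = sinkhorn_knopp_sketch(a, b, C, reg=1.0)
row_marginal = [sum(row) for row in P]        # close to a
col_marginal = [sum(col) for col in zip(*P)]  # close to b
```

Because `u` is updated last, the row marginal matches `a` almost exactly after every iteration, and only the column marginal needs to be monitored. This is why the original code measures the error against `b` alone.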


def sinkhorn_stabilized(a, b, C, reg=1e-1, maxIter=1000, tau=1e3, stopThr=1e-9,
                        verbose=False, log=False, warm_start=None, eval_freq=10, print_freq=200, **kwargs):
    r"""
    Solve the entropic regularization OT problem with log stabilization.
    The function solves the following optimization problem:

    .. math::
        \gamma = \arg\min_\gamma <\gamma,C>_F + reg\cdot\Omega(\gamma)
        s.t. \gamma 1 = a
             \gamma^T 1 = b
             \gamma \geq 0
    where:
    - C is the (na,nb) metric cost matrix
    - :math:`\Omega` is the entropic regularization term :math:`\Omega(\gamma)=\sum_{i,j} \gamma_{i,j}\log(\gamma_{i,j})`
    - a and b are target and source measures (sum to 1)

    The algorithm used for solving the problem is the Sinkhorn-Knopp matrix scaling algorithm as proposed in [1],
    but with the log stabilization proposed in [3] and defined in [2] (Algo 3.1).

    Parameters
    ----------
    a : torch.tensor (na,)
        samples measure in the target domain
    b : torch.tensor (nb,)
        samples measure in the source domain
    C : torch.tensor (na,nb)
        loss matrix
    reg : float
        Regularization term > 0
    tau : float
        threshold on the magnitude of u or v that triggers log scaling
        (the code compares the sum of absolute values against tau)
    maxIter : int, optional
        Max number of iterations
    stopThr : float, optional
        Stop threshold on error (> 0)
    verbose : bool, optional
        Print information along iterations
    log : bool, optional
        record log if True
    warm_start : dict, optional
        initial dual potentials, stored under the keys 'alpha' and 'beta'

    Returns
    -------
    gamma : (na x nb) torch.tensor
        Optimal transportation matrix for the given parameters
    log : dict
        log dictionary, returned only if log is True

    References
    ----------
    [1] M. Cuturi, Sinkhorn Distances: Lightspeed Computation of Optimal Transport, Advances in Neural Information Processing Systems (NIPS) 26, 2013
    [2] Bernhard Schmitzer. Stabilized Sparse Scaling Algorithms for Entropy Regularized Transport Problems. SIAM Journal on Scientific Computing, 2019
    [3] Chizat, L., Peyré, G., Schmitzer, B., & Vialard, F. X. (2016). Scaling algorithms for unbalanced transport problems. arXiv preprint arXiv:1607.05816.

    """

    device = a.device
    na, nb = C.shape

    assert na >= 1 and nb >= 1, 'C needs to be 2d'
    assert na == a.shape[0] and nb == b.shape[0], "Shape of a or b doesn't match that of C"
    assert reg > 0, 'reg should be greater than 0'
    assert a.min() >= 0. and b.min() >= 0., 'Elements in a or b less than 0'

    if log:
        log = {'err': []}

    if warm_start is not None:
        alpha = warm_start['alpha']
        beta = warm_start['beta']
    else:
        alpha = torch.zeros(na, dtype=a.dtype).to(device)
        beta = torch.zeros(nb, dtype=b.dtype).to(device)

    u = torch.ones(na, dtype=a.dtype).to(device) / na
    v = torch.ones(nb, dtype=b.dtype).to(device) / nb

    def update_K(alpha, beta):
        """Log-space computation of K = exp((alpha + beta - C) / reg), done in place to save memory."""
        torch.add(alpha.reshape(-1, 1), beta.reshape(1, -1), out=K)
        torch.add(K, -C, out=K)
        torch.div(K, reg, out=K)
        torch.exp(K, out=K)

    def update_P(alpha, beta, u, v, ab_updated=False):
        """Log-space computation of P (gamma), done in place."""
        torch.add(alpha.reshape(-1, 1), beta.reshape(1, -1), out=P)
        torch.add(P, -C, out=P)
        torch.div(P, reg, out=P)
        if not ab_updated:
            torch.add(P, torch.log(u + M_EPS).reshape(-1, 1), out=P)
            torch.add(P, torch.log(v + M_EPS).reshape(1, -1), out=P)
        torch.exp(P, out=P)

    K = torch.empty(C.shape, dtype=C.dtype).to(device)
    update_K(alpha, beta)

    b_hat = torch.empty(b.shape, dtype=C.dtype).to(device)

    it = 1
    err = 1
    ab_updated = False

    # allocate memory beforehand
    KTu = torch.empty(v.shape, dtype=v.dtype).to(device)
    Kv = torch.empty(u.shape, dtype=u.dtype).to(device)
    P = torch.empty(C.shape, dtype=C.dtype).to(device)

    while (err > stopThr and it <= maxIter):
        upre, vpre = u, v
        torch.matmul(u, K, out=KTu)
        v = torch.div(b, KTu + M_EPS)
        torch.matmul(K, v, out=Kv)
        u = torch.div(a, Kv + M_EPS)

        ab_updated = False
        # remove numerical problems and store them in K
        if u.abs().sum() > tau or v.abs().sum() > tau:
            alpha += reg * torch.log(u + M_EPS)
            beta += reg * torch.log(v + M_EPS)
            u.fill_(1. / na)
            v.fill_(1. / nb)
            update_K(alpha, beta)
            ab_updated = True

        if log and it % eval_freq == 0:
            # we can speed up the process by checking the error only every
            # eval_freq iterations