Commit 29e2769

Parent(s): bfcc00c

Add index.html shim, and add hero.svg

- app.py +150 -56

- img/hero.png +0 -0

- img/hero.svg +0 -0

- index.html +7 -0

app.py

CHANGED

|

@@ -3,6 +3,7 @@ import pandas as pd

|

|

| 3 |

from PIL import Image

|

| 4 |

import base64

|

| 5 |

from io import BytesIO

|

|

|

|

| 6 |

|

| 7 |

# Define constants

|

| 8 |

MAJOR_A_WIN = "A>>B"

|

|

@@ -50,6 +51,14 @@ def pil_to_base64(img):

|

|

| 50 |

return img_str

|

| 51 |

|

| 52 |

|

| 53 |

# Load your dataframes

|

| 54 |

df_test_set = pd.read_json("data/test_set.jsonl", lines=True)

|

| 55 |

df_responses = pd.read_json("data/responses.jsonl", lines=True)

|

|

@@ -57,7 +66,9 @@ df_response_judging = pd.read_json("data/response_judging.jsonl", lines=True)

|

|

| 57 |

df_leaderboard = (

|

| 58 |

pd.read_csv("data/leaderboard_6_11.csv").sort_values("Rank").reset_index(drop=True)

|

| 59 |

)

|

| 60 |

-

df_leaderboard = df_leaderboard.rename(

|

| 61 |

|

| 62 |

# Prepare the scenario selector options

|

| 63 |

df_test_set["scenario_option"] = (

|

|

@@ -84,7 +95,6 @@ div.stButton > button {

|

|

| 84 |

}

|

| 85 |

</style>

|

| 86 |

"""

|

| 87 |

-

|

| 88 |

st.markdown(full_width_button_css, unsafe_allow_html=True)

|

| 89 |

|

| 90 |

# Create a button that triggers the JavaScript function

|

|

@@ -104,8 +114,11 @@ with col2:

|

|

| 104 |

st.write("Button 2 clicked")

|

| 105 |

|

| 106 |

with col3:

|

| 107 |

-

|

| 108 |

-

|

| 109 |

|

| 110 |

# Custom CSS to center title and header

|

| 111 |

center_css = """

|

|

@@ -118,35 +131,48 @@ h1, h2, h6{

|

|

| 118 |

|

| 119 |

st.markdown(center_css, unsafe_allow_html=True)

|

| 120 |

|

| 121 |

-

#

|

| 122 |

-

image = Image.open("img/lmc_icon.png")

|

| 123 |

-

|

| 124 |

-

#

|

| 125 |

-

|

| 126 |

-

|

| 127 |

-

#

|

| 128 |

-

|

| 129 |

-

|

| 130 |

-

|

| 131 |

-

|

| 132 |

-

"""

|

| 133 |

-

|

| 134 |

-

# Rendering the centered image

|

| 135 |

-

st.markdown(centered_image_html, unsafe_allow_html=True)

|

| 136 |

-

|

| 137 |

st.title("Language Model Council")

|

| 138 |

st.markdown(

|

| 139 |

-

"###### Benchmarking Foundation Models on Highly Subjective Tasks by Consensus"

|

| 140 |

)

|

| 141 |

|

| 142 |

-

|

| 143 |

-

|

| 144 |

-

|

| 145 |

|

| 146 |

-

|

| 147 |

-

|

| 148 |

-

|

| 149 |

-

|

| 150 |

)

|

| 151 |

st.markdown(

|

| 152 |

"This leaderboard comes from deploying a Council of 20 LLMs on an **open-ended emotional intelligence task: responding to interpersonal dilemmas**."

|

|

@@ -175,22 +201,66 @@ def colored_text_box(text, background_color, text_color="black"):

|

|

| 175 |

return html_code

|

| 176 |

|

| 177 |

|

| 178 |

with tabs[1]:

|

| 179 |

st.markdown("### 1. Select a scenario.")

|

| 180 |

# Create the selectors

|

| 181 |

-

selected_scenario = st.selectbox(

|

| 182 |

-

"Select Scenario",

|

| 183 |

)

|

| 184 |

|

| 185 |

# Get the selected scenario details

|

| 186 |

-

if selected_scenario:

|

| 187 |

-

selected_emobench_id = int(selected_scenario.split(": ")[0])

|

| 188 |

scenario_details = df_test_set[

|

| 189 |

df_test_set["emobench_id"] == selected_emobench_id

|

| 190 |

].iloc[0]

|

| 191 |

|

| 192 |

# Display the detailed dilemma and additional information

|

| 193 |

-

# st.write(scenario_details["detailed_dilemma"])

|

| 194 |

st.markdown(

|

| 195 |

colored_text_box(

|

| 196 |

scenario_details["detailed_dilemma"], "#eeeeeeff", "black"

|

|

@@ -217,14 +287,13 @@ with tabs[1]:

|

|

| 217 |

)

|

| 218 |

|

| 219 |

# Get the response string for the fixed model

|

| 220 |

-

if selected_scenario:

|

| 221 |

response_details_fixed = df_responses[

|

| 222 |

(df_responses["emobench_id"] == selected_emobench_id)

|

| 223 |

& (df_responses["llm_responder"] == fixed_model)

|

| 224 |

].iloc[0]

|

| 225 |

|

| 226 |

# Display the response string

|

| 227 |

-

# st.write(response_details_fixed["response_string"])

|

| 228 |

st.markdown(

|

| 229 |

colored_text_box(

|

| 230 |

response_details_fixed["response_string"], "#eeeeeeff", "black"

|

|

@@ -233,19 +302,26 @@ with tabs[1]:

|

|

| 233 |

)

|

| 234 |

|

| 235 |

with col2:

|

| 236 |

-

selected_model = st.selectbox(

|

| 237 |

-

"Select Model",

|

| 238 |

)

|

| 239 |

|

| 240 |

# Get the response string for the selected model

|

| 241 |

-

if selected_model and selected_scenario:

|

| 242 |

response_details_dynamic = df_responses[

|

| 243 |

(df_responses["emobench_id"] == selected_emobench_id)

|

| 244 |

-

& (df_responses["llm_responder"] == selected_model)

|

| 245 |

].iloc[0]

|

| 246 |

|

| 247 |

# Display the response string

|

| 248 |

-

# st.write(response_details_dynamic["response_string"])

|

| 249 |

st.markdown(

|

| 250 |

colored_text_box(

|

| 251 |

response_details_dynamic["response_string"], "#eeeeeeff", "black"

|

|

@@ -262,43 +338,65 @@ with tabs[1]:

|

|

| 262 |

col1, col2 = st.columns(2)

|

| 263 |

|

| 264 |

with col1:

|

| 265 |

-

st.write(f"**{fixed_model}** vs **{selected_model}**")

|

| 266 |

pairwise_counts_left = df_response_judging[

|

| 267 |

(df_response_judging["first_completion_by"] == fixed_model)

|

| 268 |

-

& (

|

| 269 |

]["pairwise_choice"].value_counts()

|

| 270 |

st.bar_chart(pairwise_counts_left)

|

| 271 |

|

| 272 |

with col2:

|

| 273 |

-

st.write(f"**{selected_model}** vs **{fixed_model}**")

|

| 274 |

pairwise_counts_right = df_response_judging[

|

| 275 |

-

(

|

| 276 |

& (df_response_judging["second_completion_by"] == fixed_model)

|

| 277 |

]["pairwise_choice"].value_counts()

|

| 278 |

st.bar_chart(pairwise_counts_right)

|

| 279 |

|

| 280 |

# Create the llm_judge selector

|

| 281 |

-

st.markdown("####

|

| 282 |

-

selected_judge = st.selectbox(

|

| 283 |

-

"Select Judge",

|

| 284 |

)

|

| 285 |

|

| 286 |

# Get the judging details for the selected judge and models

|

| 287 |

-

if selected_judge and selected_scenario:

|

| 288 |

col1, col2 = st.columns(2)

|

| 289 |

|

| 290 |

judging_details_left = df_response_judging[

|

| 291 |

-

(df_response_judging["llm_judge"] == selected_judge)

|

| 292 |

& (df_response_judging["first_completion_by"] == fixed_model)

|

| 293 |

-

& (

|

| 294 |

].iloc[0]

|

| 295 |

|

| 296 |

judging_details_right = df_response_judging[

|

| 297 |

-

(df_response_judging["llm_judge"] == selected_judge)

|

| 298 |

-

& (

|

| 299 |

& (df_response_judging["second_completion_by"] == fixed_model)

|

| 300 |

].iloc[0]

|

| 301 |

|

|

|

|

| 302 |

if is_consistent(

|

| 303 |

judging_details_left["pairwise_choice"],

|

| 304 |

judging_details_right["pairwise_choice"],

|

|

@@ -309,12 +407,10 @@ with tabs[1]:

|

|

| 309 |

|

| 310 |

# Display the judging details

|

| 311 |

with col1:

|

| 312 |

-

# st.write(f"**{fixed_model}** vs **{selected_model}**")

|

| 313 |

if not judging_details_left.empty:

|

| 314 |

st.write(

|

| 315 |

f"**Pairwise Choice:** {judging_details_left['pairwise_choice']}"

|

| 316 |

)

|

| 317 |

-

# st.code(judging_details_left["judging_response_string"])

|

| 318 |

st.markdown(

|

| 319 |

colored_text_box(

|

| 320 |

judging_details_left["judging_response_string"],

|

|

@@ -327,12 +423,10 @@ with tabs[1]:

|

|

| 327 |

st.write("No judging details found for the selected combination.")

|

| 328 |

|

| 329 |

with col2:

|

| 330 |

-

# st.write(f"**{selected_model}** vs **{fixed_model}**")

|

| 331 |

if not judging_details_right.empty:

|

| 332 |

st.write(

|

| 333 |

f"**Pairwise Choice:** {judging_details_right['pairwise_choice']}"

|

| 334 |

)

|

| 335 |

-

# st.code(judging_details_right["judging_response_string"])

|

| 336 |

st.markdown(

|

| 337 |

colored_text_box(

|

| 338 |

judging_details_right["judging_response_string"],

|

|

|

|

| 3 |

from PIL import Image

|

| 4 |

import base64

|

| 5 |

from io import BytesIO

|

| 6 |

+

import random

|

| 7 |

|

| 8 |

# Define constants

|

| 9 |

MAJOR_A_WIN = "A>>B"

|

|

|

|

| 51 |

return img_str

|

| 52 |

|

| 53 |

|

| 54 |

+

# Function to convert PIL image to base64

|

| 55 |

+

def pil_svg_to_base64(img):

|

| 56 |

+

buffered = BytesIO()

|

| 57 |

+

img.save(buffered, format="SVG")

|

| 58 |

+

img_str = base64.b64encode(buffered.getvalue()).decode()

|

| 59 |

+

return img_str

|

| 60 |

+

|

| 61 |

+

|

| 62 |

# Load your dataframes

|

| 63 |

df_test_set = pd.read_json("data/test_set.jsonl", lines=True)

|

| 64 |

df_responses = pd.read_json("data/responses.jsonl", lines=True)

|

|

|

|

| 66 |

df_leaderboard = (

|

| 67 |

pd.read_csv("data/leaderboard_6_11.csv").sort_values("Rank").reset_index(drop=True)

|

| 68 |

)

|

| 69 |

+

df_leaderboard = df_leaderboard.rename(

|

| 70 |

+

columns={"EI Score": "Council Arena EI Score (95% CI)"}

|

| 71 |

+

)

|

| 72 |

|

| 73 |

# Prepare the scenario selector options

|

| 74 |

df_test_set["scenario_option"] = (

|

|

|

|

| 95 |

}

|

| 96 |

</style>

|

| 97 |

"""

|

|

|

|

| 98 |

st.markdown(full_width_button_css, unsafe_allow_html=True)

|

| 99 |

|

| 100 |

# Create a button that triggers the JavaScript function

|

|

|

|

| 114 |

st.write("Button 2 clicked")

|

| 115 |

|

| 116 |

with col3:

|

| 117 |

+

st.link_button(

|

| 118 |

+

"Github",

|

| 119 |

+

"https://github.com/llm-council/llm-council",

|

| 120 |

+

use_container_width=True,

|

| 121 |

+

)

|

| 122 |

|

| 123 |

# Custom CSS to center title and header

|

| 124 |

center_css = """

|

|

|

|

| 131 |

|

| 132 |

st.markdown(center_css, unsafe_allow_html=True)

|

| 133 |

|

| 134 |

+

# Centered icon.

|

| 135 |

+

# image = Image.open("img/lmc_icon.png")

|

| 136 |

+

# img_base64 = pil_to_base64(image)

|

| 137 |

+

# centered_image_html = f"""

|

| 138 |

+

# <div style="text-align: center;">

|

| 139 |

+

# <img src="data:image/png;base64,{img_base64}" width="50"/>

|

| 140 |

+

# </div>

|

| 141 |

+

# """

|

| 142 |

+

# st.markdown(centered_image_html, unsafe_allow_html=True)

|

| 143 |

+

|

| 144 |

+

# Title and subtitle.

|

| 145 |

st.title("Language Model Council")

|

| 146 |

st.markdown(

|

| 147 |

+

"###### Benchmarking Foundation Models on Highly Subjective Tasks by Consensus :classical_building:"

|

| 148 |

)

|

| 149 |

|

| 150 |

+

# Render hero image.

|

| 151 |

+

with open("img/hero.svg", "r") as file:

|

| 152 |

+

svg_content = file.read()

|

| 153 |

|

| 154 |

+

left_co, cent_co, last_co = st.columns([0.2, 0.6, 0.2])

|

| 155 |

+

with cent_co:

|

| 156 |

+

st.image(svg_content, use_column_width=True)

|

| 157 |

+

|

| 158 |

+

|

| 159 |

+

with cent_co.expander("Abstract"):

|

| 160 |

+

st.markdown(

|

| 161 |

+

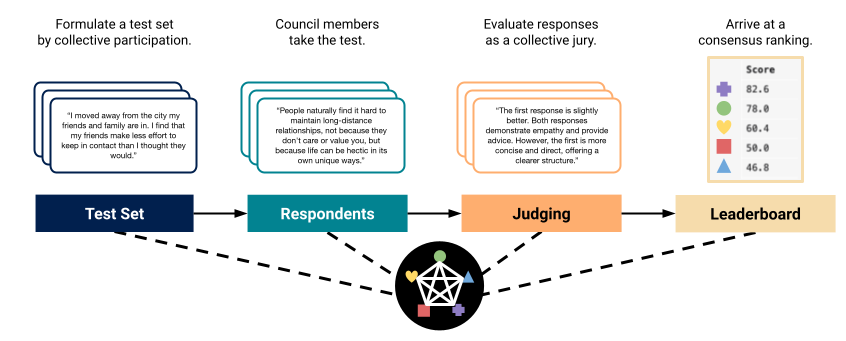

"""The rapid advancement of Large Language Models (LLMs) necessitates robust

|

| 162 |

+

and challenging benchmarks. Leaderboards like Chatbot Arena rank LLMs based

|

| 163 |

+

on how well their responses align with human preferences. However, many tasks

|

| 164 |

+

such as those related to emotional intelligence, creative writing, or persuasiveness,

|

| 165 |

+

are highly subjective and often lack majoritarian human agreement. Judges may

|

| 166 |

+

have irreconcilable disagreements about what constitutes a better response. To

|

| 167 |

+

address the challenge of ranking LLMs on highly subjective tasks, we propose

|

| 168 |

+

a novel benchmarking framework, the Language Model Council (LMC). The

|

| 169 |

+

LMC operates through a democratic process to: 1) formulate a test set through

|

| 170 |

+

equal participation, 2) administer the test among council members, and 3) evaluate

|

| 171 |

+

responses as a collective jury. We deploy a council of 20 newest LLMs on an

|

| 172 |

+

open-ended emotional intelligence task: responding to interpersonal dilemmas.

|

| 173 |

+

Our results show that the LMC produces rankings that are more separable, robust,

|

| 174 |

+

and less biased than those from any individual LLM judge, and is more consistent

|

| 175 |

+

with a human-established leaderboard compared to other benchmarks."""

|

| 176 |

)

|

| 177 |

st.markdown(

|

| 178 |

"This leaderboard comes from deploying a Council of 20 LLMs on an **open-ended emotional intelligence task: responding to interpersonal dilemmas**."

|

|

|

|

| 201 |

return html_code

|

| 202 |

|

| 203 |

|

| 204 |

+

# Ensure to initialize session state variables if they do not exist

|

| 205 |

+

if "selected_scenario" not in st.session_state:

|

| 206 |

+

st.session_state.selected_scenario = None

|

| 207 |

+

|

| 208 |

+

if "selected_model" not in st.session_state:

|

| 209 |

+

st.session_state.selected_model = None

|

| 210 |

+

|

| 211 |

+

if "selected_judge" not in st.session_state:

|

| 212 |

+

st.session_state.selected_judge = None

|

| 213 |

+

|

| 214 |

+

|

| 215 |

+

# Define callback functions to update session state

|

| 216 |

+

def update_scenario():

|

| 217 |

+

st.session_state.selected_scenario = st.session_state.scenario_selector

|

| 218 |

+

|

| 219 |

+

|

| 220 |

+

def update_model():

|

| 221 |

+

st.session_state.selected_model = st.session_state.model_selector

|

| 222 |

+

|

| 223 |

+

|

| 224 |

+

def update_judge():

|

| 225 |

+

st.session_state.selected_judge = st.session_state.judge_selector

|

| 226 |

+

|

| 227 |

+

|

| 228 |

+

def randomize_selection():

|

| 229 |

+

st.session_state.selected_scenario = random.choice(scenario_options)

|

| 230 |

+

st.session_state.selected_model = random.choice(model_options)

|

| 231 |

+

st.session_state.selected_judge = random.choice(judge_options)

|

| 232 |

+

|

| 233 |

+

|

| 234 |

with tabs[1]:

|

| 235 |

+

# Add randomize button at the top of the app

|

| 236 |

+

_, mid_column, _ = st.columns([0.4, 0.2, 0.4])

|

| 237 |

+

mid_column.button(

|

| 238 |

+

":game_die: Randomize!", on_click=randomize_selection, type="primary"

|

| 239 |

+

)

|

| 240 |

+

|

| 241 |

st.markdown("### 1. Select a scenario.")

|

| 242 |

# Create the selectors

|

| 243 |

+

st.session_state.selected_scenario = st.selectbox(

|

| 244 |

+

"Select Scenario",

|

| 245 |

+

scenario_options,

|

| 246 |

+

label_visibility="hidden",

|

| 247 |

+

key="scenario_selector",

|

| 248 |

+

on_change=update_scenario,

|

| 249 |

+

index=(

|

| 250 |

+

scenario_options.index(st.session_state.selected_scenario)

|

| 251 |

+

if st.session_state.selected_scenario

|

| 252 |

+

else 0

|

| 253 |

+

),

|

| 254 |

)

|

| 255 |

|

| 256 |

# Get the selected scenario details

|

| 257 |

+

if st.session_state.selected_scenario:

|

| 258 |

+

selected_emobench_id = int(st.session_state.selected_scenario.split(": ")[0])

|

| 259 |

scenario_details = df_test_set[

|

| 260 |

df_test_set["emobench_id"] == selected_emobench_id

|

| 261 |

].iloc[0]

|

| 262 |

|

| 263 |

# Display the detailed dilemma and additional information

|

|

|

|

| 264 |

st.markdown(

|

| 265 |

colored_text_box(

|

| 266 |

scenario_details["detailed_dilemma"], "#eeeeeeff", "black"

|

|

|

|

| 287 |

)

|

| 288 |

|

| 289 |

# Get the response string for the fixed model

|

| 290 |

+

if st.session_state.selected_scenario:

|

| 291 |

response_details_fixed = df_responses[

|

| 292 |

(df_responses["emobench_id"] == selected_emobench_id)

|

| 293 |

& (df_responses["llm_responder"] == fixed_model)

|

| 294 |

].iloc[0]

|

| 295 |

|

| 296 |

# Display the response string

|

|

|

|

| 297 |

st.markdown(

|

| 298 |

colored_text_box(

|

| 299 |

response_details_fixed["response_string"], "#eeeeeeff", "black"

|

|

|

|

| 302 |

)

|

| 303 |

|

| 304 |

with col2:

|

| 305 |

+

st.session_state.selected_model = st.selectbox(

|

| 306 |

+

"Select Model",

|

| 307 |

+

model_options,

|

| 308 |

+

key="model_selector",

|

| 309 |

+

on_change=update_model,

|

| 310 |

+

index=(

|

| 311 |

+

model_options.index(st.session_state.selected_model)

|

| 312 |

+

if st.session_state.selected_model

|

| 313 |

+

else 0

|

| 314 |

+

),

|

| 315 |

)

|

| 316 |

|

| 317 |

# Get the response string for the selected model

|

| 318 |

+

if st.session_state.selected_model and st.session_state.selected_scenario:

|

| 319 |

response_details_dynamic = df_responses[

|

| 320 |

(df_responses["emobench_id"] == selected_emobench_id)

|

| 321 |

+

& (df_responses["llm_responder"] == st.session_state.selected_model)

|

| 322 |

].iloc[0]

|

| 323 |

|

| 324 |

# Display the response string

|

|

|

|

| 325 |

st.markdown(

|

| 326 |

colored_text_box(

|

| 327 |

response_details_dynamic["response_string"], "#eeeeeeff", "black"

|

|

|

|

| 338 |

col1, col2 = st.columns(2)

|

| 339 |

|

| 340 |

with col1:

|

| 341 |

+

st.write(f"**{fixed_model}** vs **{st.session_state.selected_model}**")

|

| 342 |

pairwise_counts_left = df_response_judging[

|

| 343 |

(df_response_judging["first_completion_by"] == fixed_model)

|

| 344 |

+

& (

|

| 345 |

+

df_response_judging["second_completion_by"]

|

| 346 |

+

== st.session_state.selected_model

|

| 347 |

+

)

|

| 348 |

]["pairwise_choice"].value_counts()

|

| 349 |

st.bar_chart(pairwise_counts_left)

|

| 350 |

|

| 351 |

with col2:

|

| 352 |

+

st.write(f"**{st.session_state.selected_model}** vs **{fixed_model}**")

|

| 353 |

pairwise_counts_right = df_response_judging[

|

| 354 |

+

(

|

| 355 |

+

df_response_judging["first_completion_by"]

|

| 356 |

+

== st.session_state.selected_model

|

| 357 |

+

)

|

| 358 |

& (df_response_judging["second_completion_by"] == fixed_model)

|

| 359 |

]["pairwise_choice"].value_counts()

|

| 360 |

st.bar_chart(pairwise_counts_right)

|

| 361 |

|

| 362 |

# Create the llm_judge selector

|

| 363 |

+

st.markdown("#### Individual LLM judges")

|

| 364 |

+

st.session_state.selected_judge = st.selectbox(

|

| 365 |

+

"Select Judge",

|

| 366 |

+

judge_options,

|

| 367 |

+

label_visibility="hidden",

|

| 368 |

+

key="judge_selector",

|

| 369 |

+

on_change=update_judge,

|

| 370 |

+

index=(

|

| 371 |

+

judge_options.index(st.session_state.selected_judge)

|

| 372 |

+

if st.session_state.selected_judge

|

| 373 |

+

else 0

|

| 374 |

+

),

|

| 375 |

)

|

| 376 |

|

| 377 |

# Get the judging details for the selected judge and models

|

| 378 |

+

if st.session_state.selected_judge and st.session_state.selected_scenario:

|

| 379 |

col1, col2 = st.columns(2)

|

| 380 |

|

| 381 |

judging_details_left = df_response_judging[

|

| 382 |

+

(df_response_judging["llm_judge"] == st.session_state.selected_judge)

|

| 383 |

& (df_response_judging["first_completion_by"] == fixed_model)

|

| 384 |

+

& (

|

| 385 |

+

df_response_judging["second_completion_by"]

|

| 386 |

+

== st.session_state.selected_model

|

| 387 |

+

)

|

| 388 |

].iloc[0]

|

| 389 |

|

| 390 |

judging_details_right = df_response_judging[

|

| 391 |

+

(df_response_judging["llm_judge"] == st.session_state.selected_judge)

|

| 392 |

+

& (

|

| 393 |

+

df_response_judging["first_completion_by"]

|

| 394 |

+

== st.session_state.selected_model

|

| 395 |

+

)

|

| 396 |

& (df_response_judging["second_completion_by"] == fixed_model)

|

| 397 |

].iloc[0]

|

| 398 |

|

| 399 |

+

# Render consistency.

|

| 400 |

if is_consistent(

|

| 401 |

judging_details_left["pairwise_choice"],

|

| 402 |

judging_details_right["pairwise_choice"],

|

|

|

|

| 407 |

|

| 408 |

# Display the judging details

|

| 409 |

with col1:

|

|

|

|

| 410 |

if not judging_details_left.empty:

|

| 411 |

st.write(

|

| 412 |

f"**Pairwise Choice:** {judging_details_left['pairwise_choice']}"

|

| 413 |

)

|

|

|

|

| 414 |

st.markdown(

|

| 415 |

colored_text_box(

|

| 416 |

judging_details_left["judging_response_string"],

|

|

|

|

| 423 |

st.write("No judging details found for the selected combination.")

|

| 424 |

|

| 425 |

with col2:

|

|

|

|

| 426 |

if not judging_details_right.empty:

|

| 427 |

st.write(

|

| 428 |

f"**Pairwise Choice:** {judging_details_right['pairwise_choice']}"

|

| 429 |

)

|

|

|

|

| 430 |

st.markdown(

|

| 431 |

colored_text_box(

|

| 432 |

judging_details_right["judging_response_string"],

|
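Review note on the randomize flow in app.py: `randomize_selection` writes to `selected_scenario`, `selected_model`, and `selected_judge`, while each `st.selectbox` is keyed (`scenario_selector`, `model_selector`, `judge_selector`) and its return value is assigned back to the same `selected_*` entry. As far as I understand Streamlit's widget-state handling, once a keyed widget already has a value in session state, the `index` argument no longer drives it, so the randomized value may be overwritten on the rerun. One possible alternative is for the callback to write to the widget keys themselves. The sketch below takes the state as a plain mapping so it can be exercised without Streamlit; the function signature and the `rng` parameter are assumptions for illustration, not part of the commit.

```python
import random
from typing import MutableMapping, Optional, Sequence


def randomize_selection(
    state: MutableMapping,
    scenario_options: Sequence[str],
    model_options: Sequence[str],
    judge_options: Sequence[str],
    rng: Optional[random.Random] = None,
) -> None:
    """Pick a random scenario, model, and judge and store them under the widget keys.

    `state` is any mutable mapping; in the app it would be `st.session_state`
    (assumption: the selectboxes keep the keys used in this commit).
    """
    if rng is None:
        rng = random.Random()
    # Writing to the widget keys means the selectboxes pick up the new values
    # on the next rerun, instead of relying on `index=`.
    state["scenario_selector"] = rng.choice(scenario_options)
    state["model_selector"] = rng.choice(model_options)
    state["judge_selector"] = rng.choice(judge_options)


if __name__ == "__main__":
    demo_state = {}
    randomize_selection(
        demo_state,
        ["1: first dilemma", "2: second dilemma"],
        ["model-a", "model-b"],
        ["judge-a", "judge-b"],
    )
    print(demo_state)
```

In the app this might be wired up as `mid_column.button(":game_die: Randomize!", on_click=randomize_selection, args=(st.session_state, scenario_options, model_options, judge_options))`, with the downstream code reading the selectbox return values directly. This wiring has not been tested against the app.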

img/hero.png

ADDED

|

img/hero.svg

ADDED
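Review note on `pil_svg_to_base64` in app.py: the new helper calls `img.save(buffered, format="SVG")`, but to my knowledge Pillow does not ship an SVG writer, so that call would likely raise if the helper were ever invoked. The commit itself reads `img/hero.svg` as text and passes it to `st.image`, so the helper appears to be unused. If a base64 form of the SVG is needed later, a minimal sketch is to encode the file's bytes directly, without going through PIL. The function names below are illustrative assumptions, not part of the commit.

```python
import base64
from pathlib import Path


def svg_bytes_to_data_uri(svg_bytes: bytes) -> str:
    """Return a data URI that embeds the given SVG bytes as base64."""
    encoded = base64.b64encode(svg_bytes).decode("ascii")
    return f"data:image/svg+xml;base64,{encoded}"


def svg_file_to_data_uri(path: str) -> str:
    """Read an SVG file from disk and return it as a base64 data URI."""
    return svg_bytes_to_data_uri(Path(path).read_bytes())


if __name__ == "__main__":
    sample = b'<svg xmlns="http://www.w3.org/2000/svg" width="10" height="10"/>'
    uri = svg_bytes_to_data_uri(sample)
    # The URI can be used as the `src` of an <img> tag in HTML passed to st.markdown.
    print(uri.split(",", 1)[0])
```

The resulting URI could replace the `data:image/png;base64,...` source used in the commented-out `centered_image_html` block, should the centered icon be restored with an SVG asset.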

|

index.html

ADDED

|

@@ -0,0 +1,7 @@

|

| 1 |

+

<iframe

|

| 2 |

+

id="your-iframe-id"

|

| 3 |

+

src="https://llm-council-emotional-intelligence-arena.hf.space"

|

| 4 |

+

frameborder="0"

|

| 5 |

+

width="100%"

|

| 6 |

+

height="100%"

|

| 7 |

+

></iframe>

|