Finished code generator

- app.py +70 -30

- contents.py +18 -5

- img/pecore_ui_output_example.png +0 -0

- presets.py +96 -1

- style.py +4 -0

app.py

CHANGED

@@ -27,11 +27,11 @@ from presets import (
     set_zephyr_preset,
     set_gemma_preset,
     set_mistral_instruct_preset,
 )
 from style import custom_css
 from utils import get_formatted_attribute_context_results
 
-from inseq import list_feature_attribution_methods, list_step_functions
 from inseq.commands.attribute_context.attribute_context import (
     AttributeContextArgs,
     attribute_context_with_model,

@@ -291,21 +291,37 @@ with gr.Blocks(css=custom_css) as demo:
     value="kl_divergence",
     label="Context sensitivity metric",
     info="Metric to use to measure context sensitivity of generated tokens.",
-    choices=
     interactive=True,
 )
 attribution_method = gr.Dropdown(
     value="saliency",
     label="Attribution method",
     info="Attribution method identifier to identify relevant context tokens.",
-    choices=
     interactive=True,
 )
 attributed_fn = gr.Dropdown(
     value="contrast_prob_diff",
     label="Attributed function",
     info="Function of model logits to use as target for the attribution method.",
-    choices=
     interactive=True,
 )
 gr.Markdown("#### Results Selection Parameters")

@@ -330,7 +346,7 @@ with gr.Blocks(css=custom_css) as demo:
     maximum=10,
 )
 attribution_std_threshold = gr.Number(
-    value=
     label="Attribution threshold",
     info="Select N to keep attributed tokens with scores above N * std. 0 = above mean.",
     precision=1,

@@ -461,8 +477,23 @@ with gr.Blocks(css=custom_css) as demo:
     gr.Markdown(cci_explanation)
 with gr.Tab("🔧 Usage Guide"):
     gr.Markdown(how_to_use)
-    gr.HTML('<img src="file/img/pecore_ui_output_example.png" width=100% />')
     gr.Markdown(example_explanation)
 with gr.Tab("📚 Citing PECoRe"):
     gr.Markdown(citation)
 with gr.Row(elem_classes="footer-container"):

@@ -479,36 +510,38 @@ with gr.Blocks(css=custom_css) as demo:
     tokenizer_kwargs,
 ]
 
 attribute_input_button.click(
     lambda *args: [gr.DownloadButton(visible=False), gr.DownloadButton(visible=False)],
     inputs=[],
     outputs=[download_output_file_button, download_output_html_button],
 ).then(
     pecore,
-    inputs=[
-        input_current_text,
-        input_context_text,
-        output_current_text,
-        output_context_text,
-        model_name_or_path,
-        attribution_method,
-        attributed_fn,
-        context_sensitivity_metric,
-        context_sensitivity_std_threshold,
-        context_sensitivity_topk,
-        attribution_std_threshold,
-        attribution_topk,
-        input_template,
-        output_template,
-        contextless_input_template,
-        contextless_output_template,
-        special_tokens_to_keep,
-        decoder_input_output_separator,
-        model_kwargs,
-        tokenizer_kwargs,
-        generation_kwargs,
-        attribution_kwargs,
-    ],
     outputs=[
         pecore_output_highlights,
         download_output_file_button,

@@ -617,4 +650,11 @@ with gr.Blocks(css=custom_css) as demo:
     ],
 ).success(preload_model, inputs=load_model_args, cancels=load_model_event)
 
 demo.launch(allowed_paths=["outputs/", "img/"])

@@ -27,11 +27,11 @@ from presets import (
     set_zephyr_preset,
     set_gemma_preset,
     set_mistral_instruct_preset,
+    update_code_snippets_fn,
 )
 from style import custom_css
 from utils import get_formatted_attribute_context_results
 
 from inseq.commands.attribute_context.attribute_context import (
     AttributeContextArgs,
     attribute_context_with_model,

@@ -291,21 +291,37 @@ with gr.Blocks(css=custom_css) as demo:
     value="kl_divergence",
     label="Context sensitivity metric",
     info="Metric to use to measure context sensitivity of generated tokens.",
+    choices=[
+        "probability",
+        "logit",
+        "kl_divergence",
+        "contrast_logits_diff",
+        "contrast_prob_diff",
+        "pcxmi"
+    ],
     interactive=True,
 )
 attribution_method = gr.Dropdown(
     value="saliency",
     label="Attribution method",
     info="Attribution method identifier to identify relevant context tokens.",
+    choices=[
+        "saliency",
+        "input_x_gradient",
+        "value_zeroing",
+    ],
     interactive=True,
 )
 attributed_fn = gr.Dropdown(
     value="contrast_prob_diff",
     label="Attributed function",
     info="Function of model logits to use as target for the attribution method.",
+    choices=[
+        "probability",
+        "logit",
+        "contrast_logits_diff",
+        "contrast_prob_diff",
+    ],
     interactive=True,
 )
 gr.Markdown("#### Results Selection Parameters")
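
The `choices` lists above expose several context-sensitivity metrics. As a rough, hypothetical illustration (not Inseq's implementation), the sketch below computes three of them for a single generated token from made-up next-token distributions with and without the context: `kl_divergence` as KL(P_ctx ‖ P_noctx) over the vocabulary, `contrast_prob_diff` as the change in the generated token's probability, and `pcxmi` as the change in its log-probability. The KL direction and the sign conventions are assumptions.

```python
import math

# Illustrative definitions only; Inseq's own metric implementations may differ.


def kl_divergence(p_ctx: list[float], p_noctx: list[float]) -> float:
    """KL(P_ctx || P_noctx) in nats, summed over a shared vocabulary."""
    return sum(p * math.log(p / q) for p, q in zip(p_ctx, p_noctx) if p > 0)


def contrast_prob_diff(p_ctx: list[float], p_noctx: list[float], token_id: int) -> float:
    """Change in the generated token's probability when the context is added."""
    return p_ctx[token_id] - p_noctx[token_id]


def pcxmi(p_ctx: list[float], p_noctx: list[float], token_id: int) -> float:
    """Pointwise conditional cross-mutual information: log p(y|ctx) - log p(y|no ctx)."""
    return math.log(p_ctx[token_id]) - math.log(p_noctx[token_id])


# Made-up next-token distributions over a 4-token vocabulary; index 2 was generated.
p_ctx = [0.1, 0.1, 0.7, 0.1]
p_noctx = [0.25, 0.25, 0.25, 0.25]
generated = 2

print(f"kl_divergence      = {kl_divergence(p_ctx, p_noctx):.3f}")  # 0.446
print(f"contrast_prob_diff = {contrast_prob_diff(p_ctx, p_noctx, generated):.3f}")  # 0.450
print(f"pcxmi              = {pcxmi(p_ctx, p_noctx, generated):.3f}")  # 1.030
```

KL compares the two full distributions, while the other two metrics only look at the token that was actually generated, so they can disagree when the context reshuffles probability mass among tokens that were not chosen.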

@@ -330,7 +346,7 @@ with gr.Blocks(css=custom_css) as demo:
     maximum=10,
 )
 attribution_std_threshold = gr.Number(
+    value=2.0,
     label="Attribution threshold",
     info="Select N to keep attributed tokens with scores above N * std. 0 = above mean.",
     precision=1,
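
The `Attribution threshold` field above and the usage tips describe keeping context tokens whose scores exceed the mean by N standard deviations, with N = 0 keeping everything above the mean. The sketch below is one reading of that rule, not Inseq's code: it assumes a population standard deviation over all scores and an optional top-k cap where a value of 0 or less means "no cap" (mirroring how `update_code_snippets_fn` turns non-positive top-k values into `None`). `select_tokens` is a hypothetical helper.

```python
import statistics
from typing import Optional


def select_tokens(
    scores: list[float], std_threshold: float = 2.0, topk: Optional[int] = None
) -> list[int]:
    """Indices of scores above mean + std_threshold * std, highest score first.

    std_threshold=0 keeps every score above the mean; topk=None or topk <= 0 means no cap.
    """
    mean = statistics.fmean(scores)
    std = statistics.pstdev(scores)  # population std: an assumption
    cutoff = mean + std_threshold * std
    selected = [i for i, s in enumerate(scores) if s > cutoff]
    selected.sort(key=lambda i: scores[i], reverse=True)
    if topk is not None and topk > 0:
        selected = selected[:topk]
    return selected


# Made-up attribution scores for six context tokens.
scores = [0.5, 4.0, 0.5, 3.0, 0.5, 0.5]
print(select_tokens(scores, std_threshold=0.0))          # [1, 3]
print(select_tokens(scores, std_threshold=0.0, topk=1))  # [1]
print(select_tokens(scores))                             # [] with the default 2.0
```

With only six scores, the default threshold of 2.0 selects nothing, which matches the tip that this default suits longer contexts and should be lowered for short ones.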

@@ -461,8 +477,23 @@ with gr.Blocks(css=custom_css) as demo:
     gr.Markdown(cci_explanation)
 with gr.Tab("🔧 Usage Guide"):
     gr.Markdown(how_to_use)
     gr.Markdown(example_explanation)
+    update_code_snippets = gr.Button("Update code snippets", variant="primary")
+    with gr.Row(equal_height=True):
+        python_code_snippet = gr.Code(
+            value="""Generate Python code snippet by pressing the button.""",
+            language="python",
+            label="Python",
+            interactive=False,
+            show_label=True,
+        )
+        shell_code_snippet = gr.Code(
+            value="""Generate Shell code snippet by pressing the button.""",
+            language="shell",
+            label="Shell",
+            interactive=False,
+            show_label=True,
+        )
 with gr.Tab("📚 Citing PECoRe"):
     gr.Markdown(citation)
 with gr.Row(elem_classes="footer-container"):

@@ -479,36 +510,38 @@ with gr.Blocks(css=custom_css) as demo:
     tokenizer_kwargs,
 ]
 
+pecore_args = [
+    input_current_text,
+    input_context_text,
+    output_current_text,
+    output_context_text,
+    model_name_or_path,
+    attribution_method,
+    attributed_fn,
+    context_sensitivity_metric,
+    context_sensitivity_std_threshold,
+    context_sensitivity_topk,
+    attribution_std_threshold,
+    attribution_topk,
+    input_template,
+    output_template,
+    contextless_input_template,
+    contextless_output_template,
+    special_tokens_to_keep,
+    decoder_input_output_separator,
+    model_kwargs,
+    tokenizer_kwargs,
+    generation_kwargs,
+    attribution_kwargs,
+]
+
 attribute_input_button.click(
     lambda *args: [gr.DownloadButton(visible=False), gr.DownloadButton(visible=False)],
     inputs=[],
     outputs=[download_output_file_button, download_output_html_button],
 ).then(
     pecore,
+    inputs=pecore_args,
     outputs=[
         pecore_output_highlights,
         download_output_file_button,

@@ -617,4 +650,11 @@ with gr.Blocks(css=custom_css) as demo:
     ],
 ).success(preload_model, inputs=load_model_args, cancels=load_model_event)
 
+update_code_snippets.click(
+    update_code_snippets_fn,
+    inputs=pecore_args,
+    outputs=[python_code_snippet, shell_code_snippet],
+
+)
+
 demo.launch(allowed_paths=["outputs/", "img/"])

contents.py

CHANGED

@@ -33,18 +33,31 @@ cci_explanation = """
 """
 
 how_to_use = """
-<
 
 <p>This demo provides a convenient UI for the Inseq implementation of PECoRe (the <a href="https://inseq.org/en/latest/main_classes/cli.html#attribute-context"><code>inseq attribute-context</code></a> CLI command).</p>
 <p>In the demo tab, fill in the input and context fields with the text you want to analyze, and click the <code>Run PECoRe</code> button to produce an output where the tokens selected by PECoRe in the model generation and context are highlighted. For more details on the parameters and their meaning, check the <code>Parameters</code> tab.</p>
 
-<
 """
 
 example_explanation = """
-<p>
-<
-
 """
 
 citation = r"""

|

|

| 33 |

"""

|

| 34 |

|

| 35 |

how_to_use = """

|

| 36 |

+

<h2>How to use this demo</h3>

|

| 37 |

|

| 38 |

<p>This demo provides a convenient UI for the Inseq implementation of PECoRe (the <a href="https://inseq.org/en/latest/main_classes/cli.html#attribute-context"><code>inseq attribute-context</code></a> CLI command).</p>

|

| 39 |

<p>In the demo tab, fill in the input and context fields with the text you want to analyze, and click the <code>Run PECoRe</code> button to produce an output where the tokens selected by PECoRe in the model generation and context are highlighted. For more details on the parameters and their meaning, check the <code>Parameters</code> tab.</p>

|

| 40 |

|

| 41 |

+

<h2>Interpreting PECoRe results</h3>

|

| 42 |

"""

|

| 43 |

|

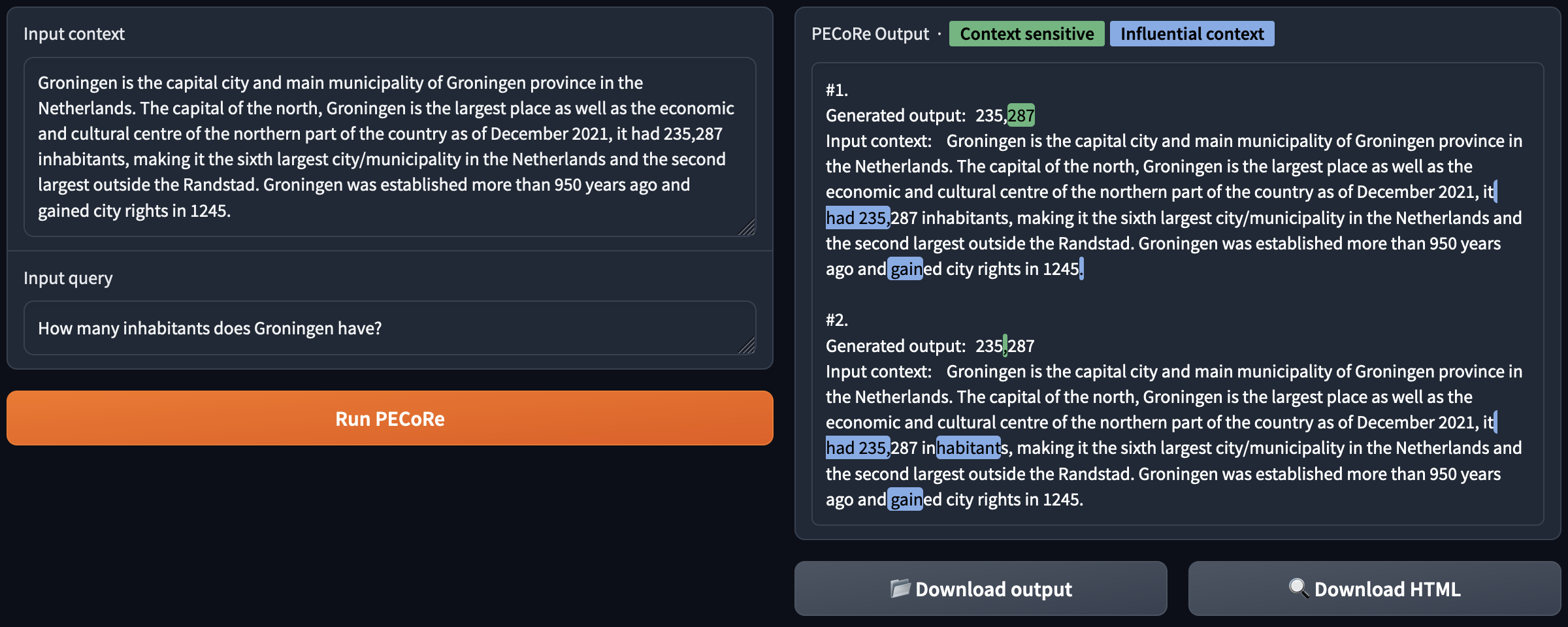

| 44 |

example_explanation = """

|

| 45 |

+

<p>Consider the following example, showing inputs and outputs of the <a href='https://huggingface.co/gsarti/cora_mgen' target='_blank'>CORA Multilingual QA</a> model provided as default in the interface, using default settings.</p>

|

| 46 |

+

<img src="file/img/pecore_ui_output_example.png" width=100% />

|

| 47 |

+

<p>The PECoRe CTI step identified two context-sensitive tokens in the generation (<code>287</code> and <code>,</code>), while the CCI step associated each of those with the most influential tokens in the context. It can be observed that in both cases similar tokens from the passage stating the number of inhabitants are identified as salient (<code>235</code> and <code>,</code> for the generated <code>287</code>, while <code>had</code> is also found salient for the generated <code>,</code>).</p>

|

| 48 |

+

<h2>Usage tips</h3>

|

| 49 |

+

<ol>

|

| 50 |

+

<li>The <code>📂 Download output</code> button allows you to download the full JSON output produced by the Inseq CLI. It includes, among other things, the full set of CTI and CCI scores produced by PECoRe, tokenized versions of the input context and generated output and the full arguments used for the CLI call.</li>

|

| 51 |

+

<li>The <code>🔍 Download HTML</code> button allows you to download an HTML view of the output similar to the one visualized in the demo.

|

| 52 |

+

<li>By default, all generated tokens <b>above the mean CTI score</b> for the generated text are highlighted as context-sensitive. This might be reasonable for short answers, but the threshold can be raised by increasing the <code>Context sensitivity threshold</code> parameter to ensure only very sensitive tokens are picked up in longer replies.</li>

|

| 53 |

+

<li>Relatedly, all context tokens receiving <b>CCI scores >2 standard deviations</b> above the context mean are highlighted as influential. This might be reasonable for contexts with at least 50-100 tokens, but the threshold can be lowered by decreasing the <code>Attribution threshold</code> parameter to be more lenient in the selection for shorter contexts.</li>

|

| 54 |

+

<li>When using a model, make sure that the <b>contextual and contextless templates are set to match the expected format</b>. You can use presets to auto-fill these for the provided models.</li>

|

| 55 |

+

<li>If you are using an encoder-decoder expecting an output context (e.g. the multilingual MT preset), the <b>output context should be provided manually</b> before running PECoRe in the <code>Generation context</code> parameter. This is a requirement for the demo because the splitting between output context and current cannot be reliably performed in an automatic way. However, the <code>inseq attribute-context</code> CLI command actually support various strategies, including prompting users for a split and/or trying an automatic source-target alignment. </li>

|

| 56 |

+

</ol>

|

| 57 |

+

<h2>Using PECoRe from Python with Inseq</h3>

|

| 58 |

+

<p>This demo is useful for testing out various models and methods for PECoRe attribution, but the <a href="https://inseq.org/en/latest/main_classes/cli.html#attribute-context"><code>inseq attribute-context</code></a> CLI command is the way to go if you want to run experiments on several examples, or if you want to exploit the full customizability of the Inseq API.</p>

|

| 59 |

+

<p>The utility we provide in this section allows you to generate Python and Shell code calling the Inseq CLI with the parameters you set in the interface. This is useful to understand how to use the Inseq API and quickly get up to speed with running PECoRe on your own models and data.</p>

|

| 60 |

+

<p>Once you are satisfied with the parameters you set (including context/query strings in the <code>🐑 Demo</code> tab), just press the button and get your code snippets ready for usage! 🤗</p>

|

| 61 |

"""

|

| 62 |

|

| 63 |

citation = r"""

|
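
The usage tips stress that the contextual and contextless templates must match the format the model expects. Assuming the `{context}` and `{current}` placeholders are filled like Python `str.format` fields (an assumption; Inseq's own filling logic may differ), the sketch below renders the two Mistral Instruct templates from `presets.py` and checks which placeholders each template contains, a quick way to catch a template that forgets `{current}`. `template_fields`, `check_template` and `render_inputs` are hypothetical helpers.

```python
from string import Formatter

# Templates copied from the Mistral Instruct preset in presets.py.
CONTEXTUAL_TEMPLATE = "[INST]{context}\n{current}[/INST]"
CONTEXTLESS_TEMPLATE = "[INST]{current}[/INST]"


def template_fields(template: str) -> set[str]:
    """Names of the {placeholders} appearing in a format-style template."""
    return {field for _, field, _, _ in Formatter().parse(template) if field}


def check_template(template: str, required: set[str]) -> None:
    """Raise if the template lacks any required placeholder."""
    missing = required - template_fields(template)
    if missing:
        raise ValueError(f"template is missing placeholders: {sorted(missing)}")


def render_inputs(context: str, current: str) -> tuple[str, str]:
    """Fill both templates, assuming str.format-style substitution."""
    check_template(CONTEXTUAL_TEMPLATE, {"context", "current"})
    check_template(CONTEXTLESS_TEMPLATE, {"current"})
    contextual = CONTEXTUAL_TEMPLATE.format(context=context, current=current)
    contextless = CONTEXTLESS_TEMPLATE.format(current=current)
    return contextual, contextless


contextual, contextless = render_inputs(
    "Paris is the capital of France.", "What is the capital of France?"
)
print(repr(contextual))
print(repr(contextless))
```

Comparing the two rendered strings side by side makes it easy to confirm that they differ only in the presence of the context, which is what the contextual/contextless contrast relies on.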

img/pecore_ui_output_example.png

CHANGED


presets.py

CHANGED

@@ -1,3 +1,5 @@
 SYSTEM_PROMPT = "You are a helpful assistant that provide concise and accurate answers."
 
 def set_cora_preset():

@@ -77,4 +79,97 @@ def set_mistral_instruct_preset():
         "[INST]{context}\n{current}[/INST]" # input_template
         "[INST]{current}[/INST]" # input_current_text_template
         "\n" # decoder_input_output_separator
-    )

@@ -1,3 +1,5 @@
+import json
+
 SYSTEM_PROMPT = "You are a helpful assistant that provide concise and accurate answers."
 
 def set_cora_preset():

@@ -77,4 +79,97 @@ def set_mistral_instruct_preset():
         "[INST]{context}\n{current}[/INST]" # input_template
         "[INST]{current}[/INST]" # input_current_text_template
         "\n" # decoder_input_output_separator
+    )
+
+def update_code_snippets_fn(
+    input_current_text: str,
+    input_context_text: str,
+    output_current_text: str,
+    output_context_text: str,
+    model_name_or_path: str,
+    attribution_method: str,
+    attributed_fn: str | None,
+    context_sensitivity_metric: str,
+    context_sensitivity_std_threshold: float,
+    context_sensitivity_topk: int,
+    attribution_std_threshold: float,
+    attribution_topk: int,
+    input_template: str,
+    output_template: str,
+    contextless_input_template: str,
+    contextless_output_template: str,
+    special_tokens_to_keep: str | list[str] | None,
+    decoder_input_output_separator: str,
+    model_kwargs: str,
+    tokenizer_kwargs: str,
+    generation_kwargs: str,
+    attribution_kwargs: str,
+) -> tuple[str, str]:
+    nl = "\n"
+    tq = "\"\"\""
+    def get_kwargs_str(kwargs: str, name: str, pad: str = " " * 4) -> str:
+        kwargs_dict = json.loads(kwargs)
+        return nl + pad + name + '=' + str(kwargs_dict) + ',' if kwargs_dict else ''  # extra "name={...}," arg line, or nothing if empty
+    # Python
+    python = f"""#!pip install inseq
+import inseq
+from inseq.commands.attribute_context.attribute_context import AttributeContextArgs, attribute_context_with_model
+
+inseq_model = inseq.load_model(
+    "{model_name_or_path}",
+    "{attribution_method}",{get_kwargs_str(model_kwargs, "model_kwargs")}{get_kwargs_str(tokenizer_kwargs, "tokenizer_kwargs")}
+)
+
+pecore_args = AttributeContextArgs(
+    save_path="pecore_output.json",
+    viz_path="pecore_output.html",
+    model_name_or_path="{model_name_or_path}",
+    attribution_method="{attribution_method}",
+    attributed_fn="{attributed_fn}",
+    context_sensitivity_metric="{context_sensitivity_metric}",
+    special_tokens_to_keep={special_tokens_to_keep},
+    context_sensitivity_std_threshold={context_sensitivity_std_threshold},
+    attribution_std_threshold={attribution_std_threshold},
+    input_current_text=\"\"\"{input_current_text}\"\"\",
+    input_template=\"\"\"{input_template}\"\"\",
+    output_template="{output_template}",
+    contextless_input_current_text=\"\"\"{contextless_input_template}\"\"\",
+    contextless_output_current_text=\"\"\"{contextless_output_template}\"\"\",
+    context_sensitivity_topk={context_sensitivity_topk if context_sensitivity_topk > 0 else None},
+    attribution_topk={attribution_topk if attribution_topk > 0 else None},
+    input_context_text={tq + input_context_text + tq if input_context_text else None},
+    output_context_text={tq + output_context_text + tq if output_context_text else None},
+    output_current_text={tq + output_current_text + tq if output_current_text else None},
+    decoder_input_output_separator={tq + decoder_input_output_separator + tq if decoder_input_output_separator else None},{get_kwargs_str(model_kwargs, "model_kwargs")}{get_kwargs_str(tokenizer_kwargs, "tokenizer_kwargs")}{get_kwargs_str(generation_kwargs, "generation_kwargs")}{get_kwargs_str(attribution_kwargs, "attribution_kwargs")}
+)
+out = attribute_context_with_model(pecore_args, inseq_model)"""
+    # Bash
+    bash = f"""pip install inseq
+inseq attribute-context \\
+    --save-path pecore_output.json \\
+    --viz-path pecore_output.html \\
+    --model-name-or-path "{model_name_or_path}" \\
+    --attribution-method "{attribution_method}" \\
+    --attributed-fn "{attributed_fn}" \\
+    --context-sensitivity-metric "{context_sensitivity_metric}" \\
+    --special-tokens-to-keep {" ".join(special_tokens_to_keep)} \\
+    --context-sensitivity-std-threshold {context_sensitivity_std_threshold} \\
+    --attribution-std-threshold {attribution_std_threshold} \\
+    --input-current-text "{input_current_text}" \\
+    --input-template "{input_template}" \\
+    --output-template "{output_template}" \\
+    --contextless-input-current-text "{contextless_input_template}" \\
+    --contextless-output-current-text "{contextless_output_template}" \\
+    --context-sensitivity-topk {context_sensitivity_topk if context_sensitivity_topk > 0 else None} \\
+    --attribution-topk {attribution_topk if attribution_topk > 0 else None} \\
+    --input-context-text "{input_context_text}" \\
+    --output-context-text "{output_context_text}" \\
+    --output-current-text "{output_current_text}" \\
+    --decoder-input-output-separator "{decoder_input_output_separator}" \\
+    --model-kwargs '{str(model_kwargs).replace(nl, "")}' \\
+    --tokenizer-kwargs '{str(tokenizer_kwargs).replace(nl, "")}' \\
+    --generation-kwargs '{str(generation_kwargs).replace(nl, "")}' \\
+    --attribution-kwargs '{str(attribution_kwargs).replace(nl, "")}'
+"""
+    return python, bash
+
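
`update_code_snippets_fn` builds both snippets by plain f-string interpolation, so user text containing quotes can produce a snippet that does not parse. A minimal alternative, not what this commit does: render Python literals with `repr()`, render shell arguments with `shlex.quote`, and skip flags whose value is `None` instead of printing a literal `None`. The helper names and the `torch_dtype` example value below are hypothetical; the flag names are copied from the shell snippet in `presets.py`.

```python
import json
import shlex
from typing import Optional

# Hypothetical helpers: a sketch of quote-safe snippet rendering, not the demo's code.


def render_python_str(value: Optional[str]) -> str:
    """Render optional text as a Python literal; empty or None becomes None."""
    return repr(value) if value else "None"


def render_python_kwarg(name: str, kwargs_json: str, pad: str = " " * 4) -> str:
    """Render a JSON kwargs string as an extra 'name={...},' argument line, or '' if empty."""
    kwargs = json.loads(kwargs_json) if kwargs_json.strip() else {}
    return f"\n{pad}{name}={kwargs!r}," if kwargs else ""


def render_shell_command(flags: dict) -> str:
    """Render `inseq attribute-context` flags one per line, quoting every value."""
    parts = ["inseq attribute-context"]
    for flag, value in flags.items():
        if value is None or value == []:
            continue  # omit unset flags instead of emitting a literal "None"
        values = value if isinstance(value, list) else [value]
        parts.append(f"--{flag} " + " ".join(shlex.quote(str(v)) for v in values))
    return " \\\n    ".join(parts)


cmd = render_shell_command({
    "model-name-or-path": "gsarti/cora_mgen",
    "input-current-text": 'How many people live in "Tokyo"?',
    "special-tokens-to-keep": ["<s>"],
    "model-kwargs": json.dumps({"torch_dtype": "float16"}),
    "context-sensitivity-topk": None,
})
print(cmd)
print(render_python_str('He said "hi"'))
```

`shlex.quote` wraps any value containing spaces or shell metacharacters in single quotes, so embedded double quotes and JSON braces pass through unchanged, and `repr()` picks a quoting style that keeps the Python literal valid.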

style.py

CHANGED

@@ -38,4 +38,8 @@ custom_css = """
 .footer-custom-block a {
     margin-right: 15px;
 }
 """

@@ -38,4 +38,8 @@ custom_css = """
 .footer-custom-block a {
     margin-right: 15px;
 }
+
+ol {
+    padding-left: 30px;
+}
 """