
ydshieh committed 9a6a97f (parent: 943681e): upload more samples

Files changed:

- app.py +6 -16

- model.py +8 -5

- samples/COCO_val2014_000000581632.jpg +0 -0

- samples/COCO_val2014_000000581654.jpg +0 -0

- samples/COCO_val2014_000000581655.jpg +0 -0

- samples/COCO_val2014_000000581683.jpg +0 -0

- samples/COCO_val2014_000000581702.jpg +0 -0

- samples/COCO_val2014_000000581717.jpg +0 -0

- samples/COCO_val2014_000000581726.jpg +0 -0

- samples/COCO_val2014_000000581731.jpg +0 -0

- samples/COCO_val2014_000000581736.jpg +0 -0

- samples/COCO_val2014_000000581749.jpg +0 -0

- samples/COCO_val2014_000000581781.jpg +0 -0

- samples/COCO_val2014_000000581827.jpg +0 -0

- samples/COCO_val2014_000000581829.jpg +0 -0

- samples/COCO_val2014_000000581831.jpg +0 -0

- samples/COCO_val2014_000000581863.jpg +0 -0

- samples/COCO_val2014_000000581886.jpg +0 -0

- samples/COCO_val2014_000000581887.jpg +0 -0

- samples/COCO_val2014_000000581899.jpg +0 -0

- samples/COCO_val2014_000000581913.jpg +0 -0

- samples/COCO_val2014_000000581929.jpg +0 -0

app.py

CHANGED

```diff
@@ -21,34 +21,24 @@ st.sidebar.title("Select a sample image")
 
 sample_name = st.sidebar.selectbox(
     "Please Choose the Model",
-
-        "sample 1",
-        "sample 2",
-        "sample 3",
-        "sample 4"
-    )
+    sample_fns
 )
 
-sample_name = f'
-sample_path =
+sample_name = f'COCO_val2014_{sample_name.replace('.jpg', '').zfill(12)}.jpg'
+sample_path = os.path.join(sample_dir, sample_name)
 
 image = Image.open(sample_path)
 show = st.image(image, use_column_width=True)
-show.image(image, '
-
+show.image(image, 'Selected Image', use_column_width=True)
 
 # For newline
 st.sidebar.write('\n')
 
-# if st.sidebar.button("Click here to get image caption"):
-
 with st.spinner('Generating image caption ...'):
 
-    caption
-
+    caption = predict_dummy(image)
+    image.close()
 st.success(f'caption: {caption}')
-st.success(f'tokens: {tokens}')
-st.success(f'token ids: {token_ids}')
 
 st.sidebar.header("ViT-GPT2 predicts:")
 st.sidebar.write(f"caption: {caption}", '\n')
```
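The new `sample_name` line nests single quotes (`'.jpg'`, `''`) inside a single-quoted f-string. Reusing the enclosing quote type inside the braces is only allowed from Python 3.12 onward (PEP 701); older interpreters reject the line with a `SyntaxError`. A minimal sketch of the same mapping that parses on any Python 3 version computes the id outside the f-string. The helper names `display_to_filename` and `filename_to_display` are hypothetical, not part of the commit; `filename_to_display` mirrors the `sample_fns` comprehension in model.py.

```python
def display_to_filename(display_name: str) -> str:
    """Map a selectbox entry such as '581632.jpg' to 'COCO_val2014_000000581632.jpg'."""
    # Strip the extension outside the f-string, so no quotes are nested inside it.
    image_id = display_name.replace(".jpg", "")
    # COCO val2014 file names zero-pad the image id to 12 digits.
    return f"COCO_val2014_{image_id.zfill(12)}.jpg"


def filename_to_display(filename: str) -> str:
    """Inverse mapping, mirroring the sample_fns comprehension in model.py."""
    image_id = int(filename.replace("COCO_val2014_", "").replace(".jpg", ""))
    return f"{image_id}.jpg"


# Round trip for two of the uploaded samples.
print(display_to_filename("581632.jpg"))  # COCO_val2014_000000581632.jpg
print(filename_to_display("COCO_val2014_000000581929.jpg"))  # 581929.jpg
```

With such a helper, the app.py line could read `sample_name = display_to_filename(sample_name)`.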

model.py

CHANGED

```diff
@@ -53,18 +53,21 @@ def predict(image):
     token_ids = np.array(generation.sequences)[0]
     caption = tokenizer.decode(token_ids)
 
-    return caption
+    return caption
 
-def
+def compile():
 
     image_path = 'samples/val_000000039769.jpg'
     image = Image.open(image_path)
 
-    caption
+    caption = predict(image)
     image.close()
 
 def predict_dummy(image):
 
-    return 'dummy caption!'
+    return 'dummy caption!'
 
-
+compile()
+
+sample_dir = './samples/'
+sample_fns = tuple([f"{int(f.replace('COCO_val2014_', '').replace('.jpg', ''))}.jpg" for f in os.listdir(sample_dir) if f.startswith('COCO_val2014_')])
```
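`sample_fns` is built directly from `os.listdir(sample_dir)`, and Python documents that `os.listdir` returns entries in arbitrary order, so the order of the sidebar selectbox is not guaranteed. A minimal sketch of the same listing with an explicit numeric sort follows. `PREFIX` and `sample_names_from` are hypothetical names; the function takes the file names as an argument so it can be exercised without touching the file system.

```python
import os
from typing import Iterable, Tuple

PREFIX = "COCO_val2014_"  # file-name prefix used by the uploaded samples


def sample_names_from(filenames: Iterable[str]) -> Tuple[str, ...]:
    """Turn COCO sample file names into display names sorted by image id."""
    ids = [
        int(f[len(PREFIX):].replace(".jpg", ""))
        for f in filenames
        if f.startswith(PREFIX) and f.endswith(".jpg")
    ]
    # os.listdir() makes no ordering guarantee, so sort explicitly.
    return tuple(f"{image_id}.jpg" for image_id in sorted(ids))


if __name__ == "__main__":
    sample_dir = "./samples/"
    if os.path.isdir(sample_dir):
        print(sample_names_from(os.listdir(sample_dir)))
```

In model.py this would replace the comprehension with `sample_fns = sample_names_from(os.listdir(sample_dir))`; files without the prefix, such as `val_000000039769.jpg`, are still skipped.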

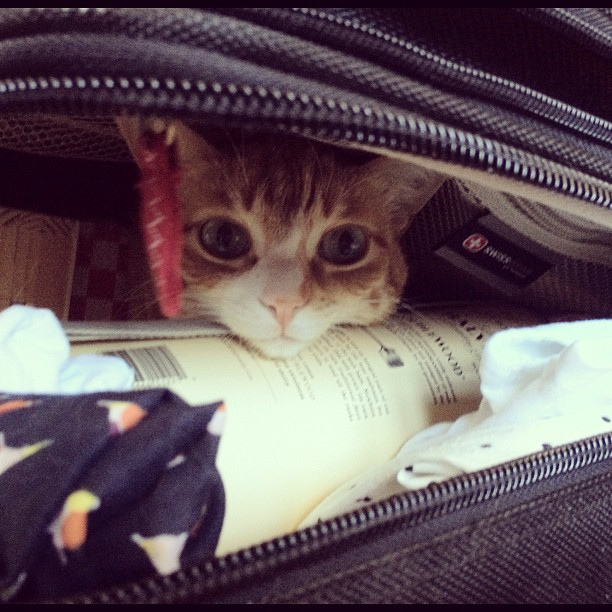

ADDED (binary images):

- samples/COCO_val2014_000000581632.jpg
- samples/COCO_val2014_000000581654.jpg
- samples/COCO_val2014_000000581655.jpg
- samples/COCO_val2014_000000581683.jpg
- samples/COCO_val2014_000000581702.jpg
- samples/COCO_val2014_000000581717.jpg
- samples/COCO_val2014_000000581726.jpg
- samples/COCO_val2014_000000581731.jpg
- samples/COCO_val2014_000000581736.jpg
- samples/COCO_val2014_000000581749.jpg
- samples/COCO_val2014_000000581781.jpg
- samples/COCO_val2014_000000581827.jpg
- samples/COCO_val2014_000000581829.jpg
- samples/COCO_val2014_000000581831.jpg
- samples/COCO_val2014_000000581863.jpg
- samples/COCO_val2014_000000581886.jpg
- samples/COCO_val2014_000000581887.jpg
- samples/COCO_val2014_000000581899.jpg
- samples/COCO_val2014_000000581913.jpg
- samples/COCO_val2014_000000581929.jpg