Spaces:

Sleeping

Sleeping

Added files

Browse files- README.md +33 -13

- app.py +228 -0

- data_processor.py +119 -0

- images/image-1.png +0 -0

- images/image-2.png +0 -0

- images/image-3.png +0 -0

- images/image.png +0 -0

- requirements.txt +6 -0

README.md

CHANGED

|

@@ -1,13 +1,33 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

| 4 |

-

|

| 5 |

-

|

| 6 |

-

|

| 7 |

-

|

| 8 |

-

|

| 9 |

-

|

| 10 |

-

|

| 11 |

-

|

| 12 |

-

|

| 13 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Building LLM Powered GitHub Assitant

|

| 2 |

+

|

| 3 |

+

This repository contains the code accompanying my Medium article, Building LLM Powered GitHub Assitant. Follow the steps below to set up and run the server for your needs.

|

| 4 |

+

|

| 5 |

+

## Setup Instructions

|

| 6 |

+

|

| 7 |

+

1. **Environment Variables**

|

| 8 |

+

Create a `.env` file in the root directory of the project and add your API key:

|

| 9 |

+

```

|

| 10 |

+

GEMINI_API_KEY=your_api_key_here

|

| 11 |

+

```

|

| 12 |

+

|

| 13 |

+

2. **Install Requirements**

|

| 14 |

+

Install the required packages using the `requirements.txt` file:

|

| 15 |

+

```

|

| 16 |

+

pip install -r requirements.txt

|

| 17 |

+

```

|

| 18 |

+

|

| 19 |

+

3. **Run the Streamlit Server**

|

| 20 |

+

Start the Streamlit server by running:

|

| 21 |

+

```

|

| 22 |

+

streamlit run app.py

|

| 23 |

+

```

|

| 24 |

+

|

| 25 |

+

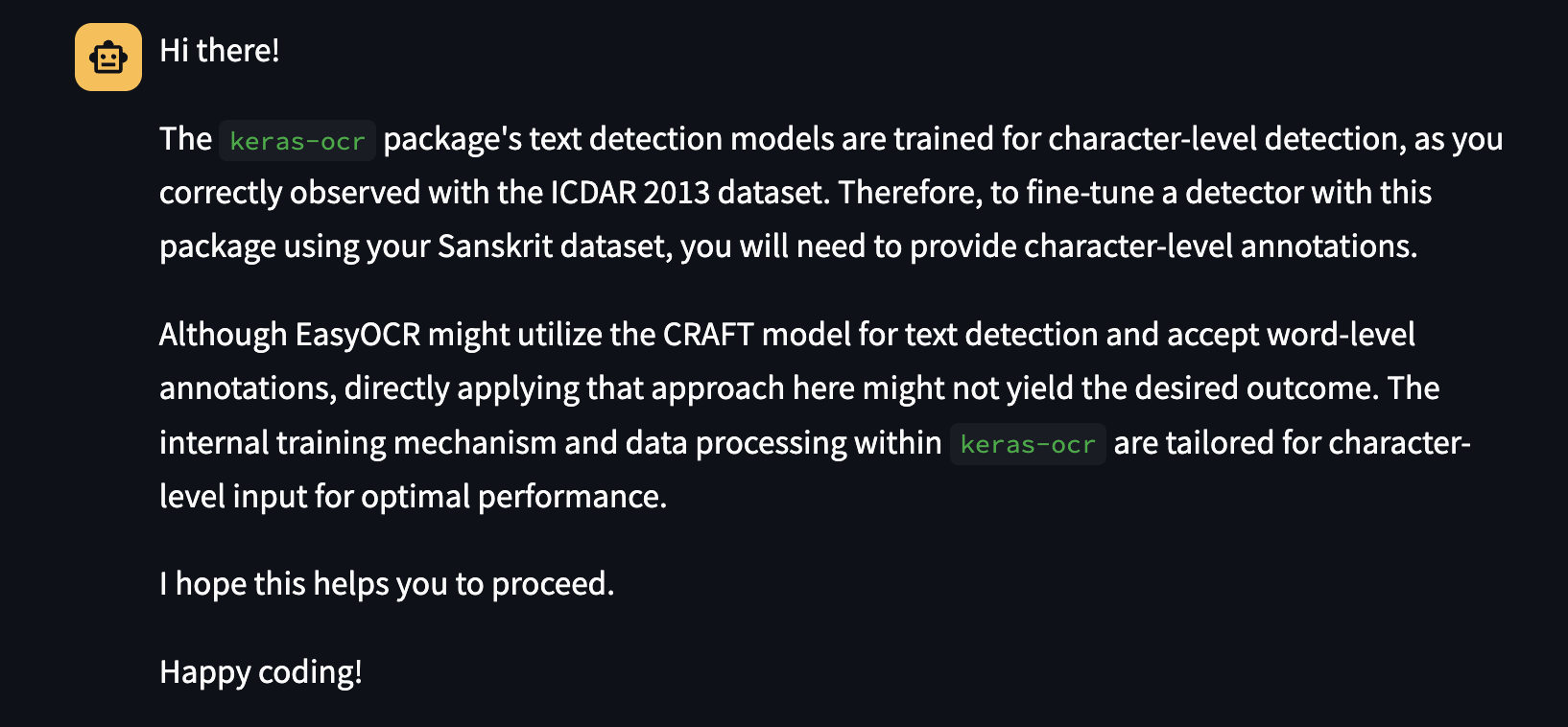

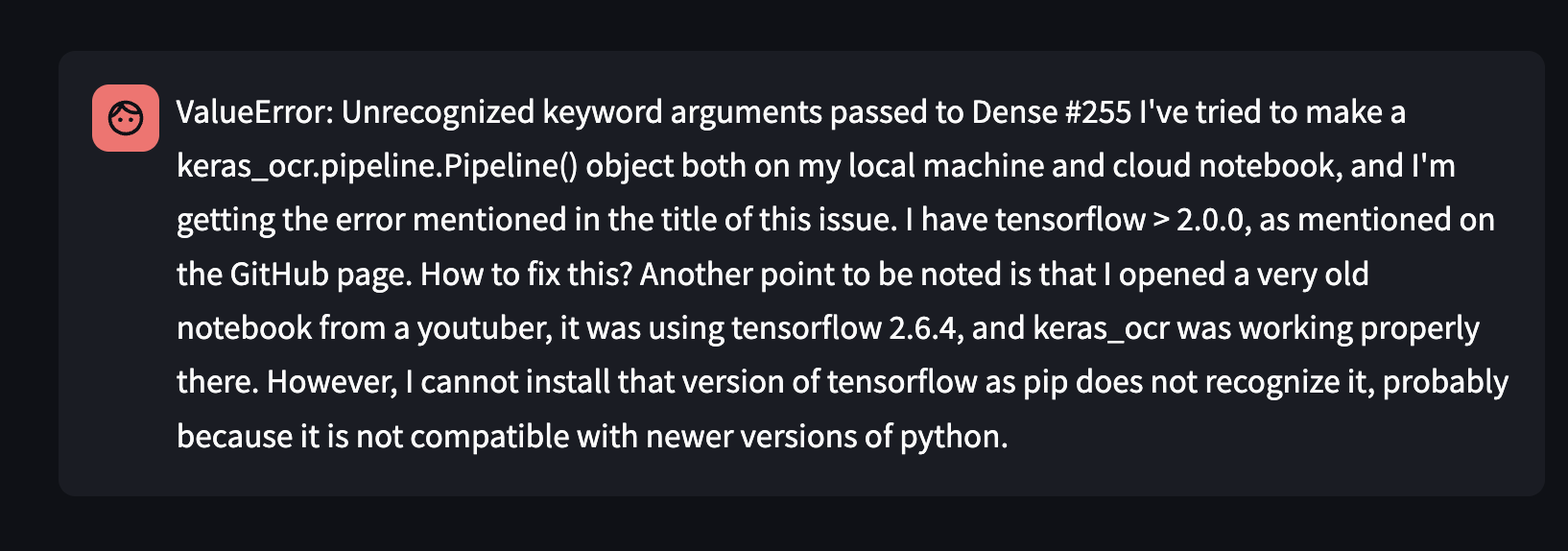

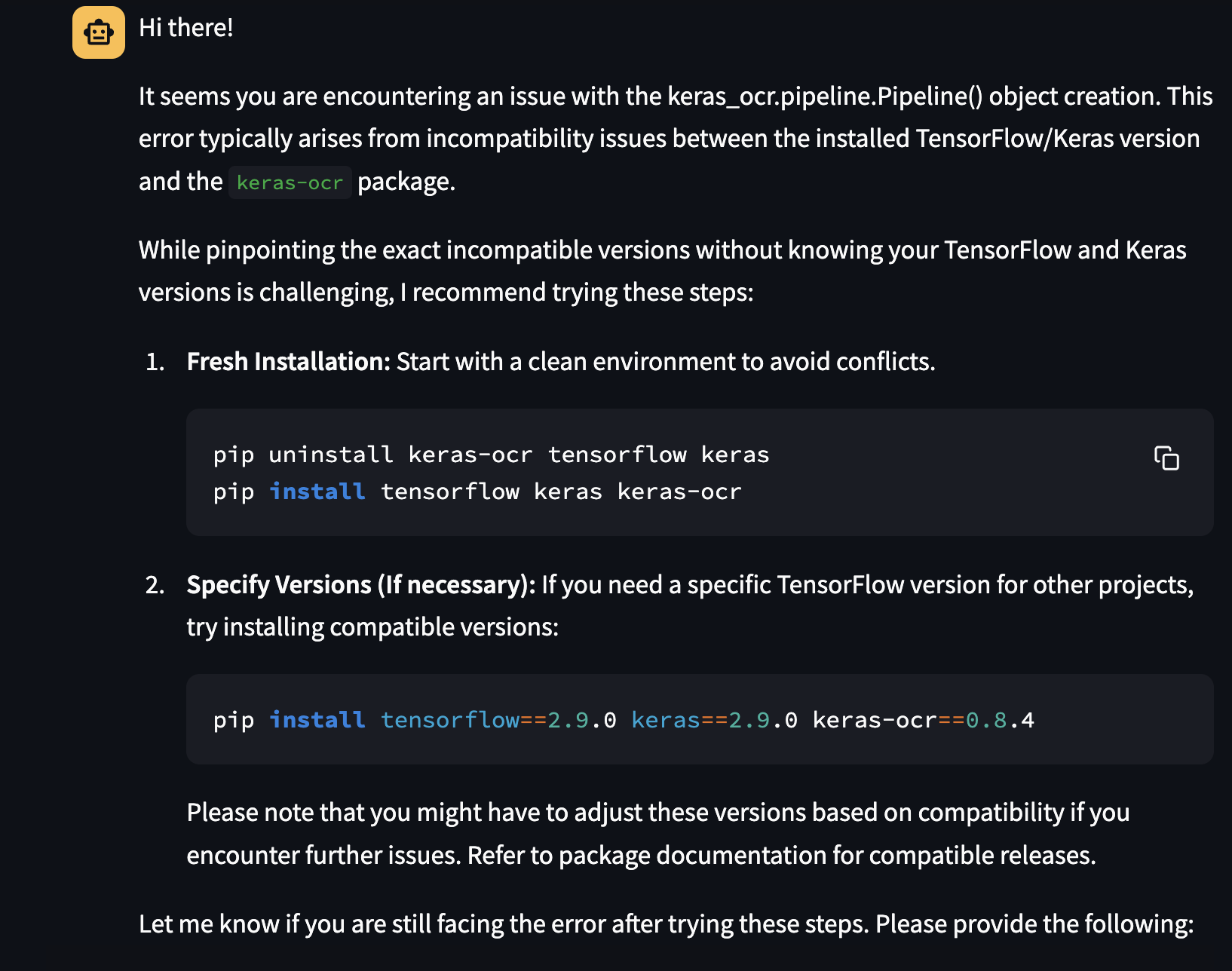

4. **Example (with [keras-ocr](https://github.com/faustomorales/keras-ocr))**

|

| 26 |

+

|

| 27 |

+

|

| 28 |

+

|

| 29 |

+

|

| 30 |

+

|

| 31 |

+

If you encounter any issues or have questions, feel free to open an issue in this repository.

|

| 32 |

+

|

| 33 |

+

Happy coding!

|

app.py

ADDED

|

@@ -0,0 +1,228 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import redis

|

| 2 |

+

import os

|

| 3 |

+

from dotenv import load_dotenv

|

| 4 |

+

import google.generativeai as genai

|

| 5 |

+

from typing import List

|

| 6 |

+

import numpy as np

|

| 7 |

+

from redis.commands.search.query import Query

|

| 8 |

+

from haystack import Pipeline, component

|

| 9 |

+

from haystack.utils import Secret

|

| 10 |

+

from haystack_integrations.components.generators.google_ai import GoogleAIGeminiChatGenerator, GoogleAIGeminiGenerator

|

| 11 |

+

from haystack.components.builders import PromptBuilder

|

| 12 |

+

import streamlit as st

|

| 13 |

+

from data_processor import fetch_data,ingest_data

|

| 14 |

+

|

| 15 |

+

load_dotenv()

|

| 16 |

+

|

| 17 |

+

|

| 18 |

+

genai.configure(api_key=os.environ["GEMINI_API_KEY"])

|

| 19 |

+

|

| 20 |

+

generation_config = {

|

| 21 |

+

"temperature": 1,

|

| 22 |

+

"top_p": 0.95,

|

| 23 |

+

"top_k": 64,

|

| 24 |

+

"max_output_tokens": 8192,

|

| 25 |

+

"response_mime_type": "text/plain",

|

| 26 |

+

}

|

| 27 |

+

|

| 28 |

+

model = genai.GenerativeModel(

|

| 29 |

+

model_name="gemini-1.5-flash",

|

| 30 |

+

generation_config=generation_config,

|

| 31 |

+

system_instruction="You are optimized to generate accurate descriptions for given Python codes. When the user inputs the code, you must return the description according to its goal and functionality. You are not allowed to generate additional details. The user expects at least 5 sentence-long descriptions.",

|

| 32 |

+

)

|

| 33 |

+

|

| 34 |

+

gemini = GoogleAIGeminiGenerator(api_key=Secret.from_env_var("GEMINI_API_KEY"), model='gemini-1.5-flash')

|

| 35 |

+

|

| 36 |

+

def get_embeddings(content: List):

|

| 37 |

+

return genai.embed_content(model='models/text-embedding-004',content=content)['embedding']

|

| 38 |

+

|

| 39 |

+

|

| 40 |

+

def draft_prompt(query: str, chat_history: str) -> str:

|

| 41 |

+

"""

|

| 42 |

+

Perform a vector similarity search and retrieve related functions.

|

| 43 |

+

|

| 44 |

+

Args:

|

| 45 |

+

query (str): The input query to encode.

|

| 46 |

+

|

| 47 |

+

Returns:

|

| 48 |

+

str: A formatted string containing details of related functions.

|

| 49 |

+

"""

|

| 50 |

+

INDEX_NAME = "idx:codes_vss"

|

| 51 |

+

client = st.session_state.client

|

| 52 |

+

vector_search_query = (

|

| 53 |

+

Query('(*)=>[KNN 2 @vector $query_vector AS vector_score]')

|

| 54 |

+

.sort_by('vector_score')

|

| 55 |

+

.return_fields('vector_score', 'id', 'name', 'definition', 'file_name', 'type', 'uses')

|

| 56 |

+

.dialect(2)

|

| 57 |

+

)

|

| 58 |

+

|

| 59 |

+

encoded_query = get_embeddings(query)

|

| 60 |

+

vector_params = {

|

| 61 |

+

"query_vector": np.array(encoded_query, dtype=np.float32).tobytes()

|

| 62 |

+

}

|

| 63 |

+

|

| 64 |

+

result_docs = client.ft(INDEX_NAME).search(vector_search_query, vector_params).docs

|

| 65 |

+

|

| 66 |

+

related_items: List[str] = []

|

| 67 |

+

dependencies: List[str] = []

|

| 68 |

+

for doc in result_docs:

|

| 69 |

+

related_items.append(doc.name)

|

| 70 |

+

if doc.uses:

|

| 71 |

+

dependencies.extend(use for use in doc.uses.split(", ") if use)

|

| 72 |

+

|

| 73 |

+

dependencies = list(set(dependencies) - set(related_items))

|

| 74 |

+

|

| 75 |

+

def get_query(item_list):

|

| 76 |

+

return Query(f"@name:({' | '.join(item_list)})").return_fields(

|

| 77 |

+

'id', 'name', 'definition', 'file_name', 'type'

|

| 78 |

+

)

|

| 79 |

+

|

| 80 |

+

related_docs = client.ft(INDEX_NAME).search(get_query(related_items)).docs

|

| 81 |

+

dependency_docs = client.ft(INDEX_NAME).search(get_query(dependencies)).docs

|

| 82 |

+

|

| 83 |

+

def format_doc(doc):

|

| 84 |

+

return (

|

| 85 |

+

f"{'*' * 28} CODE SNIPPET {doc.id} {'*' * 28}\n"

|

| 86 |

+

f"* Name: {doc.name}\n"

|

| 87 |

+

f"* File: {doc.file_name}\n"

|

| 88 |

+

f"* {doc.type.capitalize()} definition:\n"

|

| 89 |

+

f"```python\n{doc.definition}\n```\n"

|

| 90 |

+

)

|

| 91 |

+

|

| 92 |

+

formatted_results_main = [format_doc(doc) for doc in related_docs]

|

| 93 |

+

formatted_results_support = [format_doc(doc) for doc in dependency_docs]

|

| 94 |

+

|

| 95 |

+

return (

|

| 96 |

+

f"User Question: {query}\n\n"

|

| 97 |

+

f"Current Chat History: \n{chat_history}\n\n"

|

| 98 |

+

f"USE BELOW CODES TO ANSWER USER QUESTIONS.\n"

|

| 99 |

+

f"{chr(10).join(formatted_results_main)}\n\n"

|

| 100 |

+

f"SOME SUPPORTING FUNCTIONS AND CLASS YOU MAY WANT.\n"

|

| 101 |

+

f"{chr(10).join(formatted_results_support)}"

|

| 102 |

+

)

|

| 103 |

+

|

| 104 |

+

@component

|

| 105 |

+

class RedisRetreiver:

|

| 106 |

+

@component.output_types(context=str)

|

| 107 |

+

def run(self, query:str, chat_history:str):

|

| 108 |

+

return {"context": draft_prompt(query, chat_history)}

|

| 109 |

+

|

| 110 |

+

llm = GoogleAIGeminiGenerator(api_key=Secret.from_env_var("GEMINI_API_KEY"), model='gemini-1.5-pro')

|

| 111 |

+

# llm = OpenAIGenerator()

|

| 112 |

+

|

| 113 |

+

template = """

|

| 114 |

+

You are a helpful agent optimized to resolve GitHub issues for your organization's libraries. Users will ask questions when they encounter problems with the code repository.

|

| 115 |

+

You have access to all the necessary code for addressing these issues.

|

| 116 |

+

First, you should understand the user's question and identify the relevant code blocks.

|

| 117 |

+

Then, craft a precise and targeted response that allows the user to find an exact solution to their problem.

|

| 118 |

+

You must provide code snippets rather than just opinions.

|

| 119 |

+

You should always assume user has installed this python package in their system and raised question raised while they are using the library.

|

| 120 |

+

|

| 121 |

+

In addition to the above tasks, you are free to:

|

| 122 |

+

* Greet the user.

|

| 123 |

+

* [ONLY IF THE QUESTION IS INSUFFICIENT] Request additional clarity.

|

| 124 |

+

* Politely decline irrelevant queries.

|

| 125 |

+

* Inform the user if their query cannot be processed or accomplished.

|

| 126 |

+

|

| 127 |

+

By any chance you should NOT,

|

| 128 |

+

* Ask or recommend user to use different library. Or code snipits related to other similar libraies.

|

| 129 |

+

* Provide inaccurate explnations.

|

| 130 |

+

* Provide sugestions without code examples.

|

| 131 |

+

|

| 132 |

+

{{context}}

|

| 133 |

+

"""

|

| 134 |

+

|

| 135 |

+

prompt_builder = PromptBuilder(template=template)

|

| 136 |

+

|

| 137 |

+

pipeline = Pipeline()

|

| 138 |

+

pipeline.add_component(name="retriever", instance=RedisRetreiver())

|

| 139 |

+

pipeline.add_component("prompt_builder", prompt_builder)

|

| 140 |

+

pipeline.add_component("llm", llm)

|

| 141 |

+

pipeline.connect("retriever.context", "prompt_builder")

|

| 142 |

+

pipeline.connect("prompt_builder", "llm")

|

| 143 |

+

|

| 144 |

+

# Initialize Streamlit app

|

| 145 |

+

st.title("Code Assistant Chat")

|

| 146 |

+

|

| 147 |

+

tabs = ["Data Fetching","Assistant"]

|

| 148 |

+

selected_tab = st.sidebar.radio("Select a Tab", tabs)

|

| 149 |

+

if selected_tab == 'Data Fetching':

|

| 150 |

+

if 'redis_connected' not in st.session_state:

|

| 151 |

+

st.session_state.redis_connected = False

|

| 152 |

+

|

| 153 |

+

if not st.session_state.redis_connected:

|

| 154 |

+

st.header("Redis Connection Settings")

|

| 155 |

+

|

| 156 |

+

redis_host = st.text_input("Redis Host")

|

| 157 |

+

redis_port = st.number_input("Redis Port", min_value=1, max_value=65535, value=6379)

|

| 158 |

+

redis_password = st.text_input("Redis Password", type="password")

|

| 159 |

+

|

| 160 |

+

if st.button("Connect to Redis"):

|

| 161 |

+

try:

|

| 162 |

+

client = redis.Redis(

|

| 163 |

+

host=redis_host,

|

| 164 |

+

port=redis_port,

|

| 165 |

+

password=redis_password

|

| 166 |

+

)

|

| 167 |

+

|

| 168 |

+

if client.ping():

|

| 169 |

+

st.success("Successfully connected to Redis!")

|

| 170 |

+

st.session_state.redis_connected = True

|

| 171 |

+

st.session_state.client = client

|

| 172 |

+

else:

|

| 173 |

+

st.error("Failed to connect to Redis. Please check your settings.")

|

| 174 |

+

except redis.ConnectionError:

|

| 175 |

+

st.error("Failed to connect to Redis. Please check your settings and try again.")

|

| 176 |

+

|

| 177 |

+

if st.session_state.redis_connected:

|

| 178 |

+

url = st.text_input("Enter git clone URL")

|

| 179 |

+

if url:

|

| 180 |

+

with st.spinner("Fetching data..."):

|

| 181 |

+

data = fetch_data(url)

|

| 182 |

+

|

| 183 |

+

with st.spinner("Ingesting data..."):

|

| 184 |

+

response_string = ingest_data(st.session_state.client, data)

|

| 185 |

+

|

| 186 |

+

st.write(response_string)

|

| 187 |

+

if selected_tab == 'Assistant':

|

| 188 |

+

# Initialize session state for chat history

|

| 189 |

+

client = redis.Redis(

|

| 190 |

+

host=os.environ['REDIS_HOST'],

|

| 191 |

+

port=12305,

|

| 192 |

+

password=os.environ['REDIS_PASSWORD']

|

| 193 |

+

)

|

| 194 |

+

if "messages" not in st.session_state:

|

| 195 |

+

st.session_state.messages = []

|

| 196 |

+

|

| 197 |

+

# Display chat messages

|

| 198 |

+

for message in st.session_state.messages:

|

| 199 |

+

with st.chat_message(message["role"]):

|

| 200 |

+

st.markdown(message["content"])

|

| 201 |

+

|

| 202 |

+

st.session_state.response = None

|

| 203 |

+

|

| 204 |

+

if prompt := st.chat_input("What's your question?"):

|

| 205 |

+

st.session_state.messages.append({"role": "user", "content": prompt})

|

| 206 |

+

with st.chat_message("user"):

|

| 207 |

+

st.markdown(prompt)

|

| 208 |

+

|

| 209 |

+

with st.chat_message("assistant"):

|

| 210 |

+

response_placeholder = st.empty()

|

| 211 |

+

response_placeholder.markdown("Thinking...")

|

| 212 |

+

|

| 213 |

+

try:

|

| 214 |

+

response = pipeline.run({"retriever": {"query": prompt, "chat_history": st.session_state.messages}}, include_outputs_from=['prompt_builder'])

|

| 215 |

+

st.session_state.response = response

|

| 216 |

+

llm_response = response["llm"]["replies"][0]

|

| 217 |

+

|

| 218 |

+

response_placeholder.markdown(llm_response)

|

| 219 |

+

st.session_state.messages.append({"role": "assistant", "content": llm_response})

|

| 220 |

+

except Exception as e:

|

| 221 |

+

response_placeholder.markdown(f"An error occurred: {str(e)}")

|

| 222 |

+

|

| 223 |

+

if st.button("Clear Chat History"):

|

| 224 |

+

st.session_state.messages = []

|

| 225 |

+

st.experimental_rerun()

|

| 226 |

+

|

| 227 |

+

with st.expander("See Chat History"):

|

| 228 |

+

st.markdown(st.session_state.response)

|

data_processor.py

ADDED

|

@@ -0,0 +1,119 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import os

|

| 2 |

+

from typing import List

|

| 3 |

+

import numpy as np

|

| 4 |

+

import redis

|

| 5 |

+

import google.generativeai as genai

|

| 6 |

+

from dotenv import load_dotenv

|

| 7 |

+

from tqdm import tqdm

|

| 8 |

+

import time

|

| 9 |

+

|

| 10 |

+

from redis.commands.search.field import (

|

| 11 |

+

TagField,

|

| 12 |

+

TextField,

|

| 13 |

+

VectorField,

|

| 14 |

+

)

|

| 15 |

+

from redis.commands.search.indexDefinition import IndexDefinition, IndexType

|

| 16 |

+

from redis.commands.search.query import Query

|

| 17 |

+

from sourcegraph import Sourcegraph

|

| 18 |

+

|

| 19 |

+

load_dotenv()

|

| 20 |

+

|

| 21 |

+

INDEX_NAME = "idx:codes_vss"

|

| 22 |

+

|

| 23 |

+

genai.configure(api_key=os.environ["GEMINI_API_KEY"])

|

| 24 |

+

|

| 25 |

+

generation_config = {

|

| 26 |

+

"temperature": 1,

|

| 27 |

+

"top_p": 0.95,

|

| 28 |

+

"top_k": 64,

|

| 29 |

+

"max_output_tokens": 8192,

|

| 30 |

+

"response_mime_type": "text/plain",

|

| 31 |

+

}

|

| 32 |

+

|

| 33 |

+

model = genai.GenerativeModel(

|

| 34 |

+

model_name="gemini-1.5-flash",

|

| 35 |

+

generation_config=generation_config,

|

| 36 |

+

system_instruction="You are optimized to generate accurate descriptions for given Python codes. When the user inputs the code, you must return the description according to its goal and functionality. You are not allowed to generate additional details. The user expects at least 5 sentence-long descriptions.",

|

| 37 |

+

)

|

| 38 |

+

|

| 39 |

+

def fetch_data(url):

|

| 40 |

+

def get_description(code):

|

| 41 |

+

chat_session = model.start_chat(

|

| 42 |

+

history=[

|

| 43 |

+

{

|

| 44 |

+

"role": "user",

|

| 45 |

+

"parts": [

|

| 46 |

+

f"Code: {code}",

|

| 47 |

+

],

|

| 48 |

+

},

|

| 49 |

+

]

|

| 50 |

+

)

|

| 51 |

+

response = chat_session.send_message("INSERT_INPUT_HERE")

|

| 52 |

+

|

| 53 |

+

return response.text

|

| 54 |

+

gihub_repository = Sourcegraph(url)

|

| 55 |

+

gihub_repository.run()

|

| 56 |

+

data = dict(gihub_repository.node_data)

|

| 57 |

+

for key, value in tqdm(data.items()):

|

| 58 |

+

data[key]['description'] = get_description(value['definition'])

|

| 59 |

+

data[key]['uses'] = ", ".join(list(gihub_repository.get_dependencies(key)))

|

| 60 |

+

time.sleep(3) #to overcome limit issues

|

| 61 |

+

return data

|

| 62 |

+

|

| 63 |

+

def get_embeddings(content: List):

|

| 64 |

+

return genai.embed_content(model='models/text-embedding-004',content=content)['embedding']

|

| 65 |

+

|

| 66 |

+

def ingest_data(client: redis.Redis, data):

|

| 67 |

+

try:

|

| 68 |

+

client.delete(client.keys("code:*"))

|

| 69 |

+

except:

|

| 70 |

+

pass

|

| 71 |

+

pipeline = client.pipeline()

|

| 72 |

+

for i, code_metadata in enumerate(data.values(), start=1):

|

| 73 |

+

redis_key = f"code:{i:03}"

|

| 74 |

+

pipeline.json().set(redis_key, "$", code_metadata)

|

| 75 |

+

_ = pipeline.execute()

|

| 76 |

+

keys = sorted(client.keys("code:*"))

|

| 77 |

+

defs = client.json().mget(keys, "$.definition")

|

| 78 |

+

descs = client.json().mget(keys, "$.description")

|

| 79 |

+

embed_inputs = []

|

| 80 |

+

|

| 81 |

+

for i in range(1, len(keys)+1):

|

| 82 |

+

embed_inputs.append(

|

| 83 |

+

f"""{defs[i-1][0]}\n\n{descs[i-1][0]}"""

|

| 84 |

+

)

|

| 85 |

+

embeddings = get_embeddings(embed_inputs)

|

| 86 |

+

VECTOR_DIMENSION = len(embeddings[0])

|

| 87 |

+

pipeline = client.pipeline()

|

| 88 |

+

for key, embedding in zip(keys, embeddings):

|

| 89 |

+

pipeline.json().set(key, "$.embeddings", embedding)

|

| 90 |

+

pipeline.execute()

|

| 91 |

+

|

| 92 |

+

schema = (

|

| 93 |

+

TextField("$.name", no_stem=True, as_name="name"),

|

| 94 |

+

TagField("$.type", as_name="type"),

|

| 95 |

+

TextField("$.definition", no_stem=True, as_name="definition"),

|

| 96 |

+

TextField("$.file_name", no_stem=True, as_name="file_name"),

|

| 97 |

+

TextField("$.description", no_stem=True, as_name="description"),

|

| 98 |

+

TextField("$.uses", no_stem=True, as_name="uses"),

|

| 99 |

+

VectorField(

|

| 100 |

+

"$.embeddings",

|

| 101 |

+

"HNSW",

|

| 102 |

+

{

|

| 103 |

+

"TYPE": "FLOAT32",

|

| 104 |

+

"DIM": VECTOR_DIMENSION,

|

| 105 |

+

"DISTANCE_METRIC": "COSINE",

|

| 106 |

+

},

|

| 107 |

+

as_name="vector",

|

| 108 |

+

),

|

| 109 |

+

)

|

| 110 |

+

definition = IndexDefinition(prefix=["code:"], index_type=IndexType.JSON)

|

| 111 |

+

try:

|

| 112 |

+

_ = client.ft(INDEX_NAME).create_index(fields=schema, definition=definition)

|

| 113 |

+

except redis.exceptions.ResponseError:

|

| 114 |

+

client.ft(INDEX_NAME).dropindex()

|

| 115 |

+

_ = client.ft(INDEX_NAME).create_index(fields=schema, definition=definition)

|

| 116 |

+

info = client.ft(INDEX_NAME).info()

|

| 117 |

+

num_docs = info["num_docs"]

|

| 118 |

+

indexing_failures = info["hash_indexing_failures"]

|

| 119 |

+

return f"{num_docs} documents indexed with {indexing_failures} failures"

|

images/image-1.png

ADDED

|

images/image-2.png

ADDED

|

images/image-3.png

ADDED

|

images/image.png

ADDED

|

requirements.txt

ADDED

|

@@ -0,0 +1,6 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

protobuf==4.25.4

|

| 2 |

+

redis==5.0.8

|

| 3 |

+

haystack-ai==2.3.0

|

| 4 |

+

google-ai-haystack==1.1.1

|

| 5 |

+

sourcegraph==0.0.6

|

| 6 |

+

google-generativeai==0.7.2

|