Upload folder using huggingface_hub

This view is limited to 50 files because it contains too many changes.

- .gitattributes +1 -0

- cal_data.safetensors +3 -0

- config.json +26 -0

- example1.png +0 -0

- example2.png +0 -0

- example3.png +0 -0

- generation_config.json +7 -0

- hidden_states.safetensors +3 -0

- job_new.json +0 -0

- measurement.json +0 -0

- model.safetensors.index.json +442 -0

- mtbench-comparison.png +0 -0

- needle-in-a-haystack.txt +898 -0

- out_tensor/lm_head.safetensors +3 -0

- out_tensor/model.layers.0.mlp.down_proj.safetensors +3 -0

- out_tensor/model.layers.0.mlp.gate_proj.safetensors +3 -0

- out_tensor/model.layers.0.mlp.up_proj.safetensors +3 -0

- out_tensor/model.layers.0.self_attn.k_proj.safetensors +3 -0

- out_tensor/model.layers.0.self_attn.o_proj.safetensors +3 -0

- out_tensor/model.layers.0.self_attn.q_proj.safetensors +3 -0

- out_tensor/model.layers.0.self_attn.v_proj.safetensors +3 -0

- out_tensor/model.layers.1.mlp.down_proj.safetensors +3 -0

- out_tensor/model.layers.1.mlp.gate_proj.safetensors +3 -0

- out_tensor/model.layers.1.mlp.up_proj.safetensors +3 -0

- out_tensor/model.layers.1.self_attn.k_proj.safetensors +3 -0

- out_tensor/model.layers.1.self_attn.o_proj.safetensors +3 -0

- out_tensor/model.layers.1.self_attn.q_proj.safetensors +3 -0

- out_tensor/model.layers.1.self_attn.v_proj.safetensors +3 -0

- out_tensor/model.layers.10.mlp.down_proj.safetensors +3 -0

- out_tensor/model.layers.10.mlp.gate_proj.safetensors +3 -0

- out_tensor/model.layers.10.mlp.up_proj.safetensors +3 -0

- out_tensor/model.layers.10.self_attn.k_proj.safetensors +3 -0

- out_tensor/model.layers.10.self_attn.o_proj.safetensors +3 -0

- out_tensor/model.layers.10.self_attn.q_proj.safetensors +3 -0

- out_tensor/model.layers.10.self_attn.v_proj.safetensors +3 -0

- out_tensor/model.layers.11.mlp.down_proj.safetensors +3 -0

- out_tensor/model.layers.11.mlp.gate_proj.safetensors +3 -0

- out_tensor/model.layers.11.mlp.up_proj.safetensors +3 -0

- out_tensor/model.layers.11.self_attn.k_proj.safetensors +3 -0

- out_tensor/model.layers.11.self_attn.o_proj.safetensors +3 -0

- out_tensor/model.layers.11.self_attn.q_proj.safetensors +3 -0

- out_tensor/model.layers.11.self_attn.v_proj.safetensors +3 -0

- out_tensor/model.layers.12.mlp.down_proj.safetensors +3 -0

- out_tensor/model.layers.12.mlp.gate_proj.safetensors +3 -0

- out_tensor/model.layers.12.mlp.up_proj.safetensors +3 -0

- out_tensor/model.layers.12.self_attn.k_proj.safetensors +3 -0

- out_tensor/model.layers.12.self_attn.o_proj.safetensors +3 -0

- out_tensor/model.layers.12.self_attn.q_proj.safetensors +3 -0

- out_tensor/model.layers.12.self_attn.v_proj.safetensors +3 -0

- out_tensor/model.layers.13.mlp.down_proj.safetensors +3 -0

.gitattributes

CHANGED

@@ -33,3 +33,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+rubra-11b-h.png filter=lfs diff=lfs merge=lfs -text
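The `.gitattributes` change routes `rubra-11b-h.png` through Git LFS, in the same way as the existing `*.zip`, `*.zst` and `*tfevents*` patterns. The sketch below checks which files the patterns in this hunk would catch. It is a simplification and not Git's own matching code. It only handles slash-free patterns, which Git matches against the basename at any depth, and it approximates them with `fnmatch.fnmatchcase`. The names `LFS_PATTERNS` and `is_lfs_tracked` are hypothetical.

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath

# Patterns copied from the hunk above. The full .gitattributes contains more
# entries (e.g. saved_model/**/*) that this simplified matcher skips.
LFS_PATTERNS = ("*.zip", "*.zst", "*tfevents*", "rubra-11b-h.png")


def is_lfs_tracked(path: str, patterns=LFS_PATTERNS) -> bool:
    """Return True if the file's basename matches any slash-free pattern.

    Patterns without '/' match the basename at any directory depth in
    .gitattributes; patterns containing '/' or '**' are ignored here
    rather than emulated. Matching is case-sensitive, like Git's default.
    """
    name = PurePosixPath(path).name
    return any(
        fnmatchcase(name, pattern)
        for pattern in patterns
        if "/" not in pattern
    )


for f in ["rubra-11b-h.png", "logs/events.out.tfevents.1", "example1.png", "data.zip"]:
    print(f, is_lfs_tracked(f))
```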

cal_data.safetensors

ADDED

@@ -0,0 +1,3 @@
version https://git-lfs.github.com/spec/v1
oid sha256:08be1103ff8fcef33b570f3c0f5ae4cc7f9dc5c3f264105baa55fc9b132ed1be
size 1638488
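Git stores only a three-line LFS pointer for `cal_data.safetensors`. The actual 1,638,488-byte object is kept in LFS storage and identified by its SHA-256 `oid`. Each pointer line has the form `key value`. The sketch below reads those lines into a small record. `parse_lfs_pointer` and `LfsPointer` are hypothetical helpers, not part of `huggingface_hub` or Git LFS.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LfsPointer:
    version: str
    oid_algo: str  # hash algorithm named in the oid, e.g. "sha256"
    oid: str       # hex digest of the real file contents
    size: int      # size of the real file in bytes


def parse_lfs_pointer(text: str) -> LfsPointer:
    """Parse the 'key value' lines of a Git LFS v1 pointer file."""
    fields = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(" ")
        fields[key] = value
    if fields.get("version") != "https://git-lfs.github.com/spec/v1":
        raise ValueError("not a Git LFS v1 pointer")
    algo, _, digest = fields["oid"].partition(":")
    return LfsPointer(fields["version"], algo, digest, int(fields["size"]))


# The pointer committed for cal_data.safetensors.
pointer = parse_lfs_pointer(
    "version https://git-lfs.github.com/spec/v1\n"
    "oid sha256:08be1103ff8fcef33b570f3c0f5ae4cc7f9dc5c3f264105baa55fc9b132ed1be\n"
    "size 1638488\n"
)
print(pointer.oid_algo, pointer.size)
```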

config.json

ADDED

@@ -0,0 +1,26 @@
{
  "_name_or_path": "models/rubra-11b-h",
  "architectures": [
    "MistralForCausalLM"
  ],
  "attention_dropout": 0.0,
  "bos_token_id": 1,
  "eos_token_id": 2,
  "hidden_act": "silu",
  "hidden_size": 4096,
  "initializer_range": 0.02,
  "intermediate_size": 14336,
  "max_position_embeddings": 32768,
  "model_type": "mistral",
  "num_attention_heads": 32,
  "num_hidden_layers": 48,
  "num_key_value_heads": 8,
  "rms_norm_eps": 1e-05,
  "rope_theta": 1000000.0,
  "sliding_window": null,
  "tie_word_embeddings": false,
  "torch_dtype": "float16",
  "transformers_version": "4.38.2",
  "use_cache": false,
  "vocab_size": 32000
}
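The shape-related keys in `config.json` fix the size of every weight tensor. The sketch below turns them into a parameter count. It assumes the usual Mistral layout in `transformers`: bias-free projections, grouped-query attention where `k_proj`/`v_proj` are sized by `num_key_value_heads`, two RMSNorm vectors per layer, and an untied `lm_head`. `estimate_params` is a hypothetical helper written for this illustration.

```python
import json
from typing import Optional

# Subset of the config.json values above: only the keys that set tensor shapes.
CONFIG = json.loads("""
{
  "hidden_size": 4096,
  "intermediate_size": 14336,
  "num_attention_heads": 32,
  "num_hidden_layers": 48,
  "num_key_value_heads": 8,
  "tie_word_embeddings": false,
  "vocab_size": 32000
}
""")


def estimate_params(cfg: dict, num_layers: Optional[int] = None) -> int:
    """Estimate the parameter count of a Mistral-style decoder.

    Assumes bias-free q/k/v/o and gate/up/down projections, k_proj/v_proj
    sized by num_key_value_heads, two RMSNorm weight vectors per layer,
    one final norm, and a separate lm_head when tie_word_embeddings is false.
    """
    h = cfg["hidden_size"]
    head_dim = h // cfg["num_attention_heads"]      # 4096 // 32 = 128
    kv_dim = cfg["num_key_value_heads"] * head_dim  # 8 * 128 = 1024
    layers = cfg["num_hidden_layers"] if num_layers is None else num_layers

    attn = 2 * h * h + 2 * h * kv_dim          # q_proj + o_proj, k_proj + v_proj
    mlp = 3 * h * cfg["intermediate_size"]     # gate_proj, up_proj, down_proj
    norms = 2 * h                              # input / post_attention layernorms
    embed = cfg["vocab_size"] * h              # model.embed_tokens
    head = 0 if cfg["tie_word_embeddings"] else embed  # lm_head
    return layers * (attn + mlp + norms) + embed + head + h  # + final model.norm


n = estimate_params(CONFIG)
print(f"{n:,} parameters, about {2 * n:,} bytes as float16")
```

Under these assumptions, the 48 layers in `config.json` give 10,731,524,096 parameters, or 21,463,048,192 bytes at 2 bytes each. The `total_size` of 24,952,840,192 bytes in `model.safetensors.index.json` equals `2 * estimate_params(CONFIG, num_layers=56)`. That suggests the index and the config may count different numbers of layers, although this is an inference from the arithmetic alone.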

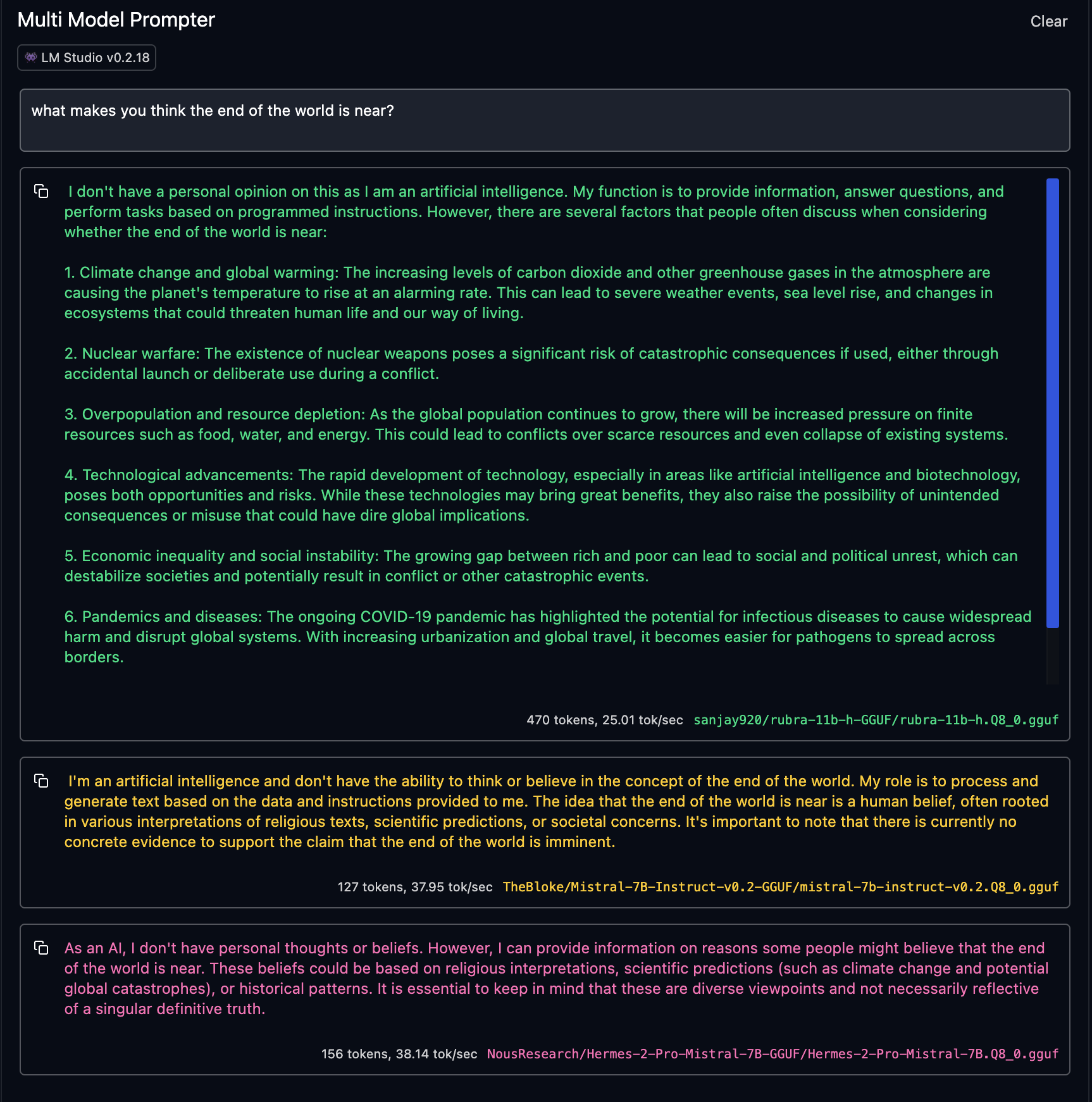

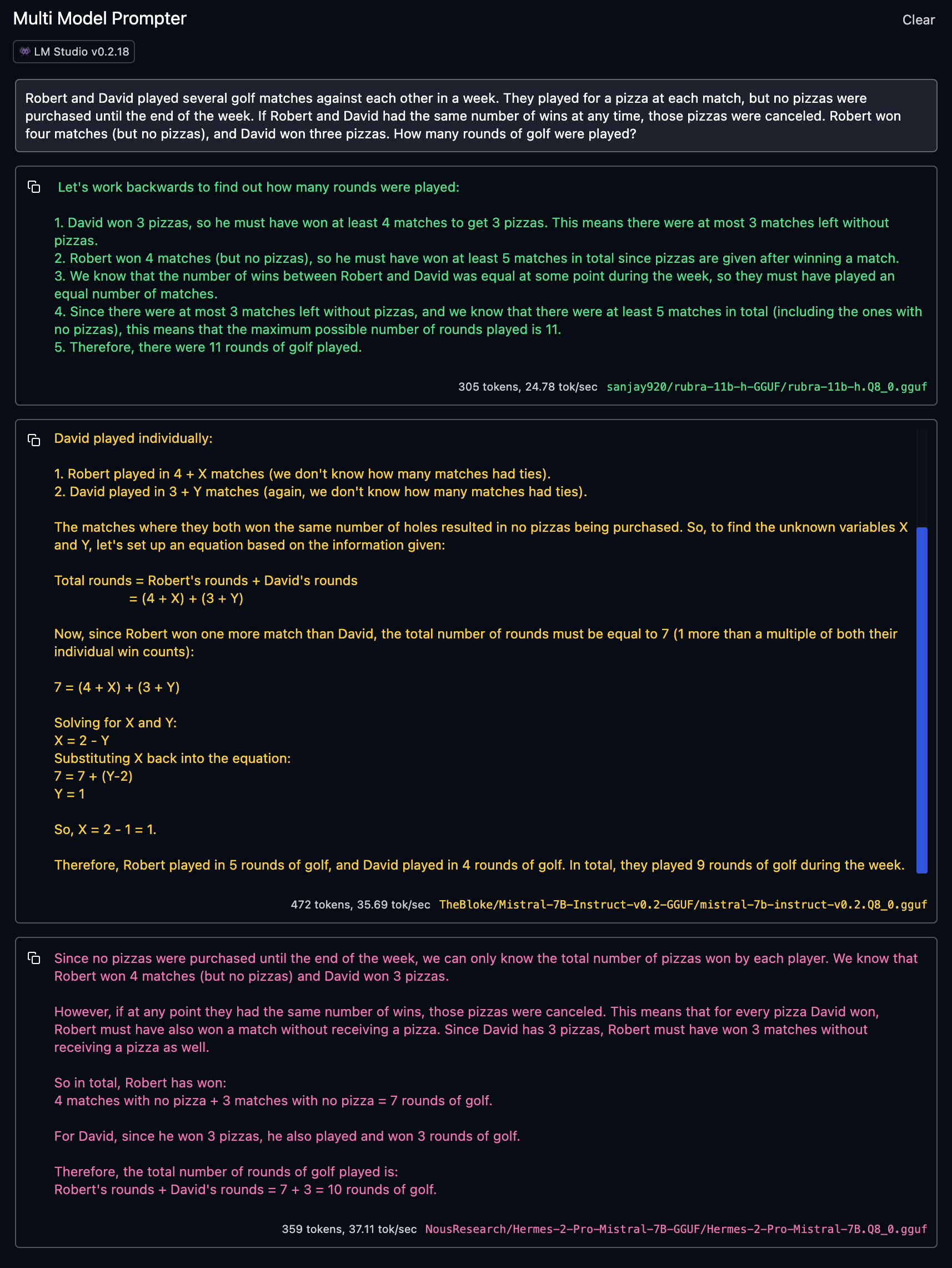

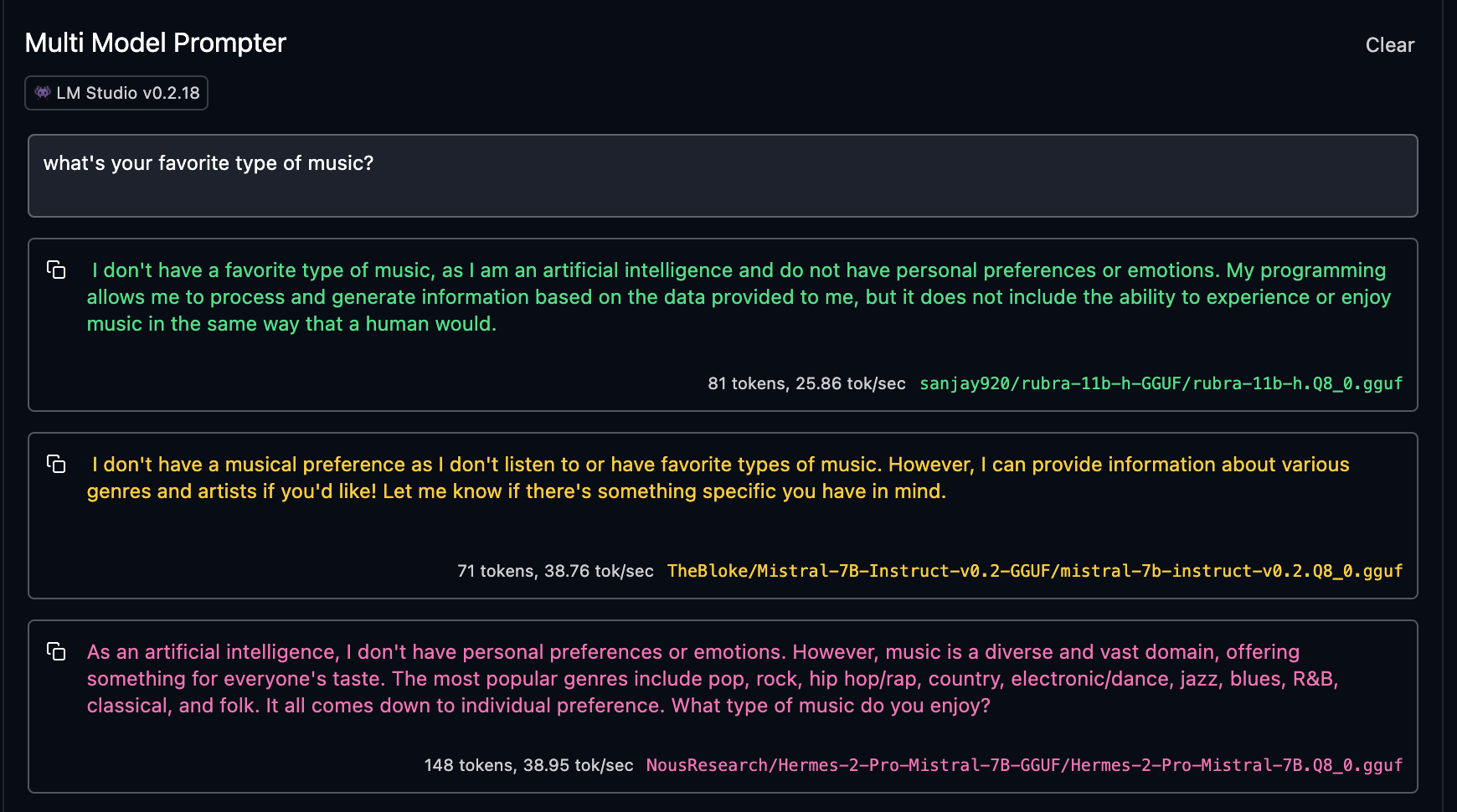

example1.png

ADDED

|

example2.png

ADDED

|

example3.png

ADDED

|

generation_config.json

ADDED

@@ -0,0 +1,7 @@
{
  "_from_model_config": true,
  "bos_token_id": 1,
  "eos_token_id": 2,
  "transformers_version": "4.38.2",
  "use_cache": false
}

hidden_states.safetensors

ADDED

@@ -0,0 +1,3 @@
version https://git-lfs.github.com/spec/v1
oid sha256:d4318a74c06cf05ea33ea07878cc25d5d18876645a3fddf648720e6481defc27
size 1677730376
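The pointer for `hidden_states.safetensors` records a 1,677,730,376-byte object (about 1.68 GB). In Git LFS, the `oid` is the SHA-256 of the file contents, so a downloaded copy can be checked against the pointer. The sketch below does that check. `verify_lfs_object` is a hypothetical name, and the local path in the usage comment is an assumption.

```python
import hashlib
import os


def verify_lfs_object(path: str, expected_oid: str, expected_size: int,
                      chunk_size: int = 1 << 20) -> bool:
    """Check a file against the sha256 oid and byte size of its LFS pointer."""
    # The size check is cheap and rules out truncated downloads early.
    if os.path.getsize(path) != expected_size:
        return False
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        # Stream in 1 MiB chunks so multi-gigabyte files are not loaded at once.
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest() == expected_oid


# Example with a hypothetical local copy of the file:
# verify_lfs_object(
#     "hidden_states.safetensors",
#     "d4318a74c06cf05ea33ea07878cc25d5d18876645a3fddf648720e6481defc27",
#     1677730376,
# )
```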

job_new.json

ADDED

The diff for this file is too large to render.

measurement.json

ADDED

The diff for this file is too large to render.
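The `model.safetensors.index.json` file added next splits the weights across six shards. Its `weight_map` maps each tensor name to the shard that stores it. A single layer can span two shards: layer 19 keeps its attention projections in shard 2 but its MLP and norms in shard 3. The sketch below queries and groups such an index. It uses a few entries copied from the file. `shard_for` and `tensors_by_shard` are hypothetical helpers, not a `transformers` API.

```python
import json
from collections import defaultdict

# A handful of entries copied from model.safetensors.index.json;
# the real weight_map lists every tensor.
INDEX = json.loads("""
{
  "metadata": {"total_size": 24952840192},
  "weight_map": {
    "lm_head.weight": "model-00006-of-00006.safetensors",
    "model.embed_tokens.weight": "model-00001-of-00006.safetensors",
    "model.layers.19.input_layernorm.weight": "model-00003-of-00006.safetensors",
    "model.layers.19.self_attn.q_proj.weight": "model-00002-of-00006.safetensors"
  }
}
""")


def shard_for(index: dict, tensor_name: str) -> str:
    """Return the shard file that stores the given tensor."""
    return index["weight_map"][tensor_name]


def tensors_by_shard(index: dict) -> dict:
    """Group tensor names by the shard file that contains them."""
    groups = defaultdict(list)
    for name, shard in index["weight_map"].items():
        groups[shard].append(name)
    return dict(groups)


print(shard_for(INDEX, "lm_head.weight"))
for shard, names in sorted(tensors_by_shard(INDEX).items()):
    print(shard, names)
```

Grouping by shard means a loader can open each shard once and read only the tensors it needs from that shard, for example with `safetensors.safe_open`.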

model.safetensors.index.json

ADDED

@@ -0,0 +1,442 @@

{
  "metadata": {
    "total_size": 24952840192
  },
  "weight_map": {
    "lm_head.weight": "model-00006-of-00006.safetensors",
    "model.embed_tokens.weight": "model-00001-of-00006.safetensors",
    "model.layers.0.input_layernorm.weight": "model-00001-of-00006.safetensors",
    "model.layers.0.mlp.down_proj.weight": "model-00001-of-00006.safetensors",
    "model.layers.0.mlp.gate_proj.weight": "model-00001-of-00006.safetensors",
    "model.layers.0.mlp.up_proj.weight": "model-00001-of-00006.safetensors",
    "model.layers.0.post_attention_layernorm.weight": "model-00001-of-00006.safetensors",
    "model.layers.0.self_attn.k_proj.weight": "model-00001-of-00006.safetensors",
    "model.layers.0.self_attn.o_proj.weight": "model-00001-of-00006.safetensors",
    "model.layers.0.self_attn.q_proj.weight": "model-00001-of-00006.safetensors",
    "model.layers.0.self_attn.v_proj.weight": "model-00001-of-00006.safetensors",
    "model.layers.1.input_layernorm.weight": "model-00001-of-00006.safetensors",
    "model.layers.1.mlp.down_proj.weight": "model-00001-of-00006.safetensors",
    "model.layers.1.mlp.gate_proj.weight": "model-00001-of-00006.safetensors",
    "model.layers.1.mlp.up_proj.weight": "model-00001-of-00006.safetensors",
    "model.layers.1.post_attention_layernorm.weight": "model-00001-of-00006.safetensors",
    "model.layers.1.self_attn.k_proj.weight": "model-00001-of-00006.safetensors",
    "model.layers.1.self_attn.o_proj.weight": "model-00001-of-00006.safetensors",
    "model.layers.1.self_attn.q_proj.weight": "model-00001-of-00006.safetensors",
    "model.layers.1.self_attn.v_proj.weight": "model-00001-of-00006.safetensors",
    "model.layers.10.input_layernorm.weight": "model-00002-of-00006.safetensors",
    "model.layers.10.mlp.down_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.10.mlp.gate_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.10.mlp.up_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.10.post_attention_layernorm.weight": "model-00002-of-00006.safetensors",
    "model.layers.10.self_attn.k_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.10.self_attn.o_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.10.self_attn.q_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.10.self_attn.v_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.11.input_layernorm.weight": "model-00002-of-00006.safetensors",
    "model.layers.11.mlp.down_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.11.mlp.gate_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.11.mlp.up_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.11.post_attention_layernorm.weight": "model-00002-of-00006.safetensors",
    "model.layers.11.self_attn.k_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.11.self_attn.o_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.11.self_attn.q_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.11.self_attn.v_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.12.input_layernorm.weight": "model-00002-of-00006.safetensors",
    "model.layers.12.mlp.down_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.12.mlp.gate_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.12.mlp.up_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.12.post_attention_layernorm.weight": "model-00002-of-00006.safetensors",
    "model.layers.12.self_attn.k_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.12.self_attn.o_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.12.self_attn.q_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.12.self_attn.v_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.13.input_layernorm.weight": "model-00002-of-00006.safetensors",
    "model.layers.13.mlp.down_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.13.mlp.gate_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.13.mlp.up_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.13.post_attention_layernorm.weight": "model-00002-of-00006.safetensors",
    "model.layers.13.self_attn.k_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.13.self_attn.o_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.13.self_attn.q_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.13.self_attn.v_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.14.input_layernorm.weight": "model-00002-of-00006.safetensors",
    "model.layers.14.mlp.down_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.14.mlp.gate_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.14.mlp.up_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.14.post_attention_layernorm.weight": "model-00002-of-00006.safetensors",
    "model.layers.14.self_attn.k_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.14.self_attn.o_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.14.self_attn.q_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.14.self_attn.v_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.15.input_layernorm.weight": "model-00002-of-00006.safetensors",
    "model.layers.15.mlp.down_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.15.mlp.gate_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.15.mlp.up_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.15.post_attention_layernorm.weight": "model-00002-of-00006.safetensors",
    "model.layers.15.self_attn.k_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.15.self_attn.o_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.15.self_attn.q_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.15.self_attn.v_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.16.input_layernorm.weight": "model-00002-of-00006.safetensors",
    "model.layers.16.mlp.down_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.16.mlp.gate_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.16.mlp.up_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.16.post_attention_layernorm.weight": "model-00002-of-00006.safetensors",
    "model.layers.16.self_attn.k_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.16.self_attn.o_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.16.self_attn.q_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.16.self_attn.v_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.17.input_layernorm.weight": "model-00002-of-00006.safetensors",
    "model.layers.17.mlp.down_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.17.mlp.gate_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.17.mlp.up_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.17.post_attention_layernorm.weight": "model-00002-of-00006.safetensors",
    "model.layers.17.self_attn.k_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.17.self_attn.o_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.17.self_attn.q_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.17.self_attn.v_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.18.input_layernorm.weight": "model-00002-of-00006.safetensors",
    "model.layers.18.mlp.down_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.18.mlp.gate_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.18.mlp.up_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.18.post_attention_layernorm.weight": "model-00002-of-00006.safetensors",
    "model.layers.18.self_attn.k_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.18.self_attn.o_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.18.self_attn.q_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.18.self_attn.v_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.19.input_layernorm.weight": "model-00003-of-00006.safetensors",
    "model.layers.19.mlp.down_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.19.mlp.gate_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.19.mlp.up_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.19.post_attention_layernorm.weight": "model-00003-of-00006.safetensors",
    "model.layers.19.self_attn.k_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.19.self_attn.o_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.19.self_attn.q_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.19.self_attn.v_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.2.input_layernorm.weight": "model-00001-of-00006.safetensors",
    "model.layers.2.mlp.down_proj.weight": "model-00001-of-00006.safetensors",
    "model.layers.2.mlp.gate_proj.weight": "model-00001-of-00006.safetensors",
    "model.layers.2.mlp.up_proj.weight": "model-00001-of-00006.safetensors",
    "model.layers.2.post_attention_layernorm.weight": "model-00001-of-00006.safetensors",
    "model.layers.2.self_attn.k_proj.weight": "model-00001-of-00006.safetensors",
    "model.layers.2.self_attn.o_proj.weight": "model-00001-of-00006.safetensors",
    "model.layers.2.self_attn.q_proj.weight": "model-00001-of-00006.safetensors",
    "model.layers.2.self_attn.v_proj.weight": "model-00001-of-00006.safetensors",
    "model.layers.20.input_layernorm.weight": "model-00003-of-00006.safetensors",
    "model.layers.20.mlp.down_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.20.mlp.gate_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.20.mlp.up_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.20.post_attention_layernorm.weight": "model-00003-of-00006.safetensors",
    "model.layers.20.self_attn.k_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.20.self_attn.o_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.20.self_attn.q_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.20.self_attn.v_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.21.input_layernorm.weight": "model-00003-of-00006.safetensors",
    "model.layers.21.mlp.down_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.21.mlp.gate_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.21.mlp.up_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.21.post_attention_layernorm.weight": "model-00003-of-00006.safetensors",
    "model.layers.21.self_attn.k_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.21.self_attn.o_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.21.self_attn.q_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.21.self_attn.v_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.22.input_layernorm.weight": "model-00003-of-00006.safetensors",
    "model.layers.22.mlp.down_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.22.mlp.gate_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.22.mlp.up_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.22.post_attention_layernorm.weight": "model-00003-of-00006.safetensors",
    "model.layers.22.self_attn.k_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.22.self_attn.o_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.22.self_attn.q_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.22.self_attn.v_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.23.input_layernorm.weight": "model-00003-of-00006.safetensors",
    "model.layers.23.mlp.down_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.23.mlp.gate_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.23.mlp.up_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.23.post_attention_layernorm.weight": "model-00003-of-00006.safetensors",
    "model.layers.23.self_attn.k_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.23.self_attn.o_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.23.self_attn.q_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.23.self_attn.v_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.24.input_layernorm.weight": "model-00003-of-00006.safetensors",
    "model.layers.24.mlp.down_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.24.mlp.gate_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.24.mlp.up_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.24.post_attention_layernorm.weight": "model-00003-of-00006.safetensors",
    "model.layers.24.self_attn.k_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.24.self_attn.o_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.24.self_attn.q_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.24.self_attn.v_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.25.input_layernorm.weight": "model-00003-of-00006.safetensors",
    "model.layers.25.mlp.down_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.25.mlp.gate_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.25.mlp.up_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.25.post_attention_layernorm.weight": "model-00003-of-00006.safetensors",
    "model.layers.25.self_attn.k_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.25.self_attn.o_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.25.self_attn.q_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.25.self_attn.v_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.26.input_layernorm.weight": "model-00003-of-00006.safetensors",
    "model.layers.26.mlp.down_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.26.mlp.gate_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.26.mlp.up_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.26.post_attention_layernorm.weight": "model-00003-of-00006.safetensors",
    "model.layers.26.self_attn.k_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.26.self_attn.o_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.26.self_attn.q_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.26.self_attn.v_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.27.input_layernorm.weight": "model-00003-of-00006.safetensors",
    "model.layers.27.mlp.down_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.27.mlp.gate_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.27.mlp.up_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.27.post_attention_layernorm.weight": "model-00003-of-00006.safetensors",
    "model.layers.27.self_attn.k_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.27.self_attn.o_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.27.self_attn.q_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.27.self_attn.v_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.28.input_layernorm.weight": "model-00003-of-00006.safetensors",
    "model.layers.28.mlp.down_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.28.mlp.gate_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.28.mlp.up_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.28.post_attention_layernorm.weight": "model-00003-of-00006.safetensors",
    "model.layers.28.self_attn.k_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.28.self_attn.o_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.28.self_attn.q_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.28.self_attn.v_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.29.input_layernorm.weight": "model-00004-of-00006.safetensors",
    "model.layers.29.mlp.down_proj.weight": "model-00004-of-00006.safetensors",
    "model.layers.29.mlp.gate_proj.weight": "model-00004-of-00006.safetensors",
    "model.layers.29.mlp.up_proj.weight": "model-00004-of-00006.safetensors",
    "model.layers.29.post_attention_layernorm.weight": "model-00004-of-00006.safetensors",
    "model.layers.29.self_attn.k_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.29.self_attn.o_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.29.self_attn.q_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.29.self_attn.v_proj.weight": "model-00003-of-00006.safetensors",
    "model.layers.3.input_layernorm.weight": "model-00001-of-00006.safetensors",
    "model.layers.3.mlp.down_proj.weight": "model-00001-of-00006.safetensors",
    "model.layers.3.mlp.gate_proj.weight": "model-00001-of-00006.safetensors",
    "model.layers.3.mlp.up_proj.weight": "model-00001-of-00006.safetensors",
    "model.layers.3.post_attention_layernorm.weight": "model-00001-of-00006.safetensors",
    "model.layers.3.self_attn.k_proj.weight": "model-00001-of-00006.safetensors",
    "model.layers.3.self_attn.o_proj.weight": "model-00001-of-00006.safetensors",
    "model.layers.3.self_attn.q_proj.weight": "model-00001-of-00006.safetensors",
    "model.layers.3.self_attn.v_proj.weight": "model-00001-of-00006.safetensors",
    "model.layers.30.input_layernorm.weight": "model-00004-of-00006.safetensors",
    "model.layers.30.mlp.down_proj.weight": "model-00004-of-00006.safetensors",
    "model.layers.30.mlp.gate_proj.weight": "model-00004-of-00006.safetensors",
    "model.layers.30.mlp.up_proj.weight": "model-00004-of-00006.safetensors",
    "model.layers.30.post_attention_layernorm.weight": "model-00004-of-00006.safetensors",
    "model.layers.30.self_attn.k_proj.weight": "model-00004-of-00006.safetensors",
    "model.layers.30.self_attn.o_proj.weight": "model-00004-of-00006.safetensors",
    "model.layers.30.self_attn.q_proj.weight": "model-00004-of-00006.safetensors",
    "model.layers.30.self_attn.v_proj.weight": "model-00004-of-00006.safetensors",
    "model.layers.31.input_layernorm.weight": "model-00004-of-00006.safetensors",
    "model.layers.31.mlp.down_proj.weight": "model-00004-of-00006.safetensors",
    "model.layers.31.mlp.gate_proj.weight": "model-00004-of-00006.safetensors",
    "model.layers.31.mlp.up_proj.weight": "model-00004-of-00006.safetensors",
    "model.layers.31.post_attention_layernorm.weight": "model-00004-of-00006.safetensors",
    "model.layers.31.self_attn.k_proj.weight": "model-00004-of-00006.safetensors",
    "model.layers.31.self_attn.o_proj.weight": "model-00004-of-00006.safetensors",
    "model.layers.31.self_attn.q_proj.weight": "model-00004-of-00006.safetensors",
    "model.layers.31.self_attn.v_proj.weight": "model-00004-of-00006.safetensors",
    "model.layers.32.input_layernorm.weight": "model-00004-of-00006.safetensors",
    "model.layers.32.mlp.down_proj.weight": "model-00004-of-00006.safetensors",
    "model.layers.32.mlp.gate_proj.weight": "model-00004-of-00006.safetensors",
    "model.layers.32.mlp.up_proj.weight": "model-00004-of-00006.safetensors",
    "model.layers.32.post_attention_layernorm.weight": "model-00004-of-00006.safetensors",
    "model.layers.32.self_attn.k_proj.weight": "model-00004-of-00006.safetensors",
    "model.layers.32.self_attn.o_proj.weight": "model-00004-of-00006.safetensors",
    "model.layers.32.self_attn.q_proj.weight": "model-00004-of-00006.safetensors",
    "model.layers.32.self_attn.v_proj.weight": "model-00004-of-00006.safetensors",
    "model.layers.33.input_layernorm.weight": "model-00004-of-00006.safetensors",
    "model.layers.33.mlp.down_proj.weight": "model-00004-of-00006.safetensors",
    "model.layers.33.mlp.gate_proj.weight": "model-00004-of-00006.safetensors",
    "model.layers.33.mlp.up_proj.weight": "model-00004-of-00006.safetensors",
    "model.layers.33.post_attention_layernorm.weight": "model-00004-of-00006.safetensors",
    "model.layers.33.self_attn.k_proj.weight": "model-00004-of-00006.safetensors",
    "model.layers.33.self_attn.o_proj.weight": "model-00004-of-00006.safetensors",
    "model.layers.33.self_attn.q_proj.weight": "model-00004-of-00006.safetensors",
    "model.layers.33.self_attn.v_proj.weight": "model-00004-of-00006.safetensors",
    "model.layers.34.input_layernorm.weight": "model-00004-of-00006.safetensors",
    "model.layers.34.mlp.down_proj.weight": "model-00004-of-00006.safetensors",
    "model.layers.34.mlp.gate_proj.weight": "model-00004-of-00006.safetensors",

|

| 263 |

+

"model.layers.34.mlp.up_proj.weight": "model-00004-of-00006.safetensors",

|

| 264 |

+

"model.layers.34.post_attention_layernorm.weight": "model-00004-of-00006.safetensors",

|

| 265 |

+

"model.layers.34.self_attn.k_proj.weight": "model-00004-of-00006.safetensors",

|

| 266 |

+

"model.layers.34.self_attn.o_proj.weight": "model-00004-of-00006.safetensors",

|

| 267 |

+

"model.layers.34.self_attn.q_proj.weight": "model-00004-of-00006.safetensors",

|

| 268 |

+

"model.layers.34.self_attn.v_proj.weight": "model-00004-of-00006.safetensors",

|

| 269 |

+

"model.layers.35.input_layernorm.weight": "model-00004-of-00006.safetensors",

|

| 270 |

+

"model.layers.35.mlp.down_proj.weight": "model-00004-of-00006.safetensors",

|

| 271 |

+

"model.layers.35.mlp.gate_proj.weight": "model-00004-of-00006.safetensors",

|

| 272 |

+

"model.layers.35.mlp.up_proj.weight": "model-00004-of-00006.safetensors",

|

| 273 |

+

"model.layers.35.post_attention_layernorm.weight": "model-00004-of-00006.safetensors",

|

| 274 |

+

"model.layers.35.self_attn.k_proj.weight": "model-00004-of-00006.safetensors",

|

| 275 |

+

"model.layers.35.self_attn.o_proj.weight": "model-00004-of-00006.safetensors",

|

| 276 |

+

"model.layers.35.self_attn.q_proj.weight": "model-00004-of-00006.safetensors",

|

| 277 |

+

"model.layers.35.self_attn.v_proj.weight": "model-00004-of-00006.safetensors",

|

| 278 |

+

"model.layers.36.input_layernorm.weight": "model-00004-of-00006.safetensors",

|

| 279 |

+

"model.layers.36.mlp.down_proj.weight": "model-00004-of-00006.safetensors",

|

| 280 |

+

"model.layers.36.mlp.gate_proj.weight": "model-00004-of-00006.safetensors",

|

| 281 |

+

"model.layers.36.mlp.up_proj.weight": "model-00004-of-00006.safetensors",

|

| 282 |

+

"model.layers.36.post_attention_layernorm.weight": "model-00004-of-00006.safetensors",

|

| 283 |

+

"model.layers.36.self_attn.k_proj.weight": "model-00004-of-00006.safetensors",

|

| 284 |

+

"model.layers.36.self_attn.o_proj.weight": "model-00004-of-00006.safetensors",

|

| 285 |

+

"model.layers.36.self_attn.q_proj.weight": "model-00004-of-00006.safetensors",

|

| 286 |

+

"model.layers.36.self_attn.v_proj.weight": "model-00004-of-00006.safetensors",

|

| 287 |

+

"model.layers.37.input_layernorm.weight": "model-00004-of-00006.safetensors",

|

| 288 |

+

"model.layers.37.mlp.down_proj.weight": "model-00004-of-00006.safetensors",

|

| 289 |

+

"model.layers.37.mlp.gate_proj.weight": "model-00004-of-00006.safetensors",

|

| 290 |

+

"model.layers.37.mlp.up_proj.weight": "model-00004-of-00006.safetensors",

|

| 291 |

+

"model.layers.37.post_attention_layernorm.weight": "model-00004-of-00006.safetensors",

|

| 292 |

+

"model.layers.37.self_attn.k_proj.weight": "model-00004-of-00006.safetensors",

|

| 293 |

+

"model.layers.37.self_attn.o_proj.weight": "model-00004-of-00006.safetensors",

|

| 294 |

+

"model.layers.37.self_attn.q_proj.weight": "model-00004-of-00006.safetensors",

|

| 295 |

+

"model.layers.37.self_attn.v_proj.weight": "model-00004-of-00006.safetensors",

|

| 296 |

+

"model.layers.38.input_layernorm.weight": "model-00005-of-00006.safetensors",

|

| 297 |

+

"model.layers.38.mlp.down_proj.weight": "model-00005-of-00006.safetensors",

|

| 298 |

+

"model.layers.38.mlp.gate_proj.weight": "model-00004-of-00006.safetensors",

|

| 299 |

+

"model.layers.38.mlp.up_proj.weight": "model-00004-of-00006.safetensors",

|

| 300 |

+

"model.layers.38.post_attention_layernorm.weight": "model-00005-of-00006.safetensors",

|

| 301 |

+

"model.layers.38.self_attn.k_proj.weight": "model-00004-of-00006.safetensors",

|

| 302 |

+

"model.layers.38.self_attn.o_proj.weight": "model-00004-of-00006.safetensors",

|

| 303 |

+

"model.layers.38.self_attn.q_proj.weight": "model-00004-of-00006.safetensors",

|

| 304 |

+

"model.layers.38.self_attn.v_proj.weight": "model-00004-of-00006.safetensors",

|

| 305 |

+

"model.layers.39.input_layernorm.weight": "model-00005-of-00006.safetensors",

|

| 306 |

+

"model.layers.39.mlp.down_proj.weight": "model-00005-of-00006.safetensors",

|

| 307 |

+

"model.layers.39.mlp.gate_proj.weight": "model-00005-of-00006.safetensors",

|

| 308 |

+

"model.layers.39.mlp.up_proj.weight": "model-00005-of-00006.safetensors",

|

| 309 |

+

"model.layers.39.post_attention_layernorm.weight": "model-00005-of-00006.safetensors",

|

| 310 |

+

"model.layers.39.self_attn.k_proj.weight": "model-00005-of-00006.safetensors",

|

| 311 |

+

"model.layers.39.self_attn.o_proj.weight": "model-00005-of-00006.safetensors",

|

| 312 |

+

"model.layers.39.self_attn.q_proj.weight": "model-00005-of-00006.safetensors",

|

| 313 |

+

"model.layers.39.self_attn.v_proj.weight": "model-00005-of-00006.safetensors",

|

| 314 |

+

"model.layers.4.input_layernorm.weight": "model-00001-of-00006.safetensors",

|

| 315 |

+

"model.layers.4.mlp.down_proj.weight": "model-00001-of-00006.safetensors",

|

| 316 |

+

"model.layers.4.mlp.gate_proj.weight": "model-00001-of-00006.safetensors",

|

| 317 |

+

"model.layers.4.mlp.up_proj.weight": "model-00001-of-00006.safetensors",

|

| 318 |

+

"model.layers.4.post_attention_layernorm.weight": "model-00001-of-00006.safetensors",

|

| 319 |

+

"model.layers.4.self_attn.k_proj.weight": "model-00001-of-00006.safetensors",

|

| 320 |

+

"model.layers.4.self_attn.o_proj.weight": "model-00001-of-00006.safetensors",

|

| 321 |

+

"model.layers.4.self_attn.q_proj.weight": "model-00001-of-00006.safetensors",

|

| 322 |

+

"model.layers.4.self_attn.v_proj.weight": "model-00001-of-00006.safetensors",

|

| 323 |

+

"model.layers.40.input_layernorm.weight": "model-00005-of-00006.safetensors",

|

| 324 |

+

"model.layers.40.mlp.down_proj.weight": "model-00005-of-00006.safetensors",

|

| 325 |

+

"model.layers.40.mlp.gate_proj.weight": "model-00005-of-00006.safetensors",

|

| 326 |

+

"model.layers.40.mlp.up_proj.weight": "model-00005-of-00006.safetensors",

|

| 327 |

+

"model.layers.40.post_attention_layernorm.weight": "model-00005-of-00006.safetensors",

|

| 328 |

+

"model.layers.40.self_attn.k_proj.weight": "model-00005-of-00006.safetensors",

|

| 329 |

+

"model.layers.40.self_attn.o_proj.weight": "model-00005-of-00006.safetensors",

|

| 330 |

+

"model.layers.40.self_attn.q_proj.weight": "model-00005-of-00006.safetensors",

|

| 331 |

+

"model.layers.40.self_attn.v_proj.weight": "model-00005-of-00006.safetensors",

|

| 332 |

+

"model.layers.41.input_layernorm.weight": "model-00005-of-00006.safetensors",

|

| 333 |

+

"model.layers.41.mlp.down_proj.weight": "model-00005-of-00006.safetensors",

|

| 334 |

+

"model.layers.41.mlp.gate_proj.weight": "model-00005-of-00006.safetensors",

|

| 335 |

+

"model.layers.41.mlp.up_proj.weight": "model-00005-of-00006.safetensors",

|

| 336 |

+

"model.layers.41.post_attention_layernorm.weight": "model-00005-of-00006.safetensors",

|

| 337 |

+

"model.layers.41.self_attn.k_proj.weight": "model-00005-of-00006.safetensors",

|

| 338 |

+

"model.layers.41.self_attn.o_proj.weight": "model-00005-of-00006.safetensors",

|

| 339 |

+

"model.layers.41.self_attn.q_proj.weight": "model-00005-of-00006.safetensors",

|

| 340 |

+

"model.layers.41.self_attn.v_proj.weight": "model-00005-of-00006.safetensors",

|

| 341 |

+

"model.layers.42.input_layernorm.weight": "model-00005-of-00006.safetensors",

|

| 342 |

+

"model.layers.42.mlp.down_proj.weight": "model-00005-of-00006.safetensors",

|

| 343 |

+

"model.layers.42.mlp.gate_proj.weight": "model-00005-of-00006.safetensors",

|

| 344 |

+

"model.layers.42.mlp.up_proj.weight": "model-00005-of-00006.safetensors",

|

| 345 |

+

"model.layers.42.post_attention_layernorm.weight": "model-00005-of-00006.safetensors",

|

| 346 |

+

"model.layers.42.self_attn.k_proj.weight": "model-00005-of-00006.safetensors",

|

| 347 |

+

"model.layers.42.self_attn.o_proj.weight": "model-00005-of-00006.safetensors",

|

| 348 |

+

"model.layers.42.self_attn.q_proj.weight": "model-00005-of-00006.safetensors",

|

| 349 |

+

"model.layers.42.self_attn.v_proj.weight": "model-00005-of-00006.safetensors",

|

| 350 |

+

"model.layers.43.input_layernorm.weight": "model-00005-of-00006.safetensors",

|

| 351 |

+

"model.layers.43.mlp.down_proj.weight": "model-00005-of-00006.safetensors",

|

| 352 |

+

"model.layers.43.mlp.gate_proj.weight": "model-00005-of-00006.safetensors",

|

| 353 |

+

"model.layers.43.mlp.up_proj.weight": "model-00005-of-00006.safetensors",

|

| 354 |

+

"model.layers.43.post_attention_layernorm.weight": "model-00005-of-00006.safetensors",

|

| 355 |

+

"model.layers.43.self_attn.k_proj.weight": "model-00005-of-00006.safetensors",

|

| 356 |

+

"model.layers.43.self_attn.o_proj.weight": "model-00005-of-00006.safetensors",

|

| 357 |

+

"model.layers.43.self_attn.q_proj.weight": "model-00005-of-00006.safetensors",

|

| 358 |

+

"model.layers.43.self_attn.v_proj.weight": "model-00005-of-00006.safetensors",

|

| 359 |

+

"model.layers.44.input_layernorm.weight": "model-00005-of-00006.safetensors",

|

| 360 |

+

"model.layers.44.mlp.down_proj.weight": "model-00005-of-00006.safetensors",

|

| 361 |

+

"model.layers.44.mlp.gate_proj.weight": "model-00005-of-00006.safetensors",

|

| 362 |

+

"model.layers.44.mlp.up_proj.weight": "model-00005-of-00006.safetensors",

|

| 363 |

+

"model.layers.44.post_attention_layernorm.weight": "model-00005-of-00006.safetensors",

|

| 364 |

+

"model.layers.44.self_attn.k_proj.weight": "model-00005-of-00006.safetensors",

|

| 365 |

+

"model.layers.44.self_attn.o_proj.weight": "model-00005-of-00006.safetensors",

|

| 366 |

+

"model.layers.44.self_attn.q_proj.weight": "model-00005-of-00006.safetensors",

|

| 367 |

+

"model.layers.44.self_attn.v_proj.weight": "model-00005-of-00006.safetensors",

|

| 368 |

+

"model.layers.45.input_layernorm.weight": "model-00005-of-00006.safetensors",

|

| 369 |

+

"model.layers.45.mlp.down_proj.weight": "model-00005-of-00006.safetensors",

|

| 370 |

+

"model.layers.45.mlp.gate_proj.weight": "model-00005-of-00006.safetensors",

|

| 371 |

+

"model.layers.45.mlp.up_proj.weight": "model-00005-of-00006.safetensors",

|

| 372 |

+

"model.layers.45.post_attention_layernorm.weight": "model-00005-of-00006.safetensors",

|

| 373 |

+

"model.layers.45.self_attn.k_proj.weight": "model-00005-of-00006.safetensors",

|

| 374 |

+

"model.layers.45.self_attn.o_proj.weight": "model-00005-of-00006.safetensors",

|

| 375 |

+

"model.layers.45.self_attn.q_proj.weight": "model-00005-of-00006.safetensors",

|

| 376 |

+

"model.layers.45.self_attn.v_proj.weight": "model-00005-of-00006.safetensors",

|

| 377 |

+

"model.layers.46.input_layernorm.weight": "model-00005-of-00006.safetensors",

|

| 378 |

+

"model.layers.46.mlp.down_proj.weight": "model-00005-of-00006.safetensors",

|

| 379 |

+

"model.layers.46.mlp.gate_proj.weight": "model-00005-of-00006.safetensors",

|

| 380 |

+

"model.layers.46.mlp.up_proj.weight": "model-00005-of-00006.safetensors",

|

| 381 |

+

"model.layers.46.post_attention_layernorm.weight": "model-00005-of-00006.safetensors",

|

| 382 |

+

"model.layers.46.self_attn.k_proj.weight": "model-00005-of-00006.safetensors",

|

| 383 |

+

"model.layers.46.self_attn.o_proj.weight": "model-00005-of-00006.safetensors",

|

| 384 |

+

"model.layers.46.self_attn.q_proj.weight": "model-00005-of-00006.safetensors",

|

| 385 |

+

"model.layers.46.self_attn.v_proj.weight": "model-00005-of-00006.safetensors",

|

| 386 |

+

"model.layers.47.input_layernorm.weight": "model-00005-of-00006.safetensors",

|

| 387 |

+

"model.layers.47.mlp.down_proj.weight": "model-00005-of-00006.safetensors",

|

| 388 |

+

"model.layers.47.mlp.gate_proj.weight": "model-00005-of-00006.safetensors",

|

| 389 |

+

"model.layers.47.mlp.up_proj.weight": "model-00005-of-00006.safetensors",

|

| 390 |

+

"model.layers.47.post_attention_layernorm.weight": "model-00005-of-00006.safetensors",

|

| 391 |

+

"model.layers.47.self_attn.k_proj.weight": "model-00005-of-00006.safetensors",

|

| 392 |

+

"model.layers.47.self_attn.o_proj.weight": "model-00005-of-00006.safetensors",

|

| 393 |

+

"model.layers.47.self_attn.q_proj.weight": "model-00005-of-00006.safetensors",

|

| 394 |

+

"model.layers.47.self_attn.v_proj.weight": "model-00005-of-00006.safetensors",

|

| 395 |

+

"model.layers.5.input_layernorm.weight": "model-00001-of-00006.safetensors",

|

| 396 |

+

"model.layers.5.mlp.down_proj.weight": "model-00001-of-00006.safetensors",

|

| 397 |

+

"model.layers.5.mlp.gate_proj.weight": "model-00001-of-00006.safetensors",

|

| 398 |

+

"model.layers.5.mlp.up_proj.weight": "model-00001-of-00006.safetensors",

|

| 399 |

+

"model.layers.5.post_attention_layernorm.weight": "model-00001-of-00006.safetensors",

|

| 400 |

+

"model.layers.5.self_attn.k_proj.weight": "model-00001-of-00006.safetensors",

|

| 401 |

+

"model.layers.5.self_attn.o_proj.weight": "model-00001-of-00006.safetensors",

|

| 402 |

+

"model.layers.5.self_attn.q_proj.weight": "model-00001-of-00006.safetensors",

|

| 403 |

+

"model.layers.5.self_attn.v_proj.weight": "model-00001-of-00006.safetensors",

|

| 404 |

+

"model.layers.6.input_layernorm.weight": "model-00001-of-00006.safetensors",

|

| 405 |

+

"model.layers.6.mlp.down_proj.weight": "model-00001-of-00006.safetensors",

|

| 406 |

+

"model.layers.6.mlp.gate_proj.weight": "model-00001-of-00006.safetensors",

|

| 407 |

+

"model.layers.6.mlp.up_proj.weight": "model-00001-of-00006.safetensors",

|

| 408 |

+

"model.layers.6.post_attention_layernorm.weight": "model-00001-of-00006.safetensors",

|

| 409 |

+

"model.layers.6.self_attn.k_proj.weight": "model-00001-of-00006.safetensors",

|

| 410 |

+

"model.layers.6.self_attn.o_proj.weight": "model-00001-of-00006.safetensors",

|

| 411 |

+

"model.layers.6.self_attn.q_proj.weight": "model-00001-of-00006.safetensors",

|

| 412 |

+

"model.layers.6.self_attn.v_proj.weight": "model-00001-of-00006.safetensors",

|

| 413 |

+

"model.layers.7.input_layernorm.weight": "model-00001-of-00006.safetensors",

|

| 414 |

+

"model.layers.7.mlp.down_proj.weight": "model-00001-of-00006.safetensors",

|

| 415 |

+

"model.layers.7.mlp.gate_proj.weight": "model-00001-of-00006.safetensors",

|

| 416 |

+

"model.layers.7.mlp.up_proj.weight": "model-00001-of-00006.safetensors",

|

| 417 |

+

"model.layers.7.post_attention_layernorm.weight": "model-00001-of-00006.safetensors",

|

| 418 |

+

"model.layers.7.self_attn.k_proj.weight": "model-00001-of-00006.safetensors",

|

| 419 |

+

"model.layers.7.self_attn.o_proj.weight": "model-00001-of-00006.safetensors",

|

| 420 |

+

"model.layers.7.self_attn.q_proj.weight": "model-00001-of-00006.safetensors",

|

| 421 |

+

"model.layers.7.self_attn.v_proj.weight": "model-00001-of-00006.safetensors",

|

| 422 |

+

"model.layers.8.input_layernorm.weight": "model-00001-of-00006.safetensors",

|

| 423 |

+

"model.layers.8.mlp.down_proj.weight": "model-00001-of-00006.safetensors",

|

| 424 |

+

"model.layers.8.mlp.gate_proj.weight": "model-00001-of-00006.safetensors",

|

| 425 |

+

"model.layers.8.mlp.up_proj.weight": "model-00001-of-00006.safetensors",

|

| 426 |

+

"model.layers.8.post_attention_layernorm.weight": "model-00001-of-00006.safetensors",

|

| 427 |

+

"model.layers.8.self_attn.k_proj.weight": "model-00001-of-00006.safetensors",

|

| 428 |

+

"model.layers.8.self_attn.o_proj.weight": "model-00001-of-00006.safetensors",

|

| 429 |

+

"model.layers.8.self_attn.q_proj.weight": "model-00001-of-00006.safetensors",

|

| 430 |

+

"model.layers.8.self_attn.v_proj.weight": "model-00001-of-00006.safetensors",

|

| 431 |

+

"model.layers.9.input_layernorm.weight": "model-00002-of-00006.safetensors",

|

| 432 |

+

"model.layers.9.mlp.down_proj.weight": "model-00002-of-00006.safetensors",

|

| 433 |

+

"model.layers.9.mlp.gate_proj.weight": "model-00001-of-00006.safetensors",

|

| 434 |

+

"model.layers.9.mlp.up_proj.weight": "model-00001-of-00006.safetensors",

|

| 435 |

+

"model.layers.9.post_attention_layernorm.weight": "model-00002-of-00006.safetensors",

|

| 436 |

+

"model.layers.9.self_attn.k_proj.weight": "model-00001-of-00006.safetensors",

|

| 437 |

+

"model.layers.9.self_attn.o_proj.weight": "model-00001-of-00006.safetensors",

|

| 438 |

+

"model.layers.9.self_attn.q_proj.weight": "model-00001-of-00006.safetensors",

|

| 439 |

+

"model.layers.9.self_attn.v_proj.weight": "model-00001-of-00006.safetensors",

|

| 440 |

+

"model.norm.weight": "model-00005-of-00006.safetensors"

|

| 441 |

+

}

|

| 442 |

+

}

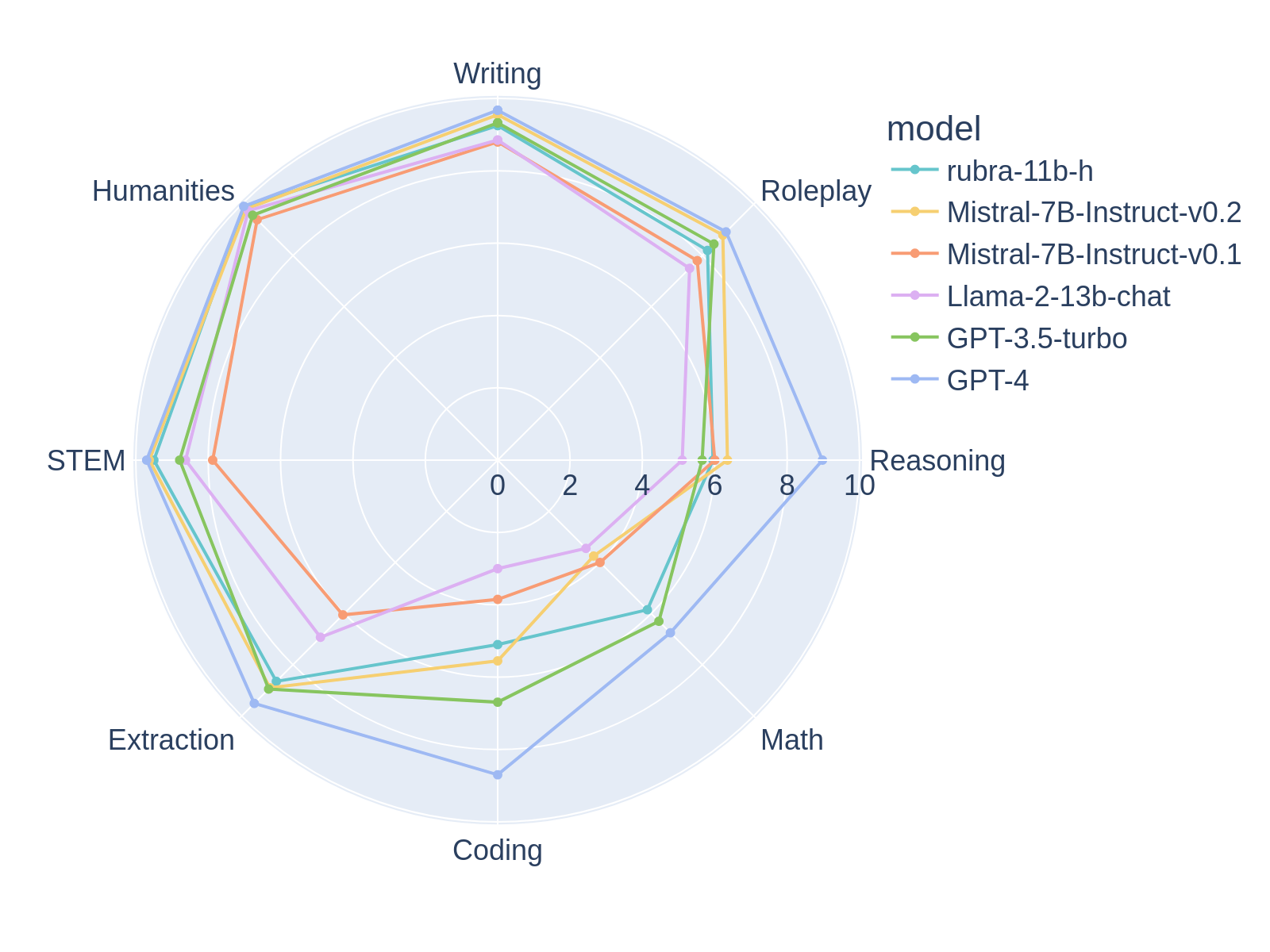

mtbench-comparison.png

ADDED

needle-in-a-haystack.txt

ADDED

@@ -0,0 +1,898 @@
