Create README.md

- .gitattributes +3 -0

- README.md +357 -0

- assets/radar_final.png +3 -0

.gitattributes

CHANGED

```diff
@@ -33,3 +33,6 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+*png filter=lfs diff=lfs merge=lfs -text
+*jpg filter=lfs diff=lfs merge=lfs -text
+*gif filter=lfs diff=lfs merge=lfs -text
```
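
The new rules send PNG, JPG and GIF files through Git LFS, which is why `assets/radar_final.png` in this commit is stored as an LFS object. The sketch below roughly approximates how such rules apply. It is not Git's own matcher. It assumes that a pattern without a slash is compared against the file's basename, as in `.gitignore`, and uses Python's `fnmatchcase` to imitate that. `tracked_by_lfs` is a hypothetical helper, and patterns containing `/` (such as `saved_model/**/*`) are deliberately left out. Note that `*png` has no dot, so it also matches a name like `logopng`.

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath

# Slash-free patterns from the .gitattributes hunk, including the three added rules.
LFS_PATTERNS = ["*.zip", "*.zst", "*tfevents*", "*png", "*jpg", "*gif"]


def tracked_by_lfs(path: str, patterns=LFS_PATTERNS) -> bool:
    """Approximate check: does any slash-free pattern match the path's basename?

    Hypothetical helper. Patterns containing '/' follow different rules
    in Git and are not handled here.
    """
    name = PurePosixPath(path).name
    return any(fnmatchcase(name, pattern) for pattern in patterns)


for p in ["assets/radar_final.png", "README.md", "demo/clip.gif", "logopng"]:
    print(p, tracked_by_lfs(p))
```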

README.md

ADDED


---
pipeline_tag: text-generation
datasets:
- openbmb/RLAIF-V-Dataset
---

<h1>A GPT-4V Level MLLM for Single Image, Multi Image and Video on Your Phone</h1>

[GitHub](https://github.com/OpenBMB/MiniCPM-V) | [Demo](http://120.92.209.146:8887/)

## MiniCPM-V 2.6

|

| 13 |

+

|

| 14 |

+

**MiniCPM-V 2.6** is the latest and most capable model in the MiniCPM-V series. The model is built on SigLip-400M and Qwen2-7B with a total of 8B parameters. It exhibits a significant performance improvement over MiniCPM-Llama3-V 2.5, and introduces new features for multi-image and video understanding. Notable features of MiniCPM-V 2.6 include:

|

| 15 |

+

|

| 16 |

+

- 🔥 **Leading Performance.**

|

| 17 |

+

MiniCPM-V 2.6 achieves an average score of 65.2 on the latest version of OpenCompass, a comprehensive evaluation over 8 popular benchmarks. **With only 8B parameters, it surpasses widely used proprietary models like GPT-4o mini, GPT-4V, Gemini 1.5 Pro, and Claude 3.5 Sonnet** for single image understanding.

|

| 18 |

+

|

| 19 |

+

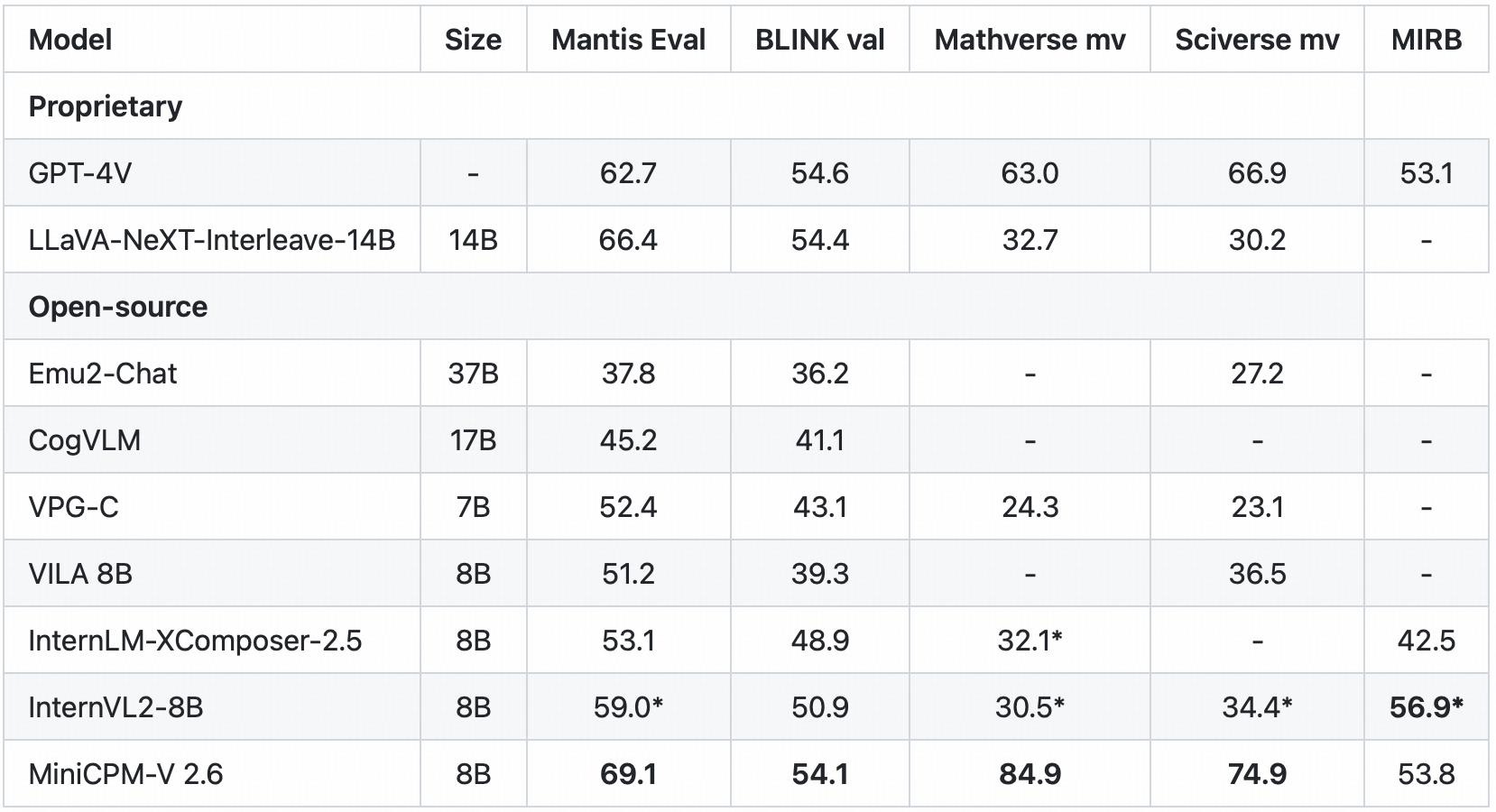

- 🖼️ **Multi Image Understanding and In-context Learning.** MiniCPM-V 2.6 can also perform **conversation and reasoning over multiple images**. It achieves **state-of-the-art performance** on popular multi-image benchmarks such as Mantis-Eval, BLINK, Mathverse mv and Sciverse mv, and also shows promising in-context learning capability.

|

| 20 |

+

|

| 21 |

+

- 🎬 **Video Understanding.** MiniCPM-V 2.6 can also **accept video inputs**, performing conversation and providing dense captions for spatial-temporal information. It outperforms **GPT-4V, Claude 3.5 Sonnet and LLaVA-NeXT-Video-34B** on Video-MME with/without subtitles.

|

| 22 |

+

|

| 23 |

+

- 💪 **Strong OCR Capability and Others.**

|

| 24 |

+

MiniCPM-V 2.6 can process images with any aspect ratio and up to 1.8 million pixels (e.g., 1344x1344). It achieves **state-of-the-art performance on OCRBench, surpassing proprietary models such as GPT-4o, GPT-4V, and Gemini 1.5 Pro**.

|

| 25 |

+

Based on the the latest [RLAIF-V](https://github.com/RLHF-V/RLAIF-V/) and [VisCPM](https://github.com/OpenBMB/VisCPM) techniques, it features **trustworthy behaviors**, with significantly lower hallucination rates than GPT-4o and GPT-4V on Object HalBench, and supports **multilingual capabilities** on English, Chiense, German, French, Italian, Korean, etc.

|

| 26 |

+

|

| 27 |

+

- 🚀 **Superior Efficiency.**

|

| 28 |

+

In addition to its friendly size, MiniCPM-V 2.6 also shows **state-of-the-art token density** (i.e., number of pixels encoded into each visual token). **It produces only 640 tokens when processing a 1.8M pixel image, which is 75% fewer than most models**. This directly improves the inference speed, first-token latency, memory usage, and power consumption. As a result, MiniCPM-V 2.6 can efficiently support **real-time video understanding** on end-side devices such as iPad.

|

| 29 |

+

|

| 30 |

+

- 💫 **Easy Usage.**

|

| 31 |

+

MiniCPM-V 2.6 can be easily used in various ways: (1) [llama.cpp](https://github.com/OpenBMB/llama.cpp/blob/minicpmv-main/examples/llava/README-minicpmv2.6.md) and [ollama](https://github.com/OpenBMB/ollama/tree/minicpm-v2.6) support for efficient CPU inference on local devices, (2) [int4](https://huggingface.co/openbmb/MiniCPM-V-2_6-int4) and [GGUF](https://huggingface.co/openbmb/MiniCPM-V-2_6-gguf) format quantized models in 16 sizes, (3) [vLLM](https://github.com/OpenBMB/MiniCPM-V/tree/main?tab=readme-ov-file#inference-with-vllm) support for high-throughput and memory-efficient inference, (4) fine-tuning on new domains and tasks, (5) quick local WebUI demo setup with [Gradio](https://github.com/OpenBMB/MiniCPM-V/tree/main?tab=readme-ov-file#chat-with-our-demo-on-gradio) and (6) online web [demo](http://120.92.209.146:8887).
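
As a quick check of the efficiency figures above, token density is simply pixels divided by visual tokens. At the 1344x1344 example resolution (about 1.8M pixels) and the stated 640 visual tokens, that works out to roughly 2,822 pixels per token. The sketch just does the arithmetic. The "implied baseline" is only what the "75% fewer" claim implies, not a measured token count for any particular model.

```python
def token_density(width: int, height: int, visual_tokens: int) -> float:
    """Pixels encoded per visual token (# pixels / # visual tokens)."""
    return (width * height) / visual_tokens


pixels = 1344 * 1344  # about 1.8M pixels
density = token_density(1344, 1344, 640)
print(pixels, round(density, 1))

# "75% fewer tokens" implies a comparison model would use 640 / (1 - 0.75) tokens.
implied_baseline_tokens = 640 / (1 - 0.75)
print(implied_baseline_tokens)
```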

### Evaluation <!-- omit in toc -->
<div align="center">
<img src=assets/radar_final.png width=66% />
</div>

Single image results on MME, MMVet, OCRBench, MMMU, MathVista, MMB, AI2D, TextVQA, DocVQA, HallusionBench, Object HalBench:
<div align="center">

</div>

<sup>*</sup> We evaluate this benchmark using chain-of-thought prompting.

<sup>+</sup> Token Density: number of pixels encoded into each visual token at maximum resolution, i.e., # pixels at maximum resolution / # visual tokens.

Note: For proprietary models, we calculate token density based on the image encoding charging strategy defined in the official API documentation, which provides an upper-bound estimation.

<details>
<summary>Click to view multi-image results on Mantis Eval, BLINK Val, Mathverse mv, Sciverse mv, MIRB.</summary>
<div align="center">

</div>
<sup>*</sup> We evaluate the officially released checkpoint by ourselves.
</details>

<details>
<summary>Click to view video results on Video-MME and Video-ChatGPT.</summary>
<div align="center">

</div>

</details>

<details>
<summary>Click to view few-shot results on TextVQA, VizWiz, VQAv2, OK-VQA.</summary>
<div align="center">

</div>
<sup>*</sup> denotes zero image shots and two additional text shots, following Flamingo.

<sup>+</sup> We evaluate the pretraining checkpoint without SFT.
</details>

### Examples <!-- omit in toc -->

<div style="display: flex; flex-direction: column; align-items: center;">
<img src="https://github.com/OpenBMB/MiniCPM-V/blob/main/assets/minicpmv2_6/multi_img-bike.png" alt="Bike" style="margin-bottom: 5px;">
<img src="https://github.com/OpenBMB/MiniCPM-V/blob/main/assets/minicpmv2_6/multi_img-menu.png" alt="Menu" style="margin-bottom: 5px;">
<img src="https://github.com/OpenBMB/MiniCPM-V/blob/main/assets/minicpmv2_6/multi_img-code.png" alt="Code" style="margin-bottom: 5px;">
<img src="https://github.com/OpenBMB/MiniCPM-V/blob/main/assets/minicpmv2_6/ICL-Mem.png" alt="Mem" style="margin-bottom: 5px;">
<img src="https://github.com/OpenBMB/MiniCPM-V/blob/main/assets/minicpmv2_6/multiling-medal.png" alt="medal" style="margin-bottom: 10px;">
</div>
<details>
<summary>Click to view more cases.</summary>
<div style="display: flex; flex-direction: column; align-items: center;">
<img src="https://github.com/OpenBMB/MiniCPM-V/blob/main/assets/minicpmv2_6/ICL-elec.png" alt="elec" style="margin-bottom: 5px;">
<img src="https://github.com/OpenBMB/MiniCPM-V/blob/main/assets/minicpmv2_6/multiling-olympic.png" alt="olympic" style="margin-bottom: 10px;">
</div>
</details>

We deploy MiniCPM-V 2.6 on end-side devices. The demo videos are raw, unedited screen recordings on an iPad Pro.

<table align="center">
<p align="center">
<img src="https://github.com/OpenBMB/MiniCPM-V/blob/main/assets/gif_cases/ai.gif" width=32%/>
<img src="https://github.com/OpenBMB/MiniCPM-V/blob/main/assets/gif_cases/beer.gif" width=32%/>
</p>
</table>

<table align="center">
<p align="center">
<img src="https://github.com/OpenBMB/MiniCPM-V/blob/main/assets/gif_cases/ticket.gif" width=32%/>
<img src="https://github.com/OpenBMB/MiniCPM-V/blob/main/assets/gif_cases/wfh.gif" width=32%/>
</p>
</table>

<table align="center">
<p align="center">
<video src="https://github.com/user-attachments/assets/21f4b818-ede1-4822-920e-91281725c830" width="360" /> </video>
<video src="https://github.com/user-attachments/assets/c835f757-206b-4d9c-8e36-70d67b453628" width="360" /> </video>
</p>
</table>

## Demo
Click here to try out the demo of [MiniCPM-V 2.6](http://120.92.209.146:8887/).

## Usage
Inference using Hugging Face transformers on NVIDIA GPUs. Requirements tested on Python 3.10:
```
Pillow==10.1.0
torch==2.1.2
torchvision==0.16.2
transformers==4.40.0
sentencepiece==0.1.99
decord
```

```python
# test.py
import torch
from PIL import Image
from transformers import AutoModel, AutoTokenizer

model = AutoModel.from_pretrained('openbmb/MiniCPM-V-2_6', trust_remote_code=True,
    attn_implementation='sdpa', torch_dtype=torch.bfloat16)  # sdpa or flash_attention_2, no eager
model = model.eval().cuda()
tokenizer = AutoTokenizer.from_pretrained('openbmb/MiniCPM-V-2_6', trust_remote_code=True)

image = Image.open('xx.jpg').convert('RGB')
question = 'What is in the image?'
msgs = [{'role': 'user', 'content': [image, question]}]

res = model.chat(
    image=None,
    msgs=msgs,
    tokenizer=tokenizer
)
print(res)

# To stream the output, set sampling=True and stream=True;
# model.chat then returns a generator of text chunks.
res = model.chat(
    image=None,
    msgs=msgs,
    tokenizer=tokenizer,
    sampling=True,
    stream=True
)

generated_text = ""
for new_text in res:
    generated_text += new_text
    print(new_text, flush=True, end='')
```
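
To ask a follow-up question, one plausible approach is to append the model's reply as an `assistant` message and the next question as a new `user` message, then call `model.chat` again with the extended `msgs`. This is an assumption, not documented here; it mirrors the message format the few-shot example below uses for assistant turns. `add_turn` is a hypothetical helper that only builds the list. Plain strings stand in for the PIL image and the model's answer so the sketch runs without the model.

```python
from typing import Any, Dict, List

Message = Dict[str, Any]


def add_turn(msgs: List[Message], answer: str, follow_up: str) -> List[Message]:
    """Return a new history with the assistant reply and the next user question appended.

    Hypothetical helper: assumes follow-up turns use the same
    {'role': ..., 'content': [...]} format as the few-shot example.
    """
    return msgs + [
        {'role': 'assistant', 'content': [answer]},
        {'role': 'user', 'content': [follow_up]},
    ]


# '<image>' is a placeholder for the PIL image; the answer is a made-up placeholder too.
msgs = [{'role': 'user', 'content': ['<image>', 'What is in the image?']}]
msgs = add_turn(msgs, 'A cat sitting on a sofa.', 'What color is the cat?')
for m in msgs:
    print(m['role'], m['content'])
# With the real model, the next call would be:
# model.chat(image=None, msgs=msgs, tokenizer=tokenizer)
```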

### Chat with multiple images
<details>
<summary> Click to view Python code running MiniCPM-V 2.6 with multiple images as input. </summary>

```python
import torch
from PIL import Image
from transformers import AutoModel, AutoTokenizer

model = AutoModel.from_pretrained('openbmb/MiniCPM-V-2_6', trust_remote_code=True,
    attn_implementation='sdpa', torch_dtype=torch.bfloat16)  # sdpa or flash_attention_2, no eager
model = model.eval().cuda()
tokenizer = AutoTokenizer.from_pretrained('openbmb/MiniCPM-V-2_6', trust_remote_code=True)

image1 = Image.open('image1.jpg').convert('RGB')
image2 = Image.open('image2.jpg').convert('RGB')
question = 'Compare image 1 and image 2, tell me about the differences between image 1 and image 2.'

msgs = [{'role': 'user', 'content': [image1, image2, question]}]

answer = model.chat(
    image=None,
    msgs=msgs,
    tokenizer=tokenizer
)
print(answer)
```
</details>

### In-context few-shot learning
<details>
<summary> Click to view Python code running MiniCPM-V 2.6 with few-shot input. </summary>

```python
import torch
from PIL import Image
from transformers import AutoModel, AutoTokenizer

model = AutoModel.from_pretrained('openbmb/MiniCPM-V-2_6', trust_remote_code=True,
    attn_implementation='sdpa', torch_dtype=torch.bfloat16)  # sdpa or flash_attention_2, no eager
model = model.eval().cuda()
tokenizer = AutoTokenizer.from_pretrained('openbmb/MiniCPM-V-2_6', trust_remote_code=True)

question = "production date"
image1 = Image.open('example1.jpg').convert('RGB')
answer1 = "2023.08.04"
image2 = Image.open('example2.jpg').convert('RGB')
answer2 = "2007.04.24"
image_test = Image.open('test.jpg').convert('RGB')

msgs = [
    {'role': 'user', 'content': [image1, question]}, {'role': 'assistant', 'content': [answer1]},
    {'role': 'user', 'content': [image2, question]}, {'role': 'assistant', 'content': [answer2]},
    {'role': 'user', 'content': [image_test, question]}
]

answer = model.chat(
    image=None,
    msgs=msgs,
    tokenizer=tokenizer
)
print(answer)
```
</details>
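
The few-shot `msgs` above follow a fixed pattern: one user/assistant pair per labelled example, then the test image with the same question. The sketch below generates that list from any number of (image, answer) pairs. `build_few_shot_msgs` is a hypothetical helper, not part of the MiniCPM-V API. File-name strings stand in for the PIL images so it runs without the model; with real images you would pass the `Image` objects instead.

```python
from typing import Any, Dict, List, Sequence, Tuple


def build_few_shot_msgs(
    examples: Sequence[Tuple[Any, str]], test_image: Any, question: str
) -> List[Dict[str, Any]]:
    """Interleave (image, answer) examples as user/assistant turns, then ask about test_image."""
    msgs: List[Dict[str, Any]] = []
    for image, answer in examples:
        msgs.append({'role': 'user', 'content': [image, question]})
        msgs.append({'role': 'assistant', 'content': [answer]})
    msgs.append({'role': 'user', 'content': [test_image, question]})
    return msgs


msgs = build_few_shot_msgs(
    [('example1.jpg', '2023.08.04'), ('example2.jpg', '2007.04.24')],
    'test.jpg',
    'production date',
)
print(len(msgs))  # 5
```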

### Chat with video
<details>
<summary> Click to view Python code running MiniCPM-V 2.6 with video input. </summary>

```python
import torch
from PIL import Image
from transformers import AutoModel, AutoTokenizer
from decord import VideoReader, cpu  # pip install decord

model = AutoModel.from_pretrained('openbmb/MiniCPM-V-2_6', trust_remote_code=True,
    attn_implementation='sdpa', torch_dtype=torch.bfloat16)  # sdpa or flash_attention_2, no eager
model = model.eval().cuda()
tokenizer = AutoTokenizer.from_pretrained('openbmb/MiniCPM-V-2_6', trust_remote_code=True)

MAX_NUM_FRAMES = 64

def encode_video(video_path):
    def uniform_sample(l, n):
        # Pick n items, one from the middle of each of n equal-width buckets
        gap = len(l) / n
        idxs = [int(i * gap + gap / 2) for i in range(n)]
        return [l[i] for i in idxs]

    vr = VideoReader(video_path, ctx=cpu(0))
    sample_fps = round(vr.get_avg_fps() / 1)  # frame stride for ~1 sampled frame per second
    frame_idx = [i for i in range(0, len(vr), sample_fps)]
    if len(frame_idx) > MAX_NUM_FRAMES:
        frame_idx = uniform_sample(frame_idx, MAX_NUM_FRAMES)
    frames = vr.get_batch(frame_idx).asnumpy()
    frames = [Image.fromarray(v.astype('uint8')) for v in frames]
    print('num frames:', len(frames))
    return frames

video_path = "video_test.mp4"
frames = encode_video(video_path)
question = "Describe the video"
msgs = [
    {'role': 'user', 'content': frames + [question]},
]

# Set decode params for video
params = {}
params["max_inp_length"] = 4352  # 4096+256
params["use_image_id"] = False
params["max_slice_nums"] = 1 if len(frames) > 16 else 2

answer = model.chat(
    image=None,
    msgs=msgs,
    tokenizer=tokenizer,
    **params
)
print(answer)
```
</details>
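
The frame selection in `encode_video` can be checked without decord or a GPU. The sketch repeats the same index arithmetic on a frame count and an average FPS. Both inputs are made-up example values, and `select_frame_indices` and `max_slice_nums_for` are hypothetical names. The logic steps through the clip at roughly one frame per second. If that yields more than `MAX_NUM_FRAMES` indices, it keeps 64 evenly spaced ones. It also mirrors the example's `max_slice_nums` rule.

```python
from typing import List

MAX_NUM_FRAMES = 64


def uniform_sample(l: List[int], n: int) -> List[int]:
    """Pick n items from l, one from the middle of each of n equal-width buckets."""
    gap = len(l) / n
    idxs = [int(i * gap + gap / 2) for i in range(n)]
    return [l[i] for i in idxs]


def select_frame_indices(num_frames: int, avg_fps: float) -> List[int]:
    """Same selection as encode_video: ~1 frame per second, capped at MAX_NUM_FRAMES."""
    step = round(avg_fps / 1)  # frames between samples for ~1 sample per second
    frame_idx = list(range(0, num_frames, step))
    if len(frame_idx) > MAX_NUM_FRAMES:
        frame_idx = uniform_sample(frame_idx, MAX_NUM_FRAMES)
    return frame_idx


def max_slice_nums_for(n_frames: int) -> int:
    """Same rule as the example's decode params: fewer slices for longer clips."""
    return 1 if n_frames > 16 else 2


short_clip = select_frame_indices(num_frames=300, avg_fps=30.0)   # 10 s at 30 FPS
long_clip = select_frame_indices(num_frames=3000, avg_fps=30.0)   # 100 s at 30 FPS
print(len(short_clip), max_slice_nums_for(len(short_clip)))  # 10 2
print(len(long_clip), long_clip[0], long_clip[-1], max_slice_nums_for(len(long_clip)))  # 64 0 2970 1
```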

Please refer to [GitHub](https://github.com/OpenBMB/MiniCPM-V) for more details on usage.

## Inference with llama.cpp<a id="llamacpp"></a>
MiniCPM-V 2.6 can run with llama.cpp. See our fork of [llama.cpp](https://github.com/OpenBMB/llama.cpp/tree/minicpm-v2.5/examples/minicpmv) for more details.

## Int4 quantized version
Download the int4 quantized version for lower GPU memory usage (7 GB): [MiniCPM-V-2_6-int4](https://huggingface.co/openbmb/MiniCPM-V-2_6-int4).
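
For a rough sense of why int4 saves memory, weight storage scales with bits per parameter. Taking the 8B total parameters stated above, bf16 weights need about 16 GB and 4-bit weights about 4 GB. This counts weights only. The ~7 GB figure above presumably also covers activations, the KV cache and any modules kept at higher precision; that breakdown is an assumption, not something this card documents. `weight_memory_gb` is a hypothetical helper using decimal gigabytes.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Weight-only memory in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


PARAMS = 8e9  # total parameter count stated for MiniCPM-V 2.6

for label, bits in [("bf16", 16), ("int4", 4)]:
    print(f"{label}: {weight_memory_gb(PARAMS, bits):.1f} GB")
```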

## License
#### Model License
* The code in this repo is released under the [Apache-2.0](https://github.com/OpenBMB/MiniCPM/blob/main/LICENSE) License.
* The usage of MiniCPM-V series model weights must strictly follow [MiniCPM Model License.md](https://github.com/OpenBMB/MiniCPM/blob/main/MiniCPM%20Model%20License.md).
* The models and weights of MiniCPM are completely free for academic research. After filling out a ["questionnaire"](https://modelbest.feishu.cn/share/base/form/shrcnpV5ZT9EJ6xYjh3Kx0J6v8g) for registration, MiniCPM-V 2.6 weights are also available for free commercial use.

#### Statement
* As an LMM, MiniCPM-V 2.6 generates content by learning from a large amount of multimodal corpora, but it cannot comprehend, express personal opinions, or make value judgements. Anything generated by MiniCPM-V 2.6 does not represent the views and positions of the model developers.
* We will not be liable for any problems arising from the use of the MiniCPM-V models, including but not limited to data security issues, risk of public opinion, or any risks and problems arising from the misdirection, misuse, or dissemination of the model.

## Other Multimodal Projects from Our Team

[VisCPM](https://github.com/OpenBMB/VisCPM/tree/main) | [RLHF-V](https://github.com/RLHF-V/RLHF-V) | [LLaVA-UHD](https://github.com/thunlp/LLaVA-UHD) | [RLAIF-V](https://github.com/RLHF-V/RLAIF-V)

## Citation

If you find our work helpful, please consider citing the following papers:

```bib
@article{yu2023rlhf,
  title={{RLHF-V}: Towards Trustworthy {MLLMs} via Behavior Alignment from Fine-grained Correctional Human Feedback},
  author={Yu, Tianyu and Yao, Yuan and Zhang, Haoye and He, Taiwen and Han, Yifeng and Cui, Ganqu and Hu, Jinyi and Liu, Zhiyuan and Zheng, Hai-Tao and Sun, Maosong and others},
  journal={arXiv preprint arXiv:2312.00849},
  year={2023}
}

@article{viscpm,
  title={Large Multilingual Models Pivot Zero-Shot Multimodal Learning across Languages},
  author={Jinyi Hu and Yuan Yao and Chongyi Wang and Shan Wang and Yinxu Pan and Qianyu Chen and Tianyu Yu and Hanghao Wu and Yue Zhao and Haoye Zhang and Xu Han and Yankai Lin and Jiao Xue and Dahai Li and Zhiyuan Liu and Maosong Sun},
  journal={arXiv preprint arXiv:2308.12038},
  year={2023}
}

@article{xu2024llava-uhd,
  title={{LLaVA-UHD}: an LMM Perceiving Any Aspect Ratio and High-Resolution Images},
  author={Xu, Ruyi and Yao, Yuan and Guo, Zonghao and Cui, Junbo and Ni, Zanlin and Ge, Chunjiang and Chua, Tat-Seng and Liu, Zhiyuan and Huang, Gao},
  journal={arXiv preprint arXiv:2403.11703},
  year={2024}
}

@article{yu2024rlaifv,
  title={{RLAIF-V}: Aligning {MLLMs} through Open-Source AI Feedback for Super {GPT-4V} Trustworthiness},
  author={Yu, Tianyu and Zhang, Haoye and Yao, Yuan and Dang, Yunkai and Chen, Da and Lu, Xiaoman and Cui, Ganqu and He, Taiwen and Liu, Zhiyuan and Chua, Tat-Seng and Sun, Maosong},
  journal={arXiv preprint arXiv:2405.17220},
  year={2024}
}
```

assets/radar_final.png

ADDED
