---
license: apache-2.0
datasets:
- FreedomIntelligence/ApolloMoEDataset
language:
- ar
- en
- zh
- ko
- ja
- mn
- th
- vi
- lo
- mg
- de
- pt
- es
- fr
- ru
- it
- hr
- gl
- cs
- co
- la
- uk
- bs
- bg
- eo
- sq
- da
- sa
- gn
- sr
- sk
- gd
- lb
- hi
- ku
- mt
- he
- ln
- bm
- sw
- ig
- rw
- ha
metrics:
- accuracy
base_model:
- FreedomIntelligence/Apollo2-7B
pipeline_tag: question-answering
tags:
- biology
- medical
---

# Apollo2-7B-GGUF

Original model: [Apollo2-7B](https://huggingface.co/FreedomIntelligence/Apollo2-7B)

Made by: [FreedomIntelligence](https://huggingface.co/FreedomIntelligence)

## Quantization notes

Made with llama.cpp-b3938, using an imatrix file based on the Exllamav2 calibration dataset.
This model is meant to run with llama.cpp-compatible apps such as Text-Generation-WebUI, KoboldCpp, Jan, LM Studio, and many others.
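
The card does not include a run command. As a minimal sketch, assuming a llama.cpp build whose command-line binary is named `llama-cli` (the name used by recent releases; older ones called it `main`) and a GGUF file from this repository already on disk, one question can be sent using the 7B prompt format from the original card. `run_apollo2_7b`, the `LLAMA_CLI` override, and the default of 256 generated tokens are illustrative choices, not part of the card.

```shell
#!/usr/bin/env bash
# Sketch: send one question to a quantized Apollo2-7B GGUF via llama.cpp.
# Assumptions: the CLI binary is `llama-cli` (override with LLAMA_CLI) and
# the model path you pass in is a GGUF file from this repository.
run_apollo2_7b() {
  local model="$1" query="$2" n_predict="${3:-256}"
  local prompt
  # Apollo2-7B format from the original card: "User:{query}\nAssistant:".
  # printf -v stores the result with a real newline between the two turns.
  printf -v prompt 'User:%s\nAssistant:' "$query"
  # -m: model file, -p: prompt text, -n: maximum number of tokens to generate.
  "${LLAMA_CLI:-llama-cli}" -m "$model" -p "$prompt" -n "$n_predict"
}
```

For example, `run_apollo2_7b ./Apollo2-7B-Q4_K_M.gguf "What are common symptoms of iron-deficiency anemia?"`, where the file name is a placeholder for whichever quantization you downloaded. Other options (sampling, conversation mode) vary between llama.cpp releases, so check `llama-cli --help` for the build you use.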

# Original model card

# Democratizing Medical LLMs For Much More Languages

The models cover 12 major languages (English, Chinese, French, Hindi, Spanish, Arabic, Russian, Japanese, Korean, German, Italian, and Portuguese) and 38 minor languages so far.

<p align="center">
📃 <a href="https://arxiv.org/abs/2410.10626" target="_blank">Paper</a> • 🌐 <a href="" target="_blank">Demo</a> • 🤗 <a href="https://huggingface.co/datasets/FreedomIntelligence/ApolloMoEDataset" target="_blank">ApolloMoEDataset</a> • 🤗 <a href="https://huggingface.co/datasets/FreedomIntelligence/ApolloMoEBench" target="_blank">ApolloMoEBench</a> • 🤗 <a href="https://huggingface.co/collections/FreedomIntelligence/apollomoe-and-apollo2-670ddebe3bb1ba1aebabbf2c" target="_blank">Models</a> • 🌐 <a href="https://github.com/FreedomIntelligence/Apollo" target="_blank">Apollo</a> • 🌐 <a href="https://github.com/FreedomIntelligence/ApolloMoE" target="_blank">ApolloMoE</a>
</p>

|

| 76 |

+

|

| 77 |

+

|

| 78 |

+

|

| 79 |

+

|

| 80 |

+

|

| 81 |

+

|

| 82 |

+

## 🌈 Update

|

| 83 |

+

|

| 84 |

+

* **[2024.10.15]** ApolloMoE repo is published!🎉

|

| 85 |

+

|

| 86 |

+

|

| 87 |

+

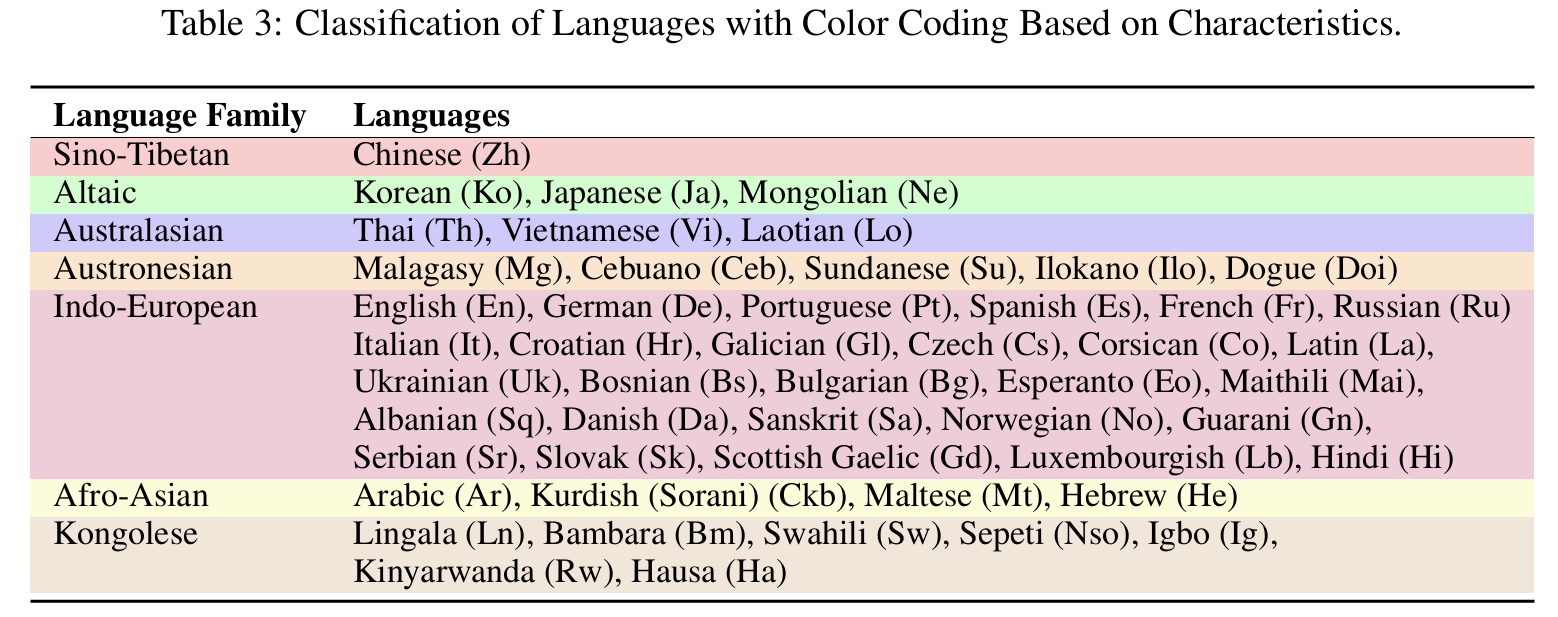

## Languages Coverage

|

| 88 |

+

12 Major Languages and 38 Minor Languages

|

| 89 |

+

|

| 90 |

+

<details>

|

| 91 |

+

<summary>Click to view the Languages Coverage</summary>

|

| 92 |

+

|

| 93 |

+

|

| 94 |

+

|

| 95 |

+

</details>

|

| 96 |

+

|

| 97 |

+

|

| 98 |

+

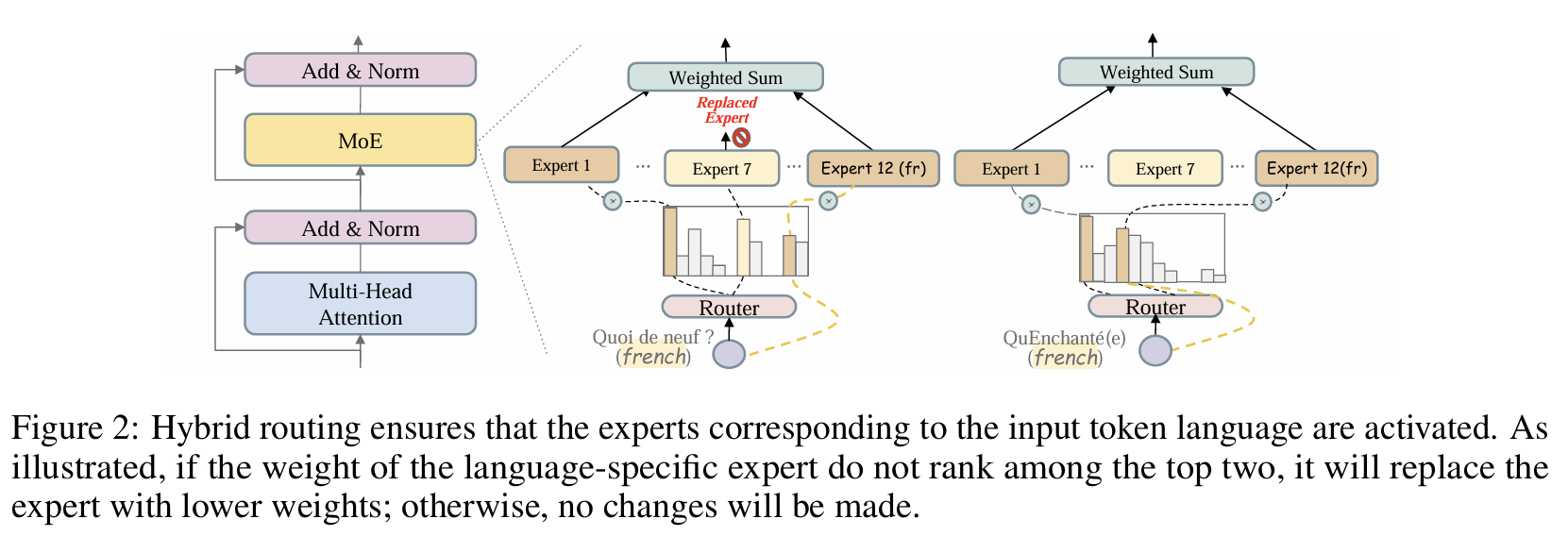

## Architecture

|

| 99 |

+

|

| 100 |

+

<details>

|

| 101 |

+

<summary>Click to view the MoE routing image</summary>

|

| 102 |

+

|

| 103 |

+

|

| 104 |

+

|

| 105 |

+

</details>

|

| 106 |

+

|

| 107 |

+

## Results

|

| 108 |

+

|

| 109 |

+

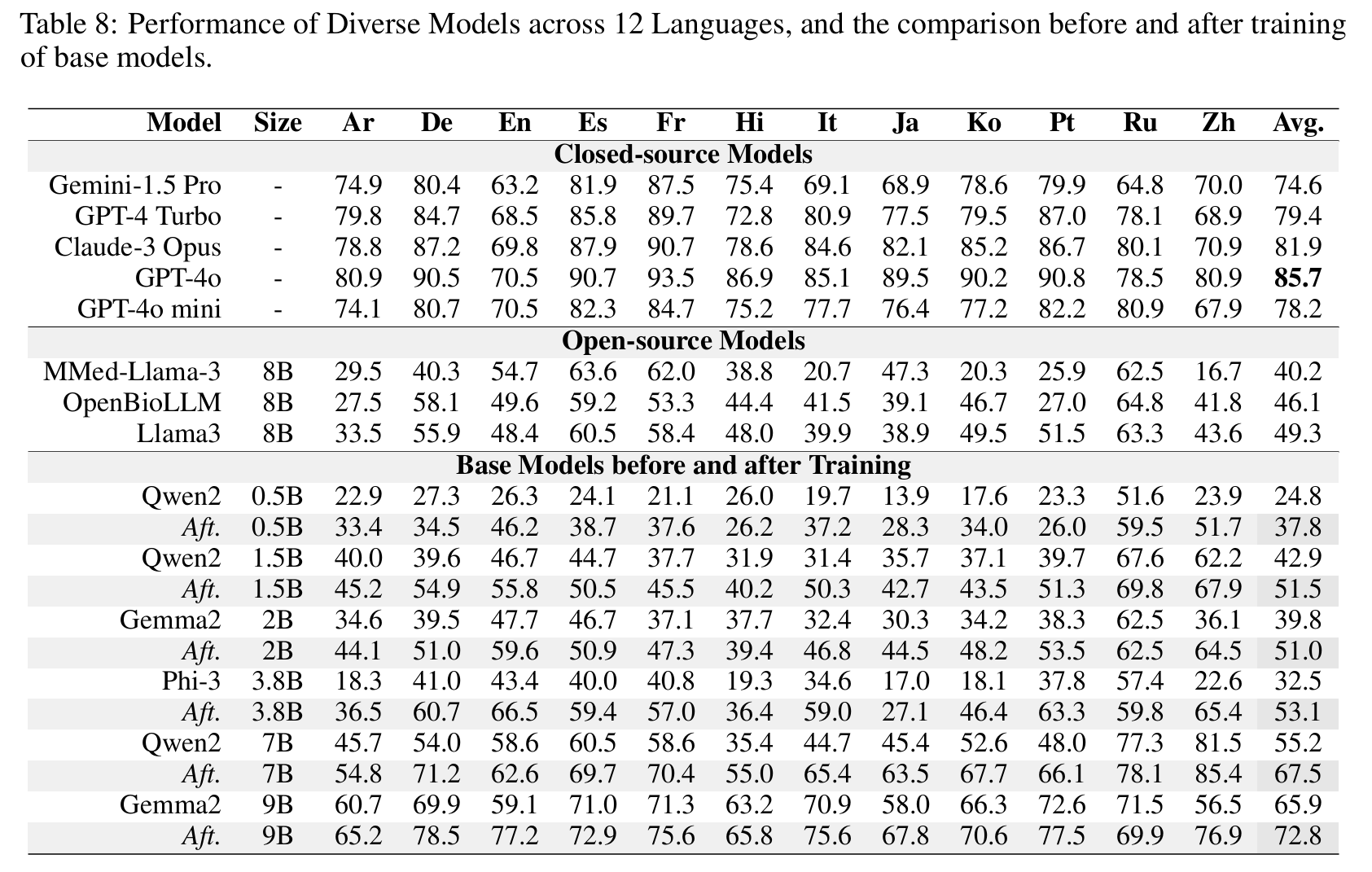

#### Dense

|

| 110 |

+

🤗 <a href="https://huggingface.co/FreedomIntelligence/Apollo2-0.5B" target="_blank">Apollo2-0.5B</a> • 🤗 <a href="https://huggingface.co/FreedomIntelligence/Apollo2-1.5B" target="_blank">Apollo2-1.5B</a> • 🤗 <a href="https://huggingface.co/FreedomIntelligence/Apollo2-2B" target="_blank">Apollo2-2B</a>

|

| 111 |

+

|

| 112 |

+

🤗 <a href="https://huggingface.co/FreedomIntelligence/Apollo2-3.8B" target="_blank">Apollo2-3.8B</a> • 🤗 <a href="https://huggingface.co/FreedomIntelligence/Apollo2-7B" target="_blank">Apollo2-7B</a> • 🤗 <a href="https://huggingface.co/FreedomIntelligence/Apollo2-9B" target="_blank">Apollo2-9B</a>

|

| 113 |

+

|

| 114 |

+

<details>

|

| 115 |

+

<summary>Click to view the Dense Models Results</summary>

|

| 116 |

+

|

| 117 |

+

|

| 118 |

+

|

| 119 |

+

</details>

|

| 120 |

+

|

| 121 |

+

|

| 122 |

+

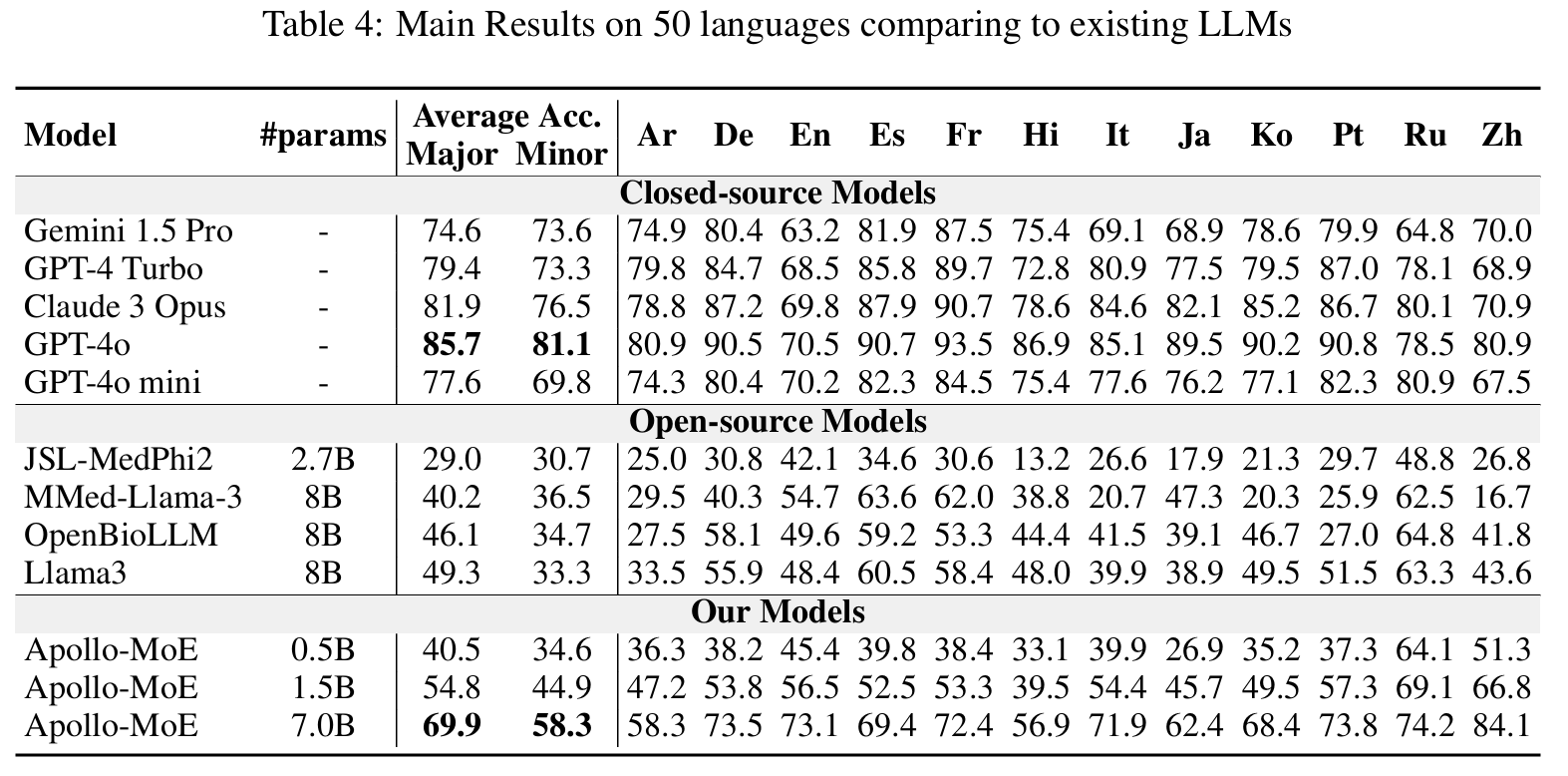

#### Post-MoE

|

| 123 |

+

🤗 <a href="https://huggingface.co/FreedomIntelligence/Apollo-MoE-0.5B" target="_blank">Apollo-MoE-0.5B</a> • 🤗 <a href="https://huggingface.co/FreedomIntelligence/Apollo-MoE-1.5B" target="_blank">Apollo-MoE-1.5B</a> • 🤗 <a href="https://huggingface.co/FreedomIntelligence/Apollo-MoE-7B" target="_blank">Apollo-MoE-7B</a>

|

| 124 |

+

|

| 125 |

+

<details>

|

| 126 |

+

<summary>Click to view the Post-MoE Models Results</summary>

|

| 127 |

+

|

| 128 |

+

|

| 129 |

+

|

| 130 |

+

</details>

## Usage Format

##### Apollo2

- 0.5B, 1.5B, 7B: `User:{query}\nAssistant:{response}<|endoftext|>`
- 2B, 9B: `User:{query}\nAssistant:{response}<eos>`
- 3.8B: `<|user|>\n{query}<|end|><|assistant|>\n{response}<|end|>`

##### Apollo-MoE

- 0.5B, 1.5B, 7B: `User:{query}\nAssistant:{response}<|endoftext|>`
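
The templates above can be captured in a small helper. This is a sketch, not code from the Apollo repositories: `apollo_prompt` is a hypothetical name, it builds the prompt only up to the assistant marker (the model is expected to end its reply with the stop token listed for its size), and it assumes the 3.8B assistant tag is spelled `<|assistant|>`.

```shell
#!/usr/bin/env bash
# Build a prompt for an Apollo2 / Apollo-MoE model from its parameter size.
# Only the part up to the assistant marker is produced; the model is expected
# to end its own reply with the stop token listed in the card.
apollo_prompt() {
  local size="$1" query="$2"
  case "$size" in
    0.5B | 1.5B | 7B | 2B | 9B)
      # Plain-text turns. Stop token: <|endoftext|> (0.5B/1.5B/7B) or <eos> (2B/9B).
      printf 'User:%s\nAssistant:' "$query"
      ;;
    3.8B)
      # Chat-tag turns for Apollo2-3.8B. Stop token: <|end|>.
      printf '<|user|>\n%s<|end|><|assistant|>\n' "$query"
      ;;
    *)
      echo "apollo_prompt: unknown model size '$size'" >&2
      return 1
      ;;
  esac
}
```

For instance, `apollo_prompt 3.8B "Which vitamin deficiency causes scurvy?"` prints the tagged 3.8B prompt. The query is passed as a `printf` argument rather than spliced into the format string, so `%` or `\` characters in user input are emitted literally.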

## Dataset & Evaluation

- Dataset

  🤗 <a href="https://huggingface.co/datasets/FreedomIntelligence/ApolloMoEDataset" target="_blank">ApolloMoEDataset</a>

<details><summary>Click to expand</summary>

- [Data category](https://huggingface.co/datasets/FreedomIntelligence/ApolloCorpus/tree/main/train)

</details>

- Evaluation

  🤗 <a href="https://huggingface.co/datasets/FreedomIntelligence/ApolloMoEBench" target="_blank">ApolloMoEBench</a>

<details><summary>Click to expand</summary>

- EN:
  - [MedQA-USMLE](https://huggingface.co/datasets/GBaker/MedQA-USMLE-4-options)
  - [MedMCQA](https://huggingface.co/datasets/medmcqa/viewer/default/test)
  - [PubMedQA](https://huggingface.co/datasets/pubmed_qa): Not used in the paper because the results fluctuated too much.
  - [MMLU-Medical](https://huggingface.co/datasets/cais/mmlu)
    - Clinical knowledge, Medical genetics, Anatomy, Professional medicine, College biology, College medicine
- ZH:
  - [MedQA-MCMLE](https://huggingface.co/datasets/bigbio/med_qa/viewer/med_qa_zh_4options_bigbio_qa/test)
  - [CMB-single](https://huggingface.co/datasets/FreedomIntelligence/CMB): Not used in the paper.
    - 2,000 randomly sampled single-answer multiple-choice questions
  - [CMMLU-Medical](https://huggingface.co/datasets/haonan-li/cmmlu)
    - Anatomy, Clinical_knowledge, College_medicine, Genetics, Nutrition, Traditional_chinese_medicine, Virology
  - [CExam](https://github.com/williamliujl/CMExam): Not used in the paper.
    - 2,000 randomly sampled multiple-choice questions
- ES: [Head_qa](https://huggingface.co/datasets/head_qa)
- FR:
  - [Frenchmedmcqa](https://github.com/qanastek/FrenchMedMCQA)
  - [MMLU_FR]
    - Clinical knowledge, Medical genetics, Anatomy, Professional medicine, College biology, College medicine
- HI: [MMLU_HI](https://huggingface.co/datasets/FreedomIntelligence/MMLU_Hindi)
  - Clinical knowledge, Medical genetics, Anatomy, Professional medicine, College biology, College medicine
- AR: [MMLU_AR](https://huggingface.co/datasets/FreedomIntelligence/MMLU_Arabic)
  - Clinical knowledge, Medical genetics, Anatomy, Professional medicine, College biology, College medicine
- JA: [IgakuQA](https://github.com/jungokasai/IgakuQA)
- KO: [KorMedMCQA](https://huggingface.co/datasets/sean0042/KorMedMCQA)
- IT:
  - [MedExpQA](https://huggingface.co/datasets/HiTZ/MedExpQA)
  - [MMLU_IT]
    - Clinical knowledge, Medical genetics, Anatomy, Professional medicine, College biology, College medicine
- DE: [BioInstructQA](https://huggingface.co/datasets/BioMistral/BioInstructQA): German part
- PT: [BioInstructQA](https://huggingface.co/datasets/BioMistral/BioInstructQA): Portuguese part
- RU: [RuMedBench](https://github.com/sb-ai-lab/MedBench)

</details>
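
The evaluation notes mention randomly sampled 2,000-question subsets for CMB-single and CExam without describing the procedure. One straightforward way to build such a subset is sampling without replacement, sketched here under two assumptions: the split is stored as JSON Lines (one question per line) and GNU coreutils `shuf` is available. `sample_questions` and the file names are hypothetical; this is not the authors' sampling code.

```shell
#!/usr/bin/env bash
# Hypothetical helper: draw n distinct questions at random from a JSONL file
# (one question per line). Assumes GNU coreutils `shuf`; this is not the
# sampling code used for the benchmarks above.
sample_questions() {
  local input="$1" output="$2" n="${3:-2000}"
  local total
  total=$(wc -l <"$input")
  # Refuse to return a "sample" that is really the whole (smaller) file.
  if [ "$total" -lt "$n" ]; then
    echo "sample_questions: only $total lines in $input, cannot draw $n" >&2
    return 1
  fi
  # shuf -n selects n lines uniformly at random, without replacement.
  shuf -n "$n" "$input" >"$output"
}
```

Usage: `sample_questions cmb_single_test.jsonl cmb_single_2000.jsonl 2000`. Each run draws a different subset; to fix one, `shuf` also accepts `--random-source=FILE`.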

## Results reproduction

<details><summary>Click to expand</summary>

We use Apollo2-7B or Apollo-MoE-0.5B as examples.

1. Download the datasets for the project:

```
bash 0.download_data.sh
```

2. Prepare test and dev data for a specific model:

- Create test data with the model's special tokens.

```
bash "1.data_process_test&dev.sh"
```

3. Prepare training data for a specific model (tokenized in advance):

- You can adjust the training data order and the number of training epochs in this step.

```
bash 2.data_process_train.sh
```

4. Train the model:

- For multi-node training, refer to `./src/sft/training_config/zero_multi.yaml`.

```
bash 3.single_node_train.sh
```

5. Evaluate your model and generate benchmark scores:

```
bash 4.eval.sh
```

</details>

## Citation

Please use the following citation if you intend to use our dataset for training or evaluation:

```
@misc{zheng2024efficientlydemocratizingmedicalllms,
  title={Efficiently Democratizing Medical LLMs for 50 Languages via a Mixture of Language Family Experts},
  author={Guorui Zheng and Xidong Wang and Juhao Liang and Nuo Chen and Yuping Zheng and Benyou Wang},
  year={2024},
  eprint={2410.10626},
  archivePrefix={arXiv},
  primaryClass={cs.CL},
  url={https://arxiv.org/abs/2410.10626},
}
```