Create README.md


README.md

ADDED


@@ -0,0 +1,118 @@


---
language: zh
tags:
- SEGA
- data augmentation
- keywords-to-text generation
- sketch-to-text generation
license: apache-2.0
datasets:
- beyond/chinese_clean_passages_80m

widget:
- text: "今天[MASK]篮球[MASK]上海财经大学[MASK]"
  example_title: "Sketch 1"
- text: "自然语言处理[MASK]谷歌[MASK]通用人工智能[MASK]"
  example_title: "Sketch 2"

inference:
  parameters:
    max_length: 100
    num_beams: 3
    do_sample: True
---

|

| 25 |

+

# `SEGA-base-chinese` model

|

| 26 |

+

|

| 27 |

+

**SEGA: SkEtch-based Generative Augmentation** \

|

| 28 |

+

**基于草稿的生成式增强模型**

|

| 29 |

+

|

| 30 |

+

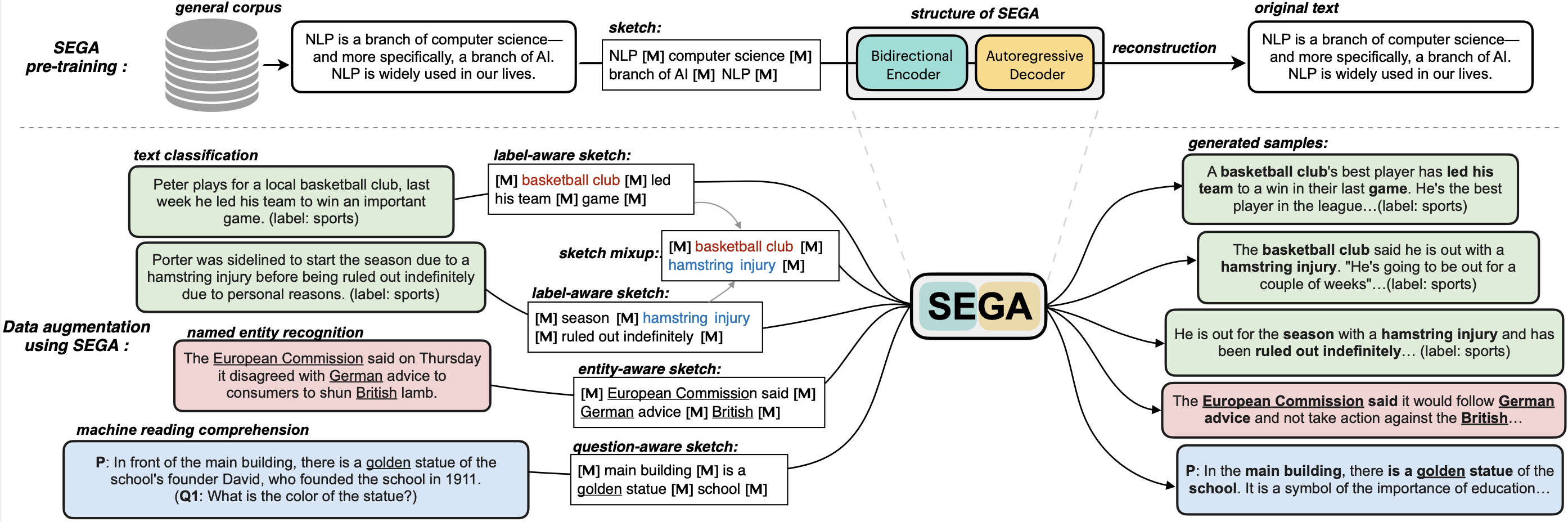

**SEGA** is a **general text augmentation model** that can be used for data augmentation for **various NLP tasks** (including sentiment analysis, topic classification, NER, and QA). SEGA uses an encoder-decoder structure (based on the BART architecture) and is pre-trained on a large-scale general corpus.

|

| 31 |

+

|

| 32 |

+

|

| 33 |

+

|

| 34 |

+

|

| 35 |

+

- Paper: [coming soon](to_be_added)

|

| 36 |

+

- GitHub: [SEGA](https://github.com/beyondguo/SEGA).

|

| 37 |

+

|

| 38 |

+

**SEGA中文版** 可以根据你给出的一个**草稿**进行填词造句扩写,草稿可以是:

|

| 39 |

+

- 关键词组合,例如“今天[MASK]篮球[MASK]学校[MASK]”

|

| 40 |

+

- 短语组合,例如“自然语言处理[MASK]谷歌[MASK]通用人工智能[MASK]”

|

| 41 |

+

- 短句子组合,例如“我昨天做了一个梦[MASK]又遇见了她[MASK]曾经那段时光让人怀恋[MASK]”

|

| 42 |

+

- 以上的混合
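
Sketches of this shape can also be assembled programmatically. The helper below is a minimal illustration and not part of the SEGA repository: `build_sketch` and its `leading_mask` / `trailing_mask` flags are hypothetical names, and the placement of `[MASK]` between and around fragments is an assumption based on the examples in this README.

```python
from typing import Sequence

MASK = "[MASK]"  # mask token used in the sketches shown in this README


def build_sketch(fragments: Sequence[str],
                 leading_mask: bool = False,
                 trailing_mask: bool = True) -> str:
    """Join keywords, phrases, or short sentences into a sketch string.

    Hypothetical helper for illustration; not part of the SEGA repository.
    """
    # Drop empty or whitespace-only fragments so no two masks end up adjacent.
    parts = [f.strip() for f in fragments if f and f.strip()]
    if not parts:
        raise ValueError("a sketch needs at least one non-empty fragment")
    sketch = MASK.join(parts)
    if leading_mask:
        sketch = MASK + sketch
    if trailing_mask:
        sketch = sketch + MASK
    return sketch


if __name__ == "__main__":
    print(build_sketch(["今天", "篮球", "学校"]))
    # 今天[MASK]篮球[MASK]学校[MASK]
    print(build_sketch(["疫情", "公园", "散步"], leading_mask=True))
    # [MASK]疫情[MASK]公园[MASK]散步[MASK]
```

Keywords, phrases, and short sentences can be mixed freely in `fragments`, which matches the "mix of the above" case.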

## How to use

```python
# sega-chinese
import torch
from transformers import BertTokenizer, BartForConditionalGeneration, Text2TextGenerationPipeline

checkpoint = 'beyond/sega-base-chinese'
tokenizer = BertTokenizer.from_pretrained(checkpoint)
sega_model = BartForConditionalGeneration.from_pretrained(checkpoint)
# Use the first GPU if one is available, otherwise fall back to CPU (device=-1).
device = 0 if torch.cuda.is_available() else -1
sega_generator = Text2TextGenerationPipeline(sega_model, tokenizer, device=device)

sketches = [
    "今天[MASK]篮球[MASK]学校[MASK]",
    "自然语言处理[MASK]谷歌[MASK]通用人工智能[MASK]",
    "我昨天做了一个梦[MASK]又遇见了她[MASK]曾经那段时光让人怀恋[MASK]",
    "[MASK]疫情[MASK]公园[MASK]散步[MASK]",
    "[MASK]酸菜鱼火锅[MASK]很美味,味道绝了[MASK]周末真开心[MASK]",
]
for sketch in sketches:
    print('input sketch:\n>>> ', sketch)
    output = sega_generator(sketch, max_length=100, do_sample=True, num_beams=3)[0]['generated_text']
    # The decoded text has spaces between tokens; remove them for Chinese output.
    print('SEGA-chinese output:\n>>> ', output.replace(' ', ''), '\n')
```
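
For data augmentation it is often useful to draw several distinct expansions from one sketch. The following is a minimal sketch of that loop, not the authors' augmentation pipeline: `augment` and `n_variants` are hypothetical names, and `generator` is assumed to behave like the `sega_generator` pipeline above (called with a string plus generation keyword arguments, returning a list of dicts with a `generated_text` key). The default generation settings mirror the ones used in this README.

```python
from typing import Callable, Dict, List


def augment(sketch: str,
            generator: Callable[..., List[Dict[str, str]]],
            n_variants: int = 3,
            **gen_kwargs) -> List[str]:
    """Sample up to `n_variants` distinct expansions of one sketch.

    Hypothetical helper for illustration; not part of the SEGA repository.
    """
    # Defaults copied from the usage snippet; callers may override them.
    params = {"max_length": 100, "do_sample": True, "num_beams": 3}
    params.update(gen_kwargs)
    variants: List[str] = []
    # Allow a few extra attempts because sampling can repeat an output.
    for _ in range(n_variants * 3):
        text = generator(sketch, **params)[0]["generated_text"]
        text = text.replace(" ", "")  # drop spaces between decoded tokens
        if text and text not in variants:
            variants.append(text)
        if len(variants) == n_variants:
            break
    return variants
```

With the pipeline from the snippet above, a call such as `augment("今天[MASK]篮球[MASK]学校[MASK]", sega_generator, n_variants=5)` would return up to five different sentences. Fewer may come back if sampling keeps producing duplicates.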

## Model variations

| Model | #params | Language |
|-------|---------|----------|
| [`sega-large`](https://huggingface.co/beyond/sega-large) | xM | English |
| [`sega-base`(coming soon)]() | xM | English |
| [`sega-large-chinese`(coming soon)]() | xM | Chinese |
| [`sega-base-chinese`](https://huggingface.co/beyond/sega-base-chinese) | xM | Chinese |

## Comparison

The following compares [BART-base-chinese](https://huggingface.co/fnlp/bart-base-chinese) with our proposed [SEGA-base-chinese](https://huggingface.co/beyond/sega-base-chinese) on expanding sketches into sentences:

```
input sketch:
>>> 今天[MASK]篮球[MASK]上海财经大学[MASK]
BART-chinese output:
>>> 今天的篮球是上海财经大学篮球
SEGA-chinese output:
>>> 今天,我们邀请到了中国篮球联盟主席、上海财经大学校长孙建国先生作为主题发言。

input sketch:
>>> 自然语言处理[MASK]谷歌[MASK]通用人工智能[MASK]
BART-chinese output:
>>> 自然语言处理是谷歌的通用人工智能技术
SEGA-chinese output:
>>> 自然语言处理是谷歌在通用人工智能领域的一个重要研究方向,其目的是为了促进人类智能的发展。

input sketch:
>>> 我昨天做了一个梦[MASK]又遇见了她[MASK]曾经那段时光让人怀恋[MASK]
BART-chinese output:
>>> 我昨天做了一个梦今天又遇见了她我曾经那段时光让人怀恋不已
SEGA-chinese output:
>>> 我昨天做了一个梦,梦见了我的妈妈,又遇见了她,我知道她曾经那段时光让人怀恋,但是现在,我不知道该怎么回事了,我只是想告诉她,不要再回去了。

input sketch:
>>> [MASK]疫情[MASK]公园[MASK]漫步[MASK]
BART-chinese output:
>>> 在疫情防控公园内漫步徜徉
SEGA-chinese output:
>>> 为了防止疫情扩散,公园内还设置了漫步区。

input sketch:
>>> [MASK]酸菜鱼火锅[MASK]很美味,味道绝了[MASK]周末真开心[MASK]
BART-chinese output:
>>> 这酸菜鱼火锅真的很美味,味道绝了这周末真开心啊
SEGA-chinese output:
>>> 这个酸菜鱼火锅真的很美味,味道绝了,吃的时间也长了,周末真开心,吃完以后就回家了,很满意的一次,很喜欢的一个品牌。
```

As these examples show, BART only fills in a few simple words and cannot connect the fragments coherently, whereas SEGA expands them into coherent sentences or even paragraphs.