daliprf

commited on

Commit

•

1eced3c

1

Parent(s):

0376fae

init

Browse files- .gitattributes +2 -0

- AffectNetClass.py +130 -0

- FerPlusClass.py +143 -0

- LICENSE +21 -0

- README.md +97 -3

- RafdbClass.py +109 -0

- cnn_model.py +116 -0

- config.py +145 -0

- custom_loss.py +218 -0

- data_helper.py +80 -0

- dataset_class.py +71 -0

- img.jpg +3 -0

- main.py +12 -0

- paper_graphical_items/confm.jpg +3 -0

- paper_graphical_items/correlation_loss.jpg +3 -0

- paper_graphical_items/embedding_TSNE.jpg +3 -0

- paper_graphical_items/fd_ed.jpg +3 -0

- paper_graphical_items/md_component.jpg +3 -0

- paper_graphical_items/samples.jpg +3 -0

- requirements.txt +13 -0

- test_model.py +47 -0

- train.py +349 -0

- trained_models/AffectNet_6336.h5 +3 -0

- trained_models/Fer2013_7203.h5 +3 -0

- trained_models/RafDB_8696.h5 +3 -0

.gitattributes

CHANGED

|

@@ -29,3 +29,5 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 29 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 30 |

*.zstandard filter=lfs diff=lfs merge=lfs -text

|

| 31 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

|

|

|

| 29 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 30 |

*.zstandard filter=lfs diff=lfs merge=lfs -text

|

| 31 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 32 |

+

*.png filter=lfs diff=lfs merge=lfs -text

|

| 33 |

+

*.jpg filter=lfs diff=lfs merge=lfs -text

|

AffectNetClass.py

ADDED

|

@@ -0,0 +1,130 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from config import DatasetName, AffectnetConf, InputDataSize, LearningConfig, ExpressionCodesAffectnet

|

| 2 |

+

from config import LearningConfig, InputDataSize, DatasetName, AffectnetConf, DatasetType

|

| 3 |

+

|

| 4 |

+

import numpy as np

|

| 5 |

+

import os

|

| 6 |

+

import matplotlib.pyplot as plt

|

| 7 |

+

import math

|

| 8 |

+

from datetime import datetime

|

| 9 |

+

from sklearn.utils import shuffle

|

| 10 |

+

from sklearn.model_selection import train_test_split

|

| 11 |

+

from numpy import save, load, asarray, savez_compressed, savez

|

| 12 |

+

import csv

|

| 13 |

+

from skimage.io import imread

|

| 14 |

+

import pickle

|

| 15 |

+

import csv

|

| 16 |

+

from tqdm import tqdm

|

| 17 |

+

from PIL import Image

|

| 18 |

+

from skimage.transform import resize

|

| 19 |

+

import tensorflow as tf

|

| 20 |

+

import random

|

| 21 |

+

import cv2

|

| 22 |

+

from skimage.feature import hog

|

| 23 |

+

from skimage import data, exposure

|

| 24 |

+

from matplotlib.path import Path

|

| 25 |

+

from scipy import ndimage, misc

|

| 26 |

+

from data_helper import DataHelper

|

| 27 |

+

from sklearn.metrics import accuracy_score

|

| 28 |

+

from sklearn.metrics import confusion_matrix

|

| 29 |

+

from dataset_class import CustomDataset

|

| 30 |

+

from sklearn.metrics import precision_recall_fscore_support as score

|

| 31 |

+

|

| 32 |

+

|

| 33 |

+

class AffectNet:

|

| 34 |

+

def __init__(self, ds_type):

|

| 35 |

+

"""we set the parameters needed during the whole class:

|

| 36 |

+

"""

|

| 37 |

+

self.ds_type = ds_type

|

| 38 |

+

if ds_type == DatasetType.train:

|

| 39 |

+

self.img_path = AffectnetConf.no_aug_train_img_path

|

| 40 |

+

self.anno_path = AffectnetConf.no_aug_train_annotation_path

|

| 41 |

+

self.img_path_aug = AffectnetConf.aug_train_img_path

|

| 42 |

+

self.masked_img_path = AffectnetConf.aug_train_masked_img_path

|

| 43 |

+

self.anno_path_aug = AffectnetConf.aug_train_annotation_path

|

| 44 |

+

|

| 45 |

+

elif ds_type == DatasetType.eval:

|

| 46 |

+

self.img_path_aug = AffectnetConf.eval_img_path

|

| 47 |

+

self.anno_path_aug = AffectnetConf.eval_annotation_path

|

| 48 |

+

self.img_path = AffectnetConf.eval_img_path

|

| 49 |

+

self.anno_path = AffectnetConf.eval_annotation_path

|

| 50 |

+

self.masked_img_path = AffectnetConf.eval_masked_img_path

|

| 51 |

+

|

| 52 |

+

elif ds_type == DatasetType.train_7:

|

| 53 |

+

self.img_path = AffectnetConf.no_aug_train_img_path_7

|

| 54 |

+

self.anno_path = AffectnetConf.no_aug_train_annotation_path_7

|

| 55 |

+

self.img_path_aug = AffectnetConf.aug_train_img_path_7

|

| 56 |

+

self.masked_img_path = AffectnetConf.aug_train_masked_img_path_7

|

| 57 |

+

self.anno_path_aug = AffectnetConf.aug_train_annotation_path_7

|

| 58 |

+

|

| 59 |

+

elif ds_type == DatasetType.eval_7:

|

| 60 |

+

self.img_path_aug = AffectnetConf.eval_img_path_7

|

| 61 |

+

self.anno_path_aug = AffectnetConf.eval_annotation_path_7

|

| 62 |

+

self.img_path = AffectnetConf.eval_img_path_7

|

| 63 |

+

self.anno_path = AffectnetConf.eval_annotation_path_7

|

| 64 |

+

self.masked_img_path = AffectnetConf.eval_masked_img_path_7

|

| 65 |

+

|

| 66 |

+

def test_accuracy(self, model, print_samples=False):

|

| 67 |

+

dhp = DataHelper()

|

| 68 |

+

|

| 69 |

+

batch_size = LearningConfig.batch_size

|

| 70 |

+

exp_pr_glob = []

|

| 71 |

+

exp_gt_glob = []

|

| 72 |

+

acc_per_label = []

|

| 73 |

+

'''create batches'''

|

| 74 |

+

img_filenames, exp_filenames = dhp.create_generator_full_path(

|

| 75 |

+

img_path=self.img_path,

|

| 76 |

+

annotation_path=self.anno_path, label=None)

|

| 77 |

+

|

| 78 |

+

print(len(img_filenames))

|

| 79 |

+

step_per_epoch = int(len(img_filenames) // batch_size)

|

| 80 |

+

exp_pr_lbl = []

|

| 81 |

+

exp_gt_lbl = []

|

| 82 |

+

|

| 83 |

+

cds = CustomDataset()

|

| 84 |

+

ds = cds.create_dataset(img_filenames=img_filenames,

|

| 85 |

+

anno_names=exp_filenames,

|

| 86 |

+

is_validation=True, ds=DatasetName.affectnet)

|

| 87 |

+

batch_index = 0

|

| 88 |

+

for img_batch, exp_gt_b in ds:

|

| 89 |

+

'''predict on batch'''

|

| 90 |

+

exp_gt_b = exp_gt_b[:, -1]

|

| 91 |

+

img_batch = img_batch[:, -1, :, :]

|

| 92 |

+

|

| 93 |

+

# probab_exp_pr_b, _ = model.predict_on_batch([img_batch]) # with embedding

|

| 94 |

+

pr_data = model.predict_on_batch([img_batch])

|

| 95 |

+

probab_exp_pr_b = pr_data[0]

|

| 96 |

+

|

| 97 |

+

scores_b = np.array([tf.nn.softmax(probab_exp_pr_b[i]) for i in range(len(probab_exp_pr_b))])

|

| 98 |

+

exp_pr_b = np.array([np.argmax(scores_b[i]) for i in range(len(probab_exp_pr_b))])

|

| 99 |

+

|

| 100 |

+

if print_samples:

|

| 101 |

+

for i in range(len(exp_pr_b)):

|

| 102 |

+

dhp.test_image_print_exp(str(i) + str(batch_index + 1), np.array(img_batch[i]),

|

| 103 |

+

np.int8(exp_gt_b[i]), np.int8(exp_pr_b[i]))

|

| 104 |

+

|

| 105 |

+

exp_pr_lbl += np.array(exp_pr_b).tolist()

|

| 106 |

+

exp_gt_lbl += np.array(exp_gt_b).tolist()

|

| 107 |

+

batch_index += 1

|

| 108 |

+

exp_pr_lbl = np.int64(np.array(exp_pr_lbl))

|

| 109 |

+

exp_gt_lbl = np.int64(np.array(exp_gt_lbl))

|

| 110 |

+

|

| 111 |

+

global_accuracy = accuracy_score(exp_gt_lbl, exp_pr_lbl)

|

| 112 |

+

|

| 113 |

+

precision, recall, fscore, support = score(exp_gt_lbl, exp_pr_lbl)

|

| 114 |

+

|

| 115 |

+

conf_mat = confusion_matrix(exp_gt_lbl, exp_pr_lbl) / 500.0

|

| 116 |

+

# conf_mat = tf.math.confusion_matrix(exp_gt_lbl, exp_pr_lbl, num_classes=7)/500.0

|

| 117 |

+

avg_acc = np.mean([conf_mat[i, i] for i in range(7)])

|

| 118 |

+

|

| 119 |

+

ds = None

|

| 120 |

+

face_img_filenames = None

|

| 121 |

+

eyes_img_filenames = None

|

| 122 |

+

nose_img_filenames = None

|

| 123 |

+

mouth_img_filenames = None

|

| 124 |

+

exp_filenames = None

|

| 125 |

+

global_bunch = None

|

| 126 |

+

upper_bunch = None

|

| 127 |

+

middle_bunch = None

|

| 128 |

+

bottom_bunch = None

|

| 129 |

+

|

| 130 |

+

return global_accuracy, conf_mat, avg_acc, precision, recall, fscore, support

|

FerPlusClass.py

ADDED

|

@@ -0,0 +1,143 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from config import DatasetName, AffectnetConf, InputDataSize, LearningConfig, ExpressionCodesAffectnet

|

| 2 |

+

from config import LearningConfig, InputDataSize, DatasetName, FerPlusConf, DatasetType

|

| 3 |

+

|

| 4 |

+

import numpy as np

|

| 5 |

+

import os

|

| 6 |

+

import matplotlib.pyplot as plt

|

| 7 |

+

import math

|

| 8 |

+

from datetime import datetime

|

| 9 |

+

from sklearn.utils import shuffle

|

| 10 |

+

from sklearn.model_selection import train_test_split

|

| 11 |

+

from numpy import save, load, asarray, savez_compressed

|

| 12 |

+

import csv

|

| 13 |

+

from skimage.io import imread

|

| 14 |

+

import pickle

|

| 15 |

+

import csv

|

| 16 |

+

from tqdm import tqdm

|

| 17 |

+

from PIL import Image

|

| 18 |

+

from skimage.transform import resize

|

| 19 |

+

import tensorflow as tf

|

| 20 |

+

import random

|

| 21 |

+

import cv2

|

| 22 |

+

from skimage.feature import hog

|

| 23 |

+

from skimage import data, exposure

|

| 24 |

+

from matplotlib.path import Path

|

| 25 |

+

from scipy import ndimage, misc

|

| 26 |

+

from data_helper import DataHelper

|

| 27 |

+

from sklearn.metrics import accuracy_score

|

| 28 |

+

from sklearn.metrics import confusion_matrix

|

| 29 |

+

from shutil import copyfile

|

| 30 |

+

from dataset_class import CustomDataset

|

| 31 |

+

from sklearn.metrics import precision_recall_fscore_support as score

|

| 32 |

+

|

| 33 |

+

|

| 34 |

+

class FerPlus:

|

| 35 |

+

|

| 36 |

+

def __init__(self, ds_type):

|

| 37 |

+

"""we set the parameters needed during the whole class:

|

| 38 |

+

"""

|

| 39 |

+

self.ds_type = ds_type

|

| 40 |

+

if ds_type == DatasetType.train:

|

| 41 |

+

self.img_path = FerPlusConf.no_aug_train_img_path

|

| 42 |

+

self.anno_path = FerPlusConf.no_aug_train_annotation_path

|

| 43 |

+

self.img_path_aug = FerPlusConf.aug_train_img_path

|

| 44 |

+

self.anno_path_aug = FerPlusConf.aug_train_annotation_path

|

| 45 |

+

self.masked_img_path = FerPlusConf.aug_train_masked_img_path

|

| 46 |

+

self.orig_image_path = FerPlusConf.orig_image_path_train

|

| 47 |

+

|

| 48 |

+

elif ds_type == DatasetType.test:

|

| 49 |

+

self.img_path = FerPlusConf.test_img_path

|

| 50 |

+

self.anno_path = FerPlusConf.test_annotation_path

|

| 51 |

+

self.img_path_aug = FerPlusConf.test_img_path

|

| 52 |

+

self.anno_path_aug = FerPlusConf.test_annotation_path

|

| 53 |

+

self.masked_img_path = FerPlusConf.test_masked_img_path

|

| 54 |

+

self.orig_image_path = FerPlusConf.orig_image_path_train

|

| 55 |

+

|

| 56 |

+

def create_from_orig(self):

|

| 57 |

+

print('create_from_orig & relabel to affectNetLike--->')

|

| 58 |

+

"""

|

| 59 |

+

labels are from 1-7, but we save them from 0 to 6

|

| 60 |

+

:param ds_type:

|

| 61 |

+

:return:

|

| 62 |

+

"""

|

| 63 |

+

|

| 64 |

+

'''read the text file, and save exp, and image'''

|

| 65 |

+

dhl = DataHelper()

|

| 66 |

+

|

| 67 |

+

exp_affectnet_like_lbls = [6, 5, 4, 1, 0, 2, 3]

|

| 68 |

+

lbl_affectnet_like_lbls = ['angry/', 'disgust/', 'fear/', 'happy/', 'neutral/', 'sad/', 'surprise/']

|

| 69 |

+

|

| 70 |

+

for exp_index in range(len(lbl_affectnet_like_lbls)):

|

| 71 |

+

exp_prefix = lbl_affectnet_like_lbls[exp_index]

|

| 72 |

+

for i, file in tqdm(enumerate(os.listdir(self.orig_image_path + exp_prefix))):

|

| 73 |

+

if file.endswith(".jpg") or file.endswith(".png"):

|

| 74 |

+

img_source_address = self.orig_image_path + exp_prefix + file

|

| 75 |

+

img_dest_address = self.img_path + file

|

| 76 |

+

exp_dest_address = self.anno_path + file[:-4]

|

| 77 |

+

exp = exp_affectnet_like_lbls[exp_index]

|

| 78 |

+

|

| 79 |

+

img = np.array(Image.open(img_source_address))

|

| 80 |

+

res_img = resize(img, (InputDataSize.image_input_size, InputDataSize.image_input_size, 3),

|

| 81 |

+

anti_aliasing=True)

|

| 82 |

+

|

| 83 |

+

im = Image.fromarray(np.round(res_img * 255.0).astype(np.uint8))

|

| 84 |

+

'''save image'''

|

| 85 |

+

im.save(img_dest_address)

|

| 86 |

+

'''save annotation'''

|

| 87 |

+

np.save(exp_dest_address + '_exp', exp)

|

| 88 |

+

|

| 89 |

+

def test_accuracy(self, model, print_samples=False):

|

| 90 |

+

print('FER: test_accuracy')

|

| 91 |

+

dhp = DataHelper()

|

| 92 |

+

'''create batches'''

|

| 93 |

+

img_filenames, exp_filenames = dhp.create_generator_full_path(

|

| 94 |

+

img_path=self.img_path,

|

| 95 |

+

annotation_path=self.anno_path, label=None)

|

| 96 |

+

print(len(img_filenames))

|

| 97 |

+

exp_pr_lbl = []

|

| 98 |

+

exp_gt_lbl = []

|

| 99 |

+

|

| 100 |

+

cds = CustomDataset()

|

| 101 |

+

ds = cds.create_dataset(img_filenames=img_filenames,

|

| 102 |

+

anno_names=exp_filenames,

|

| 103 |

+

is_validation=True,

|

| 104 |

+

ds=DatasetName.fer2013)

|

| 105 |

+

|

| 106 |

+

batch_index = 0

|

| 107 |

+

print('FER: loading test ds')

|

| 108 |

+

for img_batch, exp_gt_b in tqdm(ds):

|

| 109 |

+

'''predict on batch'''

|

| 110 |

+

exp_gt_b = exp_gt_b[:, -1]

|

| 111 |

+

img_batch = img_batch[:, -1, :, :]

|

| 112 |

+

|

| 113 |

+

pr_data = model.predict_on_batch([img_batch])

|

| 114 |

+

probab_exp_pr_b = pr_data[0]

|

| 115 |

+

|

| 116 |

+

scores_b = np.array([tf.nn.softmax(probab_exp_pr_b[i]) for i in range(len(probab_exp_pr_b))])

|

| 117 |

+

exp_pr_b = np.array([np.argmax(scores_b[i]) for i in range(len(probab_exp_pr_b))])

|

| 118 |

+

|

| 119 |

+

if print_samples:

|

| 120 |

+

for i in range(len(exp_pr_b)):

|

| 121 |

+

dhp.test_image_print_exp(str(i)+str(batch_index+1), np.array(img_batch[i]),

|

| 122 |

+

np.int8(exp_gt_b[i]), np.int8(exp_pr_b[i]))

|

| 123 |

+

|

| 124 |

+

exp_pr_lbl += np.array(exp_pr_b).tolist()

|

| 125 |

+

exp_gt_lbl += np.array(exp_gt_b).tolist()

|

| 126 |

+

batch_index += 1

|

| 127 |

+

exp_pr_lbl = np.float64(np.array(exp_pr_lbl))

|

| 128 |

+

exp_gt_lbl = np.float64(np.array(exp_gt_lbl))

|

| 129 |

+

|

| 130 |

+

global_accuracy = accuracy_score(exp_gt_lbl, exp_pr_lbl)

|

| 131 |

+

precision, recall, fscore, support = score(exp_gt_lbl, exp_pr_lbl)

|

| 132 |

+

conf_mat = confusion_matrix(exp_gt_lbl, exp_pr_lbl, normalize='true')

|

| 133 |

+

# conf_mat = tf.math.confusion_matrix(exp_gt_lbl, exp_pr_lbl, num_classes=7)

|

| 134 |

+

avg_acc = np.mean([conf_mat[i,i] for i in range(7)])

|

| 135 |

+

|

| 136 |

+

ds = None

|

| 137 |

+

face_img_filenames = None

|

| 138 |

+

eyes_img_filenames = None

|

| 139 |

+

nose_img_filenames = None

|

| 140 |

+

mouth_img_filenames = None

|

| 141 |

+

exp_filenames = None

|

| 142 |

+

|

| 143 |

+

return global_accuracy, conf_mat, avg_acc, precision, recall, fscore, support

|

LICENSE

ADDED

|

@@ -0,0 +1,21 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

MIT License

|

| 2 |

+

|

| 3 |

+

Copyright (c) 2022 Ali Pourramezan Fard

|

| 4 |

+

|

| 5 |

+

Permission is hereby granted, free of charge, to any person obtaining a copy

|

| 6 |

+

of this software and associated documentation files (the "Software"), to deal

|

| 7 |

+

in the Software without restriction, including without limitation the rights

|

| 8 |

+

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

| 9 |

+

copies of the Software, and to permit persons to whom the Software is

|

| 10 |

+

furnished to do so, subject to the following conditions:

|

| 11 |

+

|

| 12 |

+

The above copyright notice and this permission notice shall be included in all

|

| 13 |

+

copies or substantial portions of the Software.

|

| 14 |

+

|

| 15 |

+

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

| 16 |

+

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

| 17 |

+

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

| 18 |

+

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

| 19 |

+

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

| 20 |

+

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

| 21 |

+

SOFTWARE.

|

README.md

CHANGED

|

@@ -1,3 +1,97 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Ad-Corre

|

| 2 |

+

Ad-Corre: Adaptive Correlation-Based Loss for Facial Expression Recognition in the Wild

|

| 3 |

+

|

| 4 |

+

|

| 5 |

+

[](https://paperswithcode.com/sota/facial-expression-recognition-on-raf-db?p=ad-corre-adaptive-correlation-based-loss-for)

|

| 6 |

+

<!--

|

| 7 |

+

[](https://paperswithcode.com/sota/facial-expression-recognition-on-affectnet?p=ad-corre-adaptive-correlation-based-loss-for)

|

| 8 |

+

|

| 9 |

+

[](https://paperswithcode.com/sota/facial-expression-recognition-on-fer2013?p=ad-corre-adaptive-correlation-based-loss-for)

|

| 10 |

+

-->

|

| 11 |

+

|

| 12 |

+

#### Link to the paper (open access):

|

| 13 |

+

https://ieeexplore.ieee.org/document/9727163

|

| 14 |

+

|

| 15 |

+

#### Link to the paperswithcode.com:

|

| 16 |

+

https://paperswithcode.com/paper/ad-corre-adaptive-correlation-based-loss-for

|

| 17 |

+

|

| 18 |

+

```

|

| 19 |

+

Please cite this work as:

|

| 20 |

+

|

| 21 |

+

@ARTICLE{9727163,

|

| 22 |

+

author={Fard, Ali Pourramezan and Mahoor, Mohammad H.},

|

| 23 |

+

journal={IEEE Access},

|

| 24 |

+

title={Ad-Corre: Adaptive Correlation-Based Loss for Facial Expression Recognition in the Wild},

|

| 25 |

+

year={2022},

|

| 26 |

+

volume={},

|

| 27 |

+

number={},

|

| 28 |

+

pages={1-1},

|

| 29 |

+

doi={10.1109/ACCESS.2022.3156598}}

|

| 30 |

+

|

| 31 |

+

```

|

| 32 |

+

|

| 33 |

+

## Introduction

|

| 34 |

+

|

| 35 |

+

Automated Facial Expression Recognition (FER) in the wild using deep neural networks is still challenging due to intra-class variations and inter-class similarities in facial images. Deep Metric Learning (DML) is among the widely used methods to deal with these issues by improving the discriminative power of the learned embedded features. This paper proposes an Adaptive Correlation (Ad-Corre) Loss to guide the network towards generating embedded feature vectors with high correlation for within-class samples and less correlation for between-class samples. Ad-Corre consists of 3 components called Feature Discriminator, Mean Discriminator, and Embedding Discriminator. We design the Feature Discriminator component to guide the network to create the embedded feature vectors to be highly correlated if they belong to a similar class, and less correlated if they belong to different classes. In addition, the Mean Discriminator component leads the network to make the mean embedded feature vectors of different classes to be less similar to each other.We use Xception network as the backbone of our model, and contrary to previous work, we propose an embedding feature space that contains k feature vectors. Then, the Embedding Discriminator component penalizes the network to generate the embedded feature vectors, which are dissimilar.We trained our model using the combination of our proposed loss functions called Ad-Corre Loss jointly with the cross-entropy loss. We achieved a very promising recognition accuracy on AffectNet, RAF-DB, and FER-2013. Our extensive experiments and ablation study indicate the power of our method to cope well with challenging FER tasks in the wild.

|

| 36 |

+

|

| 37 |

+

|

| 38 |

+

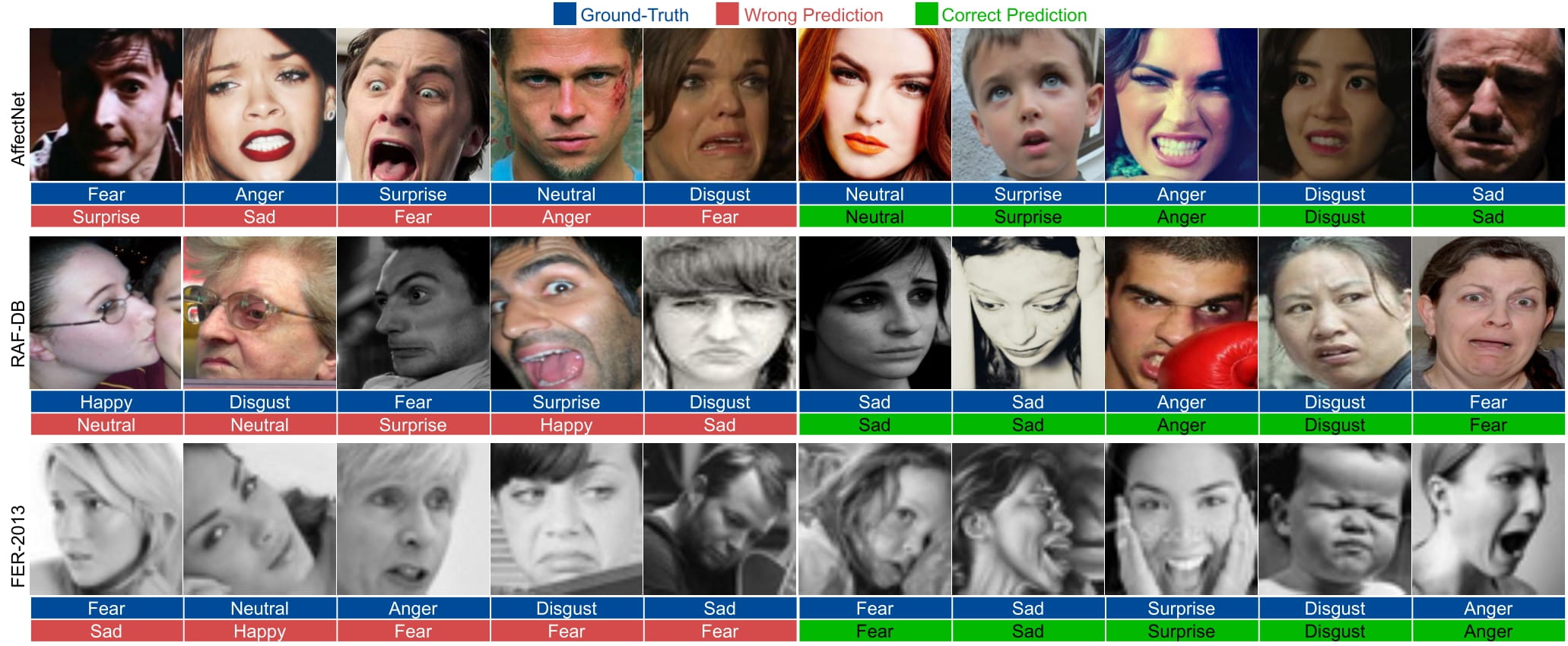

## Evaluation and Samples

|

| 39 |

+

The following samples are taken from the paper:

|

| 40 |

+

|

| 41 |

+

|

| 42 |

+

|

| 43 |

+

|

| 44 |

+

----------------------------------------------------------------------------------------------------------------------------------

|

| 45 |

+

## Installing the requirements

|

| 46 |

+

In order to run the code you need to install python >= 3.5.

|

| 47 |

+

The requirements and the libraries needed to run the code can be installed using the following command:

|

| 48 |

+

|

| 49 |

+

```

|

| 50 |

+

pip install -r requirements.txt

|

| 51 |

+

```

|

| 52 |

+

|

| 53 |

+

|

| 54 |

+

## Using the pre-trained models

|

| 55 |

+

The pretrained models for Affectnet, RafDB, and Fer2013 are provided in the [Trained_Models](https://github.com/aliprf/Ad-Corre/tree/main/Trained_Models) folder. You can use the following code to predict the facial emotionn of a facial image:

|

| 56 |

+

|

| 57 |

+

```

|

| 58 |

+

tester = TestModels(h5_address='./trained_models/AffectNet_6336.h5')

|

| 59 |

+

tester.recognize_fer(img_path='./img.jpg')

|

| 60 |

+

|

| 61 |

+

```

|

| 62 |

+

plaese see the following [main.py](https://github.com/aliprf/Ad-Corre/tree/main/main.py) file.

|

| 63 |

+

|

| 64 |

+

|

| 65 |

+

## Training Network from scratch

|

| 66 |

+

The information and the code to train the model is provided in train.py .Plaese see the following [main.py](https://github.com/aliprf/Ad-Corre/tree/main/main.py) file:

|

| 67 |

+

|

| 68 |

+

```

|

| 69 |

+

'''training part'''

|

| 70 |

+

trainer = TrainModel(dataset_name=DatasetName.affectnet, ds_type=DatasetType.train_7)

|

| 71 |

+

trainer.train(arch="xcp", weight_path="./")

|

| 72 |

+

|

| 73 |

+

```

|

| 74 |

+

|

| 75 |

+

|

| 76 |

+

### Preparing Data

|

| 77 |

+

Data needs to be normalized and saved in npy format.

|

| 78 |

+

|

| 79 |

+

---------------------------------------------------------------

|

| 80 |

+

|

| 81 |

+

```

|

| 82 |

+

Please cite this work as:

|

| 83 |

+

|

| 84 |

+

@ARTICLE{9727163,

|

| 85 |

+

author={Fard, Ali Pourramezan and Mahoor, Mohammad H.},

|

| 86 |

+

journal={IEEE Access},

|

| 87 |

+

title={Ad-Corre: Adaptive Correlation-Based Loss for Facial Expression Recognition in the Wild},

|

| 88 |

+

year={2022},

|

| 89 |

+

volume={},

|

| 90 |

+

number={},

|

| 91 |

+

pages={1-1},

|

| 92 |

+

doi={10.1109/ACCESS.2022.3156598}}

|

| 93 |

+

|

| 94 |

+

```

|

| 95 |

+

|

| 96 |

+

|

| 97 |

+

|

RafdbClass.py

ADDED

|

@@ -0,0 +1,109 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from config import DatasetName, AffectnetConf, InputDataSize, LearningConfig, ExpressionCodesAffectnet

|

| 2 |

+

from config import LearningConfig, InputDataSize, DatasetName, RafDBConf, DatasetType

|

| 3 |

+

from sklearn.metrics import precision_recall_fscore_support as score

|

| 4 |

+

|

| 5 |

+

import numpy as np

|

| 6 |

+

import os

|

| 7 |

+

import matplotlib.pyplot as plt

|

| 8 |

+

import math

|

| 9 |

+

from datetime import datetime

|

| 10 |

+

from sklearn.utils import shuffle

|

| 11 |

+

from sklearn.model_selection import train_test_split

|

| 12 |

+

from numpy import save, load, asarray, savez_compressed

|

| 13 |

+

import csv

|

| 14 |

+

from skimage.io import imread

|

| 15 |

+

import pickle

|

| 16 |

+

import csv

|

| 17 |

+

from tqdm import tqdm

|

| 18 |

+

from PIL import Image

|

| 19 |

+

from skimage.transform import resize

|

| 20 |

+

import tensorflow as tf

|

| 21 |

+

import random

|

| 22 |

+

import cv2

|

| 23 |

+

from skimage.feature import hog

|

| 24 |

+

from skimage import data, exposure

|

| 25 |

+

from matplotlib.path import Path

|

| 26 |

+

from scipy import ndimage, misc

|

| 27 |

+

from data_helper import DataHelper

|

| 28 |

+

from sklearn.metrics import accuracy_score

|

| 29 |

+

from sklearn.metrics import confusion_matrix

|

| 30 |

+

from shutil import copyfile

|

| 31 |

+

from dataset_class import CustomDataset

|

| 32 |

+

|

| 33 |

+

|

| 34 |

+

class RafDB:

|

| 35 |

+

def __init__(self, ds_type):

|

| 36 |

+

"""we set the parameters needed during the whole class:

|

| 37 |

+

"""

|

| 38 |

+

self.ds_type = ds_type

|

| 39 |

+

if ds_type == DatasetType.train:

|

| 40 |

+

self.img_path = RafDBConf.no_aug_train_img_path

|

| 41 |

+

self.anno_path = RafDBConf.no_aug_train_annotation_path

|

| 42 |

+

self.img_path_aug = RafDBConf.aug_train_img_path

|

| 43 |

+

self.anno_path_aug = RafDBConf.aug_train_annotation_path

|

| 44 |

+

self.masked_img_path = RafDBConf.aug_train_masked_img_path

|

| 45 |

+

|

| 46 |

+

elif ds_type == DatasetType.test:

|

| 47 |

+

self.img_path = RafDBConf.test_img_path

|

| 48 |

+

self.anno_path = RafDBConf.test_annotation_path

|

| 49 |

+

self.img_path_aug = RafDBConf.test_img_path

|

| 50 |

+

self.anno_path_aug = RafDBConf.test_annotation_path

|

| 51 |

+

self.masked_img_path = RafDBConf.test_masked_img_path

|

| 52 |

+

|

| 53 |

+

def test_accuracy(self, model, print_samples=False):

|

| 54 |

+

dhp = DataHelper()

|

| 55 |

+

batch_size = LearningConfig.batch_size

|

| 56 |

+

# exp_pr_glob = []

|

| 57 |

+

# exp_gt_glob = []

|

| 58 |

+

# acc_per_label = []

|

| 59 |

+

'''create batches'''

|

| 60 |

+

img_filenames, exp_filenames = dhp.create_generator_full_path(

|

| 61 |

+

img_path=self.img_path,

|

| 62 |

+

annotation_path=self.anno_path, label=None)

|

| 63 |

+

print(len(img_filenames))

|

| 64 |

+

step_per_epoch = int(len(img_filenames) // batch_size)

|

| 65 |

+

exp_pr_lbl = []

|

| 66 |

+

exp_gt_lbl = []

|

| 67 |

+

|

| 68 |

+

cds = CustomDataset()

|

| 69 |

+

ds = cds.create_dataset(img_filenames=img_filenames,

|

| 70 |

+

anno_names=exp_filenames,

|

| 71 |

+

is_validation=True,

|

| 72 |

+

ds=DatasetName.rafdb)

|

| 73 |

+

|

| 74 |

+

batch_index = 0

|

| 75 |

+

for img_batch, exp_gt_b in tqdm(ds):

|

| 76 |

+

'''predict on batch'''

|

| 77 |

+

exp_gt_b = exp_gt_b[:, -1]

|

| 78 |

+

img_batch = img_batch[:, -1, :, :]

|

| 79 |

+

|

| 80 |

+

pr_data = model.predict_on_batch([img_batch])

|

| 81 |

+

|

| 82 |

+

probab_exp_pr_b = pr_data[0]

|

| 83 |

+

exp_pr_b = np.array([np.argmax(probab_exp_pr_b[i]) for i in range(len(probab_exp_pr_b))])

|

| 84 |

+

|

| 85 |

+

if print_samples:

|

| 86 |

+

for i in range(len(exp_pr_b)):

|

| 87 |

+

dhp.test_image_print_exp(str(i) + str(batch_index + 1), np.array(img_batch[i]),

|

| 88 |

+

np.int8(exp_gt_b[i]), np.int8(exp_pr_b[i]))

|

| 89 |

+

|

| 90 |

+

exp_pr_lbl += np.array(exp_pr_b).tolist()

|

| 91 |

+

exp_gt_lbl += np.array(exp_gt_b).tolist()

|

| 92 |

+

batch_index += 1

|

| 93 |

+

exp_pr_lbl = np.float64(np.array(exp_pr_lbl))

|

| 94 |

+

exp_gt_lbl = np.float64(np.array(exp_gt_lbl))

|

| 95 |

+

|

| 96 |

+

global_accuracy = accuracy_score(exp_gt_lbl, exp_pr_lbl)

|

| 97 |

+

precision, recall, fscore, support = score(exp_gt_lbl, exp_pr_lbl)

|

| 98 |

+

|

| 99 |

+

conf_mat = confusion_matrix(exp_gt_lbl, exp_pr_lbl, normalize='true')

|

| 100 |

+

avg_acc = np.mean([conf_mat[i, i] for i in range(7)])

|

| 101 |

+

|

| 102 |

+

ds = None

|

| 103 |

+

face_img_filenames = None

|

| 104 |

+

eyes_img_filenames = None

|

| 105 |

+

nose_img_filenames = None

|

| 106 |

+

mouth_img_filenames = None

|

| 107 |

+

exp_filenames = None

|

| 108 |

+

|

| 109 |

+

return global_accuracy, conf_mat, avg_acc, precision, recall, fscore, support

|

cnn_model.py

ADDED

|

@@ -0,0 +1,116 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from config import DatasetName, AffectnetConf, InputDataSize, LearningConfig

|

| 2 |

+

# from hg_Class import HourglassNet

|

| 3 |

+

|

| 4 |

+

import tensorflow as tf

|

| 5 |

+

# from tensorflow import keras

|

| 6 |

+

# from skimage.transform import resize

|

| 7 |

+

from keras.models import Model

|

| 8 |

+

|

| 9 |

+

from keras.applications import mobilenet_v2, mobilenet, resnet50, densenet, resnet

|

| 10 |

+

from keras.layers import Dense, MaxPooling2D, Conv2D, Flatten, \

|

| 11 |

+

BatchNormalization, Activation, GlobalAveragePooling2D, DepthwiseConv2D, \

|

| 12 |

+

Dropout, ReLU, Concatenate, Input, GlobalMaxPool2D, LeakyReLU, Softmax, ELU

|

| 13 |

+

|

| 14 |

+

class CNNModel:

|

| 15 |

+

def get_model(self, arch, num_of_classes, weights):

|

| 16 |

+

if arch == 'resnet':

|

| 17 |

+

model = self._create_resnetemb(num_of_classes,

|

| 18 |

+

num_of_embeddings=LearningConfig.num_embeddings,

|

| 19 |

+

input_shape=(InputDataSize.image_input_size,

|

| 20 |

+

InputDataSize.image_input_size, 3),

|

| 21 |

+

weights=weights

|

| 22 |

+

)

|

| 23 |

+

if arch == 'xcp':

|

| 24 |

+

model = self._create_Xception_l2(num_of_classes,

|

| 25 |

+

num_of_embeddings=LearningConfig.num_embeddings,

|

| 26 |

+

input_shape=(InputDataSize.image_input_size,

|

| 27 |

+

InputDataSize.image_input_size, 3),

|

| 28 |

+

weights=weights

|

| 29 |

+

)

|

| 30 |

+

|

| 31 |

+

return model

|

| 32 |

+

|

| 33 |

+

def _create_resnetemb(self, num_of_classes, input_shape, weights, num_of_embeddings):

|

| 34 |

+

resnet_model = resnet.ResNet50(

|

| 35 |

+

input_shape=input_shape,

|

| 36 |

+

include_top=True,

|

| 37 |

+

weights='imagenet',

|

| 38 |

+

# weights=None,

|

| 39 |

+

input_tensor=None,

|

| 40 |

+

pooling=None)

|

| 41 |

+

resnet_model.layers.pop()

|

| 42 |

+

|

| 43 |

+

avg_pool = resnet_model.get_layer('avg_pool').output # 2048

|

| 44 |

+

''''''

|

| 45 |

+

embeddings = []

|

| 46 |

+

for i in range(num_of_embeddings):

|

| 47 |

+

emb = tf.keras.layers.Dense(LearningConfig.embedding_size, activation=None)(avg_pool)

|

| 48 |

+

emb_l2 = tf.keras.layers.Lambda(lambda x: tf.math.l2_normalize(x, axis=1))(emb)

|

| 49 |

+

embeddings.append(emb_l2)

|

| 50 |

+

|

| 51 |

+

if num_of_embeddings > 1:

|

| 52 |

+

fused = tf.keras.layers.Concatenate(axis=1)([embeddings[i] for i in range(num_of_embeddings)])

|

| 53 |

+

else:

|

| 54 |

+

fused = embeddings[0]

|

| 55 |

+

|

| 56 |

+

fused = Dropout(rate=0.5)(fused)

|

| 57 |

+

|

| 58 |

+

'''out'''

|

| 59 |

+

out_categorical = Dense(num_of_classes,

|

| 60 |

+

activation='softmax',

|

| 61 |

+

name='out')(fused)

|

| 62 |

+

|

| 63 |

+

inp = [resnet_model.input]

|

| 64 |

+

|

| 65 |

+

revised_model = Model(inp, [out_categorical] + [embeddings[i] for i in range(num_of_embeddings)])

|

| 66 |

+

revised_model.summary()

|

| 67 |

+

'''save json'''

|

| 68 |

+

model_json = revised_model.to_json()

|

| 69 |

+

|

| 70 |

+

with open("./model_archs/resnetemb.json", "w") as json_file:

|

| 71 |

+

json_file.write(model_json)

|

| 72 |

+

|

| 73 |

+

return revised_model

|

| 74 |

+

|

| 75 |

+

def _create_Xception_l2(self, num_of_classes, num_of_embeddings, input_shape, weights):

|

| 76 |

+

xception_model = tf.keras.applications.Xception(

|

| 77 |

+

include_top=False,

|

| 78 |

+

# weights=None,

|

| 79 |

+

input_tensor=None,

|

| 80 |

+

weights='imagenet',

|

| 81 |

+

input_shape=input_shape,

|

| 82 |

+

pooling=None,

|

| 83 |

+

classes=num_of_classes

|

| 84 |

+

)

|

| 85 |

+

|

| 86 |

+

act_14 = xception_model.get_layer('block14_sepconv2_act').output

|

| 87 |

+

avg_pool = GlobalAveragePooling2D()(act_14)

|

| 88 |

+

|

| 89 |

+

embeddings = []

|

| 90 |

+

for i in range(num_of_embeddings):

|

| 91 |

+

emb = tf.keras.layers.Dense(LearningConfig.embedding_size, activation=None)(avg_pool)

|

| 92 |

+

emb_l2 = tf.keras.layers.Lambda(lambda x: tf.math.l2_normalize(x, axis=1))(emb)

|

| 93 |

+

|

| 94 |

+

embeddings.append(emb_l2)

|

| 95 |

+

if num_of_embeddings > 1:

|

| 96 |

+

fused = tf.keras.layers.Concatenate(axis=1)([embeddings[i] for i in range(num_of_embeddings)])

|

| 97 |

+

else:

|

| 98 |

+

fused = embeddings[0]

|

| 99 |

+

fused = Dropout(rate=0.5)(fused)

|

| 100 |

+

|

| 101 |

+

'''out'''

|

| 102 |

+

out_categorical = Dense(num_of_classes,

|

| 103 |

+

activation='softmax',

|

| 104 |

+

name='out')(fused)

|

| 105 |

+

|

| 106 |

+

inp = [xception_model.input]

|

| 107 |

+

|

| 108 |

+

revised_model = Model(inp, [out_categorical] + [embeddings[i] for i in range(num_of_embeddings)])

|

| 109 |

+

revised_model.summary()

|

| 110 |

+

'''save json'''

|

| 111 |

+

model_json = revised_model.to_json()

|

| 112 |

+

|

| 113 |

+

with open("./model_archs/xcp_embedding.json", "w") as json_file:

|

| 114 |

+

json_file.write(model_json)

|

| 115 |

+

|

| 116 |

+

return revised_model

|

config.py

ADDED

|

@@ -0,0 +1,145 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

class DatasetName:

|

| 2 |

+

affectnet = 'affectnet'

|

| 3 |

+

rafdb = 'rafdb'

|

| 4 |

+

fer2013 = 'fer2013'

|

| 5 |

+

|

| 6 |

+

|

| 7 |

+

class ExpressionCodesRafdb:

|

| 8 |

+

Surprise = 1

|

| 9 |

+

Fear = 2

|

| 10 |

+

Disgust = 3

|

| 11 |

+

Happiness = 4

|

| 12 |

+

Sadness = 5

|

| 13 |

+

Anger = 6

|

| 14 |

+

Neutral = 7

|

| 15 |

+

|

| 16 |

+

class ExpressionCodesAffectnet:

|

| 17 |

+

neutral = 0

|

| 18 |

+

happy = 1

|

| 19 |

+

sad = 2

|

| 20 |

+

surprise = 3

|

| 21 |

+

fear = 4

|

| 22 |

+

disgust = 5

|

| 23 |

+

anger = 6

|

| 24 |

+

contempt = 7

|

| 25 |

+

none = 8

|

| 26 |

+

uncertain = 9

|

| 27 |

+

noface = 10

|

| 28 |

+

|

| 29 |

+

class DatasetType:

|

| 30 |

+

train = 0

|

| 31 |

+

train_7 = 1

|

| 32 |

+

eval = 2

|

| 33 |

+

eval_7 = 3

|

| 34 |

+

test = 4

|

| 35 |

+

|

| 36 |

+

|

| 37 |

+

class LearningConfig:

|

| 38 |

+

# batch_size = 100

|

| 39 |

+

batch_size = 50

|

| 40 |

+

# batch_size = 1

|

| 41 |

+

# batch_size = 5

|

| 42 |

+

virtual_batch_size = 5* batch_size

|

| 43 |

+

epochs = 300

|

| 44 |

+

embedding_size = 256 # we have 360 filters at the end

|

| 45 |

+

# embedding_size = 128 # we have 360 filters at the end

|

| 46 |

+

labels_history_frame = 1000

|

| 47 |

+

num_classes = 7

|

| 48 |

+

# num_embeddings = 7

|

| 49 |

+

num_embeddings = 10

|

| 50 |

+

|

| 51 |

+

|

| 52 |

+

class InputDataSize:

|

| 53 |

+

image_input_size = 224

|

| 54 |

+

|

| 55 |

+

|

| 56 |

+

class FerPlusConf:

|

| 57 |

+

_prefix_path = './FER_plus' # --> zeue

|

| 58 |

+

|

| 59 |

+

orig_image_path_train = _prefix_path + '/orig/train/'

|

| 60 |

+

orig_image_path_test = _prefix_path + '/orig/test/'

|

| 61 |

+

|

| 62 |

+

'''only 7 labels'''

|

| 63 |

+

no_aug_train_img_path = _prefix_path + '/train_set/images/'

|

| 64 |

+

no_aug_train_annotation_path = _prefix_path + '/train_set/annotations/'

|

| 65 |

+

|

| 66 |

+

aug_train_img_path = _prefix_path + '/train_set_aug/images/'

|

| 67 |

+

aug_train_annotation_path = _prefix_path + '/train_set_aug/annotations/'

|

| 68 |

+

aug_train_masked_img_path = _prefix_path + '/train_set_aug/masked_images/'

|

| 69 |

+

|

| 70 |

+

weight_save_path = _prefix_path + '/weight_saving_path/'

|

| 71 |

+

|

| 72 |

+

'''both public&private test:'''

|

| 73 |

+

test_img_path = _prefix_path + '/test_set/images/'

|

| 74 |

+

test_annotation_path = _prefix_path + '/test_set/annotations/'

|

| 75 |

+

test_masked_img_path = _prefix_path + '/test_set/masked_images/'

|

| 76 |

+

|

| 77 |

+

'''private test:'''

|

| 78 |

+

private_test_img_path = _prefix_path + '/private_test_set/images/'

|

| 79 |

+

private_test_annotation_path = _prefix_path + '/private_test_set/annotations/'

|

| 80 |

+

'''public test-> Eval'''

|

| 81 |

+

public_test_img_path = _prefix_path + '/public_test_set/images/'

|

| 82 |

+

public_test_annotation_path = _prefix_path + '/public_test_set/annotations/'

|

| 83 |

+

|

| 84 |

+

|

| 85 |

+

class RafDBConf:

|

| 86 |

+

_prefix_path = './RAF-DB' #

|

| 87 |

+

|

| 88 |

+

orig_annotation_txt_path = _prefix_path + '/list_patition_label.txt'

|

| 89 |

+

orig_image_path = _prefix_path + '/original/'

|

| 90 |

+

orig_bounding_box = _prefix_path + '/boundingbox/'

|

| 91 |

+

|

| 92 |

+

'''only 7 labels'''

|

| 93 |

+

no_aug_train_img_path = _prefix_path + '/train_set/images/'

|

| 94 |

+

no_aug_train_annotation_path = _prefix_path + '/train_set/annotations/'

|

| 95 |

+

|

| 96 |

+

aug_train_img_path = _prefix_path + '/train_set_aug/images/'

|

| 97 |

+

aug_train_annotation_path = _prefix_path + '/train_set_aug/annotations/'

|

| 98 |

+

aug_train_masked_img_path = _prefix_path + '/train_set_aug/masked_images/'

|

| 99 |

+

|

| 100 |

+

test_img_path = _prefix_path + '/test_set/images/'

|

| 101 |

+

test_annotation_path = _prefix_path + '/test_set/annotations/'

|

| 102 |

+

test_masked_img_path = _prefix_path + '/test_set/masked_images/'

|

| 103 |

+

|

| 104 |

+

augmentation_factor = 5

|

| 105 |

+

|

| 106 |

+

weight_save_path = _prefix_path + '/weight_saving_path/'

|

| 107 |

+

|

| 108 |

+

|

| 109 |

+

class AffectnetConf:

|

| 110 |

+

""""""

|

| 111 |

+

'''atlas'''

|

| 112 |

+

_prefix_path = './affectnet' # --> Aq

|

| 113 |

+

|

| 114 |

+

orig_csv_train_path = _prefix_path + '/orig/training.csv'

|

| 115 |

+

orig_csv_evaluate_path = _prefix_path + '/orig/validation.csv'

|

| 116 |

+

|

| 117 |

+

'''8 labels'''

|

| 118 |

+

no_aug_train_img_path = _prefix_path + '/train_set/images/'

|

| 119 |

+

no_aug_train_annotation_path = _prefix_path + '/train_set/annotations/'

|

| 120 |

+

|

| 121 |

+

aug_train_img_path = _prefix_path + '/train_set_aug/images/'

|

| 122 |

+

aug_train_annotation_path = _prefix_path + '/train_set_aug/annotations/'

|

| 123 |

+

aug_train_masked_img_path = _prefix_path + '/train_set_aug/masked_images/'

|

| 124 |

+

|

| 125 |

+

eval_img_path = _prefix_path + '/eval_set/images/'

|

| 126 |

+

eval_annotation_path = _prefix_path + '/eval_set/annotations/'

|

| 127 |

+

eval_masked_img_path = _prefix_path + '/eval_set/masked_images/'

|

| 128 |

+

|

| 129 |

+

'''7 labels'''

|

| 130 |

+

no_aug_train_img_path_7 = _prefix_path + '/train_set_7/images/'

|

| 131 |

+

no_aug_train_annotation_path_7 = _prefix_path + '/train_set_7/annotations/'

|

| 132 |

+

|

| 133 |

+

aug_train_img_path_7 = _prefix_path + '/train_set_7_aug/images/'

|

| 134 |

+

aug_train_annotation_path_7 = _prefix_path + '/train_set_7_aug/annotations/'

|

| 135 |

+

aug_train_masked_img_path_7 = _prefix_path + '/train_set_7_aug/masked_images/'

|

| 136 |

+

|

| 137 |

+

eval_img_path_7 = _prefix_path + '/eval_set_7/images/'

|

| 138 |

+

eval_annotation_path_7 = _prefix_path + '/eval_set_7/annotations/'

|

| 139 |

+

eval_masked_img_path_7 = _prefix_path + '/eval_set_7/masked_images/'

|

| 140 |

+

|

| 141 |

+

weight_save_path = _prefix_path + '/weight_saving_path/'

|

| 142 |

+

|

| 143 |

+

num_of_samples_train = 2420940

|

| 144 |

+

num_of_samples_train_7 = 0

|

| 145 |

+

num_of_samples_eval = 3999

|

custom_loss.py

ADDED

|

@@ -0,0 +1,218 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|