---

tags:

- RUDOLPH

- text-image

- image-text

- decoder

datasets:

- sberquad

---

# RUDOLPH-350M (Medium)

RUDOLPH: One Hyper-Modal Transformer can be creative as DALL-E and smart as CLIP

The model was trained by the [Sber AI](https://github.com/sberbank-ai) and [SberDevices](https://sberdevices.ru/) teams.

* Task: `text2image generation`; `self reranking`; `text ranking`; `image ranking`; `image2text generation`; `zero-shot image classification`; `text2text generation`

* Language: `Russian`

* Type: `decoder`

* Num Parameters: `350M`

* Training Data Volume: `156 million text-image pairs`

# Model Description

**RU**ssian **D**ecoder **O**n **L**anguage **P**icture **H**yper-tasking (**RUDOLPH**) **350M** is a fast, lightweight text-image-text transformer (350M GPT-3) designed for quick and easy fine-tuning on a range of tasks, from generating images from text descriptions and image classification to visual question answering and more. This model demonstrates the power of Hyper-tasking Transformers.

*Hyper-tasking means generalized multi-tasking, i.e., a model that can solve almost all tasks within its supported modalities (two modalities in the case of RUDOLPH: images and Russian texts).*

# Details of architecture

### Parameters

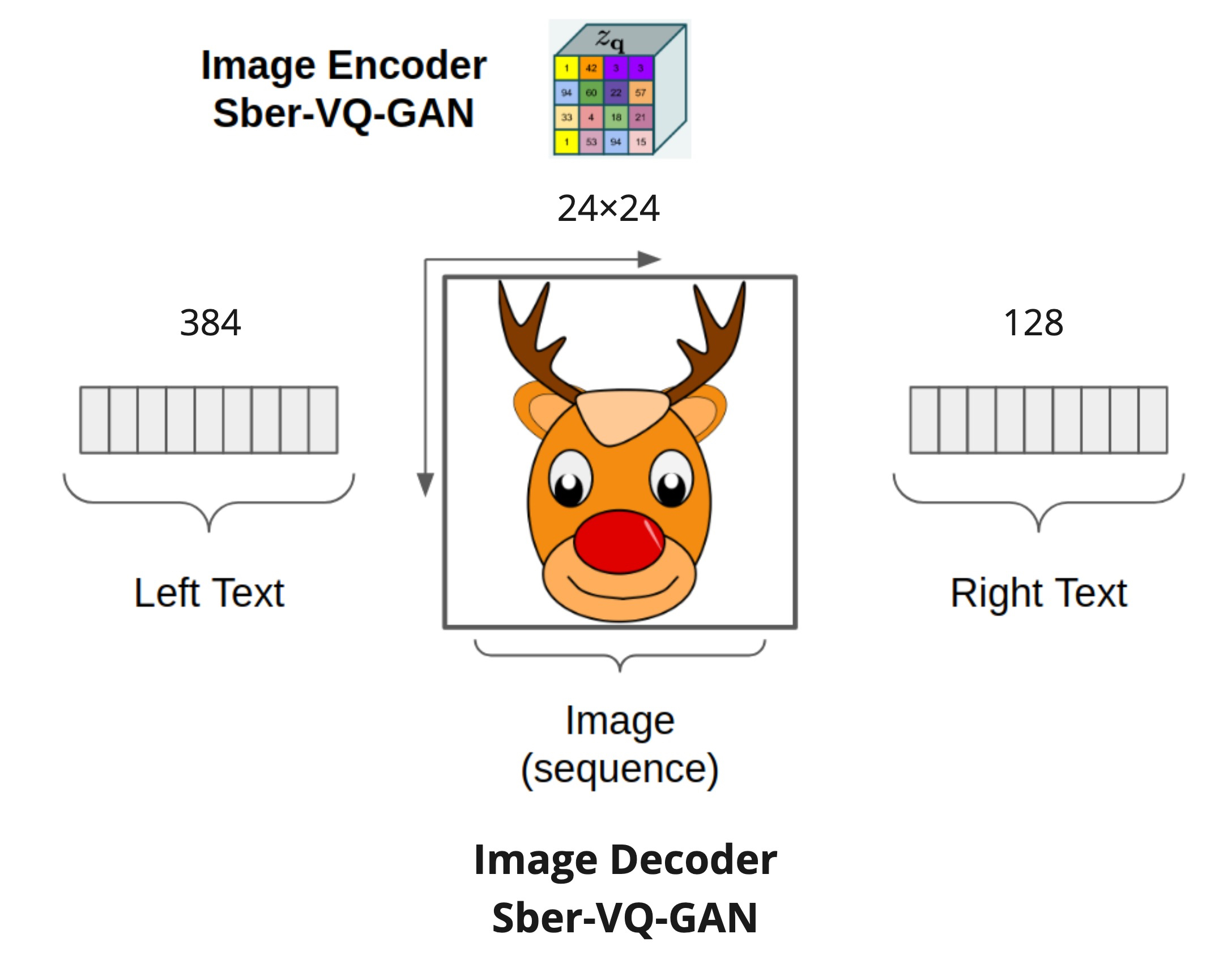

The maximum sequence length this model can be used with depends on the modality: 384, 576, and 128 tokens for the left text, image, and right text segments, respectively.
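The three segment lengths can be turned into index ranges over one joint sequence. The following is a minimal sketch, assuming the segments are simply concatenated in the order left text, image, right text; the helper name `segment_slices` is hypothetical and not part of any RUDOLPH library.

```python
# Segment lengths quoted in the text: left text, image, right text.
L_TEXT, IMAGE, R_TEXT = 384, 576, 128


def segment_slices(l_text=L_TEXT, image=IMAGE, r_text=R_TEXT):
    """Return ({segment name: slice}, total length) for the joint sequence.

    Assumption: segments are laid out back to back with no extra
    separator tokens between them.
    """
    layout = [("left_text", l_text), ("image", image), ("right_text", r_text)]
    slices, start = {}, 0
    for name, length in layout:
        slices[name] = slice(start, start + length)
        start += length
    return slices, start


slices, total_len = segment_slices()
print(total_len)          # 1088
print(slices["image"])    # slice(384, 960, None)
```

Under this assumption the full sequence is 1088 tokens long, and the 576 image tokens would correspond to a 24 x 24 grid if the image tokens form a square.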

RUDOLPH 350M is a Transformer-based decoder model with the following parameters:

* num\_layers (24) — Number of hidden layers in the Transformer decoder.

* hidden\_size (1024) — Dimensionality of the hidden layers.

* num\_attention\_heads (16) — Number of attention heads for each attention layer.
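As a rough sanity check on these values, the sketch below derives the per-head dimension and a back-of-the-envelope parameter count for the Transformer blocks. The `DecoderConfig` class is a hypothetical container for illustration, and the MLP expansion ratio of 4 is an assumption (a common GPT-style default), not something stated in this card.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DecoderConfig:
    """Hypothetical config holder; field names mirror the list above."""
    num_layers: int = 24
    hidden_size: int = 1024
    num_attention_heads: int = 16

    @property
    def head_dim(self) -> int:
        # Each head gets an equal share of the hidden dimension.
        assert self.hidden_size % self.num_attention_heads == 0
        return self.hidden_size // self.num_attention_heads

    def approx_block_params(self, mlp_ratio: int = 4) -> int:
        """Weights in attention + MLP only (assumed mlp_ratio).

        Ignores biases, layer norms, and all embedding tables.
        """
        h = self.hidden_size
        attention = 4 * h * h          # Q, K, V and output projections
        mlp = 2 * mlp_ratio * h * h    # up- and down-projection
        return self.num_layers * (attention + mlp)


cfg = DecoderConfig()
print(cfg.head_dim)               # 64
print(cfg.approx_block_params())  # 301989888
```

The block estimate of roughly 302M weights is in the same range as the stated 350M; the remainder would come from embeddings and other components this estimate leaves out.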

# Sparse Attention Mask

The primary proposed method is to modify the sparse transformer's attention mask to better control multiple modalities, taking them to the next level with "hyper-modality". It allows us to model transitions between modalities in both directions, unlike the similar DALL-E Transformer, which used only one direction, "text to image". The proposed "image to right text" direction is achieved by extending the sparse attention mask to the right, enabling auto-regressive text generation conditioned on the image without attention to the left text.
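The block structure described here can be illustrated with a toy mask. This is a simplified dense sketch, not the model's actual sparse mask: it assumes plain causal attention everywhere, then removes the left text columns for right text queries. The function name `build_mask` and the tiny segment sizes are illustrative only.

```python
def build_mask(l_text, image, r_text):
    """Return mask[q][k], True when query position q may attend to key k.

    Assumptions: dense causal attention as the baseline (the real model
    uses sparse patterns), with right text blocked from left text.
    """
    n = l_text + image + r_text
    r_start = l_text + image
    # Causal baseline: each position sees itself and everything before it.
    mask = [[k <= q for k in range(n)] for q in range(n)]
    # Right text queries keep image and earlier right text, drop left text.
    for q in range(r_start, n):
        for k in range(l_text):
            mask[q][k] = False
    return mask


# Toy sizes: 2 left text tokens, 3 image tokens, 2 right text tokens.
mask = build_mask(2, 3, 2)
for row in mask:
    print("".join("1" if allowed else "." for allowed in row))
# 1......
# 11.....
# 111....
# 1111...
# 11111..
# ..1111.
# ..11111
```

The first five rows show the "text to image" direction (image tokens see the left text), while the last two rows show the "image to right text" direction, where the left text columns are masked out.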

# Authors

+ Alex Shonenkov: [GitHub](https://github.com/shonenkov), [Kaggle GM](https://www.kaggle.com/shonenkov)

+ Michael Konstantinov: [Mishin Learning](https://t.me/mishin_learning), [Transformer Community](https://transformer.community/)
