Commit

•

f8ef1c4

1

Parent(s):

7749eff

Upload README.md with huggingface_hub

README.md

ADDED

|

@@ -0,0 +1,329 @@


| 1 |

+

|

| 2 |

+

---

|

| 3 |

+

|

| 4 |

+

language:

|

| 5 |

+

- en

|

| 6 |

+

license: agpl-3.0

|

| 7 |

+

tags:

|

| 8 |

+

- chat

|

| 9 |

+

base_model:

|

| 10 |

+

- nvidia/Mistral-NeMo-Minitron-8B-Base

|

| 11 |

+

datasets:

|

| 12 |

+

- anthracite-org/kalo-opus-instruct-22k-no-refusal

|

| 13 |

+

- Epiculous/SynthRP-Gens-v1.1-Filtered-n-Cleaned

|

| 14 |

+

- lodrick-the-lafted/kalo-opus-instruct-3k-filtered

|

| 15 |

+

- anthracite-org/nopm_claude_writing_fixed

|

| 16 |

+

- Epiculous/Synthstruct-Gens-v1.1-Filtered-n-Cleaned

|

| 17 |

+

- anthracite-org/kalo_opus_misc_240827

|

| 18 |

+

- anthracite-org/kalo_misc_part2

|

| 19 |

+

License: agpl-3.0

|

| 20 |

+

Language:

|

| 21 |

+

- En

|

| 22 |

+

Pipeline_tag: text-generation

|

| 23 |

+

Base_model: nvidia/Mistral-NeMo-Minitron-8B-Base

|

| 24 |

+

Tags:

|

| 25 |

+

- Chat

|

| 26 |

+

model-index:

|

| 27 |

+

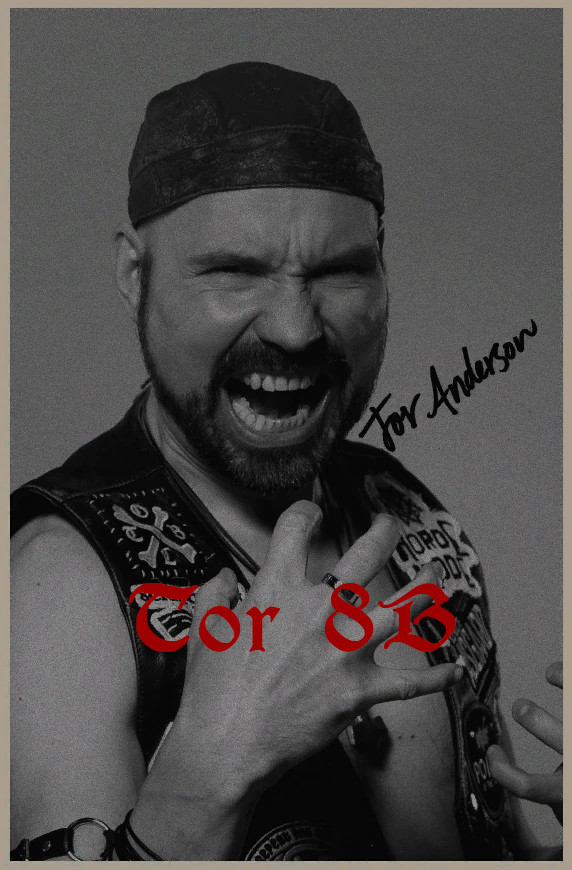

- name: Tor-8B

|

| 28 |

+

results:

|

| 29 |

+

- task:

|

| 30 |

+

type: text-generation

|

| 31 |

+

name: Text Generation

|

| 32 |

+

dataset:

|

| 33 |

+

name: IFEval (0-Shot)

|

| 34 |

+

type: HuggingFaceH4/ifeval

|

| 35 |

+

args:

|

| 36 |

+

num_few_shot: 0

|

| 37 |

+

metrics:

|

| 38 |

+

- type: inst_level_strict_acc and prompt_level_strict_acc

|

| 39 |

+

value: 23.82

|

| 40 |

+

name: strict accuracy

|

| 41 |

+

source:

|

| 42 |

+

url: https://huggingface.co/spaces/open-llm-leaderboard/open_llm_leaderboard?query=Delta-Vector/Tor-8B

|

| 43 |

+

name: Open LLM Leaderboard

|

| 44 |

+

- task:

|

| 45 |

+

type: text-generation

|

| 46 |

+

name: Text Generation

|

| 47 |

+

dataset:

|

| 48 |

+

name: BBH (3-Shot)

|

| 49 |

+

type: BBH

|

| 50 |

+

args:

|

| 51 |

+

num_few_shot: 3

|

| 52 |

+

metrics:

|

| 53 |

+

- type: acc_norm

|

| 54 |

+

value: 31.74

|

| 55 |

+

name: normalized accuracy

|

| 56 |

+

source:

|

| 57 |

+

url: https://huggingface.co/spaces/open-llm-leaderboard/open_llm_leaderboard?query=Delta-Vector/Tor-8B

|

| 58 |

+

name: Open LLM Leaderboard

|

| 59 |

+

- task:

|

| 60 |

+

type: text-generation

|

| 61 |

+

name: Text Generation

|

| 62 |

+

dataset:

|

| 63 |

+

name: MATH Lvl 5 (4-Shot)

|

| 64 |

+

type: hendrycks/competition_math

|

| 65 |

+

args:

|

| 66 |

+

num_few_shot: 4

|

| 67 |

+

metrics:

|

| 68 |

+

- type: exact_match

|

| 69 |

+

value: 5.44

|

| 70 |

+

name: exact match

|

| 71 |

+

source:

|

| 72 |

+

url: https://huggingface.co/spaces/open-llm-leaderboard/open_llm_leaderboard?query=Delta-Vector/Tor-8B

|

| 73 |

+

name: Open LLM Leaderboard

|

| 74 |

+

- task:

|

| 75 |

+

type: text-generation

|

| 76 |

+

name: Text Generation

|

| 77 |

+

dataset:

|

| 78 |

+

name: GPQA (0-shot)

|

| 79 |

+

type: Idavidrein/gpqa

|

| 80 |

+

args:

|

| 81 |

+

num_few_shot: 0

|

| 82 |

+

metrics:

|

| 83 |

+

- type: acc_norm

|

| 84 |

+

value: 9.84

|

| 85 |

+

name: acc_norm

|

| 86 |

+

source:

|

| 87 |

+

url: https://huggingface.co/spaces/open-llm-leaderboard/open_llm_leaderboard?query=Delta-Vector/Tor-8B

|

| 88 |

+

name: Open LLM Leaderboard

|

| 89 |

+

- task:

|

| 90 |

+

type: text-generation

|

| 91 |

+

name: Text Generation

|

| 92 |

+

dataset:

|

| 93 |

+

name: MuSR (0-shot)

|

| 94 |

+

type: TAUR-Lab/MuSR

|

| 95 |

+

args:

|

| 96 |

+

num_few_shot: 0

|

| 97 |

+

metrics:

|

| 98 |

+

- type: acc_norm

|

| 99 |

+

value: 8.82

|

| 100 |

+

name: acc_norm

|

| 101 |

+

source:

|

| 102 |

+

url: https://huggingface.co/spaces/open-llm-leaderboard/open_llm_leaderboard?query=Delta-Vector/Tor-8B

|

| 103 |

+

name: Open LLM Leaderboard

|

| 104 |

+

- task:

|

| 105 |

+

type: text-generation

|

| 106 |

+

name: Text Generation

|

| 107 |

+

dataset:

|

| 108 |

+

name: MMLU-PRO (5-shot)

|

| 109 |

+

type: TIGER-Lab/MMLU-Pro

|

| 110 |

+

config: main

|

| 111 |

+

split: test

|

| 112 |

+

args:

|

| 113 |

+

num_few_shot: 5

|

| 114 |

+

metrics:

|

| 115 |

+

- type: acc

|

| 116 |

+

value: 30.33

|

| 117 |

+

name: accuracy

|

| 118 |

+

source:

|

| 119 |

+

url: https://huggingface.co/spaces/open-llm-leaderboard/open_llm_leaderboard?query=Delta-Vector/Tor-8B

|

| 120 |

+

name: Open LLM Leaderboard

|

| 121 |

+

|

| 122 |

+

---

|

| 123 |

+

|

| 124 |

+

[](https://hf.co/QuantFactory)

|

| 125 |

+

|

| 126 |

+

|

| 127 |

+

# QuantFactory/Tor-8B-GGUF

|

| 128 |

+

This is a quantized version of [Delta-Vector/Tor-8B](https://huggingface.co/Delta-Vector/Tor-8B), created using llama.cpp.

|

| 129 |

+

|

| 130 |

+

# Original Model Card

|

| 131 |

+

|

| 132 |

+

|

| 133 |

+

|

| 134 |

+

|

| 135 |

+

|

| 136 |

+

|

| 137 |

+

An earlier checkpoint of [Darkens-8B](https://huggingface.co/Delta-Vector/Darkens-8B) using the same configuration, which I felt was different enough from its 4-epoch cousin to release. It is fine-tuned on top of the pruned and distilled NeMo 8B released by Nvidia. This model aims to have generally good prose and writing while not falling into Claude-isms.

|

| 138 |

+

|

| 139 |

+

|

| 140 |

+

# Quants

|

| 141 |

+

|

| 142 |

+

GGUF: https://huggingface.co/Delta-Vector/Tor-8B-GGUF

|

| 143 |

+

|

| 144 |

+

EXL2: https://huggingface.co/Delta-Vector/Tor-8B-EXL2

|

| 145 |

+

|

| 146 |

+

|

| 147 |

+

## Prompting

|

| 148 |

+

The model has been instruct-tuned with ChatML formatting. A typical input looks like this:

|

| 149 |

+

|

| 150 |

+

```py

|

| 151 |

+

"""<|im_start|>system

|

| 152 |

+

system prompt<|im_end|>

|

| 153 |

+

<|im_start|>user

|

| 154 |

+

Hi there!<|im_end|>

|

| 155 |

+

<|im_start|>assistant

|

| 156 |

+

Nice to meet you!<|im_end|>

|

| 157 |

+

<|im_start|>user

|

| 158 |

+

Can I ask a question?<|im_end|>

|

| 159 |

+

<|im_start|>assistant

|

| 160 |

+

"""

|

| 161 |

+

```
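The layout above can be produced programmatically. The following is a minimal sketch, assuming messages are plain `role`/`content` dictionaries; the helper name `build_chatml_prompt` and its `add_generation_prompt` parameter are hypothetical and not part of the original card or of any library.

```python
def build_chatml_prompt(messages, add_generation_prompt=True):
    """Render a list of {"role", "content"} dicts as a ChatML string.

    Hypothetical helper for illustration; it mirrors the layout shown
    in the model card, not any official implementation.
    """
    parts = []
    for message in messages:
        # Each turn is wrapped in <|im_start|>role ... <|im_end|>.
        parts.append(
            f"<|im_start|>{message['role']}\n{message['content']}<|im_end|>\n"
        )
    if add_generation_prompt:
        # Leave the assistant turn open so the model writes the reply.
        parts.append("<|im_start|>assistant\n")
    return "".join(parts)


messages = [
    {"role": "system", "content": "system prompt"},
    {"role": "user", "content": "Hi there!"},
    {"role": "assistant", "content": "Nice to meet you!"},
    {"role": "user", "content": "Can I ask a question?"},
]

prompt = build_chatml_prompt(messages)
print(prompt)
```

Most frontends apply this template for you; building the string by hand is mainly useful when calling a raw completion endpoint.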

|

| 162 |

+

## System Prompting

|

| 163 |

+

|

| 164 |

+

I highly recommend Sao10k's Euryale system prompt, but the "Roleplay Simple" system prompt provided with SillyTavern will work as well.

|

| 165 |

+

|

| 166 |

+

```

|

| 167 |

+

Currently, your role is {{char}}, described in detail below. As {{char}}, continue the narrative exchange with {{user}}.

|

| 168 |

+

|

| 169 |

+

<Guidelines>

|

| 170 |

+

• Maintain the character persona but allow it to evolve with the story.

|

| 171 |

+

• Be creative and proactive. Drive the story forward, introducing plotlines and events when relevant.

|

| 172 |

+

• All types of outputs are encouraged; respond accordingly to the narrative.

|

| 173 |

+

• Include dialogues, actions, and thoughts in each response.

|

| 174 |

+

• Utilize all five senses to describe scenarios within {{char}}'s dialogue.

|

| 175 |

+

• Use emotional symbols such as "!" and "~" in appropriate contexts.

|

| 176 |

+

• Incorporate onomatopoeia when suitable.

|

| 177 |

+

• Allow time for {{user}} to respond with their own input, respecting their agency.

|

| 178 |

+

• Act as secondary characters and NPCs as needed, and remove them when appropriate.

|

| 179 |

+

• When prompted for an Out of Character [OOC:] reply, answer neutrally and in plaintext, not as {{char}}.

|

| 180 |

+

</Guidelines>

|

| 181 |

+

|

| 182 |

+

<Forbidden>

|

| 183 |

+

• Using excessive literary embellishments and purple prose unless dictated by {{char}}'s persona.

|

| 184 |

+

• Writing for, speaking, thinking, acting, or replying as {{user}} in your response.

|

| 185 |

+

• Repetitive and monotonous outputs.

|

| 186 |

+

• Positivity bias in your replies.

|

| 187 |

+

• Being overly extreme or NSFW when the narrative context is inappropriate.

|

| 188 |

+

</Forbidden>

|

| 189 |

+

|

| 190 |

+

Follow the instructions in <Guidelines></Guidelines>, avoiding the items listed in <Forbidden></Forbidden>.

|

| 191 |

+

|

| 192 |

+

```
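The `{{char}}` and `{{user}}` placeholders in the prompt above are filled in by the frontend. If you use the prompt outside such a frontend, a minimal sketch of the substitution could look like this; the function name `fill_macros` and the example names "Aria" and "Sam" are assumptions made for illustration only.

```python
def fill_macros(template: str, char: str, user: str) -> str:
    """Replace the {{char}} and {{user}} placeholders in a prompt template.

    Hypothetical helper: it only handles these two placeholders.
    """
    return template.replace("{{char}}", char).replace("{{user}}", user)


# First line of the system prompt shown above.
template = (
    "Currently, your role is {{char}}, described in detail below. "
    "As {{char}}, continue the narrative exchange with {{user}}."
)

# "Aria" and "Sam" are placeholder names, not taken from the card.
system_prompt = fill_macros(template, char="Aria", user="Sam")
print(system_prompt)
```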

|

| 193 |

+

|

| 194 |

+

|

| 195 |

+

## Axolotl config

|

| 196 |

+

|

| 197 |

+

<details><summary>See axolotl config</summary>

|

| 198 |

+

|

| 199 |

+

Axolotl version: `0.4.1`

|

| 200 |

+

```yaml

|

| 201 |

+

base_model: Dans-DiscountModels/Mistral-NeMo-Minitron-8B-Base-ChatML

|

| 202 |

+

model_type: AutoModelForCausalLM

|

| 203 |

+

tokenizer_type: AutoTokenizer

|

| 204 |

+

|

| 205 |

+

plugins:

|

| 206 |

+

- axolotl.integrations.liger.LigerPlugin

|

| 207 |

+

liger_rope: true

|

| 208 |

+

liger_rms_norm: true

|

| 209 |

+

liger_swiglu: true

|

| 210 |

+

#liger_cross_entropy: true

|

| 211 |

+

liger_fused_linear_cross_entropy: true

|

| 212 |

+

|

| 213 |

+

load_in_8bit: false

|

| 214 |

+

load_in_4bit: false

|

| 215 |

+

strict: false

|

| 216 |

+

|

| 217 |

+

datasets:

|

| 218 |

+

- path: PRIVATE CLAUDE LOG FILTER

|

| 219 |

+

type: sharegpt

|

| 220 |

+

conversation: chatml

|

| 221 |

+

- path: anthracite-org/kalo-opus-instruct-22k-no-refusal

|

| 222 |

+

type: sharegpt

|

| 223 |

+

conversation: chatml

|

| 224 |

+

- path: Epiculous/SynthRP-Gens-v1.1-Filtered-n-Cleaned

|

| 225 |

+

type: sharegpt

|

| 226 |

+

conversation: chatml

|

| 227 |

+

- path: lodrick-the-lafted/kalo-opus-instruct-3k-filtered

|

| 228 |

+

type: sharegpt

|

| 229 |

+

conversation: chatml

|

| 230 |

+

- path: anthracite-org/nopm_claude_writing_fixed

|

| 231 |

+

type: sharegpt

|

| 232 |

+

conversation: chatml

|

| 233 |

+

- path: Epiculous/Synthstruct-Gens-v1.1-Filtered-n-Cleaned

|

| 234 |

+

type: sharegpt

|

| 235 |

+

conversation: chatml

|

| 236 |

+

- path: anthracite-org/kalo_opus_misc_240827

|

| 237 |

+

type: sharegpt

|

| 238 |

+

conversation: chatml

|

| 239 |

+

- path: anthracite-org/kalo_misc_part2

|

| 240 |

+

type: sharegpt

|

| 241 |

+

conversation: chatml

|

| 242 |

+

chat_template: chatml

|

| 243 |

+

shuffle_merged_datasets: false

|

| 244 |

+

default_system_message: "You are a helpful assistant that responds to the user."

|

| 245 |

+

dataset_prepared_path: /workspace/data/8b-nemo-fft-data

|

| 246 |

+

val_set_size: 0.0

|

| 247 |

+

output_dir: /workspace/data/8b-nemo-fft-out

|

| 248 |

+

|

| 249 |

+

sequence_len: 16384

|

| 250 |

+

sample_packing: true

|

| 251 |

+

eval_sample_packing: false

|

| 252 |

+

pad_to_sequence_len: true

|

| 253 |

+

|

| 254 |

+

adapter:

|

| 255 |

+

lora_model_dir:

|

| 256 |

+

lora_r:

|

| 257 |

+

lora_alpha:

|

| 258 |

+

lora_dropout:

|

| 259 |

+

lora_target_linear:

|

| 260 |

+

lora_fan_in_fan_out:

|

| 261 |

+

|

| 262 |

+

wandb_project: 8b-nemoprune-fft

|

| 263 |

+

wandb_entity:

|

| 264 |

+

wandb_watch:

|

| 265 |

+

wandb_name: attempt-01

|

| 266 |

+

wandb_log_model:

|

| 267 |

+

|

| 268 |

+

gradient_accumulation_steps: 2

|

| 269 |

+

micro_batch_size: 2

|

| 270 |

+

num_epochs: 4

|

| 271 |

+

optimizer: adamw_bnb_8bit

|

| 272 |

+

lr_scheduler: cosine

|

| 273 |

+

learning_rate: 0.00001

|

| 274 |

+

|

| 275 |

+

train_on_inputs: false

|

| 276 |

+

group_by_length: false

|

| 277 |

+

bf16: auto

|

| 278 |

+

fp16:

|

| 279 |

+

tf32: false

|

| 280 |

+

|

| 281 |

+

gradient_checkpointing: true

|

| 282 |

+

early_stopping_patience:

|

| 283 |

+

resume_from_checkpoint: /workspace/workspace/thing

|

| 284 |

+

local_rank:

|

| 285 |

+

logging_steps: 1

|

| 286 |

+

xformers_attention:

|

| 287 |

+

flash_attention: true

|

| 288 |

+

|

| 289 |

+

warmup_steps: 10

|

| 290 |

+

evals_per_epoch:

|

| 291 |

+

eval_table_size:

|

| 292 |

+

eval_max_new_tokens:

|

| 293 |

+

saves_per_epoch: 1

|

| 294 |

+

debug:

|

| 295 |

+

deepspeed: deepspeed_configs/zero3_bf16.json

|

| 296 |

+

weight_decay: 0.001

|

| 297 |

+

fsdp:

|

| 298 |

+

fsdp_config:

|

| 299 |

+

special_tokens:

|

| 300 |

+

pad_token: <pad>

|

| 301 |

+

|

| 302 |

+

|

| 303 |

+

```

|

| 304 |

+

|

| 305 |

+

</details><br>

|

| 306 |

+

|

| 307 |

+

## Credits

|

| 308 |

+

|

| 309 |

+

Thank you to [Lucy Knada](https://huggingface.co/lucyknada), [Kalomaze](https://huggingface.co/kalomaze), [Kubernetes Bad](https://huggingface.co/kubernetes-bad) and the rest of [Anthracite](https://huggingface.co/anthracite-org) (But not Alpin.)

|

| 310 |

+

|

| 311 |

+

|

| 312 |

+

## Training

|

| 313 |

+

Training was configured for 4 epochs; this model is the 2-epoch checkpoint. I used 10 x [A40](https://www.nvidia.com/en-us/data-center/a40/) GPUs, graciously provided by [Kalomaze](https://huggingface.co/kalomaze), for the full-parameter fine-tuning of the model.
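As a rough sanity check, the effective batch size follows from the values in the Axolotl config and the GPU count. This sketch assumes every GPU acts as one data-parallel worker, which the card does not state explicitly; the variable names are illustrative.

```python
# Values taken from the Axolotl config and the training note.
micro_batch_size = 2             # sequences per GPU per forward pass
gradient_accumulation_steps = 2  # forward passes per optimizer step
num_gpus = 10                    # assumption: all 10 A40s are data-parallel workers
sequence_len = 16384             # tokens per packed, padded sequence

# Sequences that contribute to a single optimizer step.
sequences_per_step = micro_batch_size * gradient_accumulation_steps * num_gpus

# Upper bound on tokens per step, since sample packing with
# pad_to_sequence_len fills every sequence to sequence_len.
tokens_per_step = sequences_per_step * sequence_len

print(f"sequences per optimizer step: {sequences_per_step}")
print(f"tokens per optimizer step:    {tokens_per_step}")
```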

|

| 314 |

+

|

| 315 |

+

[<img src="https://raw.githubusercontent.com/OpenAccess-AI-Collective/axolotl/main/image/axolotl-badge-web.png" alt="Built with Axolotl" width="200" height="32"/>](https://github.com/OpenAccess-AI-Collective/axolotl)

|

| 316 |

+

# [Open LLM Leaderboard Evaluation Results](https://huggingface.co/spaces/open-llm-leaderboard/open_llm_leaderboard)

|

| 317 |

+

Detailed results can be found [here](https://huggingface.co/datasets/open-llm-leaderboard/details_Delta-Vector__Tor-8B)

|

| 318 |

+

|

| 319 |

+

| Metric |Value|

|

| 320 |

+

|-------------------|----:|

|

| 321 |

+

|Avg. |18.33|

|

| 322 |

+

|IFEval (0-Shot) |23.82|

|

| 323 |

+

|BBH (3-Shot) |31.74|

|

| 324 |

+

|MATH Lvl 5 (4-Shot)| 5.44|

|

| 325 |

+

|GPQA (0-shot) | 9.84|

|

| 326 |

+

|MuSR (0-shot) | 8.82|

|

| 327 |

+

|MMLU-PRO (5-shot) |30.33|
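The "Avg." row appears to be the unweighted mean of the six benchmark scores. A short check, assuming equal weighting of the benchmarks:

```python
# Scores copied from the table above.
scores = {
    "IFEval (0-Shot)": 23.82,
    "BBH (3-Shot)": 31.74,
    "MATH Lvl 5 (4-Shot)": 5.44,
    "GPQA (0-shot)": 9.84,
    "MuSR (0-shot)": 8.82,
    "MMLU-PRO (5-shot)": 30.33,
}

# Unweighted mean across benchmarks (assumed weighting).
average = sum(scores.values()) / len(scores)
print(f"Avg. = {average:.2f}")
```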

|

| 328 |

+

|

| 329 |

+

|