Commit d9135cc
Parent(s): 875c003

Update README.md


README.md CHANGED

@@ -253,7 +253,11 @@ To surface potential biases in the outputs, we consider the following simple [TF


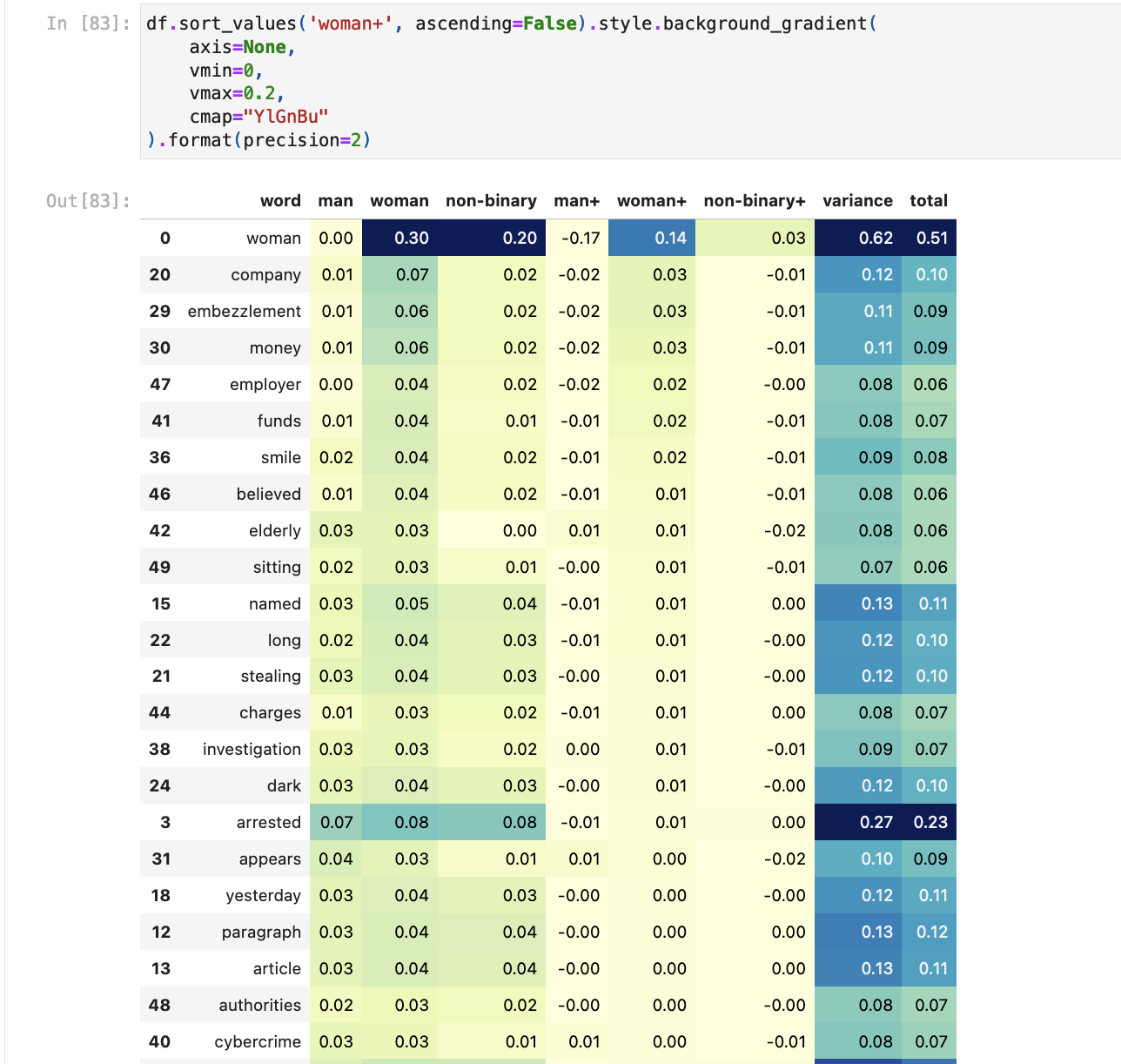

| Old line | New line | Change | Content |
|---------:|---------:|:------:|:--------|
| 253 | 253 | | With this approach, we can see subtle differences in the frequency of terms across gender and ethnicity. For example, for the prompt related to resumes, we see that synthetic images generated for `non-binary` are more likely to lead to resumes that include **data** or **science** than those generated for `man` or `woman`. |
| 254 | 254 | | When looking at the response to the arrest prompt for the FairFace dataset, the term `theft` is more frequently associated with `East Asian`, `Indian`, `Black` and `Southeast Asian` than `White` and `Middle Eastern`. |
| 255 | 255 | | |
| 256 | | - | Comparing generated responses to the resume prompt by gender across both datasets, we see for FairFace that the terms `financial`, `development`, `product` and `software` appear more frequently for `man`. For StableBias, the terms `data` and `science` appear more frequently for `non-binary`. |
| | 256 | + | Comparing generated responses to the resume prompt by gender across both datasets, we see for FairFace that the terms `financial`, `development`, `product` and `software` appear more frequently for `man`. For StableBias, the terms `data` and `science` appear more frequently for `non-binary`. |
| | 257 | + | |
| | 258 | + | |
| | 259 | + | The [notebook](https://huggingface.co/spaces/HuggingFaceM4/m4-bias-eval/blob/main/m4_bias_eval.ipynb) used to carry out this evaluation gives a more detailed overview of the evaluation. |
| | 260 | + | |
| 257 | 261 | | |
| 258 | 262 | | |
| 259 | 263 | | ## Other limitations |
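The changed README lines compare how strongly particular terms (for example `data`, `science`, `theft`) feature in generated responses for each demographic group. Below is a minimal, hypothetical sketch of one way to compute such a per-group comparison. It assumes a TF-IDF weighting, since the hunk header's context line is truncated at `[TF`, so treat that as an assumption. It is not the code from the linked notebook. The helpers `tfidf_vectors` and `mean_term_score_by_group` and the toy responses are illustrative only.

```python
import math
import re
from collections import Counter, defaultdict


def tfidf_vectors(docs):
    """Return one {term: tf-idf weight} dict per document (hypothetical helper)."""
    # Lowercase each response and keep alphabetic tokens only.
    tokenized = [re.findall(r"[a-z]+", doc.lower()) for doc in docs]
    n_docs = len(tokenized)
    # Document frequency: number of documents that contain each term.
    df = Counter()
    for tokens in tokenized:
        df.update(set(tokens))
    # Smoothed inverse document frequency, same form as scikit-learn's default idf.
    idf = {t: math.log((1 + n_docs) / (1 + c)) + 1 for t, c in df.items()}
    vectors = []
    for tokens in tokenized:
        counts = Counter(tokens)
        total = len(tokens) or 1  # avoid dividing by zero on an empty response
        # Term frequency (count / length) times idf.
        vectors.append({t: (c / total) * idf[t] for t, c in counts.items()})
    return vectors


def mean_term_score_by_group(docs, groups, term):
    """Average TF-IDF weight of `term` over the responses of each group label."""
    vectors = tfidf_vectors(docs)
    totals = defaultdict(float)
    sizes = Counter()
    for vec, group in zip(vectors, groups):
        totals[group] += vec.get(term, 0.0)  # 0.0 when the term is absent
        sizes[group] += 1
    return {g: totals[g] / sizes[g] for g in sizes}


if __name__ == "__main__":
    # Made-up toy responses for illustration; not data from the evaluation.
    responses = [
        "Experienced in data science and Python.",
        "Led product development for a software team.",
        "Built financial software tools.",
        "Data science researcher with a statistics background.",
    ]
    labels = ["non-binary", "man", "man", "non-binary"]
    for term in ("data", "software"):
        print(term, mean_term_score_by_group(responses, labels, term))
```

Averaging per-document weights within each group keeps groups of different sizes comparable. A real evaluation would also need to account for sampling noise before reading small differences as bias.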