file structure (#1)

- file structure (d044298cd7c34df859d10d64e2d606afe2d43082)

- Create unet/init.txt (e61e2ebb8b9efd62b5df4886ae3ecef64e7e0ca5)

- Create tokenizer/init.txt (f6c6c4a9b712631c68437206815dbedba8150b63)

- Create text_encoder/init.txt (b19b0b82c23a2a9dad54c896d73bfe534ddfe019)

- Create scheduler/init.txt (e108c085cd25b4c91701b7d4c2d40be21dd63caa)

- Create feature_extractor/init.txt (087ce4eaf219f96f03b2f072c5b922eddad4092a)

- Create ckpts/init.txt (364fe52b756700f9f7636d732e923a11247557e9)

- Upload 3 files (b9e74b3a27b79bfcfaefdaf413f31842fffa66b7)

- Upload PoWStyle_Abstralities.ckpt (b2695543a3ba3d37fe287ab950a5f844a8e59d91)

- Create images/init.txt (f18c4389256ecae488106fdf508a0f89b0da5bcd)

- Upload 5 files (1bf81ebb3dc4818c1cade6e14033ac1d2c61f163)

- Delete 091122/images/init.txt (b2ce300e31065b1188904c932ed637a86cba5fc9)

- Delete 091122/ckpts/init.txt (f2a3d2acc5c90a19e84acdc17f69515613add91d)

- Upload model_index.json (2d8fe7f7bd0f025d05abe7723ccda35947bab341)

- Upload 2 files (68f13ec2e0fce719e2332979a50bf24269e8e129)

- Delete 091122/diffusers/vae/init.txt (50147e7ae4c877083e3353241c63d77fbad61a50)

- Upload 2 files (a43463f5035a1e3353635dfc95f6b0652f434a3f)

- Delete 091122/diffusers/unet/init.txt (44e90e4004addda5e821685f7380a61058e6d5f1)

- Upload 4 files (fa97a13b79e74bd240f553a70244718321aa951d)

- Delete 091122/diffusers/tokenizer/init.txt (7b30cd25aa8777c1bc18886f265f94f359f5ae6e)

- Upload 2 files (affc971905ec74e6f0becd281711d78eaa409fe7)

- Delete 091122/diffusers/text_encoder/init.txt (28891014fd8f05771b656c773627f666a7466901)

- Upload scheduler_config.json (90ee92b4cf36186cb84977394d4a0d6afd11379b)

- Delete 091122/diffusers/scheduler/init.txt (3971cf3f233f20a40654da9a6fc4a76ed412ba7e)

- Upload preprocessor_config.json (fa13ec2a3624da94a60690cc16421667625271e0)

- Delete 091122/diffusers/feature_extractor/init.txt (e56461285d0c7472212aec9924102e79e8a64425)

- Upload 2 files (d90efd3278598f37da7fbc99ba29194bb9563cd7)

- Upload dataset.zip (ebd57e4d09fca7420b60cee70da3b56fa00167ae)

- Upload README.md (7f2265fd7892c1817f62a99925b56e4a43fad641)

- .gitattributes +3 -0

- 091122/ckpts/BendingReality_Style-v1.ckpt +3 -0

- 091122/ckpts/Bendstract-v1.ckpt +3 -0

- 091122/ckpts/PoWStyle_Abstralities.ckpt +3 -0

- 091122/ckpts/PoWStyle_midrun.ckpt +3 -0

- 091122/dataset.zip +3 -0

- 091122/diffusers/feature_extractor/preprocessor_config.json +20 -0

- 091122/diffusers/model_index.json +28 -0

- 091122/diffusers/scheduler/scheduler_config.json +12 -0

- 091122/diffusers/text_encoder/config.json +25 -0

- 091122/diffusers/text_encoder/pytorch_model.bin +3 -0

- 091122/diffusers/tokenizer/merges.txt +0 -0

- 091122/diffusers/tokenizer/special_tokens_map.json +1 -0

- 091122/diffusers/tokenizer/tokenizer_config.json +1 -0

- 091122/diffusers/tokenizer/vocab.json +0 -0

- 091122/diffusers/unet/config.json +36 -0

- 091122/diffusers/unet/diffusion_pytorch_model.bin +3 -0

- 091122/diffusers/vae/config.json +29 -0

- 091122/diffusers/vae/diffusion_pytorch_model.bin +3 -0

- 091122/images/AETHER.png +0 -0

- 091122/images/aether2.png +0 -0

- 091122/images/showcase_bendingreality.jpg +3 -0

- 091122/images/showcase_bendstract.jpg +3 -0

- 091122/images/showcase_pow_midrun.jpg +3 -0

- README.md +85 -0

- showcase.jpg +0 -0

.gitattributes

```diff
@@ -32,3 +32,6 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+091122/images/showcase_bendstract.jpg filter=lfs diff=lfs merge=lfs -text
+091122/images/showcase_pow_midrun.jpg filter=lfs diff=lfs merge=lfs -text
+091122/images/showcase_bendingreality.jpg filter=lfs diff=lfs merge=lfs -text
```
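The hunk above adds three explicit image paths to the LFS rules, alongside the `*.zip`, `*.zst` and `*tfevents*` wildcards. Below is a minimal Python sketch that checks which files in this commit those visible rules would route to LFS. It simplifies gitattributes matching: a pattern without a slash is compared against the file name, and a pattern with a slash is compared against the repository-relative path. It ignores `**`, attribute unsetting, and lines 1-31 of the file, which the hunk does not show. `LFS_PATTERNS`, `matches` and `is_lfs_tracked` are hypothetical names.

```python
from fnmatch import fnmatchcase

# Only the rules visible in the hunk; lines 1-31 of .gitattributes are not shown.
LFS_PATTERNS = [
    "*.zip",
    "*.zst",
    "*tfevents*",
    "091122/images/showcase_bendstract.jpg",
    "091122/images/showcase_pow_midrun.jpg",
    "091122/images/showcase_bendingreality.jpg",
]


def matches(pattern: str, path: str) -> bool:
    """Simplified gitattributes matching (assumption: no '**', no unsetting).

    A pattern without '/' is compared against the basename; a pattern
    containing '/' is compared against the repo-relative path.
    """
    if "/" in pattern:
        return fnmatchcase(path, pattern.lstrip("/"))
    return fnmatchcase(path.rsplit("/", 1)[-1], pattern)


def is_lfs_tracked(path: str, patterns=LFS_PATTERNS) -> bool:
    """True if any of the given LFS patterns matches the path."""
    return any(matches(p, path) for p in patterns)


for path in [
    "091122/dataset.zip",
    "091122/images/showcase_bendstract.jpg",
    "091122/images/AETHER.png",
]:
    print(path, is_lfs_tracked(path))
```

Under these six rules alone, `091122/dataset.zip` and the three showcase images are tracked and `AETHER.png` is not. This is consistent with the file list, where the showcase images appear as `+3 -0` pointers and the PNGs as `+0 -0` binaries. The `.ckpt` and `.bin` files also appear as three-line pointers, so they are presumably covered by the part of the file that the hunk does not show.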

091122/ckpts/BendingReality_Style-v1.ckpt

```diff
@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:5893d0e6598a15aa2d48d46060da7f194515e095a45f252c79e64bacb110bec2
+size 2132870858
```
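The three added lines are not the checkpoint itself. They form a Git LFS pointer file: a `version` line, an `oid` made of the hash algorithm and the content digest, and a `size` in bytes. The roughly 2.1 GB checkpoint lives in LFS storage. Here is a minimal parsing sketch; `parse_lfs_pointer` is a hypothetical helper, and it accepts only the v1 spec URL used in this commit.

```python
POINTER = """version https://git-lfs.github.com/spec/v1
oid sha256:5893d0e6598a15aa2d48d46060da7f194515e095a45f252c79e64bacb110bec2
size 2132870858
"""


def parse_lfs_pointer(text: str) -> dict:
    """Parse a Git LFS pointer into version, hash algorithm, digest and byte size."""
    fields = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(" ")  # each line is "key value"
        fields[key] = value
    if fields.get("version") != "https://git-lfs.github.com/spec/v1":
        raise ValueError("not a Git LFS v1 pointer")
    algo, _, digest = fields["oid"].partition(":")  # e.g. "sha256:<hex>"
    return {
        "version": fields["version"],
        "oid_algo": algo,
        "oid": digest,
        "size": int(fields["size"]),
    }


info = parse_lfs_pointer(POINTER)
print(info["oid_algo"], info["size"] / 2**30)  # size converted to GiB
```

The same parser applies to every other three-line pointer in this commit, including the other checkpoints, `dataset.zip` and the `.bin` weight files.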

091122/ckpts/Bendstract-v1.ckpt

```diff
@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:fc4a64bb612ee0acfdc1bfe5745c1070921245a7cbf7cf5922a389b9a1db5d28
+size 2132866262
```

091122/ckpts/PoWStyle_Abstralities.ckpt

```diff
@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:b8f23dc37410061035ae24abc70738c62836c2f1e2e106eb96936f702099a57a
+size 2132856622
```

091122/ckpts/PoWStyle_midrun.ckpt

```diff
@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:eca0422f4dd852c162e20658e8f3eed6cbe8f544f247e257276e0569f4260b56
+size 2132856622
```

091122/dataset.zip

```diff
@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:61eef54f22b57908b102a174e5653a45de09837871978df293443cf2683135f3
+size 5038865
```
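The pointer's `oid` is the SHA-256 of the real file's content, and `size` is its length in bytes. Together they let you check a downloaded copy, for example the roughly 5 MB dataset archive. Here is a minimal sketch using only the standard library. `verify_against_pointer` and the local file name are hypothetical. The size is compared first, so a truncated multi-GB checkpoint is rejected without being hashed.

```python
import hashlib
import os


def verify_against_pointer(path: str, expected_oid: str, expected_size: int,
                           chunk_size: int = 1 << 20) -> bool:
    """Return True if the file's byte size and SHA-256 match the LFS pointer."""
    if os.path.getsize(path) != expected_size:
        return False  # cheap check first; avoids hashing a wrong or partial download
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):  # stream in 1 MiB chunks
            digest.update(chunk)
    return digest.hexdigest() == expected_oid


# Values from the dataset.zip pointer above; the local path below is hypothetical.
DATASET_OID = "61eef54f22b57908b102a174e5653a45de09837871978df293443cf2683135f3"
DATASET_SIZE = 5038865
# verify_against_pointer("dataset.zip", DATASET_OID, DATASET_SIZE)
```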

091122/diffusers/feature_extractor/preprocessor_config.json

```diff
@@ -0,0 +1,20 @@
+{
+  "crop_size": 224,
+  "do_center_crop": true,
+  "do_convert_rgb": true,
+  "do_normalize": true,
+  "do_resize": true,
+  "feature_extractor_type": "CLIPFeatureExtractor",
+  "image_mean": [
+    0.48145466,
+    0.4578275,
+    0.40821073
+  ],
+  "image_std": [
+    0.26862954,
+    0.26130258,
+    0.27577711
+  ],
+  "resample": 3,
+  "size": 224
+}
```
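This config describes CLIP-style image preprocessing: resize, center-crop to 224x224, rescale pixels to [0, 1], then normalize each channel with `image_mean` and `image_std`. The value `resample: 3` is PIL's bicubic filter. The pure-Python sketch below shows the geometry and arithmetic on plain numbers instead of real images. It assumes that `size` means "shortest edge after resizing, aspect ratio kept", and that the crop box is centered with floor division. It approximates the feature extractor rather than reproducing its code, and all function names are hypothetical.

```python
# Values copied from preprocessor_config.json above.
SIZE = 224  # "size": assumed to be the shortest edge after resizing
CROP = 224  # "crop_size"
MEAN = (0.48145466, 0.4578275, 0.40821073)
STD = (0.26862954, 0.26130258, 0.27577711)


def resize_shortest_edge(width: int, height: int, size: int = SIZE) -> tuple[int, int]:
    """Scale so the shorter side equals `size`, keeping the aspect ratio."""
    if width <= height:
        return size, int(size * height / width)
    return int(size * width / height), size


def center_crop_box(width: int, height: int, crop: int = CROP) -> tuple[int, int, int, int]:
    """(left, top, right, bottom) of a centered crop, in PIL's box order."""
    left = (width - crop) // 2
    top = (height - crop) // 2
    return left, top, left + crop, top + crop


def normalize_pixel(rgb: tuple[int, int, int]) -> tuple[float, ...]:
    """Rescale 0-255 to [0, 1], then apply per-channel (x - mean) / std."""
    return tuple((c / 255.0 - m) / s for c, m, s in zip(rgb, MEAN, STD))


w, h = resize_shortest_edge(512, 768)  # e.g. a 512x768 portrait image
print((w, h), center_crop_box(w, h), normalize_pixel((255, 255, 255)))
```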

091122/diffusers/model_index.json

```diff
@@ -0,0 +1,28 @@
+{
+  "_class_name": "StableDiffusionPipeline",
+  "_diffusers_version": "0.8.0.dev0",
+  "feature_extractor": [
+    "transformers",
+    "CLIPFeatureExtractor"
+  ],
+  "scheduler": [
+    "diffusers",
+    "DDIMScheduler"
+  ],
+  "text_encoder": [
+    "transformers",
+    "CLIPTextModel"
+  ],
+  "tokenizer": [
+    "transformers",
+    "CLIPTokenizer"
+  ],
+  "unet": [
+    "diffusers",
+    "UNet2DConditionModel"
+  ],
+  "vae": [
+    "diffusers",
+    "AutoencoderKL"
+  ]
+}
```
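`model_index.json` names the pipeline class and, for each component, the library and class to load it with. In the Diffusers folder layout, each component's files sit in a subfolder with the same name as its key, which matches the `091122/diffusers/...` folders in the file list. Keys starting with `_` are metadata. The minimal sketch below reads the file and lists the components. `list_components` is a hypothetical helper, not part of Diffusers.

```python
import json

# model_index.json from the hunk above (same keys and values, compacted).
MODEL_INDEX = """{
  "_class_name": "StableDiffusionPipeline",
  "_diffusers_version": "0.8.0.dev0",
  "feature_extractor": ["transformers", "CLIPFeatureExtractor"],
  "scheduler": ["diffusers", "DDIMScheduler"],
  "text_encoder": ["transformers", "CLIPTextModel"],
  "tokenizer": ["transformers", "CLIPTokenizer"],
  "unet": ["diffusers", "UNet2DConditionModel"],
  "vae": ["diffusers", "AutoencoderKL"]
}"""


def list_components(index: dict) -> dict[str, tuple[str, str]]:
    """Map each component (= subfolder name) to its (library, class) pair."""
    return {
        name: (spec[0], spec[1])
        for name, spec in index.items()
        if not name.startswith("_")  # skip pipeline metadata keys
    }


index = json.loads(MODEL_INDEX)
for folder, (library, cls) in sorted(list_components(index).items()):
    print(f"091122/diffusers/{folder}/ -> {library}.{cls}")
```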

091122/diffusers/scheduler/scheduler_config.json

```diff
@@ -0,0 +1,12 @@
+{
+  "_class_name": "DDIMScheduler",
+  "_diffusers_version": "0.8.0.dev0",
+  "beta_end": 0.012,
+  "beta_schedule": "scaled_linear",
+  "beta_start": 0.00085,
+  "clip_sample": false,
+  "num_train_timesteps": 1000,
+  "set_alpha_to_one": false,
+  "steps_offset": 1,
+  "trained_betas": null
+}
```
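The scheduler config fixes the noise schedule. Under `scaled_linear`, the square roots of the betas are spaced linearly from `beta_start` to `beta_end` over `num_train_timesteps`, and each value is then squared. At sampling time, DDIM visits an evenly strided subset of those 1000 steps, shifted by `steps_offset`. The pure-Python sketch below is modeled on how Diffusers' `DDIMScheduler` computes these values; it is an approximation, not the library code. Two further settings are worth noting, as I understand them. `clip_sample: false` means the predicted clean sample is not clipped to [-1, 1]. `set_alpha_to_one: false` means the last step uses `alphas_cumprod[0]` rather than 1.0 as its "previous" value.

```python
# Values from scheduler_config.json above.
BETA_START = 0.00085
BETA_END = 0.012
NUM_TRAIN_TIMESTEPS = 1000
STEPS_OFFSET = 1


def scaled_linear_betas(start=BETA_START, end=BETA_END, n=NUM_TRAIN_TIMESTEPS):
    """'scaled_linear': space sqrt(beta) linearly, then square each value."""
    a, b = start ** 0.5, end ** 0.5
    return [(a + (b - a) * i / (n - 1)) ** 2 for i in range(n)]


def alphas_cumprod(betas):
    """Running product of (1 - beta_t): fraction of signal kept up to step t."""
    out, acc = [], 1.0
    for beta in betas:
        acc *= 1.0 - beta
        out.append(acc)
    return out


def ddim_timesteps(num_inference_steps, n=NUM_TRAIN_TIMESTEPS, offset=STEPS_OFFSET):
    """Evenly strided timesteps from high to low, shifted by steps_offset."""
    step_ratio = n // num_inference_steps
    return [i * step_ratio + offset for i in reversed(range(num_inference_steps))]


betas = scaled_linear_betas()
acp = alphas_cumprod(betas)
ts = ddim_timesteps(50)
print(ts[:3], ts[-1], round(acp[ts[0]], 4))
```

With 50 inference steps, the stride is 20, so sampling runs 981, 961, ..., 1. The offset of 1 means the last step is 1 instead of 0.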

091122/diffusers/text_encoder/config.json

```diff
@@ -0,0 +1,25 @@
+{
+  "_name_or_path": "openai/clip-vit-large-patch14",
+  "architectures": [
+    "CLIPTextModel"
+  ],
+  "attention_dropout": 0.0,
+  "bos_token_id": 0,
+  "dropout": 0.0,
+  "eos_token_id": 2,
+  "hidden_act": "quick_gelu",
+  "hidden_size": 768,
+  "initializer_factor": 1.0,
+  "initializer_range": 0.02,
+  "intermediate_size": 3072,
+  "layer_norm_eps": 1e-05,
+  "max_position_embeddings": 77,
+  "model_type": "clip_text_model",
+  "num_attention_heads": 12,
+  "num_hidden_layers": 12,
+  "pad_token_id": 1,
+  "projection_dim": 768,
+  "torch_dtype": "float32",
+  "transformers_version": "4.19.2",
+  "vocab_size": 49408
+}
```

091122/diffusers/text_encoder/pytorch_model.bin

```diff
@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:770a47a9ffdcfda0b05506a7888ed714d06131d60267e6cf52765d61cf59fd67
+size 492305335
```

091122/diffusers/tokenizer/merges.txt

The diff for this file is too large to render.

091122/diffusers/tokenizer/special_tokens_map.json

```diff
@@ -0,0 +1 @@
+{"bos_token": {"content": "<|startoftext|>", "single_word": false, "lstrip": false, "rstrip": false, "normalized": true}, "eos_token": {"content": "<|endoftext|>", "single_word": false, "lstrip": false, "rstrip": false, "normalized": true}, "unk_token": {"content": "<|endoftext|>", "single_word": false, "lstrip": false, "rstrip": false, "normalized": true}, "pad_token": "<|endoftext|>"}
```

091122/diffusers/tokenizer/tokenizer_config.json

```diff
@@ -0,0 +1 @@
+{"errors": "replace", "unk_token": {"content": "<|endoftext|>", "single_word": false, "lstrip": false, "rstrip": false, "normalized": true, "__type": "AddedToken"}, "bos_token": {"content": "<|startoftext|>", "single_word": false, "lstrip": false, "rstrip": false, "normalized": true, "__type": "AddedToken"}, "eos_token": {"content": "<|endoftext|>", "single_word": false, "lstrip": false, "rstrip": false, "normalized": true, "__type": "AddedToken"}, "pad_token": "<|endoftext|>", "add_prefix_space": false, "do_lower_case": true, "name_or_path": "openai/clip-vit-large-patch14", "model_max_length": 77, "special_tokens_map_file": "./special_tokens_map.json", "tokenizer_class": "CLIPTokenizer"}
```
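The tokenizer files define the special tokens (`<|startoftext|>` as BOS, and `<|endoftext|>` as EOS, UNK and pad) and a `model_max_length` of 77. That length matches `max_position_embeddings: 77` in the text encoder config. Stable Diffusion pipelines typically tokenize prompts with max-length padding and truncation, so the text encoder always sees exactly 77 positions. The sketch below shows that framing on token strings. It is a simplification: real CLIP BPE splitting comes from `vocab.json` and `merges.txt`, and the example pieces and the `frame_tokens` name are hypothetical. Since `do_lower_case` is true, the capitalization of an activation token such as `PoW Style` should not matter.

```python
BOS = "<|startoftext|>"
EOS = "<|endoftext|>"
PAD = "<|endoftext|>"  # same string as EOS, per special_tokens_map.json
MAX_LEN = 77  # "model_max_length" in tokenizer_config.json


def frame_tokens(tokens: list[str], max_len: int = MAX_LEN) -> list[str]:
    """Wrap tokens in BOS/EOS, truncate to fit, then right-pad to max_len."""
    body = tokens[: max_len - 2]  # leave room for BOS and EOS
    framed = [BOS, *body, EOS]
    return framed + [PAD] * (max_len - len(framed))


# Hypothetical BPE pieces for illustration only.
seq = frame_tokens(["pow</w>", "style</w>", "bedroom</w>"])
print(len(seq), seq[:5])
```

Because EOS and pad are the same string, a padded sequence ends in a run of identical `<|endoftext|>` tokens. Prompts longer than 75 content tokens are cut off.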

091122/diffusers/tokenizer/vocab.json

The diff for this file is too large to render.

091122/diffusers/unet/config.json

```diff
@@ -0,0 +1,36 @@
+{
+  "_class_name": "UNet2DConditionModel",
+  "_diffusers_version": "0.8.0.dev0",
+  "act_fn": "silu",
+  "attention_head_dim": 8,
+  "block_out_channels": [
+    320,
+    640,
+    1280,
+    1280
+  ],
+  "center_input_sample": false,
+  "cross_attention_dim": 768,
+  "down_block_types": [
+    "CrossAttnDownBlock2D",
+    "CrossAttnDownBlock2D",
+    "CrossAttnDownBlock2D",
+    "DownBlock2D"
+  ],
+  "downsample_padding": 1,
+  "flip_sin_to_cos": true,
+  "freq_shift": 0,
+  "in_channels": 4,
+  "layers_per_block": 2,
+  "mid_block_scale_factor": 1,
+  "norm_eps": 1e-05,
+  "norm_num_groups": 32,
+  "out_channels": 4,
+  "sample_size": 32,
+  "up_block_types": [
+    "UpBlock2D",
+    "CrossAttnUpBlock2D",
+    "CrossAttnUpBlock2D",
+    "CrossAttnUpBlock2D"
+  ]
+}
```
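`block_out_channels` and `down_block_types` together describe the UNet's encoder path: three cross-attention blocks followed by a plain `DownBlock2D`. The sketch below lists the spatial size and channel count each down block works at. It assumes, as in the standard Stable Diffusion v1 layout, that every block except the last ends with a 2x downsample. The config records `sample_size: 32`, so the sketch takes the latent size as a parameter. This lets you compare that value with a 64x64 latent, which corresponds to a 512 px image through an 8x VAE. `down_path` is a hypothetical helper.

```python
# Values from unet/config.json above.
BLOCK_OUT_CHANNELS = [320, 640, 1280, 1280]
DOWN_BLOCK_TYPES = ["CrossAttnDownBlock2D", "CrossAttnDownBlock2D",
                    "CrossAttnDownBlock2D", "DownBlock2D"]


def down_path(latent_size: int) -> list[tuple[str, int, int]]:
    """(block type, spatial size it runs at, output channels) per down block.

    Assumes every block except the last ends with a 2x downsample.
    """
    path, size = [], latent_size
    for i, (block, channels) in enumerate(zip(DOWN_BLOCK_TYPES, BLOCK_OUT_CHANNELS)):
        path.append((block, size, channels))
        if i < len(BLOCK_OUT_CHANNELS) - 1:
            size //= 2  # downsample before the next block
    return path


for row in down_path(64):  # 64x64 latent, i.e. a 512x512 image with an 8x VAE
    print(row)
```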

091122/diffusers/unet/diffusion_pytorch_model.bin

```diff
@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:c7da0e21ba7ea50637bee26e81c220844defdf01aafca02b2c42ecdadb813de4
+size 3438354725
```

091122/diffusers/vae/config.json

```diff
@@ -0,0 +1,29 @@
+{
+  "_class_name": "AutoencoderKL",
+  "_diffusers_version": "0.8.0.dev0",
+  "act_fn": "silu",
+  "block_out_channels": [
+    128,
+    256,
+    512,
+    512
+  ],
+  "down_block_types": [
+    "DownEncoderBlock2D",
+    "DownEncoderBlock2D",
+    "DownEncoderBlock2D",
+    "DownEncoderBlock2D"
+  ],
+  "in_channels": 3,
+  "latent_channels": 4,
+  "layers_per_block": 2,
+  "norm_num_groups": 32,
+  "out_channels": 3,
+  "sample_size": 256,
+  "up_block_types": [
+    "UpDecoderBlock2D",
+    "UpDecoderBlock2D",
+    "UpDecoderBlock2D",
+    "UpDecoderBlock2D"
+  ]
+}
```
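The VAE, UNet and text encoder configs have to agree on shapes:
- the VAE's `latent_channels` (4) must equal the UNet's `in_channels` and `out_channels`;
- the UNet's `cross_attention_dim` (768) must equal the text encoder's `hidden_size`;
- the VAE's four encoder blocks imply a spatial reduction of 2^3 = 8, assuming one 2x downsample between consecutive blocks.

The sketch below checks these contracts and computes the latent shape for an image size. Rejecting sizes that are not multiples of the factor is a design choice of this sketch. All names are hypothetical.

```python
# Values from vae/config.json, unet/config.json and text_encoder/config.json above.
VAE = {"block_out_channels": [128, 256, 512, 512], "latent_channels": 4, "in_channels": 3}
UNET = {"in_channels": 4, "out_channels": 4, "cross_attention_dim": 768}
TEXT = {"hidden_size": 768, "max_position_embeddings": 77}


def vae_scale_factor(vae: dict) -> int:
    """One 2x downsample between consecutive encoder blocks: 2 ** (n_blocks - 1)."""
    return 2 ** (len(vae["block_out_channels"]) - 1)


def latent_shape(height: int, width: int, vae: dict = VAE) -> tuple[int, int, int]:
    """(channels, h, w) of the latent the UNet denoises for an RGB image."""
    f = vae_scale_factor(vae)
    if height % f or width % f:
        raise ValueError(f"height and width must be multiples of {f}")
    return vae["latent_channels"], height // f, width // f


def check_compatible(vae: dict = VAE, unet: dict = UNET, text: dict = TEXT) -> None:
    """Assert the shape contracts between the three model configs."""
    assert unet["in_channels"] == unet["out_channels"] == vae["latent_channels"]
    assert unet["cross_attention_dim"] == text["hidden_size"]


check_compatible()
print(latent_shape(512, 512), latent_shape(512, 768))
```

The UNet therefore cross-attends over a 77 x 768 text embedding at every resolution level that uses a `CrossAttn*` block.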

091122/diffusers/vae/diffusion_pytorch_model.bin

```diff
@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:1b134cded8eb78b184aefb8805b6b572f36fa77b255c483665dda931fa0130c5
+size 334707217
```


@@ -1,3 +1,88 @@

|

|

| 1 |

---

|

|

|

|

|

|

|

| 2 |

license: creativeml-openrail-m

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 3 |

---

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

---

|

| 2 |

+

language:

|

| 3 |

+

- en

|

| 4 |

license: creativeml-openrail-m

|

| 5 |

+

thumbnail: "https://huggingface.co/Guizmus/SD_PoW_Collection/resolve/main/showcase.jpg"

|

| 6 |

+

tags:

|

| 7 |

+

- stable-diffusion

|

| 8 |

+

- text-to-image

|

| 9 |

+

- image-to-image

|

| 10 |

+

library_name: "https://github.com/ShivamShrirao/diffusers"

|

| 11 |

+

|

| 12 |

---

|

| 13 |

+

|

| 14 |

+

# Intro

|

| 15 |

+

|

| 16 |

+

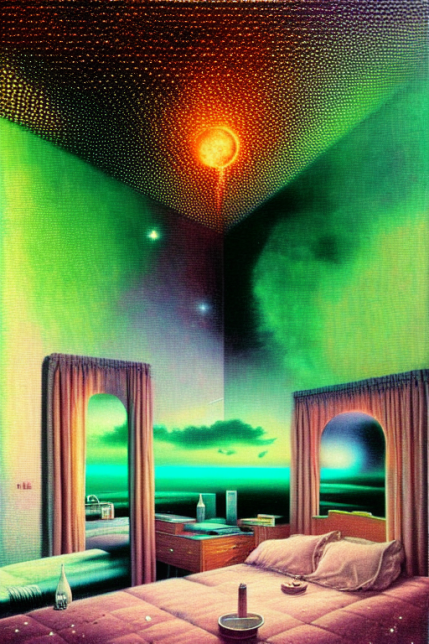

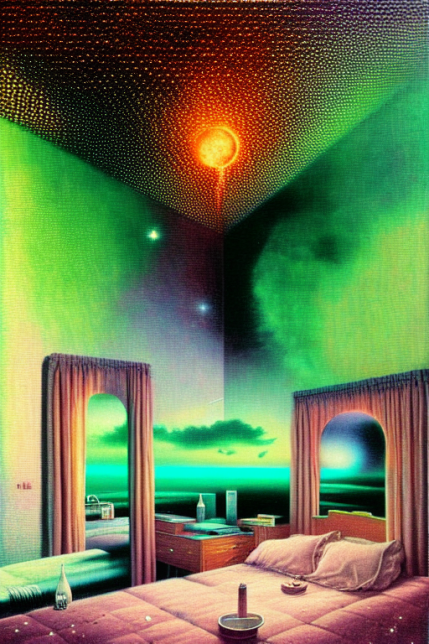

This is a collection of models related to the "Picture of the Week" contest on Stable Diffusion discord.

|

| 17 |

+

|

| 18 |

+

I try to make a model out of all the submission for people to continue enjoy the theme after the even, and see a little of their designs in other people's creations. The token stays "PoW Style" and I balance the learning on the low side, so that it doesn't just replicate creations.

|

| 19 |

+

|

| 20 |

+

I also make smaller quality models to help make pictures for the contest itself, based on the theme.

|

| 21 |

+

|

| 22 |

+

## 09 novembre 2022, "Abstralities"

|

| 23 |

+

|

| 24 |

+

### Theme : Abstract Realities

|

| 25 |

+

|

| 26 |

+

Glitch, warp, static, shape, flicker, break, bend, mend

|

| 27 |

+

|

| 28 |

+

Have you ever felt your reality shift out from under your feet? Our perception falters and repairs itself in the blink of an eye. Just how much do our brains influence what we perceive? How much control do we have over molding these realities?

|

| 29 |

+

|

| 30 |

+

With the introduction of AI and its rapid pace taking the world by storm, we are seeing single-handedly just how these realities can bring worlds into fruition.

|

| 31 |

+

|

| 32 |

+

* Can you show us your altered reality?

|

| 33 |

+

* Are these realities truly broken, or only bent?

|

| 34 |

+

|

| 35 |

+

Our example prompt for this event was created by @Aether !

|

| 36 |

+

|

| 37 |

+

"household objects floating in space, bedroom, furniture, home living, warped reality, cosmic horror, nightmare, retrofuturism, surrealism, abstract, illustrations by alan nasmith"

|

| 38 |

+

|

| 39 |

+

|

| 40 |

+

|

| 41 |

+

|

| 42 |

+

|

| 43 |

+

|

| 44 |

+

### Models

|

| 45 |

+

|

| 46 |

+

|

| 47 |

+

* Main model based on all the results from the PoW

|

| 48 |

+

* training: 51 pictures, 3000 steps on 1e-6 polynomial LR.

|

| 49 |

+

* balanced on the light side, add attention/weight on the activation token

|

| 50 |

+

* **Activation token :** `PoW Style`

|

| 51 |

+

* [CKPT link](https://huggingface.co/Guizmus/SD_PoW_Collection/resolve/main/091122/ckpts/PoWStyle_Abstralities.ckpt)

|

| 52 |

+

* [Diffusers : Guizmus/SD_PoW_Collection/091122/diffusers](https://huggingface.co/Guizmus/SD_PoW_Collection/091122/diffusers/)

|

| 53 |

+

* [Dataset](https://huggingface.co/Guizmus/SD_PoW_Collection/091122/dataset.zip)

|

| 54 |

+

|

| 55 |

+

---

|

| 56 |

+

|

| 57 |

+

|

| 58 |

+

* based on the suggested prompt

|

| 59 |

+

* training: 100 pictures, 7500 steps on 1e-6 polynomial LR. overtrained

|

| 60 |

+

* **Activation token :** `Bendstract Style`

|

| 61 |

+

* [CKPT link](https://huggingface.co/Guizmus/SD_PoW_Collection/resolve/main/091122/ckpts/Bendstract-v1.ckpt)

|

| 62 |

+

|

| 63 |

+

---

|

| 64 |

+

|

| 65 |

+

|

| 66 |

+

* based on the suggested prompt

|

| 67 |

+

* training: 68 pictures, 6000 steps on 1e-6 polynomial LR. overtrained

|

| 68 |

+

* **Activation token :** `BendingReality Style`

|

| 69 |

+

* [CKPT link](https://huggingface.co/Guizmus/SD_PoW_Collection/resolve/main/091122/ckpts/BendingReality_Style-v1.ckpt)

|

| 70 |

+

|

| 71 |

+

---

|

| 72 |

+

|

| 73 |

+

|

| 74 |

+

* based on the first few submissions

|

| 75 |

+

* training: 24 pictures, 2400 steps on 1e-6 polynomial LR. a little too trained

|

| 76 |

+

* **Activation token :** `PoW Style`

|

| 77 |

+

* [CKPT link](https://huggingface.co/Guizmus/SD_PoW_Collection/resolve/main/091122/ckpts/PoWStyle_midrun.ckpt)

|

| 78 |

+

|

| 79 |

+

|

| 80 |

+

## License

|

| 81 |

+

|

| 82 |

+

These models are open access and available to all, with a CreativeML OpenRAIL-M license further specifying rights and usage.

|

| 83 |

+

The CreativeML OpenRAIL License specifies:

|

| 84 |

+

|

| 85 |

+

1. You can't use the model to deliberately produce nor share illegal or harmful outputs or content

|

| 86 |

+

2. The authors claims no rights on the outputs you generate, you are free to use them and are accountable for their use which must not go against the provisions set in the license

|

| 87 |

+

3. You may re-distribute the weights and use the model commercially and/or as a service. If you do, please be aware you have to include the same use restrictions as the ones in the license and share a copy of the CreativeML OpenRAIL-M to all your users (please read the license entirely and carefully)

|

| 88 |

+

[Please read the full license here](https://huggingface.co/spaces/CompVis/stable-diffusion-license)

|

|
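The README gives each run as "N pictures, S steps on 1e-6 polynomial LR". It does not state the final LR, the decay power, warmup, or batch size. The sketch below makes two quick calculations under loudly stated assumptions. First, it evaluates a polynomial decay schedule using the defaults of Hugging Face's `get_polynomial_decay_schedule_with_warmup` (`lr_end=1e-7`, `power=1.0`) with no warmup; these values are assumptions, not the author's confirmed settings. Second, it computes steps per training picture for each listed run. That ratio equals epochs only if the batch size was 1, which is unknown. `polynomial_lr` and `RUNS` are hypothetical names.

```python
def polynomial_lr(step: int, total_steps: int, lr_init: float = 1e-6,
                  lr_end: float = 1e-7, power: float = 1.0, warmup: int = 0) -> float:
    """Polynomial decay from lr_init to lr_end (lr_end, power, warmup are assumed)."""
    if warmup and step < warmup:
        return lr_init * step / warmup  # linear warmup, if any
    if step >= total_steps:
        return lr_end
    remaining = 1 - (step - warmup) / (total_steps - warmup)
    return (lr_init - lr_end) * remaining ** power + lr_end


# (pictures, steps) as listed in the README's Models section.
RUNS = {
    "PoWStyle_Abstralities": (51, 3000),
    "Bendstract-v1": (100, 7500),
    "BendingReality_Style-v1": (68, 6000),
    "PoWStyle_midrun": (24, 2400),
}

for name, (pictures, steps) in RUNS.items():
    print(f"{name}: {steps / pictures:.1f} steps per picture")
print(f"LR halfway through a 3000-step run: {polynomial_lr(1500, 3000):.2e}")
```

By this arithmetic, the main model sits at about 59 steps per picture. The three runs that the README describes as overtrained or "a little overtrained" sit between 75 and 100.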