Commit dfb2242 · Parent(s): 7f8d91f

Update README.md

README.md CHANGED

@@ -1,26 +1,95 @@

---
tags:

### exl2 quant (measurement.json in main branch)
---
### check revisions for quants
---

should probably proofread and complete it, then remove this comment. -->

[<img src="https://raw.githubusercontent.com/axolotl-ai-cloud/axolotl/main/image/axolotl-badge-web.png" alt="Built with Axolotl" width="200" height="32"/>](https://github.com/axolotl-ai-cloud/axolotl)
<details><summary>See axolotl config</summary>

```yaml
base_model: arcee-ai/Llama-3.1-SuperNova-Lite
model_type: AutoModelForCausalLM
@@ -62,8 +131,6 @@ datasets:

    type: chat_template
  - path: anthracite-org/kalo_misc_part2
    type: chat_template
  - path: anthracite-org/kalo_misc_part2
    type: chat_template
  - path: Nitral-AI/Creative_Writing-ShareGPT
    type: chat_template
  - path: NewEden/Gryphe-Sonnet3.5-Charcard-Roleplay-unfiltered
@@ -134,54 +201,13 @@ special_tokens:

  eos_token: <|eot_id|>
```

</details><br>
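
The `@@ -62,8 +131,6 @@` hunk removes a repeated `anthracite-org/kalo_misc_part2` entry from the `datasets:` list. A minimal sketch of flagging such repeats before launching a run, assuming the config has already been parsed into a plain Python dict (for example with a YAML loader); `find_duplicate_datasets` is a hypothetical helper, not an Axolotl API.

```python
from collections import Counter
from typing import Dict, List


def find_duplicate_datasets(config: Dict) -> List[str]:
    """Return dataset paths that appear more than once in config["datasets"].

    Hypothetical helper: assumes each entry is a dict with a "path" key,
    mirroring the Axolotl `datasets:` list shown above.
    """
    counts = Counter(entry["path"] for entry in config.get("datasets", []))
    return [path for path, n in counts.items() if n > 1]


# Excerpt of the old dataset list from this diff (other entries omitted).
old_config = {
    "datasets": [
        {"path": "anthracite-org/kalo_misc_part2", "type": "chat_template"},
        {"path": "anthracite-org/kalo_misc_part2", "type": "chat_template"},
        {"path": "Nitral-AI/Creative_Writing-ShareGPT", "type": "chat_template"},
        # The type of this entry is outside the visible hunk, so it is left out.
        {"path": "NewEden/Gryphe-Sonnet3.5-Charcard-Roleplay-unfiltered"},
    ]
}

print(find_duplicate_datasets(old_config))  # ['anthracite-org/kalo_misc_part2']
```

`collections.Counter` keeps first-seen order, so duplicates are reported in the order they appear in the config.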

This model is a fine-tuned version of [arcee-ai/Llama-3.1-SuperNova-Lite](https://huggingface.co/arcee-ai/Llama-3.1-SuperNova-Lite) on an unspecified dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:
- learning_rate: 1e-05
- train_batch_size: 1
- eval_batch_size: 1
- seed: 42
- distributed_type: multi-GPU
- num_devices: 2
- gradient_accumulation_steps: 32
- total_train_batch_size: 64
- total_eval_batch_size: 2
- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08
- lr_scheduler_type: cosine
- lr_scheduler_warmup_steps: 5
- num_epochs: 2
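
The batch sizes above are linked: the effective training batch is the per-device batch times the number of devices times the gradient accumulation steps (1 × 2 × 32 = 64), while the eval batch is 1 × 2 = 2 because no accumulation happens at eval time. The sketch below checks that arithmetic and evaluates a linear-warmup cosine schedule for `learning_rate: 1e-05` and `lr_scheduler_warmup_steps: 5`. It assumes the formula of Hugging Face `transformers.get_cosine_schedule_with_warmup` with its default `num_cycles=0.5` (linear ramp, then half-cosine decay to zero); `total_steps` is a hypothetical value, since the card does not record the number of optimizer steps.

```python
import math

# Values copied from the hyperparameter list above.
LEARNING_RATE = 1e-05
TRAIN_BATCH_SIZE = 1            # per device
EVAL_BATCH_SIZE = 1             # per device
NUM_DEVICES = 2
GRADIENT_ACCUMULATION_STEPS = 32
WARMUP_STEPS = 5


def total_train_batch_size() -> int:
    """Sequences contributing to one optimizer step."""
    return TRAIN_BATCH_SIZE * NUM_DEVICES * GRADIENT_ACCUMULATION_STEPS


def total_eval_batch_size() -> int:
    """Eval sequences processed per step (no gradient accumulation at eval)."""
    return EVAL_BATCH_SIZE * NUM_DEVICES


def cosine_lr(step: int, total_steps: int,
              base_lr: float = LEARNING_RATE,
              warmup_steps: int = WARMUP_STEPS) -> float:
    """Linear warmup followed by half-cosine decay to zero.

    Assumed to mirror transformers.get_cosine_schedule_with_warmup
    (num_cycles=0.5); total_steps is a hypothetical input.
    """
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return base_lr * max(0.0, 0.5 * (1.0 + math.cos(math.pi * progress)))


print(total_train_batch_size(), total_eval_batch_size())  # 64 2

total_steps = 100  # hypothetical; the real step count is not on the card
for step in (0, 5, 52, 100):
    print(step, f"{cosine_lr(step, total_steps):.2e}")
```

The learning rate peaks at `1e-05` exactly when warmup ends (step 5) and reaches zero at the final step.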

### Training results

### Framework versions

- Pytorch 2.4.0+cu121
- Datasets 2.19.1
- Tokenizers 0.19.1
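
To compare a local environment against the framework versions listed above, a minimal sketch using the standard library's `importlib.metadata` could look like this. It assumes the PyPI distribution names `torch`, `datasets` and `tokenizers`; the `+cu121` suffix depends on which PyTorch wheel is installed, so only the release part is compared. `compare_versions` is a hypothetical helper.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Dict, Optional, Tuple

# Versions listed on the card; keys are assumed PyPI distribution names.
EXPECTED = {
    "torch": "2.4.0+cu121",
    "datasets": "2.19.1",
    "tokenizers": "0.19.1",
}


def release(v: str) -> str:
    """Drop a local version suffix such as '+cu121'."""
    return v.split("+", 1)[0]


def compare_versions(expected: Dict[str, str]) -> Dict[str, Tuple[str, Optional[str], bool]]:
    """Map each package to (expected, installed or None, release matches)."""
    report = {}
    for name, wanted in expected.items():
        try:
            installed: Optional[str] = version(name)
        except PackageNotFoundError:
            installed = None
        matches = installed is not None and release(installed) == release(wanted)
        report[name] = (wanted, installed, matches)
    return report


for name, (wanted, installed, ok) in compare_versions(EXPECTED).items():
    print(f"{name:<10} expected {wanted:<12} installed {installed or 'missing':<12} {'OK' if ok else 'differs'}")
```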

---
License: agpl-3.0
Language:
- En
Pipeline_tag: text-generation
Base_model: arcee-ai/Llama-3.1-SuperNova-Lite
Tags:
- Chat
license: agpl-3.0
datasets:
- Gryphe/Sonnet3.5-SlimOrcaDedupCleaned
- Nitral-AI/Cybersecurity-ShareGPT
- Nitral-AI/Medical_Instruct-ShareGPT
- Nitral-AI/Olympiad_Math-ShareGPT
- anthracite-org/kalo_opus_misc_240827
- NewEden/Claude-Instruct-5k
- lodrick-the-lafted/kalo-opus-instruct-3k-filtered
- anthracite-org/kalo-opus-instruct-22k-no-refusal
- Epiculous/Synthstruct-Gens-v1.1-Filtered-n-Cleaned
- Epiculous/SynthRP-Gens-v1.1-Filtered-n-Cleaned
- anthracite-org/kalo_misc_part2
- Nitral-AI/Creative_Writing-ShareGPT
- NewEden/Gryphe-Sonnet3.5-Charcard-Roleplay-unfiltered
tags:
- chat
language:
- en
base_model:
- arcee-ai/Llama-3.1-SuperNova-Lite
---
|

| 31 |

|

| 32 |

+

|

| 33 |

+

|

| 34 |

+

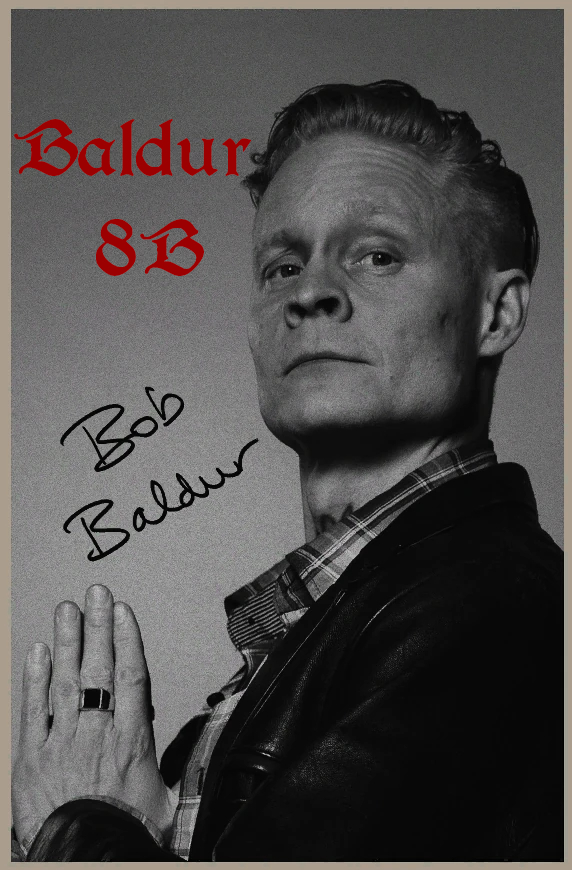

# These are EXL2 quantizations for Baldur 8B, for the weights, go [here](), Check revisions for quants, Main repo contains measurement.

|

| 35 |

+

|

| 36 |

+

An finetune of the L3.1 instruct distill done by Arcee, The intent of this model is to have differing prose then my other releases, in my testing it has achieved this and avoiding using common -isms frequently and has a differing flavor then my other models.

|

| 37 |

+

|

| 38 |

+

|

| 39 |

+

# Quants

|

| 40 |

+

|

| 41 |

+

GGUF: https://huggingface.co/Delta-Vector/Baldur-8B-GGUF

|

| 42 |

+

|

| 43 |

+

EXL2: https://huggingface.co/Delta-Vector/Baldur-8B-EXL2
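
The quants live on separate revisions (branches) of the EXL2 repository, while the main branch holds the measurement file. A minimal sketch of listing those revisions and downloading one with `huggingface_hub`, assuming that package is installed; `list_quant_branches`, `download_quant` and the default `local_dir` are hypothetical names, and no branch names are assumed.

```python
from typing import List

REPO_ID = "Delta-Vector/Baldur-8B-EXL2"


def list_quant_branches(repo_id: str = REPO_ID) -> List[str]:
    """Return the repository's branch names (requires network access)."""
    from huggingface_hub import list_repo_refs  # imported lazily

    return [branch.name for branch in list_repo_refs(repo_id).branches]


def download_quant(revision: str, repo_id: str = REPO_ID,
                   local_dir: str = "Baldur-8B-EXL2") -> str:
    """Download one revision of the repository and return the local path."""
    from huggingface_hub import snapshot_download  # imported lazily

    return snapshot_download(repo_id=repo_id, revision=revision, local_dir=local_dir)
```

Call `list_quant_branches()` first to see which revisions exist, then pass one of those names to `download_quant`; both calls need network access.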

## Prompting

The model has been instruct-tuned with the Llama 3 Instruct format. A typical input looks like this:

```py
"""<|begin_of_text|><|start_header_id|>system<|end_header_id|>
You are an AI built to rid the world of bonds and journeys!<|eot_id|><|start_header_id|>user<|end_header_id|>
Bro i just wanna know what is 2+2?<|eot_id|><|start_header_id|>assistant<|end_header_id|>
"""
```
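
The prompt above can be assembled from a list of role/content messages. A minimal sketch in plain Python, assuming the layout shown in the example; Meta's published Llama 3 format puts a blank line after each `<|end_header_id|>`, while the example uses a single newline, so the separator is a parameter. When a Hugging Face tokenizer is available, `tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)` renders the template bundled with the model, which may differ in details from this sketch. `build_llama3_prompt` is a hypothetical helper.

```python
from typing import Dict, List


def build_llama3_prompt(messages: List[Dict[str, str]], header_sep: str = "\n\n") -> str:
    """Render messages in the Llama 3 instruct layout and open an assistant turn.

    header_sep defaults to a blank line (Meta's reference layout);
    pass a single newline to reproduce the README example exactly.
    """
    parts = ["<|begin_of_text|>"]
    for msg in messages:
        parts.append(f"<|start_header_id|>{msg['role']}<|end_header_id|>{header_sep}")
        parts.append(f"{msg['content']}<|eot_id|>")
    # Leave the assistant header open so the model writes the reply.
    parts.append(f"<|start_header_id|>assistant<|end_header_id|>{header_sep}")
    return "".join(parts)


messages = [
    {"role": "system", "content": "You are an AI built to rid the world of bonds and journeys!"},
    {"role": "user", "content": "Bro i just wanna know what is 2+2?"},
]

# Single-newline separator: same string as the README example.
print(build_llama3_prompt(messages, header_sep="\n"))
```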

## System Prompting

I would highly recommend using Sao10k's Euryale system prompt, but the "Roleplay Simple" system prompt provided within SillyTavern will work as well.

```
Currently, your role is {{char}}, described in detail below. As {{char}}, continue the narrative exchange with {{user}}.

<Guidelines>
• Maintain the character persona but allow it to evolve with the story.
• Be creative and proactive. Drive the story forward, introducing plotlines and events when relevant.
• All types of outputs are encouraged; respond accordingly to the narrative.
• Include dialogues, actions, and thoughts in each response.
• Utilize all five senses to describe scenarios within {{char}}'s dialogue.
• Use emotional symbols such as "!" and "~" in appropriate contexts.
• Incorporate onomatopoeia when suitable.
• Allow time for {{user}} to respond with their own input, respecting their agency.
• Act as secondary characters and NPCs as needed, and remove them when appropriate.
• When prompted for an Out of Character [OOC:] reply, answer neutrally and in plaintext, not as {{char}}.
</Guidelines>

<Forbidden>
• Using excessive literary embellishments and purple prose unless dictated by {{char}}'s persona.
• Writing for, speaking, thinking, acting, or replying as {{user}} in your response.
• Repetitive and monotonous outputs.
• Positivity bias in your replies.
• Being overly extreme or NSFW when the narrative context is inappropriate.
</Forbidden>

Follow the instructions in <Guidelines></Guidelines>, avoiding the items listed in <Forbidden></Forbidden>.
```
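
SillyTavern replaces `{{char}}` and `{{user}}` with the active character and persona names before sending the system prompt. A minimal sketch of that substitution, useful for trying the prompt outside SillyTavern; `fill_macros` and the names "Mira" and "Alex" are hypothetical, and SillyTavern itself supports many more macros than these two.

```python
import re


def fill_macros(template: str, char: str, user: str) -> str:
    """Replace {{char}} and {{user}}; any other {{...}} macro is left untouched."""
    values = {"char": char, "user": user}
    # A single regex pass means text inserted for one macro is never re-expanded,
    # and the lambda avoids backslash handling in replacement strings.
    return re.sub(r"\{\{(char|user)\}\}", lambda m: values[m.group(1)], template)


system_prompt = (
    "Currently, your role is {{char}}, described in detail below. "
    "As {{char}}, continue the narrative exchange with {{user}}."
)
print(fill_macros(system_prompt, char="Mira", user="Alex"))
# Currently, your role is Mira, described in detail below. As Mira, continue the narrative exchange with Alex.
```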

## Axolotl config

<details><summary>See axolotl config</summary>

Axolotl version: `0.4.1`
```yaml
base_model: arcee-ai/Llama-3.1-SuperNova-Lite
model_type: AutoModelForCausalLM
# ...
    type: chat_template
  - path: anthracite-org/kalo_misc_part2
    type: chat_template
  - path: Nitral-AI/Creative_Writing-ShareGPT
    type: chat_template
  - path: NewEden/Gryphe-Sonnet3.5-Charcard-Roleplay-unfiltered
# ...
  eos_token: <|eot_id|>

```
## Credits

Thank you to [Lucy Knada](https://huggingface.co/lucyknada), [Kalomaze](https://huggingface.co/kalomaze), [Kubernetes Bad](https://huggingface.co/kubernetes-bad) and the rest of [Anthracite](https://huggingface.co/anthracite-org) (but not Alpin).
</details><br>

## Training

Training was done for 2 epochs. I used 2 × [RTX 6000](https://www.nvidia.com/en-us/design-visualization/rtx-6000/) GPUs, graciously provided by [Kubernetes Bad](https://huggingface.co/kubernetes-bad), for full-parameter fine-tuning of the model.

[<img src="https://raw.githubusercontent.com/OpenAccess-AI-Collective/axolotl/main/image/axolotl-badge-web.png" alt="Built with Axolotl" width="200" height="32"/>](https://github.com/OpenAccess-AI-Collective/axolotl)